Why Hybrid Bonding is the Future of Packaging

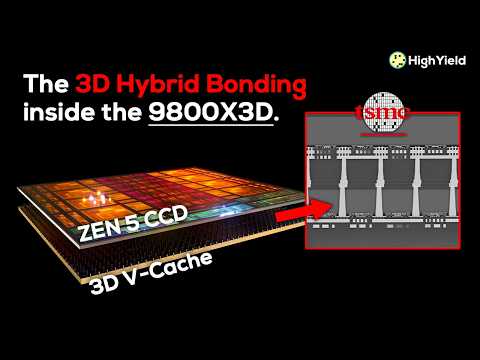

The 3D V-Cache secret has been revealed. Zen 5 X3D is placing the cache below the CPU chiplet in a move I honestly didn’t expect to see so soon. And while it does explain the changes we found in the Zen 5 chip deep-dive, it poses an entirely new question: how exactly is a Ryzen 9800X3D manufactured? Because the marketing animations, while nice to look at, never tell the whole story. I'm sure you have seen this animation before.

It shows AMD’s 1st gen 3D V-Cache. CPU chiplet on bottom and cache on top. But what if I told you that's not what the final chip actually looks like? AMD has left out one important step from the advanced packaging process that’s used to connect the 3D V-Cache to the CPU chiplet. And if we are wrong about the first generation, how close to reality is AMD's 9800X3D animation this time? That’s exactly what we will try to find out in this video.

Let's take a closer look at Zen 5 X3D, figure out what 1st and 2nd gen 3D V-Cache CPUs really look like and explore the advanced packaging technology that is making all of this possible.

AMD's first 3D V-Cache CPU, the Ryzen 5800X3D, wasn't just a lucky strike. It was made possible by a brand new packaging technology called "hybrid bonding". Hybrid bonding is what I
would call a "leap technology", because it's the foundation for a true technological leap: real 3D packaging at scale. AMD’s X3D CPUs are only the beginning. But what exactly is hybrid bonding, what makes it so special and how does it work?

The reality is that ever since the invention of the so-called flip chip in the 1960s, semiconductor packaging hasn't fundamentally changed. Even today, most chips use the same principles. This form of packaging is solder-based, and
it's very simple. If you want to connect a chip to something else, like the packaging substrate or even another chip, you just use solder. Of course you can't just slap solder onto the bottom of a chip and call it a day. That's where so-called "solder balls" or "solder
bumps" come in. You start at the smallest layers of the metal interconnects of a chip, then you incrementally build them up as larger and larger structures until they are big enough for the soldering process. Then you add the solder. These solder balls are often called "C4 bumps". No, not the plastic explosive. I'm talking about the "Controlled Collapse Chip Connection", aka C4, which is the technical term for flip chip bonding using solder balls. The name fits, because the solder balls do collapse a bit during the bonding process, but in a controlled way.

Take a look at this picture of a broken R9 Nano. If we zoom into the cracked GPU we can
actually see the solder bumps that are used to connect the GPU die to the packaging substrate with the naked eye. I'm not going to count them, but if you wanted to, you definitely could. That's how big they are. And we can see the same on Zen 5. This picture shows the broken 9600X that was used by Fritzchens Fritz to create the die shots for my Zen 5 deep-dive. The chiplet has been removed from the PCB. And once again, if we zoom in, we can see the C4 bumps that are used to package the Zen 5 CCD onto the packaging substrate.

In areas with an increased need for interconnect density, like die-to-die interconnects, solder bumps have been replaced by copper pillar bumps, also called "C2 bumps". They are, as the name implies, copper pillars with a solder bump on top. Because they are smaller and don't
fully collapse like a solder ball, you can place more of them in the same area, which increases interconnect density. Very small versions of this technology are usually called microbumps. But they still use the same core principle. In the end, even today, everything is still soldered.

Hybrid bonding changes that. It introduces a completely new form
of interconnect that does not use solder. At its core, hybrid bonding places two pieces of silicon flush against each other, which then form a connection through a special layer, the so-called bonding layer. This layer contains tiny copper pads, which will form the electrical interconnects, and a dielectric material to isolate the copper pads from each other. That's where the name "hybrid bonding" comes from, because it's a hybrid bond of copper and dielectric material.

The bonding process is easy to explain. You start with two silicon chips you want to bond to each other. Of course they can't be random chips,
they have to be designed with hybrid bonding in mind. Both chips need matching interconnect areas on their respective surfaces. In the first step, the surfaces of both chips, usually still as part of a wafer, are treated and prepared with a dielectric material and the matching copper interconnects are revealed. The copper is slightly recessed relative to the dielectric material. Now both wafers are perfectly aligned in a vacuum and pressed against each other. Because the copper in the bonding layer is recessed,
the dielectric material, for example silicon dioxide, touches first. The pressure inside the vacuum is enough to start the initial dielectric bonding, which already begins at room temperature. To fully anneal the dielectric bond, the wafers are heated to about 150 degrees Celsius, which completes the first bonding step. Next, the wafers are further heated to around 300 degrees Celsius, which is the temperature at which copper starts to anneal. The previously recessed copper interconnects on both chips start to expand and connect with each other. The result is that the copper pads on both chips are now fused with each other,
forming a single electrical interconnect. Neighboring interconnects are isolated from each other by the surrounding dielectric material. The bond itself is strong enough to keep both chips bonded to each other without any additional support.

This process flow is why hybrid bonding is
so special. It connects two chips in the most straightforward way, by directly forming copper-to-copper bonds, without the use of solder. You are basically fusing parts of the metal layers of both chips. Direct copper-to-copper connections offer a couple
of advantages. First, they can be much smaller than even the smallest of solder-based microbumps, increasing the interconnect density. There are no solder-related problems, no deforming. The placement of each copper pad is tightly controlled and they are fully isolated by the dielectric material surrounding them. That means you can place them much closer to each other. The main advantage of a higher interconnect density is an increase in energy efficiency.
Just by having more, smaller and shorter interconnects, hybrid bonding reduces resistance by about 90% compared to soldered interconnects, which means the energy cost to transport a single bit is reduced. Using hybrid bonding, AMD was able to increase the interconnect density by 16x compared to an already small microbump technology. This slide gives a very good idea of just how much smaller hybrid bonding interconnects really are. In the
image you can see traditional C4 bumps as grey circles and how close they are usually placed to each other. The smaller blue dots are microbumps, a more advanced form, but still solder-based. And the orange dots represent hybrid bonding. A massive difference. This means you can bond two chips in such a way that they behave almost like one single chip.

The second advantage is that placing two chips directly on top of each other, and not having to use layers of microbumps and solder in-between, also reduces latency. A hybrid bonded chip can have shorter access times to parts of the chips below or above it than even to some areas inside that very chip, because the signal only has to travel vertically. That's what allows AMD to
extend the L3 cache of its X3D CPUs, because the cache chiplet, no matter if above or below, is so close, almost as if it were on the same die.

In a nutshell, hybrid bonding allows you to connect chips with very high bandwidth, low latency and great energy efficiency. It basically bonds two chips as one. It's better in every single way compared to previous solder-based solutions. But of course, it's not without drawbacks.
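
To make the 16x density figure a bit more tangible, here is a minimal Python sketch. It assumes pads sit on a simple square grid, so density scales with the inverse square of the pitch. The pitch values are illustrative assumptions on my part, not official vendor numbers, and the function names are made up for this example.

```python
import math

# Illustrative pitches in micrometres. These are assumptions for the sake of
# the example, not vendor data.
PITCHES_UM = {"C4 bump": 130.0, "microbump": 36.0, "hybrid bond": 9.0}


def pads_per_mm2(pitch_um: float) -> float:
    """Pads per square millimetre on a square grid with the given pitch."""
    pads_per_mm = 1000.0 / pitch_um
    return pads_per_mm ** 2


def density_gain(old_pitch_um: float, new_pitch_um: float) -> float:
    """How many times more pads fit in the same area after shrinking the pitch."""
    return (old_pitch_um / new_pitch_um) ** 2


def pitch_shrink_for_density_gain(gain: float) -> float:
    """Linear pitch reduction needed to reach a given density gain."""
    return math.sqrt(gain)


if __name__ == "__main__":
    for name, pitch in PITCHES_UM.items():
        print(f"{name:12s} pitch {pitch:6.1f} um -> {pads_per_mm2(pitch):10.0f} pads/mm^2")
    gain = density_gain(PITCHES_UM["microbump"], PITCHES_UM["hybrid bond"])
    print(f"hybrid bond vs microbump: {gain:.0f}x density")
```

The takeaway is that a 16x density increase only needs the pitch to shrink by a factor of 4, because density grows with the square of the pitch reduction.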

One disadvantage of hybrid bonding is that it requires a FAB-like environment. Traditionally, semiconductor manufacturing and packaging have been two very different parts of chip production. Manufacturing needs cleanrooms, because even a single particle can negatively affect the photolithography process. Wafers are constantly cleaned and very high precision is required. But once the silicon is finished, the packaging could be done in a much less controlled environment with less precise machines and without the need for cleanrooms, which results in lower costs.

Hybrid bonding changes that. The surfaces of the chips that you want to bond have to be super smooth, which requires CMP, so-called chemical mechanical planarization. Even a single particle between the bonding layers can result in a lot
of defective interconnects, which is why you need a cleanroom. Smaller interconnects also mean you have to align the chips with micron-level precision, which requires higher-precision tools.

With traditional packaging, you could choose from a variety of Outsourced Semiconductor Assembly and Test companies, so-called OSATs, to handle the packaging of your chips. You would produce them at a FAB and then ship the finished
silicon to another company for packaging. With advanced packaging and specifically hybrid bonding, the packaging is usually done at the semiconductor manufacturing company itself, because it does need a FAB environment and FAB tools. And since AMD is manufacturing at TSMC, the hybrid bonding is also handled by TSMC. To be precise, AMD is using TSMC's SoIC-X, where SoIC is short for "System on Integrated Chips". By the way, Intel's next-gen Foveros Direct is
also using hybrid bonding.

With hybrid bonding, there are two primary options when it comes to the process flow. Hybrid bonding can either be done as Wafer-to-Wafer (W2W) or as Chip-to-Wafer (C2W), sometimes also called "Die-to-Wafer" (D2W). Wafer-to-Wafer, as the name implies, uses hybrid bonding to directly bond two whole wafers. The main advantage is a high bonding throughput, as you bond entire wafers with few preparatory steps. For this type of bonding,
the chips on both wafers need to be the same size, as they have to be perfectly aligned with each other. After both wafers are manufactured, they are prepared, which might require some wafer thinning. Next, the wafers are cleaned, the hybrid bonding layer is activated and the wafers are bonded in the way we just discussed. Only after the bonding process is finished are the dies cut from the now double-stacked wafer.

But there's one disadvantage to this approach. When you bond wafers to each other, you can't bin the chips beforehand, you have to work with what you've got. That means some of the top and bottom chips will have defects. You could end up with a working cache chiplet on a defective CPU or vice versa. That's where Chip-to-Wafer comes
in. In this hybrid bonding process flow the wafers are cut and binned before the bonding process and only so-called "known good dies" (KGDs) are selected for the hybrid bonding. Chip-to-Wafer has two different ways of aligning the chips once they are cut and sorted. You can
either place the top chiplet directly onto the bottom chiplet, or you use a so-called "reconstituted wafer". This means you are basically re-building or re-constituting the initial wafer, but this time only with working chips. So you take the original wafer, dice it, test the chips and then you place the good ones back on a new wafer. You can do this with only
one of the chips, so for example you could bin the cache chiplets, place them on a carrier wafer and then hybrid bond them to unbinned CPU chiplets - or the other way around. Or you can bin both chiplets and use two reconstituted carrier wafers for the hybrid bonding process. The bonding process itself then looks exactly the same as Wafer-to-Wafer bonding.

The advantage of Chip-to-Wafer is clear: you can control which chips you use for the bonding process. And if the chips you want to bond to each other are of different sizes,
you don't have a choice, since you can't use Wafer-to-Wafer and have to use Chip-to-Wafer.

But there are also disadvantages. Chip-to-Wafer requires a lot more steps. First you have to cut and bin the dies, then attach them to a carrier wafer, then perform the bonding process, then remove the carrier wafers again. Cutting the dies before bonding is also a problem, because the wafer dicing process releases a lot of particles, which can contaminate the surfaces of the chips and negatively affect bonding. A single particle can block the bonding process
for many interconnects. That's why Chip-to-Wafer bonding doesn't use blades or even lasers to cut the dies from the wafer, but plasma, because plasma cutters create the fewest particles during the wafer dicing process.

If you are interested in learning more about advanced packaging, I highly recommend checking out SemiAnalysis. They cover everything,
from packaging technologies to process flow, the players in the industry and much more. There's a 5-part in-depth series on advanced packaging. I can guarantee that if you read them all, you will understand advanced packaging on a new level. I've linked all five articles in the description below. They are a lot to take in, but completely worth it in my opinion.

But enough with theory. We're here to figure out what AMD's 3D V-Cache CPUs really look like. Let's start with the 1st gen, which is used on Zen 3 and Zen 4.
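
One quick aside in numbers before the 1st gen walkthrough: the Wafer-to-Wafer versus Chip-to-Wafer trade-off from above is essentially a compound yield question. The following Python sketch assumes that defects on the two dies are independent and uses made-up yield values. The function names are hypothetical and only serve the illustration.

```python
def w2w_stack_yield(y_top: float, y_bottom: float, y_bond: float = 1.0) -> float:
    """Wafer-to-Wafer: dies are paired blindly, so a stack only works if
    the top die, the bottom die and the bond are all good."""
    return y_top * y_bottom * y_bond


def c2w_one_side_binned_yield(y_unbinned: float, y_bond: float = 1.0) -> float:
    """Chip-to-Wafer with known good dies on one side only: the stack still
    depends on the yield of the unbinned wafer."""
    return y_unbinned * y_bond


def c2w_both_binned_yield(y_bond: float = 1.0) -> float:
    """Chip-to-Wafer with known good dies on both sides: only the bond can fail."""
    return y_bond


def good_dies_lost_w2w(y_top: float, y_bottom: float) -> float:
    """Share of W2W stacks where exactly one die is good and gets wasted
    on a defective partner."""
    return y_top * (1.0 - y_bottom) + (1.0 - y_top) * y_bottom


if __name__ == "__main__":
    # Hypothetical yields, chosen only to show the effect.
    y_cpu, y_cache, y_bond = 0.90, 0.95, 0.99
    print(f"W2W stack yield:          {w2w_stack_yield(y_cache, y_cpu, y_bond):.3f}")
    print(f"C2W, cache binned only:   {c2w_one_side_binned_yield(y_cpu, y_bond):.3f}")
    print(f"C2W, both binned:         {c2w_both_binned_yield(y_bond):.3f}")
    print(f"Good dies wasted in W2W:  {good_dies_lost_w2w(y_cache, y_cpu):.3f}")
```

The higher the individual die yields, the smaller the penalty for bonding blindly, which is why Wafer-to-Wafer becomes more attractive for small, high-yield chiplets. The sketch ignores the extra cost and process steps of dicing, binning and reconstitution.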

AMD's visual representation is very clear. The CPU chiplet, also called CCD, is on the bottom and the smaller 3D V-Cache chiplet sits on top, in the center of the CCD. Because it's not as big as the CCD, two pieces of silicon are placed next to it for structural support and to create an even surface. But that doesn't fit with the hybrid bonding process flow AMD is using. For Zen 3 and Zen 4, AMD uses reconstituted wafers for both the CPU chiplets and the cache chiplets.

Let me explain. This is a Zen 4 CCD. Right now we are looking at
the side that's usually facing down, towards the packaging substrate. Remember, current chips are so-called flip chips: they are placed face down. In order for us to see the Zen 4 CCD transistor structures in this picture, Fritzchens Fritz had to remove the CCD, flip it around and grind down all the metal layers that sit on top of the transistor layer. This is what it actually looks like with the metal layers still in place. These metal layers are facing downwards, and then solder bumps are used to bond it to the PCB. We can also see that the die itself is much thicker than the actual transistor layers. And because the side we are looking at right now
is facing down, all that unused silicon is on the backside, facing upwards. But if we want to build an X3D chip, that's where the cache chiplet is supposed to sit. What's going on?

The reason silicon wafers are much thicker than the few layers that are actually needed to manufacture the transistors is structural integrity. Wafers have to go through thousands of process steps inside a semiconductor FAB and they are already thin and fragile. If
they were even thinner, you'd run the risk of damaging them during the manufacturing process. The extra silicon adds the needed structural support. But in order to stack a chip on top, which in the case of a flip chip means stacking it on the back, you have to remove the structural silicon on the backside. To allow the cache chiplet to connect to the CCD, the CCD first has to be thinned down in a process called "wafer thinning". An entire wafer
of Zen 4 CCDs is thinned at the same time. The result is that only the super thin transistor and metal layers of the CCDs remain. It's also a very delicate process, because wafer thinning compromises the structural integrity of the original wafer by removing the silicon that was providing the structural support in the first place. For that reason so-called "support" or "carrier wafers"
are used during the process, which are bonded to the wafers that are being thinned down, so they don't collapse when they become too thin. The result of that wafer thinning process is that we have super thin Zen 4 CCDs, that are little more than transistor and metal layers, sitting on top of a carrier wafer.

Next we have to prepare the 3D V-Cache. Because the cache chiplets are smaller than the CCDs they will be bonded to, they also have to be placed on a carrier wafer for bonding. First, to align them properly. And second, if TSMC pressed differently sized chips against each other, they would most likely break. That means both chips that are being hybrid bonded via two wafers have to have the same surface area. The reconstituted carrier wafer has to be a combination of the 3D V-Cache chiplet and the two small pieces of structural silicon to achieve the same surface area as the CCDs.

To recap: on one side we have the Zen 4 CCDs which have been thinned down and placed on a carrier wafer for bonding. On the other side we have the 3D V-Cache chiplets, which also have been thinned down in order to be placed onto the carrier wafer with the two structural pieces next to them. These two reconstituted wafers are then prepared for the hybrid bonding process flow and bonded to each other. But that's not the final product. First, we have to remove the carrier wafer that's bonded to the bottom of the CCD, because that's where we want to connect the chip to the packaging substrate and the carrier wafer would be in the way. But we can't remove the carrier wafer that's bonded to the top of the cache, because even combined, CCD and cache are still too thin to provide structural support.

The X3D stack has to be the same height as a standard Zen 4 CCD that wasn't thinned down. The final result looks like this: the thinned Zen 4 CCD is on the very bottom. Right above is the thinned cache chiplet and the two filler pieces that even out the surface area during hybrid bonding. And above that we have the actual structural silicon, which gives structural support to the whole stack and allows it to reach the same height as a standard Zen 4 CCD. That's what a 1st gen 3D V-Cache CPU really looks like. It's a silicon sandwich with five different pieces. Three of them are filler and support silicon, only two are active. This layout also
explains the thermal issues of Zen 3 and Zen 4 X3D CPUs. The problem isn't the amount of silicon that sits in-between the CCD and the heat spreader. Both variants are the same height. It's also not the 3D V-Cache itself, which does produce some heat, because it only covers the cache areas on the CCD. The real reason is all the bonding layers. Only the small area between the CCD and
the cache chiplet uses hybrid bonding, as in dielectric material and copper. The other areas, and that includes the entire bonding layer of the support silicon, only use a simple oxide layer. That means the heat from the CPU cores on the CCD has to go through the oxide layer between the CCD and the filler silicon and then through another oxide layer between the filler silicon and the support silicon. Silicon on its own already isn't that great of a thermal conductor, but it's not horrible either. But these oxide layers are real thermal barriers
that block the heat from moving upward. The cache isn't the problem, the silicon isn't either. The bonding layers are.

Now that we know what 1st gen 3D V-Cache looks like, let's take a look at the 2nd gen 3D V-Cache that was introduced with Zen 5. What has changed besides placing the cache chiplet below the CCD? First, CPU and cache chiplet are the same size now, which in theory could allow AMD to use Wafer-to-Wafer hybrid bonding. We are talking about very small chiplets in the range of 70 mm²,
with a high yield. Wafer-to-Wafer bonding could be cheaper, even without the ability to select known good dies before the bonding process. From what I'm hearing though, Zen 5 X3D still uses Chip-to-Wafer. This decision could be impacted by a lot of different factors such as packaging capacity, volume, and so on. If AMD or TSMC don't tell us, we can't really know.

There are two ways a 9800X3D could be manufactured. It depends on whether AMD still
uses two reconstituted wafers for hybrid bonding, meaning both the CCD and the cache chiplet are diced and binned before the bonding, or if that applies to only one. The option with only one reconstituted wafer is the most straightforward. We start with the new 3D V-Cache chiplet. Since it's placed on the bottom now, it has to be thinned down to reveal the hybrid bonding interconnects and TSVs on the backside. So we would take the wafer with the cache chiplets, run it through the wafer thinning process and then build a reconstituted carrier wafer, which we then bond to a Zen 5 wafer. The Zen 5 CCDs would still require a little bit of thinning,
because the final product has to be the same height as a standard Zen 5 CCD and the cache chiplet on the bottom does add a little bit of height. But that would be it.

The other possibility, and the one I think is most likely, is that both cache and CPU chiplets are diced and binned before the bonding process. This would mean that AMD still works with two reconstituted wafers for the hybrid bonding process. And because TSMC currently only uses thinned dies for reconstitution, it would mean that this approach still requires structural support silicon on top. The end result would look like this: a super thin 3D V-Cache die on the bottom, a little thicker but still thinned CCD above and then a piece of structural silicon to top it off.
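
To put the oxide layer argument into rough numbers, here is a minimal Python sketch of a one-dimensional heat path above the CPU cores. It treats each layer as a simple slab with resistance proportional to thickness divided by thermal conductivity. The conductivities are approximate textbook values for bulk silicon and silicon dioxide. All thicknesses are hypothetical placeholders, since the real layer thicknesses aren't public, and the model ignores heat spreading and interface effects.

```python
K_SILICON = 150.0  # W/(m*K), approximate bulk silicon near room temperature
K_SIO2 = 1.4       # W/(m*K), approximate silicon dioxide


def layer_resistance(thickness_um: float, conductivity: float) -> float:
    """Area-normalised thermal resistance t/k of one slab, in m^2*K/W."""
    return thickness_um * 1e-6 / conductivity


def stack_resistance(layers: list[tuple[float, float]]) -> float:
    """Series resistance of a stack given as (thickness_um, conductivity) pairs."""
    return sum(layer_resistance(t, k) for t, k in layers)


def equivalent_silicon_um(oxide_um: float) -> float:
    """Thickness of silicon with the same resistance as the given oxide thickness."""
    return oxide_um * K_SILICON / K_SIO2


# Hypothetical heat paths above the CPU cores, same total silicon in both cases.
TWO_OXIDE_LAYERS = [(1.0, K_SIO2), (50.0, K_SILICON), (1.0, K_SIO2), (650.0, K_SILICON)]
ONE_OXIDE_LAYER = [(1.0, K_SIO2), (700.0, K_SILICON)]

if __name__ == "__main__":
    print(f"1 um of oxide ~ {equivalent_silicon_um(1.0):.0f} um of silicon")
    print(f"two oxide layers: {stack_resistance(TWO_OXIDE_LAYERS):.2e} m^2*K/W")
    print(f"one oxide layer:  {stack_resistance(ONE_OXIDE_LAYER):.2e} m^2*K/W")
```

With these conductivities, every micrometre of oxide costs about as much as 107 micrometres of silicon, which is why the number and thickness of the bonded oxide interfaces matter more than the total amount of silicon.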

Of course this would mean that there's still one oxide layer above the CCD, but that's half as many as before. And in a video from Gamers Nexus, Steve quoted AMD engineers about how they worked with TSMC to improve the oxide layers so they are not as thick and don't block the thermal transfer as much. In the end, these are the two possibilities. It would be cool if Zen 5 X3D only consisted of two dies and didn't need additional support silicon.

But there is a good chance that it still uses one. Unless AMD tells us, we won't know for sure.

In my Zen 5 deep-dive video I've extensively talked about the visible changes to the L3 cache area and TSVs. Now we know the TSVs are in the cache chiplet. In hindsight it should have been obvious. But there's a reason why I dismissed the idea that the cache would be on the bottom. And it's not because I thought it wasn't possible. MI300 shows that AMD knows how to place cache below the compute. The reason I didn't expect this reversal is power delivery. Yes, placing the cache on top adds thermal problems. But the X3D
CPUs were still the fastest gaming CPUs even with lower clock speeds.

If you stack two chips on top of each other, no matter if you use microbumps or hybrid bonding, you have to get power to the chip on top. This is done via so-called TSVs, or through-silicon vias. The cache chiplet does use a little bit of power,
but it's nowhere near the power consumption of the CCD. AMD already used a lot of TSVs when the cache chiplet was on top. Placing the CCD on top requires a massive increase in TSVs. All the data paths, for example the Infinity-Fabric-on-Package, have to be routed through the cache chiplet too, because that interface is located on the CCD. And while there's more space inside the cache chiplet for routing TSVs, because it's much larger than the area actually needed for the cache cells, it's still silicon you have to go through. And that's just the added complexity. This setup also increases resistance, because the power hungry CPU cores on the CCD are fed through TSVs, which increases the distance the power has to travel.

What I'm trying to say is that flipping the cache below the CCD improves thermals, but adds a lot more challenges in other areas. An increase in complexity I didn't expect AMD would take as a trade-off. Especially
since it looks like the 2nd gen 3D V-Cache will be exclusive to consumer products for now.

So, why is AMD doing all of this? AMD hasn't changed the Infinity Fabric packaging since Zen 2, but after two generations of 3D V-Cache they re-work the entire process flow for a limited consumer product and trade a massive increase in routing complexity for a slight thermal advantage? I think what we are seeing with Zen 5 X3D is a test run for AMD's future packaging plans. And MI300 might actually be a good indication of what to expect in the future. Because MI300 not only puts cache into the base die, but also some of the I/O. Right now, every Zen 5 CPU still
has a large I/O die that's connected to the CPU chiplet via slow and power hungry traditional packaging. If we can move the cache into the base chiplet, why not move everything else there? I can imagine a large base chiplet with all the I/O and extra cache. It adds synergy, because both I/O and cache don't scale well with new process nodes, so you can produce both on the same, older node. And you can place the CCD directly on top, via hybrid bonding. Such a cache and I/O base chiplet would also be big enough for more than one CCD. I think you see
where I'm going. And while all of this is pure speculation, it makes me excited for the future. A future made possible by hybrid bonding.

Did you expect AMD would place the cache below the CCD or did it catch you by surprise like me? I'd also like to know what you think AMD's next-gen packaging will look like. Do you think we might see a large base chiplet with I/O and cache used
as an interposer for the CCDs stacked on top? Or do you have different ideas? Let me know in the comments down below. I always enjoy reading your comments, even if I can't reply to them all. I hope you found this video interesting and see you in the next one!

2024-12-09 09:28