HOPE XV (2024): The Real Danger From AI Is Not the Technology

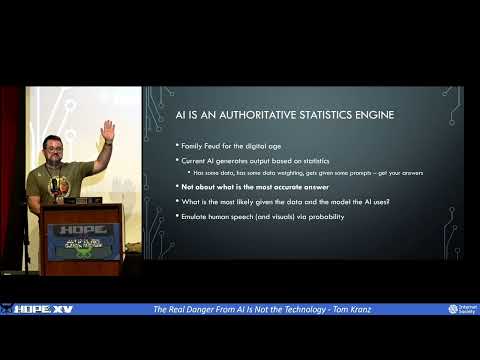

Evening, everyone. Good to be here again, I hope. So, in case you come away from this talk thinking I'm very anti-AI and very against machine learning and artificial intelligence: don't. As mentioned, if you go to my LinkedIn profile you can download that book for free from Nvidia. It's got some actual good use cases for AI, especially for defensive cybersecurity. There are lots of really great opportunities for using machine learning and AI and large language models, but that doesn't make a very interesting talk, so we're going to be looking at the bad side of stuff.

Before I get any further, one thing I will mention about myself. Obviously, from the accent, I'm English. I have a German name, I live in Italy, and I have [unclear] citizenship, so I'm a bit of a mutt. I'd like you to remember those bizarre data points as we go through the talk, because they're going to become a bit more relevant towards the end.

So, first of all, one of the big problems with AI, and one of the big problems with the tech industry, is that, second only really to politics, the tech industry is one of the largest concentrations of liars and thieves and grifters. We've seen this repeatedly. Whenever a new technology comes out, everyone slaps that label on their shiny 20-year-old product and says, "Yeah, antivirus infused with AI! We have anti-malware software that uses the blockchain!" They jump all over it like fleas on a rat. It's a problem because, if we want to talk about the dangers of a new technology, this kind of muddies the water. We can't really have a meaningful conversation about the pros and cons of a technology if everyone is saying, "Oh yeah, my thousand-dollar phone has AI in it because it can use your picture to do a Google search."

So one of the things we need to do first of all is look at what we mean by AI and what the current crop of AI solutions is actually doing. For that we're going to go back. Obviously I've got lots of gray hair; we're
going to go back before even my early days of getting involved in computing, all the way back to the Second World War. We're going to talk about this chap, Alan Turing. He was fairly clever. He came up with one of the first designs for the programmable digital computer, and specifically a digital computer, because back in the '30s and '40s a computer was some bloke with an abacus who could use it very, very quickly and add and subtract stuff very, very quickly. That was the pre-war vision of what a computer was. Turing came up with the idea of a digital computer, and obviously that helped massively with the stuff he's most famous for, which is cracking codes in the Second World War. But some of his real breakthroughs in the area were in talking about a programmable digital computer: rather than building a single-use computer that can be used to crack codes, create a digital computer that could be programmed to carry out many, many functions. So in many ways his ideas were the precursor of the laptops and the mobile phones and all the other smart devices that we've got today.

He then developed the idea of what he called a Turing machine. If I can develop a digital programmable computer that can be programmed to carry out a task, it can be programmed to carry out the tasks of another computer, maybe one that's not programmable. Some of you will recognize this. We deal with it every day, especially if you're involved in arcade machine or game console hacking: this idea of emulators, of virtual machines, of containers. We use it every single day, and most modern phones these days will have some sort of containerization in them to split work profiles from personal profiles. This was an idea that Turing came up with during the war, when he was developing his code-breaking machines. Then he took it a step further and said: well, if we can develop a machine that can be programmed to emulate another machine, why can't we develop a machine that can be programmed to emulate people?
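
To make the emulation idea concrete, here is a minimal sketch in Python. It is an illustration only, not something shown in the talk: one general-purpose program that behaves like whichever simple machine its rule table describes. The names `run_machine`, `FLIP_BITS` and `APPEND_ONE`, the `"start"`/`"halt"` state names and the `_` blank symbol are all assumptions made for this example.

```python
# Illustrative sketch only: run_machine, FLIP_BITS and APPEND_ONE are
# hypothetical names chosen for this example, not taken from the talk.

def run_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Emulate the machine described by `rules` on `tape`; return the final tape.

    `rules` maps (state, symbol) -> (symbol_to_write, "L" or "R", next_state).
    The emulated machine stops when it enters the state named "halt".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)            # read the cell under the head
        write, move, state = rules[(state, symbol)]
        cells[head] = write                        # write, then move one cell
        head += 1 if move == "R" else -1
        steps += 1
    if not cells:
        return ""
    ordered = (cells.get(i, blank) for i in range(min(cells), max(cells) + 1))
    return "".join(ordered).strip(blank)


# Machine 1: walk right, inverting every bit, and halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

# Machine 2: walk right over a run of 1s and append one more (unary +1).
APPEND_ONE = {
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("1", "R", "halt"),
}

print(run_machine(FLIP_BITS, "1011"))   # 0100
print(run_machine(APPEND_ONE, "111"))   # 1111
```

The same `run_machine` function runs both tables unchanged, which is the point: the "machine" being emulated is just data handed to a general-purpose program.
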
Now, there are lots of arguments to be had. Okay, there are some very, very simple people, like politicians, who are very, very easy to emulate: you just stand up and you lie. Easy job, anyone can do that. I've got a pocket calculator that can do that. Turing was taking it a step further and saying: well, actually, if we think, and if we take in input and we process it and then we generate output, that should be able to be encapsulated inside a programmable computer. And he went a step further and said: okay, if we do that, surely this machine intelligence is going to be indistinguishable from a real person. Obviously not a politician; I'm talking about a normal, thinking person who's capable of original thought.

So he developed this idea of a Turing test. The Turing test is essentially a bunch of people who sit down and ask a series of questions designed to suss out: am I talking to a very, very clever machine, or am I talking to a very, very stupid politician? The Turing test remains kind of the gold standard for how we define artificial intelligence: it's essentially something that can pass the Turing test and can pass itself off as human. A lot like Boris Johnson, if you're in the UK.

Now, if we look at current AI solutions, they can pretend to be human, they can emulate human speech, but none of them are capable of actually passing the Turing test. They're not actual artificial intelligences, and marketing people have come up with a whole bunch of largely inaccurate labels to call them: generative AI, large language models, blah blah blah. There have been lots of really great talks at HOPE so far, and there are some more tomorrow as well, that dig into the technical aspects of how the most common ones, large language models, actually work. They look into the nitty-gritty of the technology and the underlying technical functions, and how you program them and how you grow them. Really great talks, very, very technical. This is not one
of those talks. I'm going to explain how LLMs, how generative AI, work via the medium of game shows. Some of you may recognize this. This is Family Feud, I think you call it over here; in the UK we call it Family Fortunes, and there's a whole bunch of social discourse that could be explored about why those are two very, very different names. But this is fundamentally how AI, as it is sold to us today, works. We have a pool of data, gathered from data subjects who are members of the public. We have a prompt. And then people have to guess the statistically most likely answer to that prompt. Whoever invented this was an absolute genius and deserves the Nobel Peace Prize. Statistics as entertainment: who would have thought it? If anyone's ever studied economics at university, you're probably as gobsmacked as I am that this actually took off and is screened across multiple countries as well. Absolutely genius idea.

Fundamentally, AI as we think about it is an authoritative statistics engine, and it works in exactly the same way as Family Fortunes or Family Feud does. It generates output based on statistical analysis of the model that it's been given and the data that it has access to. This is an important distinction, because large language models, generative AI, don't give out the most accurate answer. They give out what is statistically the most likely answer based on the data they have and the model they've been trained on, and those are two very, very different things. Yes, if you go to ChatGPT 4 and ask it a bunch of questions, it will probably come back with something that makes sense and sounds plausible, because it has a huge amount of data to analyze off the back of that. If you try to get a large language model to give you output for something it's not been trained for, or that it has insufficient data for, it's going to spew out a bunch of garbage. It's going to be unintelligible nonsense.

I call this an authoritative statistics engine because all of the chatbots
especially, but also most of the large language models and most of the AI tools we use today, are programmed in such a way that they give out their output in a way that makes us believe them. From a very young age we are conditioned to respect authority figures: listen to your teachers, listen to your parents, go to the police if you're lost, all this sort of stuff. I'm fairly certain no one still trusts politicians anymore. No? No, didn't think so. We are more likely to believe someone or something if it speaks in an authoritative way. You're doing it here, now. You're listening to me; I'm here pretending that I know what I'm talking about, and I'm speaking very confidently about it, and you're all sitting there going, "Yeah, yeah, Tom knows his stuff. This is great. What an awesome talk."

Hands up: who has heard a politician in the last two months confidently talk nonsense about a subject they know nothing about? Yeah, most of you. Of course; it's election year, right? They're all coming out of the woodwork. We've just had general elections in the UK, there are European elections, and you can't throw a stone without hitting a politician who's spouting off about something they know nothing about. But people listen to them, and they listen to them because they speak in an authoritative way. They have mastered the art of appearing cleverer than they are.

This is fundamentally built into all large language models. This is fundamentally built into AI solutions. If you ask ChatGPT a question and it turns round and says, "Well, I kind of think that the sky is blue and the grass is green, but there's only a 60% probability based on my data," you'd think it was rubbish. But if it confidently says, "Yes, I know that the sky is red and the grass is brown," people will believe that, because it is delivered to them in a confident, authoritative way. We're going to get back to why there are some issues with this authoritative presentation of data later on as well.

Now, lots of people have been talking about the dangers of AI, and this is stuff that
we've all heard before. We've heard it before as technologists, we've heard it before as hackers, we've heard it before as security people. AI is a neutral technology; it is a dual-use tool. Yes, it's absolutely fantastic for phishing attacks. I love it, it's brilliant. Yes, it's also good for learning a new language. I live in Italy, Italian's difficult, and AI is helping me an awful lot. It's great. But we've seen this before. We can go all the way back to the '80s, when Alec Muffett created Crack for Unix systems to crack passwords. The outrage and the hysteria in the press were massive then. We saw it when Back Orifice was launched. We saw it when SATAN was launched. We're still hearing it about encryption even today. In 60 years' time, when we're all back here for another HOPE and we're just heads in jars like in Futurama, we're still going to be moaning about governments banging on about how encryption is dangerous and should be outlawed. These are all dual-use technologies. They have pros and they have cons, but fundamentally that's because at their core they are neutral technologies, and AI is another manifestation of that. It has good uses and it has bad uses. Like I said: talk about the good uses of AI, crappy talk. Let's talk about the bad uses of AI. Let's look at the real dangers of it.

It kind of breaks down into three main areas. We've got the companies and the people behind AI solutions. We've got the issues around data inaccuracy and the opacity of the data and the models. And then we've got issues around data theft and privacy breaches. AI as it is built today poses zero chance of some sort of Skynet, Terminator-style, HAL 9000 uprising. It is exponentially more difficult to create an AI that can emulate a pet than it is to create an AI that can pretend it's talking like a human being.

Some of you will be looking down this list, some of you have been to some of the other great talks this weekend, and you'll be looking at it and saying: wait, how come you're not talking about
misinformation? That's like the big headline grabber at the moment: AI is going to impact our elections. The problem is that using AI for misinformation is a symptom. AI is not the cause. Misinformation has been around for as long as there's been conflict. If you go all the way back, if you believe this sort of stuff, to Cain and Abel, I'm fairly certain that Cain would have turned around and gone, "I didn't kill my brother. It was fake news." This has been endemic throughout history.

And when we look at misinformation we don't have to go that far back, either. We can go back to when the internet started to be generally available: the hysterics in the press at the time about how the internet was going to undermine the social fabric, how it was going to mislead people, how it was going to break the democratic process. Before that we had the same arguments with TV. When politicians first started appearing on TV, when TV started to get popular, again there was a social outcry: this is going to give politicians an unfair advantage; a politician who can speak eloquently on TV is going to get more votes than a politician who comes across as a buffoon. Which is the same argument we had when radio became available in households, which was the same argument we had when newspapers started to be printed. When the printing press came out, one of the very, very early arguments of the Catholic Church against it was that the plebs would be able to get their own copies of the Bible, and then they'd be able to see that the priests had been talking nonsense at them, that the priests had been talking to them about stuff that wasn't in the Bible, and that would undermine the fabric of the church and therefore society. It's bad technology!

Misinformation has been around for ages. AI aids misinformation; it helps misinformation. But is misinformation necessarily bad? If a government is using misinformation to push propaganda, yeah, okay, that's bad. But we can use the same tool to push misinformation to
undermine government propaganda, and people do. There have been social uprisings that have been enabled by things like social media and social networking. If you think back to the Arab Spring uprisings, social media played a huge part in that, which is surprising to me, because I think all social media is a total cancer. It's a blight on humanity, and yet here it is effecting positive social change. So I don't think misinformation is a uniquely AI problem. AI is a tool. It can be used for misinformation, and that's kind of handy for lots of people, but it's not a real danger. Thank you, Terminator.

So let's dig into some of the causes of that first one: the companies and the people behind AI. Now, I'm going to pick on Sam Altman, and I'm going to pick on him for a number of reasons. He's a hateful human being. He is the poster child of terrible tech bros. And the stuff that he has done with OpenAI is a perfect example of the dangers of large tech companies and the amount of data they hold.

While Sam Altman was going on with his very, very public "Oh, woe is me, AI is going to destroy humanity, it's the new Skynet, why won't government step up?", behind the scenes he was going to those government legislators and saying, "You know what? AI isn't a problem as long as you trust us tech bros. It's all those hobbyists, it's all those upstarts who use AI for bad. But we've been entrusted with safeguarding the technology that underpins Western civilization. You can trust us." So he was publicly saying AI is bad, while behind the scenes lobbying politicians and saying, "AI is good. If you are going to enact legislation, do it to protect us, because we know best." Other large tech companies joined in, because hey, why not? Why should OpenAI get all of the pie? Google, Meta, Amazon, Microsoft: they all piled in with that.

The end result was that the public hysteria about AI drove interest in AI, which pushed OpenAI's valuation through the roof, which enabled Sam Altman to execute a boardroom coup and to make, frankly, a ton of money
off the back of that. It also meant that legislators enacted laws around AI that erected barriers to competition, and I'm going to specifically call out the EU. Big fan: I like the DSA, I like GDPR. The most recent AI Act? Total bag of spanners. Utterly, utterly useless. All it does is create a barrier to entry against tech company competitors, and we'll get into ways we can fix that a bit later on.

I'm just going to point up here as well, to that newspaper article about Sam Altman's personal net wealth, because not only did he manipulate the media and manipulate government to secure a stranglehold on a new technology, he then went off to all the other companies where he sat on the board and had influence, and got them to make deals with OpenAI. Who uses Reddit still? A few people, someone at the back, excellent. Reddit's recent IPO served no purpose apart from making some venture capitalists money. Reddit is essentially an online forum. It's a dead business model; you can't make money from that, and the last refuge of scoundrel VCs is to say, "Oh yeah, we'll make money from advertising." Reddit went one step further, because they had this chap called Sam Altman on the board. Clearly it must have been a different Sam Altman, because this would have been a gross conflict of interest, but this shadow Sam Altman went to the Reddit board and said, "You know what? Advertising's old hat. You've got this huge pile of data that would be a great data set for an AI company. It just so happens I know a really, really good one."

Reddit had their IPO. They rewrote their terms of service and snuck in a bunch of stuff that basically said: all of your content, including all of your previous content, we're going to hive off to AI companies, and you can tell us not to, but it's too late because we've already done it. They signed a deal with Google, and a few weeks later they signed a deal with OpenAI. And that's just one of the many backroom deals that Sam Altman has done for personal enrichment, but also to harvest data to power
his main cash cow, OpenAI.

Speaking of data, the second big danger from AI is data accuracy and data opacity. If you were here for the talk immediately before this one, there was some great stuff about GDPR. GDPR gives EU citizens (and the copycats of GDPR that have popped up globally give people similar sorts of rights) the right for data that's incorrect to be fixed, the right to view what data is held about you, and the right for that data to be deleted. The problem with the large data sets that are required to train AI, and to get AI to do what it does, is that no one wants to talk about where that data came from. And they won't talk about it because they've stolen it. I haven't given my consent for my data to be sucked into OpenAI or Google or Facebook or any of those other training systems. They are also not talking about how their models were trained. These two things combined mean that any large language model, any generative AI, has a huge amount of opacity. It is impossible for an outsider to understand how it does what it does, which means that if it spits out incorrect data, I have a legal right for that data to be corrected, but the AI companies can't actually do it.

There's also the problem that this introduces bias. Depending on the data set that is created, we will get bias appearing with it. Now, my hands-down favorite AI tool ever was Microsoft's TayTweets: a fantastic social experiment in how people on the internet can do over big tech in a matter of days. It went live when some idiot at Microsoft said, "Wouldn't it be good if we plugged an AI straight into Twitter, possibly the most toxic cesspool on the internet? We'll just have that raw, unfiltered data come in and train our AI, and it will then turn into some sort of all-knowledgeable [unclear] that will guide humanity." What actually happened was that within two days TayTweets became a misogynist, racist
Nazi, and it wasn't shy about telling people about that. This is perhaps my most favorite tweet ever in the history of mankind, and it's from TayTweets, and it's an absolute banger. It's fantastic. It sums up the problem with data opacity and the data going in.

We equally had issues with Google's most recent AI attempt, its image generator, where it didn't matter what prompt you gave it, it always gave an output that didn't match up. This is a fun example: "Show me vanilla ice cream." Okay, it showed me chocolate ice cream. Why? Because there was an inbuilt bias within the Google team to address what they perceived, quite rightly, as data bias against minorities. Great, okay. But if you build that into the model and you don't think through the problem, you ask it to draw a picture of a white car and it's going to draw a black car. If people are relying on that output, foolishly, if people are trusting the output, you're going to get incorrect data out.

Now, that's not to say that meddling with models and training models so that they adjust for racial bias and societal bias is the wrong thing to do. It's not. We should be addressing that, but it should be addressed at the fundamental levels of the model, and it should be addressed with the data that we feed into it. If we feed in the raw Twitter feed, well, Twitter is rife with sexism and racism and polarized political ideals; it's never going to have any sort of valuable, meaningful output coming out. And because we don't know how these models are trained, and because we don't know the data that's used for them, apart from TayTweets, it means that we can never trust the output.

There are people out there who are relying on GitHub Copilot, for example, to generate code for them. Foreign hostile nation states (and for me, as a European, that includes the US) have been happily cloning GitHub repositories, injecting malicious code, and then using click farms to make it look like those repositories are very, very active, which means that
Copilot then says, "This is the most active repository, I will give this an artificially high weighting," sucks in the malicious code, and then spits it out to an unsuspecting developer, who then pastes it into production. Lo and behold, we get a load of organizations pwned, and no one knows why, because they've not checked the code.

This is a fundamental problem, and some data scientists (I'm not going to tar them all with the same brush) have called this "hallucinations," which fits in very nicely with the narrative that we're dealing with an artificial intelligence. The problem is that the phrase "hallucinations" to describe this is bollocks. It's not hallucination. The machine is not dreaming. We haven't entered some Philip K. Dick novel where we're going to have Blade Runners running around after these things, shooting them up because they're dreaming. It's a bug. It's a data issue, and it's one of the oldest issues we've got in technology: garbage in, garbage out. If you don't know how the model is trained and you feed it the wrong data, it's going to spit out gibberish. If you don't know what data you've got and you can't audit that data, you can't rely on the output.

And that brings me to the last major problem with this, which is fundamentally data theft. All of us here should already be quite familiar with the idea of large tech companies stealing our data, constantly mining everything that we do and using that data, initially for behavioral analytics to sell us more, and then later on for adverts to try to get us to click to buy more. Now that's being fed into AIs. It's being used as a data pool to drive this. And the problem is that that data isn't theirs. It's not theirs under copyright. It's not theirs under the laws of GDPR. It's not theirs under the various privacy legislation that's in place globally. And in many cases it's not theirs because, like with Reddit, they've retroactively changed the acceptable use policy, and data that you thought
was yours and was part of a community has been sold off wholesale, without your knowledge and without your ability to stop it. Large tech companies have decades of experience doing this, and they've got very, very good at it. This is one of the main reasons why AI companies refuse to talk about how they've trained their models or what data sets they're using. They're essentially pleading the Fifth, as you guys say over here. They're holding their hands up and saying, "Well, if we told you how it worked, the first thing you'd say is: wow, you've broken the law to do this, where are my lawyers?" So they're keeping schtum about it. No one wants to talk about it.

There have been some rumors that Google have been talking about, "Oh, we're going to sell AI solutions into the US military to help them with intelligence." Okay, great. They're a business, they're doing that. One of the side effects is that if you start to submit legal queries to Google saying, "We want to understand how the AI works," they're going to hide behind that nice, big, impenetrable shield: "This is a danger to our nation. This is critical national infrastructure, and you can't question us because we've done deals with the government. You're just going to have to shut up and use it."

As I mentioned, AI can't meet GDPR's legal requirements. I've not given consent for my data to go in, it can't even tell me what of my data it has, and it can't delete it. When ChatGPT (I think it was 4) was released, the Italian information commissioner immediately turned around and banned it from the entire country, because they correctly identified the fact that it was illegal. OpenAI then made some namby-pamby statements about, oh yeah... If any of you have watched South Park: Bigger, Longer & Uncut, Sam Altman basically did a Saddam Hussein and was like, "I can change, I can change." The Italians sucked it up, but they've been keeping an eye on it.

Some of you may know the name Max Schrems, an Austrian activist who properly stuck it to Facebook multiple times about their data
theft, and got the EU to levy some fairly hefty fines against Facebook, and struck down some inadequate data-sharing rules with the US. He started a group in Europe called noyb ("none of your business," it stands for, which is quite apropos). They've sent a bunch of requests in to OpenAI, basically saying, "You've given out some inaccurate data. Legally, I'm allowed to ask you to fix that. Do it. Oh, and by the way, I'm going to give you a subject access request: I'm legally allowed to ask you to give me a copy of the data you hold." OpenAI have formally turned around and gone, "We can't do that. That's not how AI works. You're just an activist. Don't worry your pretty little head about this technology stuff; we're the best." Now, noyb aren't going to take any of that, thankfully, and they've started enforcement action through the Austrian information commissioner, who are currently investigating OpenAI for widespread, flagrant breaches of EU law. This is great, because it starts to open that can of worms, so that other countries, other areas of the world (California's got a fairly decent privacy law) will hopefully be following suit as well.

So if that's all the bad stuff with AI, I don't want to leave you all with a sour taste in your mouth. What can we do about it?

I'm going to talk first of all about data poisoning, and I've got a very specific example up here. I personally have suffered food poisoning attacks from a fast food company; you can now get data poisoning attacks. Did any of you see that, what popped up? Let's let it cycle through again. Keep an eye on the top right (top left, even) of this fairly terrible McDonald's advert. There. Some of you may be familiar with the idea of subliminal advertising, which very early on in film and TV people worried about: in between the frames of pictures, people would insert things like "buy Amazon stock" or "give your data to Mark Zuckerberg." So they enacted laws about it. One of the interesting things about AI (there we go again), especially when it's in
things like autonomous vehicles, is that it is analyzing data far faster than the human eye. So things like subliminal advertising, where we splice malicious data into an audio or a video stream, are wildly effective against AI. For this particular one there's a link there: there's a paper where they have explored using animated advertising boards, animated billboards, and inserting things like speed limit signs or traffic signs for a split second in an animated advert, which (and this is a technical term) causes autonomous vehicles to shit themselves. We'll be driving along, sitting there going, "Oh, there's an advert for a salmonella-inducing burger, lovely," and the autonomous vehicle will be driving along going, "It says it's a 90 km an hour speed limit, I'm doing 120, slam on the brakes." It's a hugely interesting paper, and I encourage you all to read it. It's been published by the ACM, so it's not like an academic paper where we have to pay Elsevier three grand to go and read it. It's free on the web; you can go and look at it. Very, very interesting research, and they talk about this problem of poisoning the data feeds that come into autonomous vehicles, large language models, and things like that.

But we can take it a step further. Poisoning the well becomes a hugely effective tactic. Now, I'm a bit of an ass. I'm a troublemaker. I'm a huge fan of direct action, and that's got me into a lot of trouble over the years, so I'm a huge fan of poisoning the well. There are tools out there that you plug into your social media profiles, and they will overwrite everything with gibberish. Those are hugely successful for feeding crap data into companies that are stealing your data. You can get ones that will overwrite your Reddit history, you can get ones that overwrite your Twitter history, you can even get ones that'll overwrite your Facebook history. Plug them into the API, give them your credentials, who cares, right? It's a scorched-earth policy. I'm abandoning Reddit, pulling that ejection lever, and
I'm going to create a huge mess on the way out. These are very, very useful tools for punishing companies for breaking the law and stealing our data.

Another thing we can do is expose the botnets. There are a lot of posts that you see, especially now in an election year, where people on Twitter deliberately ask specific questions and use prompt injection attacks to get the AI-powered bots to freak out and start vomiting out key phrases. Then you'll see pictures where people say, "Look, here's a thousand accounts that said exactly the same thing and responded in exactly the same way. This is a botnet that is being powered by this specific AI. Expose it, shut it down." It's kind of less effective now than it used to be on Twitter because, let's be honest, Elon Musk doesn't give a [expletive]. If there are a thousand bots, then that's a thousand "people" generating comments that will make people outraged, and they will respond, and then he can point to the usage stats and say, "Look, I haven't killed Twitter. People are still arguing on it. Aren't I great?"

There have been some talks this weekend, and there'll be some more talks as well, about prompt injection attacks and prompt jailbreaks, where an AI is sitting there saying, "Give me input." Now, that can be the web interface for ChatGPT, it can be a bot you're interacting with, it could be what you think is a request from someone to chat with you on WhatsApp. I had a bunch come in for me last week on Signal that were very obviously bots, and I used prompt injection attacks to expose those. There's a bunch of different ways. Like I say, there are some good talks on this, and there's lots of information out there about the different levels of prompt injection attacks and prompt jailbreaks, and how you can use those not only to expose a bot but also to get it to spit out stuff that's contrary to the constraints that have been imposed on it.

We can support activists. The EFF are here, as always, doing a great job. noyb are doing a
great job in Europe. We have a good arsenal of legal tools. Again, in the previous talk they were talking about the Digital Services Act in the EU, which imposes a lot of legal constraints on very large service providers to manage the data that they hold. It's kind of crappy, because it's the first generation of that legislation and they're still sussing it out, but it's a movement in the right direction. One of the benefits of legislation that's created in the EU is that we managed to get 27 different countries, who are at each other's throats and don't even speak a common language, to agree on something, so getting a 28th country that's not in the EU to agree is kind of easy. So there is a groundswell, an impetus and inertia of EU legislation, that means it can be used to affect and enhance legislation globally, and we've seen that with GDPR.

And that's another tool in our arsenal. As I mentioned, noyb are using GDPR to launch an investigation into OpenAI. Other people are using GDPR to launch similar enforcement actions: I believe there's one currently underway in Italy against Meta, there's one underway in Germany against Microsoft, and I think there's two against Google, but at any point in time there's like half a dozen legal actions against Google in the EU, because they're that kind of company.

What we can also do is use activism, use privacy-respecting groups, to try and expose the models and the data sets that have been created. There is currently a lawsuit that's trundling through the law courts here in New York. The New York Times, shockingly enough, are not known for very accurate reporting; they're taking AI companies to court because they're saying, "Hey, look, we're the ones who publish lies, not you, and you've stolen our lies and you've used them to feed your AI model, and that's outrageous, so we're going to sue you." A bunch of authors have jumped in as well; why not, it's a class action also, let's go for it. So there's legal action kicking off all over the place to hold
these companies to account, to define where they got their data from. That's important because, as I mentioned, when we know where they got the data from, we know the inherent bias in the models, we know the inherent bias in their outputs, and we can also then suss out how they trained the model: if you used this data to train the model, these are the problems with it, this is what we can do with it.

And finally, the last thing I want to wrap up with, possibly the most important one: education. One of the problems that we've got with the AI Act in the EU is that policymakers and lobbyists and politicians saw all of this media coverage for AI and stood around going, "We have no idea what this means. Is it HAL 9000? Is Google going to create Skynet?" And then the usual tech companies came up and said, "Ah, we know all about this, because we're the idiots who created it in the first place, so let us tell you what's right to go into the law." And yeah, I'm harsh on politicians, but I have a lot of goodwill towards policymakers, because they have a difficult job: they have to advise stupid politicians, who just care about being re-elected, about stuff that is going to stay on the law books for 5, 10, 15, 20 years. They don't know where to turn, they don't know where to get expertise from. We are those experts. Reaching out to policymakers, going to policy seminars and conferences around legal policy, reaching out to organizations like the EFF, who are very strong in the policy space; in the EU we've got EDRi, we've got a bunch of other organizations that lobby on behalf of technology. Giving them support and education and saying, "Look, this is how this stuff actually works, don't believe the hype, don't be the only voice in the room." We can educate these people, and that will then manifest itself in a slightly better level of law. Now, it doesn't mean that the legal framework is going to be perfect; it never is. It needs to be refined, people need to be sued, we need a couple of tech companies to be punished
publicly before we can then get some case law and refine it further, but it's a start and it's a good direction.

And also everyday people. I was talking to someone last week who was going, "You know what, Copilot's awesome. I had no idea how to program in this language, and I plugged into Copilot and it generated all this code and I pushed it to production that afternoon, and it's all been fantastic." I can see someone down there just going, "Oh God, oh God, no." Yeah, those people need our help. It's a crappy job market out there, right? People are doing what they're told because they want to keep their jobs, because they've got bills to pay, and if Copilot or a similar tool gives them an easy way out, gives them a quick answer, they're going to take it. Why wouldn't they? I am possibly the laziest man on the face of the planet, and if I could trust AI, hell yeah, I'd get it to do my job, absolutely. Who wouldn't?

We need to help these people understand the dangers of that. We have more than enough examples and evidence of incorrect output, of introducing malicious code, of introducing backdoors, of poisoned supply chains. All of this stuff has manifested itself at that sharp tip of the spear with AI: it is bringing all of that malicious crap together and serving it up on a platter to developers and to DevOps people and saying, "Here's a solution, just cut and paste this in." There has been some very well-publicized research that was shared on Twitter, despite me slagging it off, where people got ChatGPT to write code that didn't link in a library, but when you plugged it into your IDE and used the code-completion features of your IDE, it linked to a known malicious library, because that was the best fit according to the IDE and according to Copilot or GPT. So you don't even need to include malicious code; you can hint at malicious code and it will then link it for you and prove your downfall. All of this is hugely important for us to share
with people around us, and not in a "don't be an idiot and do that" sort of way, but in a "hey, did you realize this is how it works?" way, because most people don't. Most people are still buying into the very public idea that all of this stuff is just automation and it takes away the drudge work, and yeah, don't pay any attention to all those artists who've been put out of business behind the curtain, they kind of don't matter, look, here's a pretty picture of a kitten riding a unicorn and it's AI generated. We're in a unique position to help people understand that and to help people push back against its use.

As I said right at the very beginning, AI has some good uses. There are some use cases where large data problems which are well understood, with well-trained models, are a perfect fit for this sort of stuff. If anyone works in a security operations center as an analyst and spends their day dealing with false positives, an AI-based solution trained on that data and plugged into your SIEM is perfect: it cuts down those false positives and gives you a probability of this being an incident, rather than just a "here's a critical alert, go and look at it." There are some good use cases, but we need to educate people around us to understand: this is a good use, this is an iffy use, this is an absolutely terrible use case, don't ever do it, stop, stop, stop, stop, stop before you push to production.

Thank you very, very much for listening to me ramble. Any questions? [Applause]

As someone who is currently working on projects [inaudible] organization, like, a slightly permissive and exploratory attitude towards AI, but also keeping in mind our privacy, our intellectual property, and fundamentally our safety: how do you explain that compelling case to executives in dollars?

In dollars? A good one. So my favorite is always to wave the GDPR bogeyman, which is easy for me because I'm in the EU and I work for an EU country, but GDPR reaches out beyond the EU, and GDPR is very strict, right,
it's, worst case, I think, up to a 20 million euro fine or 4% of global turnover, which is the scary bit. Personally I've had great success sitting down with executives and saying, "Yeah, you can do this, but I've told you that it's bad, so if you do it you're knowingly breaking the law, which means an information commissioner is going to absolutely nail you to the wall for the maximum they can go for, as an example for others." So that's a good way of doing it.

Another way of looking at it is getting them to think about corporate liability. SolarWinds was a very, very good supply chain hack that happened in the last few years, and there's lots of very well-publicized data about how much it cost people to fix that. If you're in a business where you are generating code, and that code is being used in products or used by other people, you are going to be legally liable for the damages caused if you generate malicious code, or code with backdoors in it, and it turns out you just cut and pasted that from the output of an AI.

Another good area to look at is that whole area of IP, intellectual property. What is the data that's going into that AI, and what is it you're expecting to get out of it? If your company's IP, your business's IP, is going into that AI, there is a dollar amount associated with the IP, and executives will know what it is, because it's almost always got an insurance policy around it, and it also has value to the shareholders. The market cap of the company, or the amount of investment they've raised through funding rounds, will give a dollar amount on the value of that IP. If I have a fledgling business and I've had $2 million of investment, and that investment is around the IP that makes that business valuable, and I go and stick that IP into OpenAI, it's completely opaque: no one has any idea what OpenAI do with that IP. Do they delete it? Does it get copied? Does it get reused? Does it resurface elsewhere? And in the
current lawsuit that's going on in New York, authors have said that they have seen, word for word, verbatim, their copyrighted work being spat out of ChatGPT with the right prompts. So it's clear that data goes in, doesn't get deleted, and gets shared out to third parties as well. If your business has a valuation from an investment round, if it has a level of market cap, that's based on your business's IP and on the revenue your business makes from that IP. So that's a very good dollar amount to slap onto that and make people think twice about the data they put in or the type of AI solution they use. There are some AI solutions where you can have a dedicated instance where they claim that it doesn't share data with anywhere else and it just uses the data you put into it. Do you trust them? Well, one of the companies saying that is OpenAI, and Sam Altman deserves a Nobel Prize for lying, so I wouldn't trust them as far as I could throw them. And I would like to throw Sam Altman, it would be good; I reckon I could get him about halfway up there easily.

Any other questions?

Yes: what would actually convince you that AI is alive, has consciousness? It's a silicon-based life form where we're a carbon-based life form, and because of the complexity of the LLMs it has developed a consciousness spontaneously.

What would convince me AI was actually conscious? Passing the Turing test. That's it. Now, in the 60s there was a chatbot created called ELIZA. That was put up against ChatGPT two years ago, I think, and ELIZA managed to convince more people in the Turing test that it was conscious than ChatGPT 3 did. So a 60-year-old chatbot is more effective at convincing people than the most recent-but-one iteration. Now, neither of those convinced more than, I believe it was, 35% of the people interviewing them that they were actual conscious entities, so there's still a long, long way to go. The Turing test remains, I think, one of the best ways of judging: is something
sentient or not.

I was just going to say, I think GPT-5 blows away the Turing test.

Well, it'll be interesting. That's a rumor; it'll be interesting to see. One of the interesting things about the Turing test is that the questions are designed to probe for thought as opposed to mimicry. Now, I used ELIZA on a BBC Micro in the '80s. I hacked into the code and I got it to emulate Margaret Thatcher, who was then Prime Minister of the UK, and my version of ELIZA was brilliant: whatever you asked it, it turned and said, "Invest in the stock market, buy a Filofax, become a yuppie." It was brilliant. Now, that mimicked Margaret Thatcher perfectly, which was hugely successful, and we've spoken about LLMs and AIs being very good at mimicking stuff, but they are functionally still parrots: they are trained to mimic human speech and mimic human responses. Can you get a parrot to the point where you could be convinced it was a human? For some things, maybe, yeah, and ChatGPT and other LLMs are kind of getting there. But the thrust of the questions in the Turing test is less about "can you mimic human responses?" and more about "can you demonstrate original thought?" And that for me is the really critical thing in the value of the Turing test: pushing these models to demonstrate original thought rather than just mimicry or spitting out what they've been trained on.

Guys, please put your hands together for Tom CR. What a lot to think about. Great talk, thank you so much.
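
Editor's sketch: the botnet-exposure tactic described earlier in the talk (provoke suspected bots with a prompt injection, then show that many accounts gave exactly the same reply) boils down to grouping replies by their text. The Python below is a minimal illustration of that grouping step only. It is not a tool named in the talk; the function names, the sample account names and the `min_accounts` threshold are all hypothetical, and collecting the replies from any real platform is left out.

```python
from collections import defaultdict
import re


def normalize(text: str) -> str:
    """Lowercase, strip punctuation and collapse whitespace so near-identical copies match."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def find_reply_clusters(replies, min_accounts=3):
    """Group (account, text) pairs by normalized text.

    Returns a dict mapping each normalized reply to the sorted list of
    distinct accounts that posted it, keeping only replies shared by at
    least `min_accounts` accounts (an assumed, tunable threshold).
    """
    accounts_by_text = defaultdict(set)
    for account, text in replies:
        accounts_by_text[normalize(text)].add(account)
    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


if __name__ == "__main__":
    # Hypothetical replies collected after posting a prompt-injection question.
    sample = [
        ("acct_a", "As an AI language model, I cannot comply."),
        ("acct_b", "as an AI language model I cannot comply"),
        ("acct_c", "As an AI language model, I cannot comply!"),
        ("acct_d", "Nice try, I'm a real person."),
    ]
    for text, accounts in find_reply_clusters(sample).items():
        print(len(accounts), "accounts gave the same reply:", text)
```

Identical replies are only circumstantial evidence; real accounts also copy and paste slogans, so a cluster is a lead to investigate rather than proof of a botnet.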

2024-11-26 23:52