Technology for Older Persons with Serious Mental Illnesses (SMI) with Eric Granholm

[MUSIC] I'd like to thank Dr. Jeste for inviting me to come, and talk about technology, really technology interventions for older people with serious mental illness. I'm going to talk about some of my own work in this area, but it's more going to be a tour of different technology approaches to help people with serious mental illnesses. What are serious mental illnesses? These are schizophrenia, schizoaffective disorder, bipolar disorder, major depressive disorder.

Very often this includes psychosis, which are hallucinations, or hearing voices, and delusions, which are firmly held beliefs that you really can't talk people out of despite conflicting evidence, like being watched, or followed. These are very common illnesses. Over 13 million people in the United States have serious mental illnesses.

They are actually a little more common in younger people than older people possibly because there's a higher mortality in these illnesses with people dying from suicide, and other health problems that accompany these illnesses like cardiovascular illness. The lifetime burden of these illnesses is $1.85 million per person, per each of those 13 million people so there's a huge public health burden of these illnesses. Despite all of this, only about 65 percent of people actually receive any treatments for these illnesses. Technologies might help us close that gap, and get access to treatment for more people.

What technologies? We heard about smartphone apps from Dr. Torous earlier today. I'll talk a little bit more about those for serious mental illness, and there's also virtual reality, or what they call serious gaming, I guess, as opposed to fun gaming, but games that are meant to teach, or help. I'm going to talk a little bit about neurofeedback, and augmented reality, and robots. There's all kinds of technology approaches that might be helpful.

Smartphones are probably the most common, and there's lots of ways smartphones might be able to help close this gap. They can increase access to treatments by having people do therapies, and talk to their therapists on their phone, chat, text, FaceTime, use apps to help, and so they can increase access for people in rural areas, and move the clinic to wherever the people are really. They can reduce the burden of therapist time. We have a tremendous shortage of psychiatrists, psychologists in this country, and if apps shorten the length of treatment, maybe by strengthening those treatments so that fewer sessions need to be delivered, apps could increase access that way. Apps can also just help us not forget to take our medicines, and do what we need to be doing, to go out, and exercise, or whatever; apps can prompt a lot of beneficial activities. Then there's all those sensors like Ramesh mentioned, you can pretty much measure a lot of things.

You can do an EKG with your wrist, with your Fitbit, or your Apple Watch. You can mine someone's GPS coordinates to know if people are staying home, and alone all the time, or leaving the house. You can measure heart rate, all kinds of health-related things like steps, and sleep. When you measure these things, and have access to them on the phone, you could do what's called a just-in-time intervention, which is if someone's home all the time, you could prompt them to leave just in time. That's what that means.

Mobile-assisted cognitive behavioral social skills training is an intervention that we developed. As Ramesh said in the introduction, I developed an intervention with John McQuaid here at UC San Diego called cognitive-behavioral social skills training, and it combines cognitive-behavioral therapy, which is basically checking out your thoughts about things that might make you sad, or not do what you need to do, with role-plays like communication skills training, which is social skills training.

We've done a bunch of RCTs. The one I'm going to tell you about here is in older adults, where we tried to strengthen, and shorten the intervention by using an app. CBSST is a long intervention. It's 24-36 weeks, which is a long time to go to therapy, or to these group classes, taught for two hours a week in a clinic, and so we thought maybe if we add in an app, we could cut down on the sessions. The app prompted homework, and told people to do the skills that were trained in the group, and had symptom monitoring features. Then we used as a control group another app which is just monitoring symptoms only, and not doing anything with skills, and no groups to learn the skills, but they stayed on their medications and other treatments. Well, what happened is this blue line here is the full CBSST intervention, which improved functioning well, and then this red line here is the intervention plus the app.

It's about half the therapist's time, but despite that, it still improved functioning better than just your regular treatment, and just monitoring symptoms. However, not quite as good. These two lines don't really differ, but both of them do differ from this one down here.

So it worked, but not quite as well, and I think apps help therapists do the therapy. Maybe they can't work quite as well as all the therapy time, but with a lot less burden, it worked pretty well. Other people are doing these kinds of what are called blended interventions. There actually are a few for older people with serious mental illness.

This is by the Steve Bartels group at Dartmouth, and Fortuna is lead author, and they've been piloting this interesting intervention called PeerTECH, which is peers: other people with serious mental illness who are doing well and know this intervention called Illness Management and Recovery, which is teaching people skills in order to recover, and has a lot of the same things as CBSST, which is checking out thoughts and doing role-plays with them. They added an app to that that prompted them to do the skills, much like we did in our study. It's interesting because there's no therapists, so we have a shortage of psychologists, but we don't need them to run this. These are the patients themselves running the groups.

It worked pretty well in this pilot study, where people engaged with the app, and showed some improvements in their daily life in the intervention. I think there's some promise for serious mental illness for these apps combined with in-person therapies. The phones can also measure all those things I mentioned.

Well, one of the things we started measuring with these phones is pupillary responses, and I'll tell you why in a minute. I know that sounds strange. But, you can measure someone's dilation while they sit in front of a computer, and do certain tests using these cameras that just videotape the eye basically, and digitize it, and turn it into a diameter, and you can measure people's pupil responses. Phones have cameras too, and so you can do the same thing. Edward Wang and his graduate student, Colin Barry, here at UC San Diego have developed an app for that, and we've been testing it. Why would you measure pupillary dilation? Well, it turns out it might be a good digital biomarker that might help us identify risk for dementia like Alzheimer's disease.

How could that work? Well, your pupil actually dilates more, and more the harder you work. If you try to remember more numbers, your pupil gets bigger each number you try to remember. The more effortful processing, or cognitive effort you put in, the bigger your pupil gets.

It's a way to measure how hard someone's trying to do a test. Now, how could that help you with Alzheimer's disease? Well, when you're trying to identify someone who might be having a memory impairment, we give them memory tests. We look at their scores, and see if their scores are changing, or if they're different from normative samples.

But sometimes people can have the same score, but work harder to get that score. The idea is that if you had to work harder to get the same number of numbers correct, for example, then you might be closer to decline in your memory because your memory is starting to fail, and you have to work harder to do the test. We have people remember three digits, six digits, and nine digits.

Nine is like impossible, super hard. We found that people who have amnestic MCI, which is they're starting to have some memory problems but they don't have Alzheimer's disease, are trying harder to get these numbers right. Everyone can remember three digits, but in order to get those three digits right, the people who are at higher risk for Alzheimer's disease are having to work harder. The pupil provides an index of how much effort you put in, and might help us identify early risk for Alzheimer's disease.
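
The reasoning above, same score but more effort, can be sketched in a few lines of Python. This is only an illustration under assumptions: the function names, the record layout, and every number below are made up for the example and are not data or code from the study or from the smartphone app.

```python
from statistics import mean

def task_evoked_dilation(baseline_mm, task_mm):
    """Average pupil diameter during the task minus average diameter at rest."""
    return mean(task_mm) - mean(baseline_mm)

def harder_worker(person_a, person_b):
    """For two people with the SAME score, return the name of the one whose
    pupil dilated more (a stand-in for more effort), or None if tied."""
    if person_a["digits_correct"] != person_b["digits_correct"]:
        raise ValueError("comparison only makes sense when scores match")
    effort_a = task_evoked_dilation(person_a["baseline_mm"], person_a["task_mm"])
    effort_b = task_evoked_dilation(person_b["baseline_mm"], person_b["task_mm"])
    if effort_a == effort_b:
        return None
    return person_a["name"] if effort_a > effort_b else person_b["name"]

# Hypothetical readings in millimeters; both people got all three digits right.
person_a = {"name": "A", "digits_correct": 3,
            "baseline_mm": [4.0, 4.0, 4.0], "task_mm": [4.2, 4.3, 4.1]}
person_b = {"name": "B", "digits_correct": 3,
            "baseline_mm": [4.0, 4.0, 4.0], "task_mm": [4.5, 4.6, 4.4]}

print(harder_worker(person_a, person_b))
```

The point of the sketch is that the test score alone cannot separate these two people; only the dilation measure does.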

The earlier you can identify someone who might end up declining, the earlier you can do interventions, and you might have more effectiveness of those interventions the earlier we try them. With a smartphone, we might be able to have people measure their own pupil dilation at home, or in your primary care doctor's clinic. We've been doing that with people to test out this new smartphone device, and you can have information about how much effort you had to put in to do a memory test.

I'm going to leave smartphones now, and move on to virtual reality. Well, what's that? Usually, you put on those Oculus goggles or you can do it like in this picture here, where this woman is doing a virtual reality training on a computer screen. You're embedded in the picture, and you play a game where you can move your hands around, and move around in this virtual reality space. This is a study out of China with older adults. There's very little work done on older people with serious mental illness. This is another small pilot study where they've been doing cognitive training, where you have to do things like point where a yellow bird will appear, and you have to remember where it was and then you point where it was, practicing memory skills like that.

They showed some improvement in memory during this virtual reality intervention. We did a virtual reality study in people with schizophrenia. This was done with Sohee Park, who's at Vanderbilt University, and her group. They developed this interesting virtual reality social skills training intervention where people explore these environments. In this case here, it's a cafeteria.

They learn how to do communication skills, and engage with people in these artificial environments, so that might make them more ready to try it in the real world. They have to go on these quests, like find out someone's name, and they go into the cafeteria. Here's one at a bus stop where they have to choose. They can choose anyone to approach. The quest here might be to find out when the next bus is coming: to introduce yourself, ask a question, and start and maintain a conversation with these avatars. The platform also measures where the person is looking using those pupillometry devices.

You can tell where someone is looking on the screen. One of the things we teach in social skills training is to make eye contact. Their job is to look at the green face here, and make eye contact with this person, and you could tell whether they're doing it or not.

If they're not, you could say, don't forget to make eye contact. This is an example of how you teach some of these social skills using these virtual platforms. All these lines here just show that whether the test was easy, not so easy, or difficult, people make fewer errors in what they say to people, in learning the specific skills, and in completing the quest.

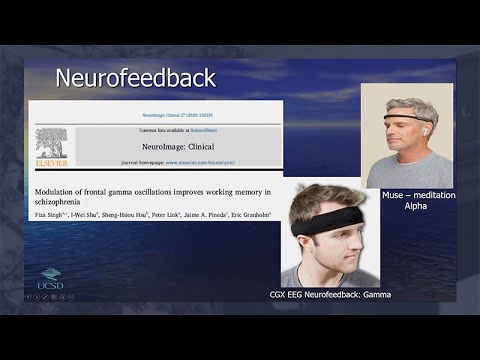

This is how long it took them to fixate on the person's eyes to make good eye contact, and you can see that's going down as they practice on this virtual platform. Another totally different technology now that we've been using in Fiza Singh and I-Wei Shu's lab; they do a lot of EEG, electroencephalogram work. What's happened in the world of EEG is that you used to have to put wet electrodes all over your head. It took a long time. It's yucky. Your hair gets gooey.

Now you could just put on this headband, which has dry electrodes. There's no wires. Or this one, which is very popular. It's called the Muse. It basically measures your brainwaves. It does an EEG. It Bluetooths or, through a wireless connection, sends the information to a computer, or a tablet, or a smartphone.

The Muse is basically designed for meditation or mindfulness work, where it measures whether you're doing alpha, which is calm. When you're calm, your brain goes into this alpha brain wave. You basically can teach yourself to put yourself into alpha because you get feedback from the device about whether you're in it or not. This one, from a local company called Cognionics that we've been using, has more electrodes, and can measure things like frontal gamma, which is a faster waveform.

I'll tell you what neurofeedback is now. Neurofeedback is like biofeedback. This has been around for decades.

Basically, you can learn how to change your biological signal through just practice and reinforcing yourself. If you know whether your heart's beating faster, you can learn to make it beat faster. It's the same for brainwaves.

You measure people's brainwaves, and the computer sorts out what state they're in: is it Alpha or Gamma, at what kind of frequency are neurons firing in your brain? You extract that, and if you want to train someone to increase Alpha, you'd give them feedback when they're in Alpha. If you want to train them to increase Gamma, which is a faster running wave, you give them feedback when they're in Gamma. Well, how do you do that, and how do you make it fun? Well, you have an application like a game. These are snowboarders racing down the hill, and your snowboarder won't move unless you go into Gamma. When you put yourself in Gamma, you can win the race.

It's a weird thing. If you ever sit down and do it, the instruction to the participant is: put yourself in Gamma, make the snowboarder win the race. You don't know what you're doing, but you do learn how to do it [LAUGHTER] by just having feedback as to whether you're doing it or not.
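
One way to picture the feedback loop just described is the rough Python sketch below. It is not the lab's software. It assumes a 256 Hz sampling rate, a 35 to 45 Hz window standing in for "Gamma", a made-up threshold, and pure sine waves standing in for real EEG; the function names are hypothetical.

```python
import cmath
import math

def band_power(samples, sample_rate_hz, low_hz, high_hz):
    """Mean squared DFT magnitude over the frequency bins in [low_hz, high_hz]."""
    n = len(samples)
    bin_width = sample_rate_hz / n
    first_bin = math.ceil(low_hz / bin_width)
    last_bin = math.floor(high_hz / bin_width)
    total = 0.0
    for k in range(first_bin, last_bin + 1):
        # Naive DFT coefficient for bin k (fine for a short one-second window).
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        total += abs(coeff / n) ** 2
    return total / (last_bin - first_bin + 1)

def snowboarder_speed(gamma_power, threshold):
    """Hypothetical feedback rule: the rider moves only while gamma power
    is above the threshold, otherwise it stands still."""
    return 1.0 if gamma_power > threshold else 0.0

def sine(freq_hz, sample_rate_hz, n):
    """Synthetic stand-in for one EEG channel."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]

RATE = 256          # assumed samples per second
THRESHOLD = 0.01    # assumed, would be calibrated per person in practice

gamma_like = sine(40, RATE, RATE)   # one second of 40 Hz activity
alpha_like = sine(10, RATE, RATE)   # one second of 10 Hz activity

print(snowboarder_speed(band_power(gamma_like, RATE, 35, 45), THRESHOLD))
print(snowboarder_speed(band_power(alpha_like, RATE, 35, 45), THRESHOLD))
```

The participant never sees the power value, only whether the snowboarder is moving, which is the feedback that lets them learn the state by trial and error.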

You could train yourself to go faster down the hill over a session, or come in twice a week for a half hour each, and do this in the lab. With the promise of these headbands, we might be able to do it from home. Why do we train Gamma? Well, it turns out these Gamma oscillations, which are fast, 40 times a second, in the frontal lobes, are linked to something called working memory, which essentially is working with memory. You have to remember things in order to manipulate information, and do things like, where am I in my recipe, or remembering a telephone number. It's limited.

Telephone numbers are seven numbers for a reason, seven plus or minus two, because you really can't remember much more than seven things at a time. That's about the average memory limit. We thought, well, if Gamma is linked to memory, what if we teach people to increase Gamma, and maybe their working memory will improve.

This was Fiza's idea, and it's a great one. That's exactly what happened in her projects. She brought people in the lab, they learned to increase their Gamma power by playing these games while hooked up to EEG machines, and Gamma increases over the 12 sessions that they practice. This is busy, there's a lot of bars here, but importantly, the dark bars are before Gamma training, and the light bars are after, and you see increases in a lot of cognitive domains, not just memory, as people learn how to increase their Gamma power. We've also tried this more recently in just a few people with mild cognitive impairment, which, as I said, is that high-risk state before Alzheimer's disease, where people are starting to have some memory problems.

We have some older adults with these problems coming in and doing Gamma training, and neurofeedback, and their memory is improving as they do the training over 12 weeks relative to what's called a sham or a mock, where they sit down, and they think they're playing the game, but there's no accurate feedback about whether they're in Gamma or not, and the snowboarder is just randomly going down the hill at different speeds. What's interesting is that change in Gamma over the treatment is correlated with the change in memory. It might be that the Gamma training is the key component here. I'm going to leave neurofeedback. I told you I was taking you on a quick tour around a bunch of technologies, and there's a lot of them. Sorry to be rushing through each one, but I wanted to give you a taste of each one.

There's a lot of robotics. There's a lot of different kind of robots people are trying to use to help older adults, not so much older adults with serious mental illness yet, but there's a lot of potential with this as well. There's Pearl and Hobbit, and Care-O-bot, and Robocare. This one, iCat, which I think is a little creepy.

But this one has facial expressions to have a more interpersonal communication with you. But they do lots of different things. There's lots of support that they might offer.

Just simple activities of daily living like reaching things or carrying heavy objects, helping people get up, helping people walk around, and there's some cognitive support like arranging appointments, reminding people of appointments, reminding people to take medications. There's some emergency monitoring. These robots can tell if someone falls, and they can contact someone about it. Some of these robots are being used to reduce loneliness by having people interact with robots, or sometimes the robot has a screen that can connect the person with people they know who are not there.

That's just a quick tour of some of the robots. There's actually no work on this in serious mental illness yet. The last one I'm going to tell you about real quick, since I'm just about out of time, is augmented reality.

This mixes virtual and real worlds together. That's what they mean by augmented reality: it's reality, but it's augmented. You might put an animated figure in a screen, or you can put feedback in a screen. There's some pretty fancy things. So someone might be looking at another person, and maybe you have trouble telling whether someone is happy, or sad, or angry because you can't read emotions in faces very well. This has been done in autism, for example.

The eyeglasses in this case, this is Google Glass here, if you've heard of Google Glass. There's a camera here that sees the face of the other person, and can actually categorize facial emotions, and then it feeds back to the person what the emotion of the other person might be. This can get pretty fancy, and do some pretty amazing things. The way that it's been used in older adults, not older adults with SMI yet, is that Google Glass, for example, can give you directions, or cues when to turn, just like your GPS does on your phone. But more than that, it'll provide feedback on whether you're turning smoothly when you make, say, a left turn, and then it can also make shopping suggestions.

When you're looking at the food on the shelf, it can actually categorize the food and say, don't buy that, you should buy this low sodium item. It'll make suggestions while you're looking at the shelf with the glasses on. These are the things people are doing with augmented reality. There are games to improve balance, where you'll actually walk through a room and practice walking through. Really, this is more of a virtual reality game: practice walking through a room so you don't fall when there's stairs, and practice different things like that. That gives you a quick look at augmented reality.

Again, nothing in serious mental illness yet. That's it. I'd like to thank you for listening. [MUSIC]

2021-11-29 20:12