Wood Log Inventory Estimation using Image Processing and Deep Learning Technique

Good afternoon or good evening, and welcome to this presentation, entitled Wood Log Inventory Estimation by Deep Learning. My name is Tomas Vantuch and I will present a few slides about it.

Let's start with the agenda. I will briefly introduce the company I work for, and also myself a bit. Then I will describe the problem itself. That will be followed by a description of the data pipelines we use here. All of them are based on Azure resources, so there will be a lot of applications of Databricks. Then I will focus on the model itself: how it is trained and how it works. Then the results, and some conclusions at the end.

Stora Enso is a leading provider of renewable solutions in packaging, biomaterials, wooden construction and paper globally. We basically focus on wood processing and on pushing towards more efficient and more renewable materials. The title "the renewable materials company" has its reason: everything we produce is based on wood. It can range from wood-constructed buildings to simple packaging solutions for food, or whatever you can imagine.

As I said, my name is Tomas Vantuch. I have worked here for almost two years as a data scientist. I'm responsible for image processing, especially in this case, but I'm also working with time series and with some development around chatbots and web services. Before that I was a senior researcher at the Technical University in Ostrava in the Czech Republic, where my specialization was time series analysis.

So let's start with the project. As you can imagine, because we work with wood we need to process a lot of harvested wood. In several places in the world we have mill yards that are completely covered by wood piles, and we need to keep track of the amount of wood in those piles, so we basically do an inventory of the material.

It can be done in various ways, but our aim is to do it as efficiently and as quickly as possible. For that purpose a drone-based application was developed. A drone flies over the mill and takes pictures of the entire surface and all the objects there. The pictures from the drone are then put together into an aerial map, and this aerial map is delivered to a human expert from the mill. His task is to identify and label all the wood in it. Because we also have a digital elevation model, which means we have the above-ground altitude of each pixel, we can even calculate the final amount of wood in the picture. But the key aspect is that the outline of the wood needs to be identified by a human expert. As you can imagine, this is quite a time-consuming task: the expert needs to place more than 200 different points and several coefficients, and only after that can the amount be calculated.

This was taken as an opportunity, and a machine learning model was created, not to substitute the human effort but to save some time and to leverage this information for a quicker solution.

As you can imagine, those aerial maps are quite big images. They are in TIFF format and on average around 500 megabytes in size, because they contain the three RGB channels and also the digital elevation model. At the first stage they are put together by software on the map processing server, on the left side; that's the place where we start. In this raw form they are transferred into Azure Data Lake, where they are marked as raw data; this is their raw stage. Here we do just some trivial sorting, and we also collect some metadata around those images. We put them together into a database, and after everything is ready their stage is changed to production: they are ready to be used for prediction.

Some of those images are labeled for training purposes. As you can see, the branch in the middle of this diagram means that some of those images are used for model training. It's pretty simple, nothing advanced: the model is trained in the Databricks environment and then stored in a storage account with some additional metadata about the model, basically what kind of model it is, what task it is solving, etc. Those metadata are used later for model selection. We use several different models, and when a request for a prediction task comes from outside, we have to decide what kind of model we are going to load for this prediction. The model is loaded, the specific data for prediction are loaded as well, and the prediction pipeline is then executed. All the predictions are stored in the production stage in the data lake together with the source images, so further investigation by a human expert is possible.
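The metadata-driven model selection described above could look roughly like the following. This is only a minimal sketch: the record fields, the file paths and the "newest model wins" rule are all assumptions made for illustration, not details given in the talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRecord:
    """Metadata stored next to a trained model (all field names are hypothetical)."""
    path: str          # where the weights live in the storage account
    task: str          # what the model predicts, e.g. "objects" or "borders"
    architecture: str  # e.g. "unet-resnet18"
    trained_on: str    # ISO date, so string comparison orders by time


def select_model(registry, task):
    """Return the most recently trained model registered for the given task."""
    candidates = [m for m in registry if m.task == task]
    if not candidates:
        raise LookupError(f"no model registered for task {task!r}")
    return max(candidates, key=lambda m: m.trained_on)


# Hypothetical registry contents, for illustration only.
registry = [
    ModelRecord("models/objects_v1.pt", "objects", "unet-resnet18", "2020-01-10"),
    ModelRecord("models/objects_v2.pt", "objects", "unet-resnet18", "2020-03-02"),
    ModelRecord("models/borders_v1.pt", "borders", "unet-resnet18", "2020-02-15"),
]

chosen = select_model(registry, "objects")
print(chosen.path)  # models/objects_v2.pt
```

Keeping the selection rule in one small function means a prediction request only has to name the task; which stored model answers it is decided from metadata alone.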

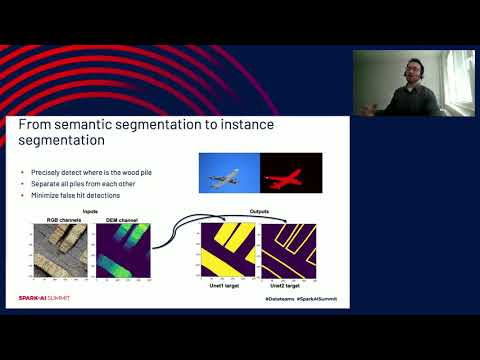

We also collect some metadata about data processing and about prediction, so we can make some improvements over time.

Now the task itself. You can see some images of what we are dealing with, how our mills look from above. The complexity of the task rests on several pillars. We deal with different shapes of wood piles: not all of them are straight, some of them are curved a bit. The color of the wood also varies. Most of those variations are based on the wood type, because birch can have a different color than pine, and so on. The amount of water inside the wood is also a significant player in these color changes, because wet wood, as you can see, is much darker than dry wood, which is much lighter. Differences in lighting and shadows also play an important role in this image processing task. And, as you can imagine, the lack of labeled data is a terrible issue in every machine learning task, and we were facing that as well.

Naturally, it looks like an image segmentation task, or more specifically a semantic segmentation task. But because we need to make it even more precise, and a lot of those wood piles are too close to each other, we also need to provide instance segmentation. What is the difference? Semantic segmentation identifies where the wood is and where it is not: which pixels are related to wood piles and which are the rest, such as roads, other objects, buildings, etc. So by semantic segmentation we can identify all objects that represent wood piles, and by instance segmentation we are able to separate them from each other. That's something we really need, because we need to keep track of the inventory of each wood pile separately.
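A tiny sketch can make the difference concrete. It counts connected groups of wood pixels in a binary semantic mask; the toy masks and the choice of 4-connectivity are assumptions for illustration. Two piles that touch come out as a single object, which is exactly what a semantic mask alone cannot resolve.

```python
from collections import deque


def count_components(mask):
    """Count 4-connected groups of 1-pixels in a binary mask (list of rows)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # New, unvisited wood pixel: flood-fill everything connected to it.
            count += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


# Two piles lying side by side with no gap: the semantic mask is one blob.
touching = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 1],
]
# The same two piles with a one-pixel gap between them.
separated = [
    [1, 1, 1, 0, 1, 1],
    [1, 1, 1, 0, 1, 1],
]

print(count_components(touching))   # 1
print(count_components(separated))  # 2
```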
If some of those wood piles are too close to each other, then semantic segmentation basically treats them as one object. So, as you can see, for this purpose the model utilizes two deep learning networks. The first one predicts the objects, the second one predicts the borders, and by their combination the final outcome is delivered. Both of them use the same inputs, but I will come to that later.

Now about the U-Net model. It is pretty popular, widely used in a lot of applications, widely used in Kaggle competitions, etc. In our case it was implemented in the PyTorch framework with the use of the segmentation models library. I really recommend it; it's well written and easy to use. What is special about the U-Net model? It's pretty similar to any other deep learning autoencoder, with one modification: every layer of the encoding path is connected to the decoding path by an additional concatenation.

Results from those encoding levels are transferred to the decoding path, and after the concatenation the internal information is increased and the outcome is more precise. That's basically how it works. For the encoder we were able to use the ResNet-18 architecture, which means that we just increased the number of convolutional filters.

As I said previously, we use four input channels, the RGB and the digital elevation model, and 256 x 256 is the resolution of the input images. They were taken as small cut-offs from the entire aerial maps, because an entire aerial map had a resolution of around five to six thousand pixels by maybe ten or twelve thousand pixels. So they were pretty big and could not be predicted at once by this model; smaller cut-offs were taken and the map was predicted piece by piece. And, as I said, we utilized two networks, one for objects and one for borders.

Image augmentation was a pretty necessary step here. It is again a pretty common way to increase generalization and the robustness of the model itself. The aim is basically that you alter some features of the image you are using for training, but you do not destroy the information inside it or modify the context itself. As we can see, some basic image processing operations are applied to this image of a cat, but we can still recognize the cat. The same was applied in our case: rotation, flipping, blurring and contrast changes were used for the RGB channels, and some dropout and hue manipulation was used for the digital elevation map. Using different augmentation for different channels worked pretty well for us. It just took some fine-tuning to find the best combination, so as not to make the model too sensitive or the data too complex for training. The motivation is to still keep the model converging.
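The idea of treating channels differently during augmentation can be sketched as follows. This is a simplified illustration, not the pipeline from the talk: the probabilities, the contrast range and the dropout rate are invented, and the sketch assumes that geometric changes (flip, rotation) are applied identically to all four channels and to the label mask so that pixels stay aligned, while the contrast change touches only RGB and the dropout only the elevation channel.

```python
import random


def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def rot90(grid):
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def adjust_contrast(grid, factor):
    """Stretch values around 0.5 and clip them to [0, 1]."""
    return [[min(1.0, max(0.0, 0.5 + (v - 0.5) * factor)) for v in row] for row in grid]


def dropout(grid, rate, rng):
    """Zero out each value independently with probability `rate`."""
    return [[0.0 if rng.random() < rate else v for v in row] for row in grid]


def augment(rgb, elevation, mask, rng):
    """rgb is a list of three 2D grids; elevation and mask are 2D grids."""
    # Geometric changes: identical for every channel and for the label mask.
    if rng.random() < 0.5:
        rgb, elevation, mask = [hflip(c) for c in rgb], hflip(elevation), hflip(mask)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        rgb, elevation, mask = [rot90(c) for c in rgb], rot90(elevation), rot90(mask)
    # Photometric change: RGB channels only (range is an assumed value).
    factor = rng.uniform(0.8, 1.2)
    rgb = [adjust_contrast(c, factor) for c in rgb]
    # Dropout: elevation channel only (rate is an assumed value).
    elevation = dropout(elevation, 0.05, rng)
    return rgb, elevation, mask


rng = random.Random(0)
mask = [[1, 0], [0, 0]]
rgb = [[[1.0, 0.0], [0.0, 0.0]] for _ in range(3)]
elevation = [[3.0, 0.0], [0.0, 0.0]]

aug_rgb, aug_elevation, aug_mask = augment(rgb, elevation, mask, rng)
print(aug_mask)
```

Whatever flip or rotation is drawn, the bright RGB pixel and the labeled mask pixel end up at the same position, which is the property that keeps the training labels valid.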

The overall model workflow has four major procedures. The first one is data pre-processing. We need to normalize the RGB channels of the image, and we also need to normalize the digital elevation model. From the labels we need to produce two masks: one mask of wood objects and one mask of pile borders. Then the first U-Net model is trained on the object masks and produces object predictions. The second U-Net model is trained on the border masks and produces predictions of the borders. Finally, some additional post-processing joins those predictions and, with some simple filtering and processing, combines that information to produce the final outcome.

About the training and testing of the model, to mention a few important things. We utilized 14 different aerial maps for training. Each of them contained more than 100 different wood piles, so they were pretty big. By making use of those smaller cut-offs with 50-pixel overlaps we were able to produce more than 700,000 images. Not all of them were used for training: because of the cross-validation technique we always mixed them randomly and used part of them for training and part of them for testing.

As I said previously, both models have the same architecture: they were U-Net models with a ResNet-18 encoder. As the optimizer I started with Adam, and Adam was used for the majority of epochs. At the end, the last 10 or 15 percent of epochs were optimized by stochastic gradient descent with a very low learning rate. The motivation for that was basically that when the model was not converging anymore, having hit some decent level, I just wanted to squeeze it a little bit more, if that was possible, by this stochasticity. Sometimes it worked, sometimes it didn't, but I didn't mind.
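The overlapping cut-offs mentioned above can be generated with a simple sliding window. The sketch below assumes a regular grid with a stride of tile size minus overlap (256 - 50 = 206 pixels) and shifts the last tile back so it stays inside the map; how the edges were actually handled is not stated in the talk, so that part is an assumption.

```python
def tile_origins(length, tile=256, overlap=50):
    """Start offsets of tiles that cover `length` pixels along one axis."""
    if length < tile:
        raise ValueError("map is smaller than one tile; padding would be needed")
    stride = tile - overlap
    origins = list(range(0, length - tile + 1, stride))
    # Assumed edge handling: shift one extra tile back so the far edge is covered.
    if origins[-1] + tile < length:
        origins.append(length - tile)
    return origins


def tile_boxes(height, width, tile=256, overlap=50):
    """Return (top, left, bottom, right) boxes for every cut-off of the map."""
    return [
        (y, x, y + tile, x + tile)
        for y in tile_origins(height, tile, overlap)
        for x in tile_origins(width, tile, overlap)
    ]


# A map of roughly the size quoted in the talk.
boxes = tile_boxes(6000, 12000)
print(len(boxes), boxes[0], boxes[-1])
```

Prediction then runs box by box, and the overlap gives every pile near a tile edge at least one cut-off in which more of its surroundings are visible.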
For the loss function I used something pretty normal that is used in similar tasks: binary cross-entropy with logits loss. For the batch size I used 35 images. This number was adjusted to be as large as possible, because in these image processing cases a higher batch size actually improves convergence, but if you make it too big you can overflow the memory, so 35 was about right. The decreasing learning rate is totally standard. I decreased the learning rate in an almost linear way; it always depended on how the model was converging.

Something about the hardware setup: 16 CPU cores from Databricks were used for data loading, because every time a smaller image was taken, the normalization and everything else was processed there. So that was done in parallel, and then those batches were trained on a GPU. As the validation metric, the Dice coefficient was taken.

Quite an important step, I think, was the post-processing, because now we have predictions of objects and predictions of borders, so we have to join them together and somehow take the best out of both. The first step of post-processing is to make those predictions themselves, so we obtain the matrix of objects and the matrix of borders. Then we apply some thresholds to them. A threshold is a kind of level of confidence, and it can be derived from how the network is doing on test data. Because object detection was much better, and our motivation is basically to remove or mitigate false hits, the level of confidence for object detection was much higher; it was around 0.5 or 0.55. The object prediction was thresholded at this level, and a binary matrix was the product of that.
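For reference, the loss and the validation metric named above can be written out in a few lines. This is a plain-Python sketch of the standard definitions, not the training code from the talk; the smoothing term `eps` is an assumption added so that two empty masks score 1 instead of dividing by zero.

```python
import math


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy computed directly from raw logits.

    Uses the numerically stable form max(z, 0) - z*t + log(1 + exp(-|z|)).
    """
    losses = [
        max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
        for z, t in zip(logits, targets)
    ]
    return sum(losses) / len(losses)


def dice_coefficient(pred, target, eps=1e-7):
    """Dice = 2 * |A and B| / (|A| + |B|) for two flat binary sequences."""
    intersection = sum(p * t for p, t in zip(pred, target))
    return (2.0 * intersection + eps) / (sum(pred) + sum(target) + eps)


# A logit of 0 means probability 0.5, so the loss is log(2).
print(bce_with_logits([0.0], [1]))

# One of two predicted pixels is correct and the target has one pixel: 2*1/(2+1).
print(dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0]))
```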
From this binary matrix, as a third step, we were able to identify contours, that is, objects. Those objects that were too small in size were omitted as well, because they were considered false hits; a wood pile has to have some minimal size. I think something like 50 or 500 pixels was the threshold to omit those too-small objects.

Then we did a similar thing with the matrix of borders, or mask of borders, but with one difference: the level of confidence was adjusted significantly lower. The reason for that is simple. The accuracy of those border predictions was a little bit lower, and we could also afford some false hits there. So whenever some significant confidence appeared, around 0.25 or 0.3, we took it as a border pixel.

So now we have one binary matrix of objects and one binary matrix of borders. We subtracted those borders from the objects, so the piles that were too close together and had been joined by the object prediction were separated.
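The post-processing steps described so far (threshold the objects, drop small objects, threshold the borders at a lower level, subtract) can be sketched end to end. The thresholds follow the approximate values quoted above; the toy probability maps, the tiny `min_size` used in the demo and the use of 4-connected components in place of contour detection are assumptions for illustration.

```python
def threshold(prob, level):
    """Turn a probability map into a binary mask."""
    return [[1 if p >= level else 0 for p in row] for row in prob]


def components(mask):
    """Return the 4-connected components of a mask as lists of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    found = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            stack, pixels = [(r, c)], []
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            found.append(pixels)
    return found


def remove_small(mask, min_size):
    """Keep only components with at least `min_size` pixels."""
    out = [[0] * len(mask[0]) for _ in mask]
    for pixels in components(mask):
        if len(pixels) >= min_size:
            for y, x in pixels:
                out[y][x] = 1
    return out


def separate_piles(object_prob, border_prob, object_level=0.5, border_level=0.3, min_size=500):
    """Threshold both maps, drop small objects, subtract borders, return the piles."""
    objects = remove_small(threshold(object_prob, object_level), min_size)
    borders = threshold(border_prob, border_level)
    separated = [
        [o & (1 - b) for o, b in zip(obj_row, brd_row)]
        for obj_row, brd_row in zip(objects, borders)
    ]
    return components(separated)


# Toy maps: two touching piles, one isolated false hit, a weak border between the piles.
object_prob = [
    [0.9, 0.9, 0.9, 0.9, 0.9, 0.1, 0.7],
    [0.9, 0.9, 0.9, 0.9, 0.9, 0.1, 0.1],
]
border_prob = [
    [0.0, 0.0, 0.40, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.35, 0.0, 0.0, 0.0, 0.0],
]

piles = separate_piles(object_prob, border_prob, min_size=3)
print(len(piles))  # 2
```

The low border threshold is what makes the split work here: border confidences of 0.35 to 0.40 would be discarded at the object level of 0.5, but they are enough to cut the joined blob in two.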

After this subtraction we were able to identify contours again, and those contours were much more precise, so we no longer had so many piles joined together. For each of them we basically draw the contour, apply some dilation to fix those edges and smooth them a bit, and export those contours into a JSON-based format. At the end we also used some polygon simplification, because some of those edges were not so smooth and they produced too many points for us, so this simplification was necessary. We lost minimal information after the operation.

To give you some numbers: on test samples we were able to reach 97 to 98 percent Dice coefficient based accuracy on object detection, which was pretty good, I think. For border detection we only have around 92 to 94 percent Dice coefficient. It varied based on how the data were mixed in cross-validation, etc. But what is most important for us is how the two predictions, put together, actually predict the final amount. The final amount was calculated separately on the digital elevation model, and we compared it with our previous method, the labeling by a human expert. We obtained results so similar that the deviation from the previous solution was less than 5 percent; in most cases it was around 2 to 3 percent. So this is definitely the way to do it, and a good result for us to proceed even further.

To show you some results visually, here they are. These images were not used for training; they were used only for testing. As you can see, in most cases the piles are identified pretty correctly. On the left image we can see one false hit, and on the right image we can see that probably two piles are not separated well.
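The comparison of the final amount can be illustrated with a small sketch. It assumes the volume of a pile is the sum of above-ground heights inside the pile outline times the ground area of one pixel, optionally scaled by a fill coefficient; the pixel area, the coefficient and the toy numbers below are all hypothetical and are not results from the talk.

```python
def pile_volume(height_above_ground, pile_mask, pixel_area_m2, fill_coefficient=1.0):
    """Sum the heights of masked pixels and convert to cubic metres.

    `fill_coefficient` is a hypothetical factor for the share of solid wood
    in the stacked volume; 1.0 means the raw stacked volume.
    """
    total_height = sum(
        h
        for h_row, m_row in zip(height_above_ground, pile_mask)
        for h, m in zip(h_row, m_row)
        if m
    )
    return total_height * pixel_area_m2 * fill_coefficient


def relative_deviation(estimate, reference):
    """Absolute difference as a fraction of the reference value."""
    return abs(estimate - reference) / reference


# Toy elevation data (metres above ground) and two outlines of the same pile.
heights = [
    [2.0, 2.0, 0.5],
    [2.0, 1.0, 0.0],
]
expert_mask = [
    [1, 1, 1],
    [1, 1, 0],
]
model_mask = [
    [1, 1, 0],
    [1, 1, 0],
]

expert_volume = pile_volume(heights, expert_mask, pixel_area_m2=0.25)
model_volume = pile_volume(heights, model_mask, pixel_area_m2=0.25)
print(expert_volume, model_volume, relative_deviation(model_volume, expert_volume))
```

Pixels near the outline tend to have low heights, so a small disagreement about the border changes the volume less than it changes the pixel count, which is one reason a volume-based comparison can look better than a mask-based one.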
How to fix this issue? There are several ways, but right now we are implementing an interface for the human expert, so he can step into this and fix those few piles. So it will definitely not be a fully automated solution yet, but we have definitely saved the human expert some time already.

So that's it, that's all from me. Thank you for your attention, and I'm ready for questions.

2020-08-11 18:00