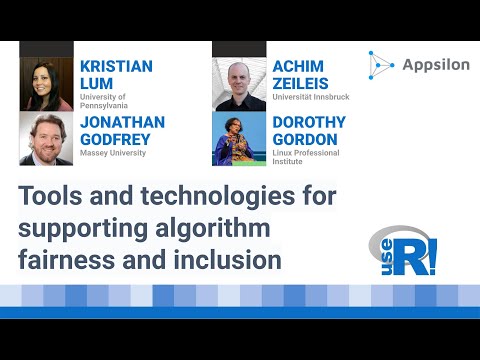

Keynote: Tools and technologies for supporting algorithm fairness and inclusion

Welcome, everyone, to this multi-contribution keynote on tools and technologies for supporting algorithm fairness and inclusion, sponsored by Appsilon. [foreign language] is a Xhosa proverb, which means wisdom is learned or sought from the elders or those ahead in the journey. In this multi-contribution keynote, we will hear from those ahead in the journey: Kristian Lum, Achim Zeileis, Dorothy Gordon and Jonathan Godfrey. Hi, I'm Vebash. I'm the Founder and Co-organizer of RLadies in Johannesburg.

I'm a South African woman of Indian ancestry. I have long black hair, which is a little curly at the moment, and I'm wearing a black jacket and a scarf with animal print on it because it's winter here in Jozi. I'm sitting in our study at home in Johannesburg.

Behind me on the wall are two pieces of art from my kid and one that I made myself with Lego Dots that spells out RLadies, with a little blue heart at the top corner. My love for R runs deep, as you can see, and here I am joined by my friend, Shel. Shel, please introduce yourself.

Hi everyone. My name is Shel Kariuki, a data analyst based in Kenya. My pronouns are she/her. I co-organize NairobiR, I live in Nairobi and I'm also part of the Africa R Leadership Team. It is currently 16 degrees in Nairobi, which to us is very cold. So I'm warmly dressed in a black and gray hoodie. My hair is currently braided and tied up in a bun and I'm wearing my pair of spectacles because I'm short-sighted. I am seated at my study desk, which is located in one of the rooms in my house.

Thank you for joining us. And we hope that you will stay with us till the end. Over to you, Vebash.

Thanks, Shel. Let's begin. Our keynote session, like all spaces in the conference, is governed by this Code of Conduct.

If at any point in the session you'd like to ask a question, please ask it in the Q&A widget at the bottom of the Zoom screen and include the speaker's name if it's directed to a specific speaker in this session. You can also upvote by liking a question. The hashtag we're using on Twitter is #useR2021. Our first speaker is Kristian Lum. Kristian Lum, until recently, was an Assistant Research Professor in the Department of Computer and Information Science at the University of Pennsylvania. She has, since the end of June, started a new position at Twitter as a Senior Staff Applied Machine Learning Researcher.

And if you've never heard Kristian talk before, after her part today you will surely join me in congratulating Twitter on getting her on board. Please join me in welcoming Kristian to the virtual stage.

Wow. So that was probably one of the nicest introductions. Thank you so much.

Don't have much time, so I'll just get right into it and say that today I'm here to speak to you a little bit about what I believe are some of the less attended-to facets of designing models ethically and responsibly. So if you've been following the discussion about algorithmic fairness over the last, let's call it, five or six years, you've undoubtedly come across this article called "Machine Bias" with the subheading, "There's software used across the country to predict future criminals. And it's biased against blacks." This has become one of the major test beds for new algorithmic fairness methods, as well as a data set that is used to make claims about what algorithmic fairness looks like in machine learning and statistics research. Now the crux of this article, put out by ProPublica, was that unfairness in a model, in this case a model that's used to make predictions about whether someone will be rearrested in the future, is embodied by the state where the false positive rate differs between groups, in this case race groups, white and African-American. So they noted that the false positive rate for African-Americans was about twice that of white people. And this is shown in that red square on the figure here.
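The false positive rate comparison described here can be sketched in a few lines. This is a minimal illustration in Python, not ProPublica's analysis: the function name `false_positive_rates`, the group labels "A" and "B", and the toy records are all made up for the example. The false positive rate of a group is the share of people in that group who were not rearrested but were nonetheless predicted to be.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return the false positive rate per group.

    Each record is a tuple (group, predicted_positive, actual_positive).
    FPR = false positives / all actual negatives within the group.
    """
    false_pos = defaultdict(int)   # predicted positive, actually negative
    negatives = defaultdict(int)   # all actual negatives
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}

# Hypothetical toy data: 10 actual negatives and 5 actual positives per group.
records = (
    [("A", True, False)] * 4 + [("A", False, False)] * 6 + [("A", True, True)] * 5
    + [("B", True, False)] * 2 + [("B", False, False)] * 8 + [("B", True, True)] * 5
)

rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate = {rate:.2f}")
```

In this toy data group A has twice the false positive rate of group B, which is the kind of disparity the article reported. Swapping in a different metric from the confusion matrix gives a different group fairness definition.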

And so this is one definition of fairness, one definition of algorithmic fairness that's been going around. And in fact, a generalization of that is just the idea that some predictive performance metric on the model that you're deploying should be the same across different groups. Usually those groups are called sensitive attributes. In that first case, it was race, but you could really pick any performance metric to define fairness in that way. And in fact, if you were to pick your favorite performance metric from the standard confusion matrix, say positive predictive value, or maybe equal accuracy, certainly there's someone in the world who has proposed that a model is fair if that performance metric is equal across the different groups. And over the last several years, there has of course been this proliferation of different mathematical notions of fairness: group fairness, which is what I just discussed, individual fairness, procedural fairness, causal fairness, all different and, I think, very reasonable ways of thinking about whether a model is performing fairly.

However, one facet of this, and I think this is particularly true of the group fairness types of definitions, is that they take a lot of things as fixed. They sort of take away a lot of your degrees of freedom when thinking about how to design a model responsibly or ethically. They start from assuming that the data is fixed, the overall goal is fixed, and those sensitive attributes, like the groups that you're concerned about, are fixed from the start. And then it's only a matter of equalizing some rate or doing something else to arrive at fairness. And so what I want to impress upon you all today is that these sort of mathematical notions of fairness are really just the beginning of the story.

They're not the end of the story, and we really need to expand beyond those sort of mathematical notions of fairness if we want to really be committed to building models responsibly. And so that's what I want to talk to you about a little bit today. And it's difficult to do that in complete generality because part of understanding some of these bigger-picture things really involves getting into the weeds in some particular application. There's not going to be any sort of general-purpose solution that says this is the responsible thing.

It's really a collection of considerations that one might want to make, and those are just necessarily going to be contextually dependent. And so the context in which I've been working for the last several years is looking at models that are used in pre-trial risk assessment. And so, a very quick cartoon version of this, just to set the stage for this discussion today. These are models that are used throughout the United States to make an assessment of how someone should be treated after they've been arrested. So when someone is arrested, in many jurisdictions a model is run that predicts, say, whether they will be rearrested (that's called recidivism) based on input data like criminal history, demographics and interview questions.

So essentially a statistical model that predicts a binary outcome, whether they'll be rearrested. Again, this is a simplified version, but for our purposes today, it should work. Based on those predictions, they'll then make policy recommendations like: this person should just be released with no conditions, perhaps there should be some conditions on their release, or release is not recommended for that person. And this recommendation, or the decision that is ultimately made, has very consequential impacts on that person's life and their trajectory.

Okay. And so again, let's think about taking back some of those degrees of freedom. Let's not think of our data as fixed, but let's think about the ways in which it might not live up to our expectations, or it might undermine the fairness of the model, even if we are able to meet some of the mathematical notions of fairness we set out to achieve.

So since we're at useR!, I'm going to assume we're a lot of data scientists and statisticians. So I'm not going to go into this section in too much depth. But when we use data to build such a model, say a model that predicts whether someone will be rearrested, we have to be concerned about bias. So sampling bias, for example, is when the data is not representative of the desired population. In this case, to the extent that there is a bias in policing, and in particular people of color are policed at a higher rate than others, then the population from which your model is built may not be representative of the population to which your model is applied. And again, even if your model meets a mathematical notion of fairness, this fact will undermine the fairness of that model entirely. You also need to worry about measurement bias. This is when your data systematically over- or under-represents some target quantity, and this is particularly concerning when there's differential measurement bias.

So that's to say one group is more over- or under-represented than the other. Again, this comes back to differences in policing. So to the extent that you believe that there is a racial bias in policing, that gets encoded in the data. And so say you're trying to predict whether someone's rearrested; that's not the same thing as re-offense.

And so the model can't disentangle whether this person is likely to be rearrested, if the model is accurate, because they are the sort of person who lives in an over-policed area, or because they are genuinely more likely to participate in behaviour that would be defined as criminal. Another aspect of bias that I think gets confusing here is societal bias. So the first two are statistical bias, which I think, as statisticians and data scientists, we're all fairly comfortable with.

Then we come to societal bias, which is essentially just objectionable aspects of the real world, especially those reflecting inequality, that get recorded in the data. And they may be recorded accurately, but we might not want our model to learn those facets of the world because we don't want the model to perpetuate those problems. So for example, you might believe that there is truly some differential rate of participation in criminal activity based on some sensitive attribute. However, if you build a model that then recommends different groups for, say, incarceration at different rates, that might not actually lead to a better world because you're sort of perpetuating those patterns into the future. You're incarcerating one group at a higher rate and that might not actually lead to better outcomes or more equal outcomes in the real world. Okay. And so, one thing I just wanted to say really quickly is that often we talk about the foundation or the source of unfairness in models as being bias in the data.

But this is often conflating many different things. We have the world as it should or could be. And not everyone will actually agree on what this looks like, right? That's sort of a normative decision. It's value-laden, it's a moral decision and it's your vision for how the world should be. Then there's retrospective injustice and societal bias, which give us the world as it is.

I don't think anyone thinks the world is perfect. From there, we measure the world and we get statistical error. And so when we talk about bias, we're talking about all these things and it gets really confusing and difficult to disentangle what we're talking about when we're talking about all these things at once.

I'm going to come back to this world as it should or could be because this is, I think, really the crux of thinking through building models responsibly. Finally, there are some other considerations when we think through using the data. So, what sort of information is off limits? There are currently people working on building models to predict re-arrest based off of brain scans, so MRI data. Should someone be forced to participate in that sort of invasive data collection process in order to be evaluated by such a model to be eligible for release? Another thing is how does our measurement encode various values? So, you might want to encode the value of forgiveness. You might think of doing that by including a sunset window on past offenses. So for some of those inputs for criminal history, maybe anything beyond 10 years ago shouldn't be counted.
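The sunset window idea can be sketched as a simple filter on the criminal history input. This is a minimal illustration in Python under stated assumptions: the function names `sunset_cutoff` and `count_recent_offenses`, the 10-year default, and the example dates are hypothetical and are not taken from any deployed risk assessment tool.

```python
from datetime import date

def sunset_cutoff(as_of, window_years):
    """Return the earliest date that still counts, window_years before as_of."""
    try:
        return as_of.replace(year=as_of.year - window_years)
    except ValueError:
        # as_of is Feb 29 and the target year is not a leap year.
        return as_of.replace(year=as_of.year - window_years, day=28)

def count_recent_offenses(offense_dates, as_of, window_years=10):
    """Count only offenses that fall inside the sunset window."""
    cutoff = sunset_cutoff(as_of, window_years)
    return sum(1 for d in offense_dates if d >= cutoff)

# Hypothetical history: one offense falls outside a 10-year window.
history = [date(2005, 3, 1), date(2012, 7, 15), date(2019, 11, 30)]
recent = count_recent_offenses(history, as_of=date(2021, 7, 1))
print(f"offenses counted with a 10-year sunset window: {recent}")
```

The window length is itself a value judgment rather than a statistical one, which is the point being made: the older offense is dropped regardless of whether it would add predictive value.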

Even if it does have predictive value, you might want to encode something like forgiveness in your model. So again, thinking through how you're measuring your data, what sorts of bias exist in your data, how you can correct for that and what other values you want to encode in your data, I think, is a really important part of building models responsibly. Another thing that I think gets a little bit under-examined is the axes of fairness. So far I've talked about race.

In the United States, this is one of the things that has come up as one of the most important and often scrutinized sensitive attributes when we talk about fairness. But of course, it's not the only one. There's gender, there's of course, the intersection of race and gender. So, depending on which of those attributes you pick you might come to very different conclusions about whether your model is fair if you're defining it, for example, as equal predictive performance between those groups. So these are two obvious ones.

They aren't the only ones. There are many others you might consider: sex, disability, nationality, age, religion, et cetera, and intersections of all of these things. Which groups you want to consider and be concerned about the performance of the model with respect to is, again, an ethical and moral decision that needs to be made, and one that is often not especially considered or is taken as fixed for the task. Another thing, scoping out a little bit and thinking more broadly, is the decision space.

So, I talked about three decisions from the outset: release the person, impose some conditions, or release not recommended. Now, if any of the elements of the decision space are, to your understanding, inhumane or just fundamentally objectionable, then even if you are able to, through your model, equally apportion that recommendation across the population or across different protected groups, that might still not be a reasonable solution.

So, if you look at the conditions of pre-trial detention, you can find reports that talk about a culture of violence, where people who are subjected to pre-trial detention are subject to violence not only at the hands of other people who are detained, but also at the hands of prison and jail staff. Again, even if you can equally apportion that treatment, if the treatment is itself unjust or inhumane, is that an ethical thing to do? Another thing to consider, another one of the elements of the decision space, is that there'd be some conditions on the person's release. A common condition is that the person pay money bail, essentially money they have to pay to be released to incentivize their return; at least that's sort of how it's conceptualized.

Now, in practice what this means is that people who have less wealth are unable to go home. And so, you end up with these wealth disparities in terms of who is able to be released and who is not. Again, if you have some component of that decision space that is fundamentally objectionable, this might be a problem no matter how you apportion it across the population. And finally, there's nothing more big-picture than the question of what the overarching goal of building a model is in the first place. Often when we're building a model, we're predicting some limited set of outcomes to help make a decision.

This is the class of models that I'm concerned about in this case. And so, what is the actual goal of doing that, right? This really comes back to what I talked about just a few minutes ago: what is your vision for how the world should or could be? In this case, maybe you believe that the world should or could be pretty much the same as it is now, but it should just run more efficiently. In that case, perhaps the overarching goal of building this model is helping judges make decisions in a better way, more efficiently, sort of helping the existing process move faster. For some people, the existing system is so unjust that it just needs to be reformed entirely. It needs to be rebuilt in some very large and grand way.

In that case you might not think that a model supports that sort of restructuring. However, I'd caution you that these things get really complicated when you start thinking about the politics of these sorts of models. So for example, we'll often see these sorts of models proposed as part of legislation that does something like getting rid of money bail entirely. So, you end up with legislation that does away with some, in my opinion, abhorrent aspect of the standard decision space, but at the same time replaces it with one of these models. So if that sort of legislative change couldn't be made without a model, perhaps one of these models does support that sort of reform.

And finally, you have people who believe that the current system is so unjust and so messed up that there is nothing to do but abolish it. So, to envision how the world should or could be, right? To envision this world where there is nothing that exists that resembles our current criminal justice system at all. And in that case you probably do not believe that one of these sorts of models moves us closer towards that goal.

And so, I'll close now. I know we don't have a ton of time. But I'd just like to say that I think there has been a lot of attention paid to how to achieve these sort of mathematical notions of fairness and much less attention paid to some of these bigger-picture, bigger-scoped sorts of considerations that one makes when deciding to build a model. I hope I've given you sort of a case study of how you can think these through, or examples of things I've been thinking through over the last several years in thinking about models that are used in criminal justice.

In practice there's, I think, no general way to make these decisions. I think it is a lot of normative decision-making that needs to take place. So, thank you to my collaborators on thinking through some of these various considerations that one should account for.

Thank you so much, Kristian, for that insightful presentation. Our next speaker is Achim Zeileis.

Achim is a Professor of Statistics at the Faculty of Economics and Statistics at the University of Innsbruck. Being an R user since version 0.64.0, Achim is a co-author of a variety of CRAN packages such as zoo, colorspace, party, sandwich, and exams. In the R community he is active as an ordinary member of the R Foundation, co-creator of the useR! conference series and Co-editor in Chief of the open-access Journal of Statistical Software. Achim will give a presentation on making the color scheme in data visualization accessible for as many users as possible. Achim, please take it away.

Thank you very much for the introduction and for the invitation to this keynote session. And, yeah, as we just heard, I'll try to give you a quick overview of some tools and strategies for choosing color palettes for data visualization that are more inclusive, specifically to viewers with color vision deficiencies. And of course colors are everywhere in data visualization, and they're not always easy to choose, but sometimes also perceived as being fun to choose because you can put a certain personal touch on a data visualization.

However, when doing so you should keep in mind that the power of color is also limited. So, if you have the opportunity to also encode the information you put into color into some other way into the visualization, it's usually a good idea. And I'll show you an example for that. Of course, that's not always possible not in all kinds of displays.

Then the main topic is being considerate towards viewers with color vision deficiencies, which will affect a relevant share of your viewers. The number I put here is about 8% of the male viewers in Northern Europe. This number varies a little bit with respect to ethnicity, but it will be a relevant share. It's much rarer in female viewers, though. But there may also be other physical limitations on the side of the viewers, or technical limitations, for example with respect to displays or projectors and so on.

And the first example I will show you is a very basic time series display. I'm using one of the built-in datasets in base R with stock price indexes in Europe, and I'm using a time series line plot, and I'm using the default base R palette colors 1, 2, 3, and 5. The display itself is not so important, it's just the typical time series display. And up to version 3 of R you would get a display like this, and probably you all remember these colors very well. This was this very flashy default palette in base R up to version 3.

And specifically, the cyan in this display is too light. It might almost disappear in the background depending on the display, especially on projectors. This was a notorious problem, but even the green might be too flashy. Then there's another problem with this display, in that we have chosen to use a legend here and the ordering in the legend does not correspond to the ordering of the lines anywhere else in the display. So, it's hard to match these.

And this becomes even more relevant if we emulate a certain kind of red-green color vision deficiency called protanopia. If you do that, suddenly the red and black lines look very much alike, and this is what the experience for protanope viewers will be. So, based on these lines it's very hard to match which of them corresponds to the DAX, the German stock index, and which one corresponds to the SMI, the Swiss stock index. And a simple solution to that, one that has nothing to do with colors, is that rather than using a legend up here you can go to direct labels around here. And even if all lines were completely black, you would be able to go backwards in time and find out which line corresponds to which label. But of course, we also want to improve the colors.

So, what base R did was switch the default palette from version 3 to version 4 to this. And at first you might say, this is a really small change. Well, you can see that the hues remained almost the same and the brightness became a little bit more balanced. The cyan is darker now, not bright and flashy as before, but maybe even more importantly for protanope viewers, if you emulate that again, the somewhat lighter label for the SMI series can be distinguished from the DAX series.

The difference is not huge but it might be sufficient in this kind of display. If you want to create an even bigger difference, you might want to use a palette that was specifically designed to be robust under color vision deficiencies, namely the Okabe-Ito palette, which is now also in base R. And again, if we emulate protanope vision here, we can see that all four colors remain clearly distinguishable. So, the good news is that base R finally adopted better default palettes rather than the very flashy colors. They are a bit more balanced now, but of course base R is very late to the party with it. So, there were much earlier packages that did a better job: first probably ColorBrewer, then notably ggplot2, then viridis became very popular over the last years, and a few other packages, some of which are listed here. So, many good alternatives have been available and some of these are now also available in this base R function called palette.colors. And this includes the R4 palette, the Okabe-Ito, some of the ColorBrewer palettes, we have some Tableau colors here, and all of these can be used for choosing qualitative colors for coding different categories in a display.

In addition to that, of course, we need other kinds of palettes for numeric data: sequential and diverging palettes. And before I say something about these, I'll quickly say something about how you can assess the robustness of a palette with respect to color vision deficiencies. There are different packages that can emulate this. One is our colorspace package, which includes the swatchplot function, which has an argument cvd for color vision deficiencies that can be set to TRUE.

And then you get the original palettes that you put in, and you can use any colors you want for that. And then most importantly, you get these deuteranope and protanope emulations, which are the most common forms of red-green colorblindness. Tritanope, blue-yellow deficiencies are much rarer, as are monochromatic viewers, which these desaturated colors would correspond to, but still it's a useful assessment to see the changes in brightness here.

And you can do the same for the Okabe-Ito palette and you can see that the colors are reasonably distinguishable under all of these forms of color vision deficiency. But now we're going to move on to understanding how other palettes can be constructed. And the model I'm using here is this HCL (hue, chroma, luminance) color model that tries to capture the perceptual dimensions of the human visual system.

The hue corresponds to the type of color: red, yellow, green, blue, purple, which we would typically use to construct a qualitative palette. And then the luminance at the bottom goes from light to dark, so these are changes in brightness. And this is what we typically use in a sequential display, and we can accompany it by changes in chroma from gray to something bright and colorful. And the nice thing about this HCL model is that you can control these dimensions independently. And this is in contrast to the more well-known RGB color model, for red, green, blue, that is used to encode colors on the computer but that just does not correspond to the way we think about the colors or talk about the colors.

So keeping these hue, chroma, luminance dimensions in mind, I can now say something about how palettes are typically constructed, and we distinguish the most common forms, qualitative, sequential, and diverging, here. And as I said before, qualitative palettes are typically used for displaying different categories in a data visualization, and a good idea is to keep luminance differences to a certain range. In this display up here I've fixed the luminance completely, which is a good thing if the viewers have full color vision because then you don't create any distortions with respect to the brightness. But this limits the hues that can be distinguished by viewers with color vision deficiencies. So actually, this strategy displayed up here is probably not the best one if you want to be inclusive towards viewers with such deficiencies. The palettes that were shown in palette.colors all have certain limited variations in the luminance, and that is a better idea for this.

Then we can move on to sequential palettes that encode numeric or ordinal information from high to low or vice versa. And the most important bit is that you use a palette that changes from dark to light or vice versa. You can accompany it by changes in chroma and also in hue, but you should use a monotonic luminance scale. And finally, for a diverging palette, we would usually combine two sequential palettes, but with different hues that can be distinguished by all viewers, including those with color vision deficiencies, like the green-brown scale here. And base R now also provides a broad range of palettes created with this HCL model in the hcl.colors function, including some inspired by ColorBrewer, viridis, CARTOColors, and scico, for example, among others.
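The monotonic luminance requirement for sequential palettes can be checked numerically. The following is a minimal sketch in Python, not the colorspace or base R implementation: it uses CIE L* lightness computed from sRGB hex codes as a stand-in for the luminance dimension, and the helper names and the two example palettes (an orange-red ramp and a saturated rainbow) are illustrative assumptions.

```python
def hex_to_lightness(hex_color):
    """Approximate CIE L* lightness (0 = black, 100 = white) of an sRGB hex color."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB gamma encoding to get linear light per channel.
    linear = [c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
              for c in channels]
    # Relative luminance Y, then the CIE L* transform.
    y = 0.2126 * linear[0] + 0.7152 * linear[1] + 0.0722 * linear[2]
    if y > (6 / 29) ** 3:
        return 116 * y ** (1 / 3) - 16
    return (29 / 3) ** 3 * y

def is_monotonic(values):
    """True if the values strictly increase or strictly decrease."""
    pairs = list(zip(values, values[1:]))
    return all(a < b for a, b in pairs) or all(a > b for a, b in pairs)

# Illustrative palettes (hex values assumed for the example).
orange_red = ["#FEF0D9", "#FDCC8A", "#FC8D59", "#E34A33", "#B30000"]
rainbow = ["#FF0000", "#FFFF00", "#00FF00", "#00FFFF", "#0000FF"]

for name, palette in [("orange-red", orange_red), ("rainbow", rainbow)]:
    lightness = [hex_to_lightness(c) for c in palette]
    print(name, [round(l) for l in lightness], "monotonic:", is_monotonic(lightness))
```

A light-to-dark ramp passes the check, while the saturated rainbow jumps up and down in lightness. The same lightness values show what survives when a palette is desaturated.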

Okay. So, let's see some of these palettes in practice in a real-world example. Here we have a risk map about a hurricane that is about to hit the mainland in the U.S., and it's the map that NOAA puts out in the U.S. And it depicts the probability for tropical-storm-force wind speeds.

So, it's the probability that very strong wind will occur, not for the cone of the hurricane occurring. And we start here in the margins with a dark green that corresponds to a probability between 5% and 10%, and then we go over the lighter greens, yellow, orange, red to the dark purple, which corresponds to more than 90% probability. And yeah, because this is used for communicating to the public, this should be accessible. However, if we emulate deuteranopia, one of the red-green color blindnesses, we see that we actually get very similar colors at different ends of the probability spectrum here. So, this is not a good idea.

Another problem, if I go back to the original scale, is that the colors are very flashy. You have a lot of chroma everywhere, so it's not clear where to look first. And you have this non-monotonic change in brightness that we can also see in the desaturated version. And here I've replaced this palette with a ColorBrewer palette going from light orange to dark red, and this works much better. So, we have light colors in the margin with low probabilities and then we go towards the dark colors. And this is easily understandable for everybody, quite intuitive, robust under desaturation and also under color vision deficiencies.

And so, it's a good idea to use something like this in order not to confuse the public, or the U.S. President for that matter. Well, we don't know how confused he was due to the colors or for some other reasons, but it's this map I used here from his justification in the SharpieGate incident that some of you might recall.

Okay, I'll use my last two minutes or so to touch on another topic that often comes up when choosing colors, namely: shouldn't we use or take inspiration from designers, painters and directors who know about color composition and so on? And in order not to rain on anyone's parade, I am not using an R package here, but I'm choosing colors from one of my favorite directors, Pedro Almodóvar. And this is a picture from his movie Todo sobre mi madre and this is a palette I found online suggested based on this picture. And if you desaturate it, you see it might actually work as a sequential color scale, going from dark to bright. However, if we see the full color version, two colors stand out because they have a lot more chroma than the colors surrounding them.

And this is, of course, intended by Almodóvar, the red and the yellow standing out from the coats, the hair, the background and so on. But if we use this in a data visualization, we suddenly get emphasis on this low probability region. And also, this large red stands out in the middle, which is not working that great.

And this is a much smoother picture, the orange-red scale I used before. We can have the same problems for qualitative palettes: another Almodóvar movie, Tacones Lejanos, with some great flashy, early '90s colors that we could extract into a qualitative palette. But again, this wouldn't work very well under color vision deficiencies.

Okay. So, to quickly wrap up, I hope I've shown you that base R also now provides you with some tools for choosing palettes, so you can have good starting points to choose colors. Of course, there are many other nice packages to do so, and you can use, for example, our colorspace package to check the robustness of these palettes. And when you choose colors, be sure to choose an appropriate type of palette, don't reinvent the wheel, check the robustness, and be careful with palettes that have too much chroma everywhere.

And to conclude, I have some references here on the slides and you can reach out to me on Twitter. And now, I'm already over time and I'm stopping. Thank you very much.

Thanks, Achim, for that insightful talk. Our next speaker is Dorothy Gordon. Dorothy Gordon is the Chair of the UNESCO Information For All Programme, among other things.

More notably, and awe-inspiring to me at least, Ms. Gordon has a Wikipedia page, which is notable given the recent analysis by Francesca Tripodi, which found that women's biographies are more likely to be nominated for deletion than men's biographies on Wikipedia. Ms. Gordon will speak to us today about making technology accessible, particularly to women and Africans, and how utilizing tools such as R can help advance public policy. Over to you, Dorothy.

Thank you for the intro. I hope there will be more women on Wikipedia as it is the world's encyclopedia.

Let me take a cue from our presenters. My name is Dorothy Gordon, and I'm speaking to you from Accra, which is in Ghana in West Africa. It is after 9:00 PM at night, and it's quite hot because this is a tropical country. I'm not sure that I've done everything that I should in terms of explaining who I am, but let me just say how happy I am to have been invited to be part of this keynote panel at the very first Global by Design Conference that the R community is hosting.

It's a pleasure for me to join you because it's already such a diverse and inclusive platform and we all know how difficult it is to achieve those kinds of goals. Today, I want to talk to you a bit about your agency and the power of individuals and groups to achieve change. And please don't be upset by my next remarks. For many years, we know that programmers and their close relations, data scientists, enjoyed a reputation as misogynistic nerds given to eating pizza and growing beards. You never saw images of female programmers, let alone black programmers; African programmers, forget all that.

And this kind of stereotyping is still rife, but in the age of artificial intelligence, it has now morphed into that of the evil programmer, referenced in kinder terms by Kristian, that kind of person who has deep-rooted biases and an inability to recognize a skewed dataset and is a regular perpetrator of algorithmic bias. And for some strange reason, I have found it difficult to explain to the audiences that listen to me at the many conferences I participate in on a humanistic approach to artificial intelligence.

I found it difficult to explain to them that most good programmers are simply working to task. They are given a set of parameters and they work within those parameters. And if the instructions that they have been given are not clear in terms of expectations, they cannot be expected to fulfil or take those expectations into account. And that is my first statement that I would like to hear some reactions to.

Can they be expected to take those issues into account? Because we know that most programmers are working to earn a salary. What is the reaction of the people that are in control? And recently, we heard of a very large tech company letting go of a black woman because she raised issues of algorithmic bias. So, we have to ask ourselves, how much agency do individual programmers have when addressing these kinds of issues? So, I do not believe that programmers set out to focus only on biased datasets or design exclusively to reflect the viewpoint of a particular subset of the privileged. I have many friends who come from that privileged group, so I'm not naming the privileged group, but we all know who they are, because they say they feel very targeted. So, how is it that we are able to blame, in a way, programmers and data scientists, and in fact, the tech community, for the kinds of issues that are far more widespread in terms of our digital ecosystems and the problems we have with big tech? Whether we want to talk about surveillance capitalism or data capitalism, we recognize that these new forms of doing business, in line with developments in cognitive neuroscience, do take away agency in the sense that it's far easier to manipulate us these days.

And that the concentration of power and control in these companies that we find both in the east as well as in the west is quite unprecedented. Fortunately, there's a lot of attention being paid to algorithmic bias, and we can see that there are algorithmic auditing companies springing up. And I want to suggest to you that all of this is of even greater importance today because of the accelerated digital transformation that has characterized this era of lockdown.

It means that everybody is now thinking about how tech affects their lives. And this means that programmers and the languages they use are at center stage. And we all would, I won't say we would all, because that's not true, but in line with the values that we are supposed to espouse and the Universal Declaration of Human Rights, as well as UNESCO's R.O.A.M. principles, openness, access, and multi-stakeholder, we would like our digital ecosystem to move in a more inclusive and accessible direction. And when I think of the R community, I think of it as distinct and special. Vebash did not mention the fact that I am on the Board of the Linux Professional Institute, something which I enjoy doing, but it really gives me an insight into the value of open.

And R is an open language, exceptional in that way. A language which has got a vibrant community that encourages multidisciplinarity in the sense that within that R community, we have people who come from many different disciplines that allow us to have subject insights into the kind of statistics that we are processing.

And the fact that you can present your information in different formats, and that even those who have a deep-seated fear of data can understand and appreciate the data story, is really extremely important. And I just want to talk to you a little bit more about that power of community.

I think I have a few moments left, and first of all, let me say that for most of my career, I've worked at the level of policy and I've also worked within government as well as in the not-for-profit sector. And I found that people who don't work with policy and government often don't have an idea about how decisions are made with regard to policy, and how very often the people who are targeted by a policy have no clue as to the direction of thinking there. And this is important because we have now moved to, or we are supposed to have moved to, a situation of evidence-based policy. So, you can see why the R community is so important, because the R community gives us the opportunity to have clear evidence and to present it in a way that will make high-level policymakers, who perhaps were not very good at statistics and maths when they went to school, understand it. But even more importantly, to make it available in such a way that communities can actually understand it through the data visualization, and that we can have a more inclusive process in actually developing policy.

And let me just say, because in my youth I used to teach policy: policy is an art, it's not a science. I used to make my students laugh because I would tell them that what happens in parliament may just be a [Inaudible] [0:46:33] to who got irritated by who the night before and came to parliament in a very bad mood and determined to make sure nothing went through. So, public policy is not a science, but today science can definitely help us to make better policy. And the R community can do that in multiple ways. And because time is limited, just let me mention a few. First of all, you can, as a community, monitor how data is being used, and you can flag when data is being misused, you can flag when the wrong kind of datasets, as Kristian explained to us, are being used to create a certain analysis or assumptions.

And then, as Achim just mentioned to us, you can also flag when design is not inclusive enough. But I think that, looking at the issues, sometimes it's far simpler, because we see that, for example, in Africa, when we are talking about adolescent girls in Africa, there's a huge proportion of them that are not in school. And very many people used to assume that this was a cultural issue, that parents wanted their daughters to get married, they didn't want them to have an education, but actually, when you do the research, the data is very clear that it's more linked to poverty and an inability to afford those necessities that surround going to school. And so, parents are keen for their girls to go to school. Let me put it this way: I think that parents in Africa love their children the same way everybody loves their children, and would like to give them equal opportunity if they could.

So, simple things like this can make a huge kind of difference. And I see a few heads shaking, so let me round up quickly and say that we signed on as a global community in 2015. And with the implementation in January 2016, we signed on to the Sustainable Development Goals and we aim to achieve those by 2030. I think that I would like to end by urging the R community to really get involved in providing support to the implementation of the SDGs.

There are already several open source communities. For example, there's an open source community around climate change. And so, let me urge all of you to get involved, and I'm looking forward to having lots of questions from the floor. Thank you very much.

Thank you so much, Dorothy, for the informative presentation. Our last speaker for the session is Jonathan Godfrey. Jonathan is a lecturer in statistics at Massey University in New Zealand. His research is focused on the needs of the thousands of blind people around the world who need additional tools to make the visual elements of statistical thinking and practice less of a barrier. He is the Official Disability Advisor of Forwards.

He will discuss how to choose the right tools that make collaboration possible and fruitful so that people from all walks of life can see themselves as part of the community. Over to you, Jonathan.

Jonathan Godfrey: Good morning. It's a nice wintery morning here in New Zealand. And I'm aware that I can't see everyone else, but at the moment I suspect you can't see me either.

So, I think someone at the official end needs to switch the video on. There we go. So, I was thrilled to get this invitation, and the first word I saw in the discussion that we were having was responsible or responsibility.

And I think the first thing that came into my head was, well, what makes the responsibility in the context of this discussion any different to the responsibilities we have in any other walks of life? Perhaps that's because I spend a lot of time being an advocate for disabled people in my country, especially for those people who happen to be blind. In particular, I'm spending a lot of energy discussing with government agencies how to get disabled people into their data. And that means getting us into their thinking, and hopefully, then, helping close the equity gaps between disabled people and non-disabled people. The biggest problem we face is that of identifiability. Most organizations don't know who in society is actually disabled or not. And so, that means the algorithms do not identify us and therefore they can't accommodate us.

So, my work isn't just pure altruism. As a disabled person myself, I do stand to benefit from those efforts that I make on behalf of other disabled people. And that's probably where my story with R in my research is also centered. Most of my contributions started with a need to solve problems that I was having as an individual and being able to share those solutions with people overseas. And I think that's true for many people who are developing or using R.

We develop an idea for our personal need, or perhaps for our employer, and we share that work with others around us. We might then go on to share that work in a much wider context, improving our efforts as we respond to the efforts and requests of other people around us. And in the context of my work, I guess, the biggest question or the most frequently asked question is, what can I do to help blind people? And the problem I have is that there isn't a single magic solution that I can offer everyone.

It's a conversation I have to get into that says, well, where are you at, what are you doing and who are you working with? I can give assurances that we can make classrooms more amenable to blind students and other disabled people. We can definitely make our online presence more user-friendly and we can always make our communities more inclusive, and I won't get time to give you every possible solution. So, I'm going to talk about a couple or three. Disabled people, just like any other minority, need to see themselves welcomed, represented and valued. It doesn't matter if we're talking about an analysis of a social issue as per Kristian’s talk, or some authority's work, or organizing a conference, or documenting our lovely new R package. So, the first example I want to draw your attention to is that one way to include people is to make them feel welcome by the language we use.

And that language very definitely does matter to disabled people. But the problem we have is that the language we prefer is not consistent around the world. That's because different societies of disabled people are very definitely at different stages in their progression out of the dark ages for disability into a more modern 21st century approach. So, in my country and in many countries, I'm not a person with a disability, I'm a disabled person.

I wasn't born with a disability and I certainly didn't spend any energy going looking for one. I was born with a medical condition. It's got a lovely flash name that has really no useful bearing on anything, but the main symptom that was observable 40 or 50 years ago.

That medical condition has led to me not having any useful vision in terms of print, screens, and actually most of my environment. That is an impairment. It doesn't actually, though, make me disabled. So, it's the exclusive practices of the environment I live in that are the things that disable me. To be disabled means you've been excluded in some way, but when I'm included, I'm no longer disabled. The public transport system in many cities is utterly disabling because I can't tell which bus is the one I want.

The tools used in some collaborative settings are disabling to me. The use and the selection of the tools of choice are what stifle my ability to collaborate with others. The printed books in the library, well, they don't disable me, but the reliance on using those printed books is what disables me. So, the academic journals that present their work using accessible HTML, they include me. And that's true for most HTML documents. And that means practically all of your work that is written in R Markdown has a chance of including me in your audience.

But the authors of those markdown documents don't know that they actively did something that included me. Also frequently, and history tells us far too frequently, there's a lot of documentation that goes with software that is presented in a non-accessible form, and most of the time the people who created that documentation were not actually aware that they disabled me. They did make a choice, but perhaps they didn't know the choice they were making and how it affected me. In far too many contexts, users aren't given an ability to make a choice.

We are often at the mercy of the default settings. I can go back, and it takes me back 20 years, to cover the example that I'm going to show you about inclusion versus exclusion. And it has actually, in the end, been one of the most important things in my working life. So, 20 odd years ago, when I was a graduate student doing a PhD, there was no way for me to type mathematical expressions and be able to convince myself that my readers were going to understand what I wanted them to understand. The equation editing tools in the big software companies of the day were utterly useless to me.

I couldn't write it and I certainly couldn't read it. The only option that I had available 20 odd years ago was to write mathematics using LaTeX. That gave me control over what I was putting into my documents, but it didn't give me control and understanding of what anyone was getting out of my documents. So, I couldn't actually check my work, leaving me totally reliant on proofreaders, who had to be at least as skilled as I was in whatever I was producing. Today, it's a very different world in which I operate. I'm still typing my mathematical expressions using LaTeX notation, but because it's in a markdown context almost all of the time, I end up getting HTML with MathJax embedded mathematical expressions that I can read on my own and I'm happy.

I've got independence today that I did not have 20 years ago. And software has actually always been a problem for blind people. Many interfaces are totally designed with the mouse user in mind. Sometimes, in the worst cases, there is no plan B on offer: use a mouse or walk away. 15 years ago, R was an equalizer.

Everyone in the R world was working the same way that I was because none of us used the mouse. In recent years, RStudio came along and I'm saddened to say that RStudio has always been a disabling piece of software for me as a blind person. What I'm really pleased about is that the team at RStudio has put resources into the commitment to making RStudio a more accessible platform for blind people to use. It's still early days, but I really do believe that sometime soon, blind people will be using RStudio alongside their classmates and alongside their colleagues.

I think one of my major messages for people will be that, if you are creating a document like a package vignette that is spitting out a PDF document, it is as useless to me today as it was 20 years ago. The process of making a vignette in PDF has had no tangible improvements for my ability to engage with that work. It theoretically is possible for you to have produced from LaTeX an HTML document that I could have read, but practically, no one knows how to do it. It's not easy. So we all take the option that is on the table in front of us: LaTeX goes to PDF. We now have a choice.

We can go from R Markdown to HTML and you can have your PDF too, if you want it. I've got a selection of key mottos that I want to leave you with. A very important motto in disability circles is “Nothing About Us Without Us.” That means get disabled people into your work teams, into your thinking, into your algorithms and everything you do. Make doing the right things easy to do, and the wrong things harder.

That's a challenge, I think. All too often, it's too easy to make an inaccessible document because it's easy and making an accessible version is harder. That is not true for R Markdown.

It's harder really to make a decent PDF out of R Markdown. Good is often going to be labeled as not good enough, but one of the major problems that we have for disabled people is that, all too often, perfection is the enemy of good. And so, we get nothing. I think we need to accept that things are not yet right, and that they need to be improved, and then make a commitment to making those improvements. R Markdown wasn't perfect at first.

And so, of the numerous improvements that have been built into R Markdown, mostly under the hood, away from the end users, only a few of them ever came because blind people asked for them. There are two most important things in R Markdown documents that make life much easier for me. One is that the web standards are built in. As users, we don't know that, but web standards are built into what gets put into your HTML document from your R Markdown. And MathJax, putting those mathematical expressions in readable form, is the other one.

My employment today and the employment prospects of blind people around the world have been dramatically improved because of R Markdown through the HTML. That means we can succeed in your classrooms. We can succeed in your workplaces.

We can succeed as your collaborators. That means, we can be included. What that actually means is that we will be less disabled. To answer the question of one of the most important tools for responsible actions in everything we do, I'm going to revert to a proverb from my country.

I'll give you the English version because we haven't got time for the full translation. But the question is, what is it, that most important thing? In the language of Māori, it is he tangata, he tangata, he tangata. If you haven't worked it out, the answer is, it is people, it is people, it is people.

Thank you so much, Jonathan. And we are grateful to all the presenters for their presentations. We will now quickly move to the Q&A session. And the first question is a general one directed to all of the presenters. What is one question or consideration that is underrated in your opinion, but could move the dialog forward on inclusion and accessibility? Maybe we can start with Achim.

Yes. Thanks. I'm sorry, I'm still very impressed with Jonathan's talk and I have to say, thank you for this. This is really very, very useful and very important for me as a non-impaired person to hear and understand. So, thank you for this. And also, my answer to this question relates to something that Jonathan said, that perfect is sometimes the enemy of the good. When we have to tackle such difficult questions, it's often that they're seen as one big compound, and then they're very hard to tackle or address, and many, many people feel that they couldn't do this alone.

So, what I usually do with difficult problems, I try to decompose them into parts, and then, I try to do two things, bottom up and the top down thing. So, bottom up is to do simple things first where it's easy to do something and this also ties in with what Jonathan said. So, where it's already easy to do the right thing, try to foster that, and then maybe take one or two important points top-down where it's hard to do the right thing and try to make it easier.

So, decompose the problem and try to do some of the easiest things and a few of the hard things as well. That would be my answer to the question.

Okay. Thank you, Achim. Maybe Dorothy, what's your opinion on the question? Sorry, you're on mute.

Sorry. Yeah, I think that you were asking something that's been underrated, but could make a big difference? Yes. Is that what you were asking? Yes. But I can repeat the question. You are muted. -Oh. -Yeah.

-No, I'm not muted. -I can't hear you. Am I audible? Yes. Now you are. Okay. Let me just repeat the question for you. What is one question or consideration that is underrated in your opinion, but could help move the dialog for inclusion and accessibility? I think it’s really very simple and it’s not simple.

I started my career working in rural areas. And we often were asked to go in to provide solutions that were of no relevance to those people. And so, just as Jonathan said, and this is also the theme that was at the heart of the declaration that came out after the year on indigenous languages, the use of indigenous languages, it is "Nothing For Us Without Us". So, I saw in the chat somebody was asking how we can make it more inclusive if we are designing a model for somebody. And that targets, and we don't like to use target anymore when we're doing development issues. We don't like to use the word beneficiary anymore, but if you say that you're going to work with a particular community, the obvious thing is to consult that community and to recognize that that community is not a homogenous group, that there will be different perspectives within that community, and you have to figure out how you are going to be able to access those different perspectives and build them into your model with the right kind of weighting, et cetera.

And so, this is why I feel that there is so much power within the R community, because you have the ability to learn from each other, the peer learning that is necessary to figure out how to do that effectively. And each time you go into a different community or different group, you know, a different interest group, you will have to fine-tune, you will have to adapt your approach to suit that group. So it's something that's been said ad infinitum, but it is something that bears repeating, and for people to understand that it is not easy; there isn't one model in terms of how to do this.

In a lot of ways it will be learning by doing; it's a lot of feeling for people, of listening and understanding.

Okay. Okay. Thank you so much, Dorothy. Maybe Jonathan, what's your view on that question?

Well, I think it's people in the end. If you look around your data analysis and you think that the right people need to be represented in your data analysis, maybe we need to look around our classrooms and our workplaces as well. If the right people aren't in the room to give you the diversity of opinion, then you better start thinking about where to look outside the room.

It’s people.

Okay. Thank you. Kristian?

Now I'll piggyback on something both Jonathan and Dorothy just said, and, you know, wholeheartedly agree that when it comes to inclusivity, it is absolutely important who's in the room. And this has been something that, for the types of models I was talking about during my talk that are used in criminal justice, has really gotten a lot of attention. So prior to the last several years, I think there was less of a push to have people who are justice involved actually in the room, making decisions, giving opinions on, you know, even mundane things about how data should be processed, contextualizing the data, up to whether these models should be used at all. I've seen over the last couple of years a push to have impacted communities involved in this process.

The one thing that I think just to sort of very directly answer your question that could make that a much more successful endeavor is to make sure that they are compensated with money. You know, often I end up, you know, through my job, in the rooms where these sorts of decisions are being made. And although I'm not exactly being paid to be there, you know, when I was a professor before, I could, you know, sort of decide how I spend my time and part of how I spent my time was interacting in these policy types of roles. And so, I was compensated for my time in some way. However, the people who they're asking to participate in these panels from the community really aren't.

They are people who have jobs where this sort of activity isn't included as part of their job. So, you're asking them to take time away from their families. You're asking them to read up on materials on the statistical modeling, right, in their spare time, so that they might have some small part of their voice heard about how models are going to be impacting them directly. And you're also asking them to maybe take time away from work, maybe pay for childcare, do all of these sorts of things to participate.

And that's not right. And so, just again, to very directly answer the question, I think, to increase inclusivity, we need to make sure that everybody who needs to be in that room is fairly compensated for their time and effort to be in that room.

Okay. Thank you so much for the response.

Back over to you, Vebash.

Thanks everyone. That was very well answered, that question, from everyone. So, Achim, for you, I know you did answer this already on the chat, but one of the questions was: could you recommend a way to find good alignment and compatibility between the background, the plot layout color and then the content colors, like the lines and the points and the ticks? And do you have any advice or resources for creating a divergent palette with greater than seven categories?

Yes. Thanks. So the short answer is always that there's no one-size-fits-all approach for any of this, but the usual advice for where you should start from is, if you have a dark or black background, for example, the neutral color should be close to that background. So, if you think about the risk map example from my talk, I would start at the low probabilities with the dark color and then go to a bright and very colorful color for the high-risk regions. And if you have a light background, you will do it conversely.

You start at the light color and go to a dark and colorful color. But there are also exceptions, like the risk map I've shown where the background was neither light nor dark, but there was this map with the ocean and the land. So, this doesn't always work, but it's a good starting point usually. Pick a neutral color that is close to the background color.

And for the divergent maps with more than seven categories, if you're really concerned about matching the individual categories and not just getting the impression of how things increase, then you would probably have to change the hue along with the divergence, so go from yellow towards the red on one side and towards the blue on the other side, for example. And there are some examples for this, both in the hcl.colors function in base R and, in our colorspace package, the divergingx_hcl function.

Thanks for that, Achim. Shel, would you like to ask the next question?

Yes. Sorry. The next question goes to Kristian. How can model development be done more inclusively? Sorry, you're on mute.

Sorry. No, I had the unmute button covered. So, I think I kind of actually just answered, I think I may have jumped the gun a little bit and answered that question a little bit already. So, I'll repeat some of the things I just said with less of an emphasis on the pay, though I still think that's really important. You know, I think it's about making sure that people who are going to be directly impacted by the model, making sure their voices are heard.

And I think often this tends to happen at the end of the process, after all these sorts of decisions have been made, including what the goal is of even introducing a model. What type of model is going to be built? You know, what data is going to be used? And then sort of at the end, it's almost like, okay, let's, you know, get some feedback. What do you think? Sort of knowing what we know about how models are developed, what that pipeline looks like, I think people who have the most experiential knowledge about the data need to be involved from the get-go, in formulating that ultimate goal, in deciding whether a predictive model or any other sort of model is the best way to move towards that goal and, if you decide that the model is going to be used towards that goal, in contextualizing the data.

I cannot say how important it is to have people who actually understand what's going on in the data there to help you understand what it means, right. Data often, when you get it as sort of a statistician or maybe data scientist, you know, it can look like raw data. It can look like something that's not extremely intelligible. It can be a little bit divorced from sort of like the humanity of the person who's being measured, right? And sort of, having someone there who can contextualize what, say, a measurement might mean, or like what a prior arrest might mean or what the context of that might mean and how you ought to handle that in a way that'

2021-08-08 21:22