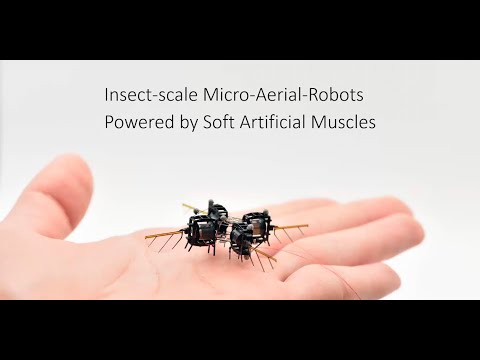

Insect-scale aerial robots driven by soft artificial muscles - Prof. Dr. Kevin Chen (MIT)

Let me get started. Thank you so much, Deniz and Robert, for having me today. What I'm going to talk about is insect-scale micro-aerial robots powered by soft artificial muscles. If you look at my title, there are two keywords: micro and soft.

I want to spend the first five minutes or so explaining what microrobots are in this context. Then I want to spend the rest of my time explaining a new class of microrobots that are driven by soft artificial muscles. First, when we think about robots, I think most people nowadays will think about large-scale robots such as legged robots, humanoid robots, or trains or airplanes that span the scale of meters to hundreds of meters. On the other end of the spectrum, you can think about using MEMS technology to make very tiny robots.

Those are on the scale of microns or tens of microns. What I want to focus on today is insect-scale robots: specifically, they range from 1 to 5 centimeters in size and weigh from 10 milligrams to 10 grams. As a quick example, I was a grad student about ten years ago, and I worked on a project called RoboBee. Back then the idea was to create a robot that has similar size and functions as honeybees in nature.

So very quickly, let's address several questions together. The first question is: why do we study microrobotics? To me there are two answers. The first is that by leveraging interesting physics at the centimeter scale, we can enable new functions. In this case, by leveraging flow similarity, we can teach the robot to use the same set of wings and actuators to both fly and swim. More than that, I can turn this around: I can use microscale robots as a platform to investigate interesting physics at the centimeter scale.

By looking at the flow field that's generated by a flapping-wing robot, I can infer the lift and drag generation mechanisms of a flying insect. Second question: what makes microrobots unique? The short answer is that at this scale, inertia becomes less important and surface effects become a lot more important. So new properties arise. For example, you can drop a microrobot from very high above, and it sees only small changes to its performance. More than that, my colleague has shown that you can teach a microrobot to jump off the surface of water, something that's very challenging, if not impossible, for a larger-scale robot.

Okay, so last question: what are potential applications of microrobots? Well, in the short term I can think about a lot of them. How about putting a tiny camera on board this legged robot and putting the robot inside a turbine engine? What I'm showing you is a cross-section of a turbine engine.

We could then teach this robot to climb inside the turbine engine to look for cracks. In the longer term, our vision is to use a swarm of ubiquitous and multi-functional microrobots that can operate in a realistic environment. Okay, so this picture is still fairly far-fetched. What we are trying to say is that we want to use a large number of microrobots in search and rescue missions. And of course, there are a lot of challenges that we need to solve.

So toward the end of this talk, I want to revisit this picture and identify some of the key challenges and gaps that we still have to close to achieve this longer-term mission. At MIT, our research has two themes. I want to spend a rather short amount of time going through the first theme and then focus on the second theme today. But first, let me say that our first research theme is that insect-scale robots can do multiple things in complex environments through leveraging unique physics at the centimeter scale.

And I want to show you two case studies: one on a hybrid aerial-aquatic microrobot, and the other on a hybrid terrestrial-aquatic microrobot. If you want to build something that's on the scale of an insect and that can fly, you probably want to look into nature for inspiration.

It turns out that we can approximate the flapping-wing motion of an insect with a two-degree-of-freedom motion. In most flapping-wing robot designs today, the wing stroke motion is actively controlled by an actuator, whereas the wing pitch rotation is passively mediated by a compliant flexure and the aerodynamic forces. In our lab, we have set up an experimental system that allows us to capture hundreds or thousands of those flapping-wing videos so that we can then extract the flapping-wing kinematics. In fact, not only can we extract the flapping-wing kinematics, we can also analyze the underlying fluid flow. This is a video showing what we call an at-scale particle image velocimetry experiment.

Essentially what we do is put oil particles into the enclosed chamber, illuminate the particles with a laser sheet, and then compute the cross-correlation between images to measure the velocity field. On the right, you can now see the growth and shedding of leading-edge vortices, which gives you intuition on the lift and drag generation. In addition to having experimental tools, we also have computational tools that allow us to compute the pressure profile and iso-vorticity profile on the wing surface. So now we have a suite of experimental and computational tools. Why are they useful from a robotics perspective? Here, we start by asking a question in physics. Specifically, we ask: is there a similarity between flapping-wing locomotion in air and flapping-wing locomotion in water?
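
To make the cross-correlation step of the particle image velocimetry description above concrete, here is a minimal sketch in Python. It is not the lab's processing pipeline: the synthetic frames, the function names, the periodic wrap-around, the integer-pixel search, and the calibration constants are all assumptions made for illustration. The idea is only that the displacement of a particle pattern between two frames is the shift that maximizes their cross-correlation, and that dividing this shift by the time between frames gives a velocity.

```python
import random

def make_particle_image(size, n_particles, seed):
    """Synthetic frame: bright single-pixel 'particles' on a dark background."""
    rng = random.Random(seed)
    img = [[0.0] * size for _ in range(size)]
    for _ in range(n_particles):
        img[rng.randrange(size)][rng.randrange(size)] = 1.0
    return img

def shift_image(img, dy, dx):
    """Move every particle by (dy, dx) pixels with periodic wrap-around."""
    size = len(img)
    out = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            out[(y + dy) % size][(x + dx) % size] = img[y][x]
    return out

def cross_correlation_peak(frame_a, frame_b, max_shift):
    """Return the integer (dy, dx) that maximizes the circular cross-correlation."""
    size = len(frame_a)
    best, best_score = (0, 0), float("-inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score = 0.0
            for y in range(size):
                for x in range(size):
                    score += frame_a[y][x] * frame_b[(y + dy) % size][(x + dx) % size]
            if score > best_score:
                best, best_score = (dy, dx), score
    return best

frame_a = make_particle_image(size=32, n_particles=40, seed=1)
frame_b = shift_image(frame_a, dy=2, dx=-3)  # known "flow" between the two frames
displacement = cross_correlation_peak(frame_a, frame_b, max_shift=4)

# Hypothetical calibration values, for illustration only.
PIXEL_SIZE_M = 1e-4       # meters per pixel
FRAME_INTERVAL_S = 1e-3   # seconds between the two frames
velocity = tuple(d * PIXEL_SIZE_M / FRAME_INTERVAL_S for d in displacement)
```

A real PIV analysis would repeat this over many small interrogation windows to build a full velocity field, and would usually refine the peak to sub-pixel accuracy; both are left out here to keep the sketch short.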

And if there is similarity, what additional functions can we achieve? In this case, we came up with a project in which we want the same robot to be able to fly, to swim, and also to make transitions from air to water and from water back into air. Without going into all the technical details, let me show you the robot design. Compared to the old robot, this new robot has new wings for added lift force.

It has four-point outriggers for maintaining stability on the water surface, and most interestingly, it has a gas collection chamber for performing the water-to-air transition. Let me show you how everything works. First we start the robot at the bottom of the tank, and the robot flaps its wings at a low frequency, in this case about ten hertz or so, so it can swim up to the air-water interface. Once it reaches the air-water interface, we turn off the wings and then turn on a parallel plate. What the parallel plate does is start to split water into a combination of hydrogen and oxygen. The increase in buoyancy force will push the robot wings out of the water surface.

And not only are we using hydrogen and oxygen to increase the buoyancy force, we are going to use them as combustion fuels. So I recommend you pay attention to the next part of the video, okay, because it's going to be quick. In the next video we are going to ignite the mixture of hydrogen and oxygen. You can see we triggered a microscale combustion that allows the robot to leave the water surface. This combustion finishes within one thousandth of a second.

So this is about the fastest combustion process. Although very short, it is powerful enough to break the water surface and shoot the robot back into the air. Okay. So in summary, we have created a 175 milligram robot that is capable of flying, capable of swimming, and can transition from air to water. More impressively, it can impulsively transition from water back into air and also passively land upright.

Following this same line of thought, I want to very quickly go through a second robot, which is a robot that can walk on land and also on the surface of water. In the past example, we spent a lot of time talking about how we use a tiny combustion to break the water surface tension, which is otherwise very difficult for the robot to break by just flapping its wings. On the other end of the spectrum, as humans, we barely feel surface tension at all. The reason is that at our scale, the surface tension force is less than 0.1% of the inertial forces we can generate. If we build a robot somewhere in between, specifically if the robot weighs about a gram or so and has a contact length on the order of ten centimeters or so, then all of a sudden the surface tension forces and the inertial forces are on the same order.
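
The scaling argument above can be checked with a back-of-the-envelope calculation. The sketch below compares the largest force surface tension can supply along a contact line (surface tension coefficient times contact length) with body weight. Using weight as a stand-in for the "inertial forces" in the talk is an assumption, as are the human figures (70 kg, about one meter of contact length); the robot figures (about a gram, about ten centimeters) are the ones quoted in the talk.

```python
GAMMA_WATER = 0.072  # N/m, surface tension of water near room temperature
G = 9.81             # m/s^2, gravitational acceleration

def surface_tension_to_weight_ratio(mass_kg, contact_length_m):
    """Ratio of the maximum surface tension force (gamma * L) to weight (m * g)."""
    return (GAMMA_WATER * contact_length_m) / (mass_kg * G)

# Assumed human-scale numbers, for illustration only.
human_ratio = surface_tension_to_weight_ratio(mass_kg=70.0, contact_length_m=1.0)

# Robot-scale numbers quoted in the talk: about a gram, about ten centimeters.
robot_ratio = surface_tension_to_weight_ratio(mass_kg=1e-3, contact_length_m=0.10)

print(f"human: {human_ratio:.1e}, robot: {robot_ratio:.2f}")
```

Under these assumptions the human ratio comes out around one part in ten thousand, while the gram-scale ratio is of order one, which is the regime where modulating surface tension can change whether the robot floats or sinks.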

Now we can introduce new functions. Again we start with a question in physics: how do we control the magnitude of surface tension forces? And if we can control surface tension forces, what additional functions in robotics can we enable?

In this case we created a new project in which we want the same robot to be able to walk on land, walk on the surface of water, controllably transition into water, and also eventually transition back onto land. Again, without going into the technical details, let me say that we used a phenomenon called electrowetting.

The idea was that we send a high voltage to the water surface through an electrowetting footpad so that we can modulate the surface tension contact angle, which allows the robot to control its sinking into water. Let me show you how everything works in our video. In this case, we start the robot on the water surface. It will now transition and walk out. Oh, sorry.

We start the robot on land, and it transitions onto the water surface. So now it is swimming on top of the water surface. I recommend you now focus on the side view. The robot will now sink by turning on its electrowetting footpads. So now the robot is fully submerged underwater. Once the robot is underwater, it will then climb this incline slowly to return back onto land.

So again, what we demonstrated is that a robot that weighs about a gram can do multiple things, such as walking on the water surface, on land, and underwater. And sometimes those types of locomotion are challenging, if not impossible, for larger-scale systems. Recently we wanted to push for more functionalities in this robot, specifically by teaching this robot to climb on ceilings and walls, again using capillary effects.

So what we did is put a heating pad on the belly of this robot, and we leverage two phenomena. We use a normal adhesion force, which arises because the curvature of the contact angle changes, that allows the robot to adhere to the surface. We also use a lubrication effect, which allows the robot to slide along the surface without needing to engage and disengage from the surface. This turns out to be a very simple but effective method.

Now I'm showing you a video of inverted climbing, right? The camera is looking top-down and the robot is using this capillary adhesion force so that it can climb on the inverted surface. It's completely passively stable. It turns out this is much faster than climbing with electrostatic adhesion.

Okay. So let me conclude my first research theme, which is that microrobots, although very tiny, can do very complex functions in complex environments.

Now, let me take a step back and zoom out a bit and say that our longer-term mission is to create a swarm of ubiquitous and multi-functional microrobots that can operate in realistic environments. Let's now take the opportunity to think about what the performance gaps are. So far, I've shown you two examples of microrobots that can operate at high frequency and are very precise. In my field, my colleagues are solving key problems in microrobotics by putting sensors, controllers, and power sources on board. But I want to point out that microrobots are still limited compared to insects. Specifically, insects can effectively interact with the environment, and they can also operate in large numbers, whereas it's extremely difficult to make a lot of microrobots effectively.

And all the demonstrations I've shown you involve a single microrobot. To solve this challenge, I propose that we look into soft robotics, because soft robots are robust, they can safely interact with the environment, and you can make a lot of them cheaply. Over the past ten years, there have been a lot of techniques for putting sensors, controllers, and power sources onto soft robotic systems. What I want to point out is that soft robots also have their own limitations.

Specifically, most soft actuators are slow, and they're not as precise as rigid actuators. As a consequence, most of the commercial robots that we see are driven by rigid actuators. Our goal at MIT is really to address key challenges in this field by looking into a new direction, which we call hybrid soft-rigid microrobots.

The theme that I want to push for is what I envision for future soft robots: not only should they be safe and compliant, they should also be agile and controllable. I want to show you a few case studies that we have been working on here at MIT over the past few years. Okay. What I want to show you today is a flying robot driven by a dielectric elastomer actuator. The unique part of this robot is that it is driven by a muscle-like soft actuator.

But it still has rigid appendages such as wings, transmissions, and wing hinges, so that we can precisely control its motion. And because I'm combining soft and rigid components, hopefully I'll be able to show you some emergent properties such as resilience to collisions and injury. So what I want to do today is answer three questions together with you. The first question is: can a soft robot fly? Prior to our work, it was extremely difficult to fly any robot without an electromagnetic motor.

The second question is: if I can fly them, why soft? What are the unique abilities of soft robots? And finally, the third question is: what's our longer-term goal for this class of robots? So first let me try to convince you that indeed soft robots can fly.

As a grad student, I played with these rigid actuators for about seven years: piezoelectric actuators. Those are great because they are high-bandwidth and precise, which is exactly what you need for flight control. But they are also kind of difficult to deal with because they have tiny strain. As a consequence of having tiny strain, if the wings collide with an obstacle, it is very easy to crack a piezoelectric actuator.

At MIT, we developed a new class of dielectric elastomer actuators on the same scale. A unique part of those soft actuators is that they can still operate hundreds of times per second while having a large strain. And because it is intrinsically soft, it is tolerant to assembly error.

So we can make a lot of those soft robots effectively. If you look at how the soft robot is made, it has rigid components such as an airframe, transmissions, and wings, which allow us to have very fine control of the flapping-wing kinematics. But it also has a soft actuator in the center for driving the robot. About five years ago, we assembled this robot, and this was the first flapping-wing flight that we were able to film. In this high-speed video, the robot flaps its wings 280 times per second.

From this high-speed video, you can really see the elongation and contraction of the soft artificial muscle. More than that, also about five years ago, we demonstrated the first liftoff flight performed by a soft-actuator-driven robot, right? Okay. You can see the elongation and contraction of this artificial muscle really behaving like an actual muscle. I used to make this joke, which is that for soft robotics this is a very exciting moment, because for the first time a soft robot is able to lift off and fly.

But if you are working in aerial robotics, this is useless, in the sense that it lifts off for less than 0.1 seconds and it's intrinsically unstable. So the question is, how do we catch up in terms of the flight capabilities of soft systems? Over the past few years, our lab spent a lot of time trying to understand the nonlinear properties of soft actuators at high frequencies, and then wrapping controllers around those soft actuators. About three years ago, our lab demonstrated one of the best hovering flights performed by a sub-gram aerial robot. At this point, the sub-gram robot was demonstrating a 20-second hovering flight, and it was so stable that it feels like the robot has been affixed to a particular position in space.

And again, back then, about three years ago, that was the longest flight and also the highest-performing flight performed by a sub-gram robot. If you look at the flight quality, the maximum position error is on the order of two centimeters or so, and the maximum attitude error is about two degrees or so. Since then, we have been trying to push for longer and longer flight times. About a year ago, we were able to double the flight time to about 40 seconds. And then as of a few weeks ago, we reported a recent study in which we were able to fly the robot for a thousand seconds in a single flight.

I'll come back to this new robot platform toward the second half of my talk. Another thing we want to demonstrate is heading control during flight. What I have shown you so far is that the robot stably hovers around the set point, but it could not keep its heading angle. Imagine in the future, if I want to put a tiny camera on board and teach this robot to fix its gaze on a flower, it will be very difficult to solve this problem. What my student Nemo did is incline the robot units during the assembly process so that we can use a component of the lift force to generate yaw control torque.

As a consequence, we can get quite good heading control in this system. When the robot is flying now, you can see the robot first turns left and then turns right. It turns back to face front and then it lands. So in some sense we can now get six-degree-of-freedom control in a sub-gram aerial robot. Hopefully, at this point, I've convinced you that indeed soft robots can fly as well as rigid robots at the sub-gram level. But the more interesting question I want to answer is: why soft? What is interesting about those soft systems? So now we want to show some unique properties of soft flying robots.

In this case, for example, we want to demonstrate that the soft actuators are robust. The robot can repeatedly run into an obstacle and the soft actuator will survive. And more: how about flying the soft robot near a cactus?

How about cutting part of the wing off, or putting a lot of needles into the soft actuators? Or how about demonstrating aggressive maneuvers, namely turning the actuators on and off at a very fast rate so the robot can perform a somersault? And also, because we make everything in-house, how about showing you electroluminescence, that is, showing that the robot can shine light during flight? First let me show you collision recovery. When the robot is flying, we can gently push down the robot and the robot will be able to recover to the set point.

Now, I want to say the first video is not very impressive yet; most quadcopters can do this as well. Where it starts to get interesting is if I start to hit the actuators during flight, or if I start to hit the robot wings during flight: the robot will maintain its stability. Imagine hitting the propellers of a quadcopter while it is flying. It's probably not going to turn out very well.

And in fact, what's going to happen if I hit the robot so hard that it cannot recover? In this case, the robot simply bounces on the floor and then recovers to its hovering set point, simply as a consequence of being small and having low inertia. Okay, this is very challenging for larger-scale systems. In fact, I want to push this a little further to look at failure modes. It turns out that wing hinge failure is a common mode of failure. When the robot is flapping its wings, sometimes a torsional stress will cause a crack in the wing hinge, which propagates through the wing hinge within a few cycles. So when the robot is flying, you will see a wing fly off, and the robot will lose stability and plunge downward very quickly.

This is in sharp contrast to what insects can do. Insect wings can be fairly worn out, yet the bees can still fly very well.

There is a community of colleagues who are interested in studying the neurological control of flying beetles. To probe the neurological signals, they put electrodes into the flight muscles, yet the beetles are still able to fly. So we want to demonstrate a similar level of resilience to damage. Namely, we want to ask whether the robot, for example, can fly near a cactus.

So we have identified three types of things we can do to the robot. We can put a lot of needles into the soft actuators, we can cut a significant part of the wing tip off, or we can try to burn a big hole in the actuator. It turns out that we can use a phenomenon called self-clearing. Because this soft actuator is made of a very safe and compliant electrode, wherever there is some damage or defect, we can pump a high voltage to isolate the defect so that the bulk of the actuator can still operate. In this example we have a robot in which one actuator has been pierced ten times.

A wing has been cut off and another big hole has been burnt in the other actuator. Yet the robot can maintain a similar flight performance. This is a level of resilience that I would argue has not been achieved even in rigid-actuator-driven systems. Another thing I want to show is aggressive maneuvers. I like to use the somersault as an example, simply because the somersault really favors smaller systems.

When you shrink down in size, the time scale of your robot becomes faster, and as a consequence, the speed at which you can do this somersault also increases. At least in simulation, we show that our robot can perform a somersault in 0.15 seconds, which is faster than any of the existing aerial robots out there.

So let me show you something we were able to do about four years ago. Okay, this is a somersault shown in real time. This is probably a little bit too fast to see in real time, so I'll play the same video slowed down. In this case, the robot takes off and hovers around a set point, and then it accelerates upward. Once the robot reaches a target set point, it makes a somersault. Okay, in this case it cannot really recover altitude, so it has to bounce on the floor and then recover to the hovering set point.

I'll show you a more recent somersault later on in this talk. But essentially, what I really want to demonstrate here is that the robot combines agility and robustness. Another function that we have been able to demonstrate is electroluminescence. It turns out that fireflies are quite amazing animals: when they fly, they shine light, and they use that as a way of communication. Because we make our soft actuators in-house, we can embed electroluminescent particles in the transparent elastomers so that when the actuator operates, the alternating electric field excites the electroluminescent particles. As a consequence, when the robot flaps its wings, each actuator shines a different color or a different pattern of light as it operates.

As a consequence, we can generate pretty fun videos. This was one of the first projects I worked on [unclear] student, and we all pulled out our iPhones so that we could take fun pictures or videos. But you may ask: it looks nice, but why teach the robot to shine light? It turns out that for the robot to fly, you need to use a very expensive motion capture system that tracks the position of those infrared markers. But now, if each actuator can shine a different color or pattern of light, you can think about those as active markers. So instead of using infrared to track the motion, we can now use vision-based tracking.

It turns out that with just a few iPhones we can get fairly good tracking quality. So that was our first effort in trying to fly the robot in outdoor environments. Recently we also tried to make a lot of those robots cheaply. My graduate student Nemo has been experimenting with 3D printing.

At least we can now print the not-so-precise parts, such as airframes, with commercially available 3D printers, and as a consequence, for the first time, we are able to fly two robots together. In this case, the two robots fly collectively while they carry a long and flexible payload, which would otherwise be very difficult to carry with a single robot. In fact, we had so much fun that when they are flying, we put our lab name on it. So we are the Soft and Micro Robotics Laboratory at MIT, and you're definitely welcome to visit us if you are in Boston.

So to summarize the second chunk of our work, the theme of our research is demonstrating that soft robots can combine robustness and agility. By robust we mean soft robots can survive collisions and injuries. By agile we mean they can perform somersaults and reach a maximum flight speed that's now more than one meter per second.

And that's considering the robot length scale is on the order of a few centimeters. And by unique we mean that they can shine light or they can fly in a swarm, which would be difficult for a similar robot at this scale.

In the last 10 or 15 minutes, I want to tell you about our recent push for new functionalities in this system. I've been presenting the second chunk of work for a couple of years now, and I've also been hearing feedback from people. Based on the feedback, we really wanted to work on a new platform that can get us additional or improved flight capabilities, as well as a platform that allows us to work toward flight autonomy. If I look at the older platform, usually the feedback I got is that the robot works great, but it looks ugly. The reason that it looks ugly is that it has four identical units and eight wings. And because there are multiples of everything, it not only takes more time to make, but it's also easier to fail: if any of the wings fails, the robot loses stability. It also reserves so much area for placing the wings.

I really can't find the area for placing the sensors. So recently we have been working on a new platform that looks a little bit smaller. From wingtip to wingtip it's about four centimeters, but more so, what I want to show you is that this new platform is a lot more capable in terms of flight. It can demonstrate much improved flight capabilities, and it also supports our recent effort in investigating various aspects of autonomy. First, as you can see, this robot now has much, much longer wing hinges for reduced stress. What that does is dramatically improve the robot endurance.

Previously, microscale robots flew for seconds or tens of seconds. Now, in a recent work, we are able to fly for 1000 seconds in a single flight. This video is being played fast-forward at 100 times speed, right? This is orders of magnitude improvement compared to prior work. So why is it important to fly for 1000 seconds? Well, because the robot endurance is so good, now we have time to tune our flight controller.

So now we can, for example, show that the robot can track trajectories, right? Some of you can probably see that the robot is tracking three letters. This is a relatively slow flight, meaning that the robot's average flight speed is on the order of ten centimeters per second or so. But because it's flying slowly, it is very accurate; it's able to land exactly on the same platform from which it took off. If I generate a composite image of that flight, it looks like this. Okay.

So we can follow or trace those letters very precisely. Now if I increase the flight speed, it will not be quasi-static anymore; it will be dynamic. In this case, the robot is trying to follow an infinity sign. You can now see some pitching during the flight, and the robot can trace this trajectory at about 30 centimeters per second. Consider, again, that the robot is only about 3 to 4 centimeters in its longest dimension.

And now I want to show you a longer flight. I don't know if you can hear the audio, but if you can hear it on your end, this robot really hums like a bee, because it flaps its wings at about 330 hertz. So when it flies, it really sounds like a honeybee.

In this video, the robot is tracing an infinity sign that's rotating. If I stop the video and show you the trajectory, it looks something like this: it is tracing this infinity sign while it rotates. In the 30-second flight, the robot traces about a ten-meter-long trajectory, again orders of magnitude beyond what sub-gram robots were able to do.
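
As a rough illustration of the kind of reference trajectory described here, the sketch below generates a figure-eight (infinity sign) that slowly rotates in the horizontal plane and estimates its path length numerically. The parametrization and every number in it (half-width, half-height, lap period, rotation period) are assumptions chosen for illustration, not the values used in the flight, and the function names are hypothetical.

```python
import math

def rotating_figure_eight(t, half_width=0.10, half_height=0.05,
                          lap_period=3.0, rotation_period=30.0):
    """Reference position (x, y) in meters at time t in seconds."""
    w = 2.0 * math.pi / lap_period
    # Figure-eight (1:2 Lissajous curve) expressed in its own frame.
    x = half_width * math.sin(w * t)
    y = half_height * math.sin(2.0 * w * t)
    # Rotate the whole figure about the origin as time advances.
    phi = 2.0 * math.pi * t / rotation_period
    return (x * math.cos(phi) - y * math.sin(phi),
            x * math.sin(phi) + y * math.cos(phi))

def path_length(duration, dt=0.001):
    """Approximate arc length by summing short straight segments."""
    n = int(round(duration / dt))
    total = 0.0
    prev = rotating_figure_eight(0.0)
    for i in range(1, n + 1):
        cur = rotating_figure_eight(i * dt)
        total += math.hypot(cur[0] - prev[0], cur[1] - prev[1])
        prev = cur
    return total

flight_time = 30.0  # seconds
length = path_length(flight_time)
mean_speed = length / flight_time
print(f"path length {length:.2f} m, mean speed {mean_speed:.2f} m/s")
```

For comparison with the figures quoted in the talk, a path of about ten meters flown in about 30 seconds corresponds to a mean speed of roughly 0.33 m/s, in line with the roughly 30 centimeters per second mentioned for the infinity-sign flight.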

And then we can also talk about agile maneuvers such as somersaults. This is now the most recent somersault we are able to do. You can see it is much, much more controllable than before.

In a slowed-down video, again, the robot takes off and hovers around the set point. Now I'm showing the somersault with the video slowed down by 40 times: the robot makes two consecutive somersaults, and immediately after completing the somersaults it is able to recover its attitude stability and return to the hovering set point. Then it lands at the takeoff position. It is a lot more agile and controllable than before. So in summary, this newer platform has much longer endurance; compared to other sub-gram robots, it is really orders of magnitude better in terms of lifetime. When following trajectories, it is now flying at twice the speed as before, while the position error is on the order of one centimeter or so.

And if you measure agility, using the metric of rotation speed, then our robot is not only faster than any of the existing aerial robots, but also faster than a lot of the insects that we can find in the literature. Now, I'm not claiming that we are faster than any of the insects out there, but I think there's just no motivation for an insect to do multiple somersaults and compete in this manner. Okay, so all the demonstrations I've shown you so far rely on a PID controller.
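
For readers less familiar with it, a PID controller computes its command from the tracking error, the accumulated error, and the rate of change of the error. The sketch below is a generic textbook version applied to a toy one-dimensional altitude model (a unit mass with gravity already cancelled). It is not the flight controller used on the robot; the gains, time step, and model are assumptions chosen only so that the example converges.

```python
class PID:
    """Textbook PID controller acting on a scalar error."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # Skip the derivative on the first call to avoid a spike from the initial step.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_altitude_hold(target=1.0, duration=4.0, dt=0.001):
    """Toy model: unit mass whose net vertical force equals the controller output."""
    pid = PID(kp=48.0, ki=64.0, kd=12.0)  # hypothetical gains
    z, vz = 0.0, 0.0
    for _ in range(int(round(duration / dt))):
        force = pid.update(target - z, dt)
        vz += force * dt  # unit mass, so acceleration equals force
        z += vz * dt
    return z


final_altitude = simulate_altitude_hold()
print(f"altitude after 4 s: {final_altitude:.3f} m")
```

The same structure is typically replicated per axis (position and attitude) on a real vehicle; the appeal is that it needs no model of the robot, which is also why it leaves performance on the table for aggressive maneuvers.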

People who know me know that I think in my field the challenge really resides in the hardware limitations. So what I'm happy with is always pushing the hardware while using a PID controller. But since we have this newer platform, I'm convinced that now there's a lot of opportunity in control. So in collaboration with Professor Charles Harley at MIT, we are now working on much more sophisticated control architectures.

For example, a colleague has been implementing model predictive control and then compressing it into a deep neural net so that the robot can achieve much more aggressive flight than what we can do with the PID controller that I've just shown you. This is a video slowed down ten times. The reason that I'm very excited about this flight is that if I look at the composite image, the robot is pitching at a 45-degree pitch angle and is able to accelerate from this point to this point; that is, it's able to accelerate from zero speed to 1.2 m/s within 15 centimeters. Let me remind you that in the past slide, we said that with the PID controller the maximum speed is about 40 centimeters per second or so.

So this is looking at a 300% improvement compared to the PID controller. And very recently, my student has been able to show quite good flight maneuvers. If you see it in real time, the flight looks like this, right? It can fly very, very quickly.
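As a rough check on the numbers quoted for this flight, here is a minimal sketch. It assumes constant acceleration over the 15 cm run, reads the PID figure as a speed of about 0.4 m/s (an interpretation of the transcript), and uses an idealized point-mass model in which the vertical thrust component exactly balances weight. None of these modeling choices come from the talk, and the function names are illustrative.

```python
import math

def average_acceleration(v_final, distance, v_initial=0.0):
    """Constant-acceleration estimate from v_f^2 = v_i^2 + 2*a*d (assumed model)."""
    return (v_final ** 2 - v_initial ** 2) / (2.0 * distance)

def level_flight_acceleration(pitch_deg, g=9.81):
    """Horizontal acceleration of an idealized point mass whose vertical
    thrust component balances weight at the given pitch angle."""
    return g * math.tan(math.radians(pitch_deg))

mpc_speed = 1.2        # m/s, quoted in the talk
run_distance = 0.15    # m, quoted in the talk
pid_speed = 0.40       # m/s, assumed reading of "about 40 centimeter"

required = average_acceleration(mpc_speed, run_distance)
available = level_flight_acceleration(45.0)
speed_ratio = mpc_speed / pid_speed

print(f"average acceleration needed: {required:.2f} m/s^2")
print(f"idealized acceleration at 45 deg pitch: {available:.2f} m/s^2")
print(f"speed ratio vs PID: {speed_ratio:.1f}x")
```

Under these assumptions the run needs an average of about 4.8 m/s², which is below what a sustained 45 degree pitch would give in the idealized model, and the speed is about three times the PID figure.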

So if now I show you a slowed-down video, you can see the robot's pitch angle during flight. It's really quite aggressive. When I show this to colleagues, I think the feedback I hear is that now the robot really flies like an insect. And not only can it do point-to-point flight, it can also follow quite fine trajectories. So this is one of those trajectories, flown with this model predictive controller.

And you can see that if it's traveling at 0.7 m/s or so, it can follow the trajectory quite well. And the maximum speed that it can reach is much larger than one meter per second.

Now, another thing is that with this newer platform you can demonstrate hybrid locomotion. I like to use hopping as an example. Hopping is very difficult, and I think the most successful hopping robot I've seen so far is the Salto robot from Berkeley. And it is very impressive, mainly because, when the robot hops, it has to do a lot of planning and control while making contact with the ground, and that is very challenging because it means that within a short period of time, you need to use a very complex mechanism to execute a lot of control.

Instead, in collaboration with a former colleague, Papa, we have a different idea. Instead of making very complicated mechanisms, what if we make a hopping robot that is 100 times smaller? And the key idea here is that instead of doing active control during the stance phase, we are going to use the wings to perform low-power control in the jumping phase, while letting the stance phase be completely passive.

And it turns out that if we go into the modeling, this is really advantageous: because the contact is now passive, we don't care as much about the surface properties or the inclination angle. So the hopping process becomes a lot more robust.

For example, we can show that the robot can hop on a lot of different surfaces: it can hop on soil, it can hop on ice. Okay. And you can also have a glass layer, and while it's hopping, you can also pour water on it.

And all of those are done by the same PD controller. It can also hop on grass and hop on wood. And while the robot is hopping, you can also rotate the hopping plane, and the same controller will be able to take care of that as well.

Another thing we are trying to demonstrate, and this was on an older system, is that because now you are small, and by small I don't mean the size being small, I mean the inertia being small, you can now leverage impulsive interactions between robots to demonstrate new functions. Previously, aerial robots tried to avoid each other. Now we can show a 0.7 g robot hopping on top of a 20 gram robot, so it can hop onto a larger guy, right? That would be very difficult for larger-scale quadcopters to perform. So hopefully I've convinced you that with this newer platform, we can really demonstrate a lot of fun functions with a sub-gram robot.

For the last five minutes or so, I want to show you our longer-term goal toward flight autonomy. If you visit our lab, you will see that to fly a sub-gram robot, we need a lot of hardware. Specifically, we need a lot of motion capture cameras, we need a lot of offboard compute, and we also need a lot of high-voltage amplifiers. The goal of our research in the next five years is to shrink all of those things into something that's on board.

Okay. So recently, in collaboration with our colleague Farrow Hambling, we are putting sensors on board. Specifically, we have an IMU, an optical flow camera, and a range finder. And by wrapping very simple observers into our controller, we can demonstrate fairly high-quality control with onboard sensing. In this first video, we are flying with the onboard IMU, so the attitude controller uses onboard sensing, but the position controller is still reliant on an offboard Vicon motion capture system.

As you can see, the onboard IMU sensing is really quite high quality. And in the next video, I'm going to show you a completely sensing-autonomous flight. In the video on the right, what I'm trying to show is that the Vicon camera is completely switched off, and the robot is able to rely completely on the suite of three onboard sensors to hover around a set point. It's not perfect yet compared to a motion capture camera,

but I think we are making very good progress on that point. So in my view, and I may be biased, I think sensing autonomy and compute autonomy are within reach in a few years. What's most difficult is, of course, power autonomy. In the past 35 minutes or so, I spent a lot of time telling you about the good properties of soft actuators.

But what's really challenging is that they are much less efficient compared to rigid actuators. If I compare the first version of our soft actuators to piezoelectric actuators, you can see that they have much lower power density, the robot has a much lower lift-to-weight ratio, and it requires much higher voltage with less efficiency. This makes it almost impossible for the robot to fly with onboard electronics. But because we can make everything in-house, we know exactly what to improve.

As of a few years ago, we came up with new elastomers for making the actuator, so we could significantly pump up the robot's performance. And as of two years ago, we came up with a new fabrication process that allows us to operate at much lower voltage. I know for a lot of you, 600 V still sounds very high, but because we are now in the 600 volt range, we can look at tiny components that allow us to build onboard electronics.

So first let me say that whatever we had been able to achieve at 2 kV, we have now been able to achieve in the 600 volt range. And that, from a power electronics perspective, is extremely helpful, because now we can make a very preliminary tiny circuit that can boost a low-voltage DC input to a high-voltage AC output for driving our actuator. So about two years ago, we came up with our first version of onboard electronics, which weighs about 130 milligrams and can be carried by one unit of our robot, which weighs 158 milligrams. Okay. And what this circuit does is boost a 7.7 volt DC input to a 600 volt, 400 Hz AC voltage, so that we can drive the actuator and get quite good flapping-wing videos.

Okay. And also about two years ago, for the first time... Oops, I don't know why the video stops, but if you trust me, we demonstrated a very short liftoff flight in which the robot takes off with this onboard electronics. Now, we are still very far away from achieving power autonomy, because we don't have a good battery yet.

So the state of the art right now is that the robot can take off carrying its own circuit, but we don't have a tiny battery. And that is really something we are trying to push for in the next few years: coming up with tiny batteries and incorporating them with our power electronics. With that, I want to say that there is also a newer version that we are working on, and the goal is to continue to drive down the actuation voltage. So hopefully we can continue to improve the transduction efficiency of the entire pipeline.

Also, on the last slide, I want to say that we are also very much interested in pollination. This is a very meaningful application. And it turns out that pollination is a lot more complicated than just teaching the robot to land on and take off from flowers.

There are a lot of things you need to do to be able to shake the pollen off a flower, and that work, again, is still preliminary, so hopefully I will have something more to say in the coming years. Finally, I want to zoom out a little bit and say that our longer-term vision, again, is to use a large number of micro robots and apply them in realistic environments. What I want to say is that we have made very good progress in terms of the hardware. I think the robot now is capable, reliable, and agile, but what we really want to push in the next five years or so is thinking about the power electronics and control logic for those systems. With that, I want to thank my past advisors, Professor Rob Wood, David Clark, and Jane Wang, and my collaborators. Most importantly, we are a relatively small group at MIT, and a lot of the work that I've presented today was really led by my students. With that, I want to stop here and take questions.

Thank you. Thank you so much, Professor Chen.

This was a really nice presentation. Are there any questions here or in Zoom? Excellent. Okay, there is a question in Zoom. Yes, so I can read out the question.

So the question asks me about the limiting factor of the flight time. Right. I think there are two parts when we talk about flight time. One is that in the longer term, people will ask about the amount of energy that we can store in the battery, et cetera. If we think about the amount of energy the battery can store, I think we are looking at an upper limit of about 300 seconds, but in practice we are really limited by the amount of power that the battery can deliver. So I would say that if we're ever going to achieve a power-autonomous flight, at least in the first trial, we are probably looking at about 10 seconds of power-autonomous flight. The other part of the limiting factor of flight time is the mechanical issues of this bee. Right? The robot flaps its wings 330 times per second.

So there's a lot of stress, both on the flexures and also on the actuators. For the older version, I was saying that the flight time is about 10 or 20 seconds. That's because the wing flexures tend to fail very quickly.

It turns out that in the old design, the first iteration that I've shown you, the flexures have a lifetime on the order of 100 seconds or so. And because you have eight flexures, and any one of them failing means the robot will fail, on average, once you pass about 20 seconds, some flexure at a certain point will fail. In the recent work, we redesigned the flexures with the idea of reducing the torsional stress in the flexures. So right now, really, we haven't seen a flexure failure for a long time.
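The "any one of eight fails, the robot fails" argument can be illustrated with a simple series-reliability sketch. It assumes the eight wing hinges (flexures) fail independently with exponentially distributed lifetimes of mean 100 seconds; that distribution is an assumption made for illustration only and was not stated in the talk.

```python
import math

def mean_time_to_first_failure(mean_life_s, n_parts):
    """Mean of the minimum of n independent exponential lifetimes (assumed model)."""
    return mean_life_s / n_parts

def prob_all_survive(t_s, mean_life_s, n_parts):
    """Probability that none of the n parts has failed by time t (same assumed model)."""
    return math.exp(-n_parts * t_s / mean_life_s)

flexure_mean_life_s = 100.0   # "on the order of 100 seconds", quoted
n_flexures = 8                # quoted

mttf = mean_time_to_first_failure(flexure_mean_life_s, n_flexures)
p20 = prob_all_survive(20.0, flexure_mean_life_s, n_flexures)

print(f"mean time to first flexure failure: {mttf:.1f} s")
print(f"probability all eight survive 20 s: {p20:.2f}")
```

Under this model the first failure arrives after 12.5 seconds on average, and only about one flight in five would pass 20 seconds with all flexures intact, which is roughly in line with the 10 to 20 second flight times mentioned for the older design.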

So now the limiting factor of our flight time really comes back to the actuator. What happens is that the actuators typically have a lifetime on the order of somewhere between 500,000 and 2 million cycles of actuation. So that essentially converts to somewhere between about 30 minutes and 2 hours of operation.
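The conversion from actuation cycles to operating time can be sketched as follows, assuming one actuation cycle per wing flap at the 330 Hz flapping frequency quoted earlier. The helper name is illustrative.

```python
def cycles_to_minutes(n_cycles, flap_hz):
    """Operating time in minutes for a given cycle budget, one cycle per flap."""
    return n_cycles / flap_hz / 60.0

flap_hz = 330.0   # quoted flapping frequency

low_minutes = cycles_to_minutes(500_000, flap_hz)
high_minutes = cycles_to_minutes(2_000_000, flap_hz)

print(f"500,000 cycles   -> {low_minutes:.0f} minutes")
print(f"2,000,000 cycles -> {high_minutes:.0f} minutes")
```

This gives roughly 25 minutes and 100 minutes, consistent with the rounded "30 minutes to 2 hours" range given in the answer.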

So, you know, a good actuator probably can last for about two hours, and a bad actuator probably will need to be replaced every 30 minutes. So that's why right now they're flying for about six to ten minutes or so in a single flight, and the cumulative flight time for this type of system is on the order of 2000 seconds or so, which is about half an hour. I hope that answers the question. Okay, so let me just move to the second question.

It says: in a swarm setting, how do the units coordinate with each other? That's a great question. To be honest, we haven't really thought too much about multi-agent control algorithms. I think there are a lot of experts at MIT. So in the demo that I showed you, right, essentially you can think about the two robots as still being tracked by the motion capture system, and they are then controlled by a central planner.

So in some sense, you have a controller that knows exactly where each robot is, and the controller does the coordination in that manner. If we talk about trying to fly a large number of them, then yes, I think localized control is important. In the future, I think, for example, attitude stability should be done on board, but the higher-level, swarm-level controller would still need a central planner for some sort of coordination. But I think the short answer is that the hardware is capable of flying in a swarm, but in terms of software, there are really a lot of things that we need to explore. Okay, so I'll move to the next question. The next question is: is it possible to use any other type of signal for the actuator instead of electrical signals? So that's a great question.

I think my honest feeling right now is, you know, for the past ten years I've been working with flying systems, and all the flying microscale robots rely on electrical control, and that is because the time scales of those are extremely quick. When I'm running the controller, my controllers are running at thousands or tens of thousands of hertz when they're doing control. And that's what electrical control systems are really good at.

You can have very precise high-frequency control. We are collaborating with Professor Cameron Aubin, and we are thinking about combustion-driven flying robots. Of course, that solves the problem of energy density. But of course, when you're using combustion, high-frequency control becomes an issue. So again, I think it's a promising direction that we still need to explore. But from the perspective of control, I would say an electrically driven system is the most convenient to work on.

And if you use any other type of system, like magnetically driven or combustion driven, the control will become a lot more challenging. Okay, so I move to the next question: what are the key changes between the versions that allow the reduction from 2 kilovolts to 600 volts? Yes. So, you can think about dielectric elastomer actuators as soft capacitors.

Right. I think in your lab you're also working on electrically driven soft artificial muscles; any sort of electrostatically driven actuator is essentially a capacitor. So the challenge is really how to make thin elastomeric layers or liquid layers: the thinner the layer, the smaller the separation, and the lower the voltage you can get to.

In the 2 kilovolt version, I think the elastomer layer has a thickness of, I don't know, about 35 microns or so, and the 600 volt version has an elastomer thickness of, I don't know, about ten microns or so. And once you go to thinner layers, the device takes a lot more time to make, because then you just need to stack up more layers.

And you also need to make sure the quality of those layers is good, right? What I explain to people a lot of times is that not only do you need to make a layer that's ten microns thick, but it also has to be, you know, tens of centimeters in area. So you kind of roll them up into a device. So you're making something that is large in one dimension and small in the other dimension. That's why it's particularly challenging.
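The link between layer thickness and drive voltage can be sketched with a parallel-plate approximation, where the electric field is voltage divided by layer thickness. The thickness values are the speaker's rough recollections, and the uniform-field model and function names are assumptions made for illustration.

```python
def field_v_per_um(voltage_v, thickness_um):
    """Electric field across one elastomer layer, parallel-plate approximation."""
    return voltage_v / thickness_um

def layer_count_ratio(old_thickness_um, new_thickness_um):
    """Factor by which the layer count grows if total elastomer thickness is kept fixed."""
    return old_thickness_um / new_thickness_um

old_field = field_v_per_um(2000.0, 35.0)   # 2 kV version, ~35 micron layers
new_field = field_v_per_um(600.0, 10.0)    # 600 V version, ~10 micron layers
more_layers = layer_count_ratio(35.0, 10.0)

print(f"2 kV version field:  {old_field:.1f} V/um")
print(f"600 V version field: {new_field:.1f} V/um")
print(f"layers needed for same total thickness: {more_layers:.1f}x")
```

With these rough numbers both versions operate at a similar field, a little under 60 V per micron, so the voltage reduction comes from thinner layers rather than a weaker field, at the cost of stacking about 3.5 times as many layers for the same total thickness.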

So if any of you are interested in coming up with new techniques for making elastomeric layers, I'd love to talk more about this. Okay, so the next question is: are there any heat-related issues linked to the actuators used, and how do you handle them? That's a great question. In fact, in my first paper, which we published in Nature, we used a thermal camera to look at the temperature signature of those robots. And those actuators, under flight conditions, tend to heat up quite quickly. Specifically, the actuators tend to heat up from room temperature, 25°C, to about 70°C in 80 seconds.

That's what we were able to characterize about five years ago. Now, let me mention that heat is generated very significantly because of the resistive loss in the electrodes. So if you want to reduce the heat problem, there are two ways. One is, of course, to use a more conductive electrode, but two is to simply operate your actuator at a lower frequency.

If you operate at 500 Hz, it will heat up very, very quickly. But if you operate at, you know, 50 Hz or 100 Hz, then the temperature increase will be a lot slower. And the other thing I want to say is that the actuator, at least heuristically, seems to be okay with handling high temperatures.

We are able to operate it at 330 Hz for 1000 seconds on a robot, and that's doable. So yes, I think heat will be generated, but I don't think it is so severe as to cause the actuator to fail or anything on the timescale of tens of minutes. Okay.
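To put rough numbers on the heating discussion, here is a small sketch. The average heating rate uses the quoted 25°C to 70°C in 80 seconds. The frequency scaling assumes a purely capacitive actuator driven sinusoidally at fixed voltage amplitude through a small series electrode resistance, so that the current and hence the resistive loss scale with frequency squared; that circuit model is an assumption for illustration and was not given in the talk.

```python
def average_heating_rate(t_start_c, t_end_c, duration_s):
    """Average temperature rise per second over the measured interval."""
    return (t_end_c - t_start_c) / duration_s

def relative_resistive_loss(freq_hz, ref_freq_hz):
    """Ratio of I^2*R loss at freq_hz to that at ref_freq_hz.
    Assumes current amplitude I = 2*pi*f*C*V, so loss scales as f^2."""
    return (freq_hz / ref_freq_hz) ** 2

rate = average_heating_rate(25.0, 70.0, 80.0)
loss_500_vs_100 = relative_resistive_loss(500.0, 100.0)
loss_500_vs_50 = relative_resistive_loss(500.0, 50.0)

print(f"average heating rate: {rate:.2f} C/s")
print(f"loss at 500 Hz relative to 100 Hz: {loss_500_vs_100:.0f}x")
print(f"loss at 500 Hz relative to 50 Hz:  {loss_500_vs_50:.0f}x")
```

Under this assumed model, dropping from 500 Hz to 100 Hz cuts the resistive heating by a factor of about 25, which matches the qualitative point that lower-frequency operation heats up much more slowly.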

So let me move to the next question, regarding your future development: what role do you think bio-inspiration could play for insect-scale or insect-like navigation techniques? I think that's a great question. And honestly, we have just been thinking about sensing autonomy. Right. So what I can say is that I really want to collaborate with biologists to think about how insects address some of the challenges we have learned about. For example, if I use a gyroscope to stabilize the robot, that's not very power hungry; it probably takes a few milliwatts of power,

considering that the robot is consuming hundreds of milliwatts for flight. But it turns out that achieving position feedback is extremely costly. If you think about anything like optical flow, or anything like a time-of-flight sensor, you have to shoot down some infrared radiation and wait for the radiation to bounce back. That actually takes tens of milliwatts. Right?

And there's a lot of compute that's needed for optical flow. So my current hypothesis is that I actually don't see a lot of insects that are able to hover stably at a particular position in space, because it's very energy hungry. So I think what we need to do is collaborate with biologists and identify behavior patterns, for example low-power optical flow, et cetera, that insects use, so that we not only emulate the navigation techniques but also think about what kind of navigation method can allow us to save the amount of energy that we use for trying to perform those types of maneuvers.

Right. What's the least amount of energy we can use, for example, to teach the robot to land? Okay. So next question: are the robots weatherproof? That's a question I saw.

At this moment, no. One of the big weaknesses of this robot is that it's really sensitive to moisture. So actually, there are a couple of days in the summer when we cannot fly. If the humidity in the room is higher than 80%, I think the robot tends to die very quickly. So in some sense, yes, you can possibly address this problem by coating the actuator with parylene or a very similar polymer.

But no, at this current moment, if you operate the robot in a high-humidity environment, it will not perform well. Okay.

Next question: is flow control through the micro robot's wings a consideration you are exploring to increase or optimize flight? Or is it already at an optimized stage? So actually, as a grad student, I spent two years studying flapping-wing aerodynamics. We've done a lot of work: CFD, and at-scale particle image velocimetry. This is not a fluid mechanics talk, so I don't want to spend too much time.

But let me say that a lot of the trade-offs that we are struggling with are because we have an underactuated system: we just have one actuator, and we rely on passive fluid-structure interaction. My hypothesis is that if we can have two degrees of freedom, we can definitely increase the net lift. I'm not so sure about efficiency, but in terms of increasing lift, if I can also control the wing pitch dynamics, then I think we can further increase the payload of this system.

Okay. And then the last question: is there any tutorial or guide to build this kind of robot for experiments? We don't have a tutorial yet, and I have to be honest that this robot is pretty difficult to make. Even in our lab, not every student can make this. I think, to be honest, this is a problem that our entire field needs to address.

So if you really want to build this robot, of course you can read our papers, but I think the best thing to do is either come to my lab to shadow a student, or go to my advisor Rob Wood's lab and shadow a student for a couple of months. And let me be honest, I think the test for our students is, you know, if I have an incoming student, and by the end of their first year they can independently build a robot and fly it, that's already a success. So there are a lot of small things we need to teach you in terms of fabrication. So yes, if you're interested, please talk to us.

I think the best thing to do, if you want to build those robots, is to come to a lab and shadow the students, and then slowly you can learn all the fabrication techniques. Okay. Is it possible to learn the complex motions from insect videos through reinforcement learning? Yes. I didn't talk about this work, but we recently had a work published at RoboSoft, and my student will be there presenting that work.

So on reinforcement learning: we are not learning from insect videos, but rather we are learning from our models. So yes, I personally see a lot of potential in reinforcement learning; it's just that, to be honest, we haven't done a lot of exploration in that area yet.

2025-03-06 11:31