Foundry 2022 Operating System Demo

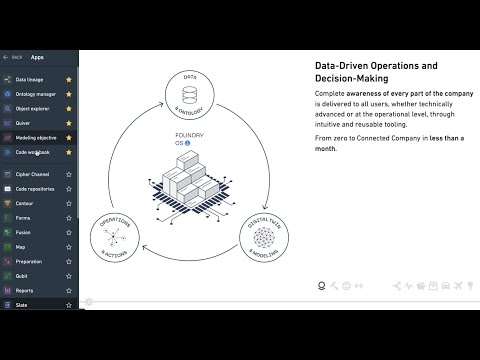

Thank you, everybody, for joining us today for this walkthrough of the Foundry platform. There's a lot to cover, so it'll be a bit of a whirlwind tour. I won't be able to get to everything, but I'll try to give you a representative cross-section of the key parts of the platform. Behind the scenes, Foundry is 100+ microservices, surfaced as a bit more than two dozen out-of-the-box, user-facing applications, in addition to the applications that you can build yourself. These applications stretch across effectively every persona in the modern enterprise, so we'll take a tour through some of those key personas and call out which tools or services are used at each step along the journey. We can think about the functionality in Foundry in three main parts.

First, there's the data and ontology piece: how are we connecting to different data sources, data lakes, and data warehouses, and bringing that data together into a semantic foundation that can then be used by the entire organization? We call this an ontology. So we'll talk about the journey of how we connect to different data sources, refine them, build out data integrations with health checks and security, and build out this ontology as a starting place. Second, we'll want to bring data science and modeling into that same foundation. This is the sphere of digital twins and modeling: how do we leverage business logic, data science, ML, and NLP models within the same foundation we've built on the data side? The aim is to fuse data and models together into a common foundation through the ontology. And finally, we'll talk about how the combination of data and models comes together for different types of end users.

These are everyday users doing core operational functions in the organization. In most contexts, 70 to 80 percent of Foundry users are, quote unquote, non-technical users, putting the fruits of all that labor toward real operational workflows and applications that are existential to the business. So let's start at the top with data and ontology. The first application I want to go into is called Monocle, which is our data lineage graph: the story of how the data comes together into an ontology. This is a pretty notional example.

It's a somewhat simplified supply chain ontology, showing the different concepts I'm going to work with in my supply chain demo. I've put together common concepts like suppliers, alerts, distribution centers, plants, materials, et cetera. Each of these objects has links between them, and we'll go into how each of these objects also has actions associated with it.

If we look at any one of these objects (we'll start with the plant), each is the culmination of many different steps of data integration. If we zoom out, this spiderweb diagram tells the story of how we integrated different data sources to build the plant object on the right-hand side. Reading from left to right, we can see we're pulling in a couple of different data sources.

Typically, these diagrams are much larger and much more complex, but this is a starting example. On the left-hand side, you'll see an SAP system as an example of a business system, Treasury.gov as an example of an open, API-driven source, and a time series source, which is a synthetic sensor I've set up in my environment. Every one of these steps, every rectangle or node you see in the graph, is one step closer to refining, cleaning, joining, and applying business logic to that data to get us to that semantic representation. For instance, if I take a look at one of these datasets, I can always pop it open to see what the data actually looks like, and I can look at the PySpark code that shows how we're applying logic at this step in the pipeline. If I select a different node and go to the History tab, I can see that we have full data versioning for every version of the data that's updated in this part of the pipeline.

This is huge, because I can go back and ask: "Who actually updated this particular routine or this particular build schedule? What was the result for this version of the data versus the current version? And how can I roll forward and backward through different versions of the data, with the code and the metadata at each point in time?" So it's a powerful ability not only to look at data pipelines as they exist today, but also to see how they have changed over time. There are a few other things I want to call out in this part of the Foundry platform: the build system for data, or the DataOps side of things.
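To make the versioning idea concrete, here is a minimal Python sketch of an append-only versioned dataset, assuming each build produces an immutable snapshot tagged with its author and the code commit that produced it. The class and field names (`VersionedDataset`, `code_ref`) are hypothetical and are not Foundry's API; the point is only that rolling back moves a pointer rather than deleting history.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DatasetVersion:
    version: int
    author: str
    code_ref: str          # hypothetical: commit of the transform that built this version
    rows: tuple            # immutable snapshot of the data
    created_at: datetime


@dataclass
class VersionedDataset:
    versions: list = field(default_factory=list)
    current: int = -1      # index of the version readers currently see

    def commit(self, author, code_ref, rows):
        """Append a new immutable version and make it current."""
        v = DatasetVersion(len(self.versions), author, code_ref,
                           tuple(rows), datetime.now(timezone.utc))
        self.versions.append(v)
        self.current = v.version
        return v

    def checkout(self, version):
        """Move the current pointer backward or forward; history is never deleted."""
        if not 0 <= version < len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.current = version
        return self.versions[version]

    def diff(self, old, new):
        """Rows added and removed between two versions."""
        a, b = set(self.versions[old].rows), set(self.versions[new].rows)
        return b - a, a - b


ds = VersionedDataset()
ds.commit("alice", "abc123", [("P100", 10)])
ds.commit("bob", "def456", [("P100", 10), ("P200", 7)])
added, removed = ds.diff(0, 1)   # compare the two versions
first = ds.checkout(0)           # roll back: readers now see alice's version
```

Because every version is kept, "who changed this, and what did it look like before?" becomes a lookup rather than a forensic exercise.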

The first is the lines connecting all these nodes together, which indicate lineage. Lineage is a concept that is intertwined with security in the Foundry platform: it governs how we secure data and how that security flows into the ontology and into workflows. To see this, let's change the view for a second.

If we switch to the permissions view, it now shows me dynamically what my permissions are across this entire pipeline. Everything is blue, meaning I have access to everything, because I'm the administrator of this environment. What's actually happening is that we're assigning permissions to the data sources (the SAP system, Treasury.gov, et cetera), typically the role-based access permissions coming from an Active Directory system or some other authorization scheme the organization has, and then propagating those controls forward.

That means every child inherits the union of all the permissions of its parents. To make that a little less esoteric, let's use another example. If I switch this permissions view, which I can do as an admin, to my colleague Jason Richardson, we'll see that Jason doesn't have access to most things here. That's because he doesn't have access to any of the sources except Treasury.gov.

So when the permissions flow, he loses access the moment we start to blend that source with other sources. You'll notice he has one exceptional piece of access here, on this OFAC clean dataset, where he originally would not have had access. That's because we chose to expand his permissions situationally in this case, without letting that propagate forward to any other part of the pipeline. The point is that we can mix and match very different security models to get very granular, robust results: we can intermingle role-based, classification-based, and purpose-based access controls, all here. That gives us very fine-grained control over who is doing what, across different personas and different parts of the organization.
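A minimal sketch of the propagation rule described above, assuming markings are attached only at the sources and each dataset inherits the union of its ancestors' markings, so a user must hold every one of them to read it. The dataset names, marking names, and the `local_grants` exception mechanism are hypothetical illustrations, not Foundry's actual permission model or API.

```python
from graphlib import TopologicalSorter

# Hypothetical lineage: each dataset maps to the datasets it reads from.
parents = {
    "sap_raw": [],
    "treasury_raw": [],
    "sensor_raw": [],
    "ofac_clean": ["treasury_raw", "sap_raw"],
    "plant_joined": ["ofac_clean", "sensor_raw"],
}
# Hypothetical markings applied only at the sources (e.g. mapped from AD groups).
source_markings = {
    "sap_raw": {"sap-users"},
    "treasury_raw": {"public"},
    "sensor_raw": {"ops"},
}


def inherited_markings(parents, source_markings):
    """Each dataset carries the union of its own markings and all of its parents'."""
    result = {}
    # static_order() yields every parent before its children.
    for node in TopologicalSorter(parents).static_order():
        marks = set(source_markings.get(node, set()))
        for parent in parents[node]:
            marks |= result[parent]
        result[node] = marks
    return result


def can_read(user_groups, node, markings, local_grants=frozenset()):
    """A user needs every inherited marking, unless granted this one dataset locally.

    Local grants model a situational exception: they apply to a single dataset
    and are deliberately not propagated to its children.
    """
    return node in local_grants or markings[node] <= set(user_groups)


markings = inherited_markings(parents, source_markings)
jason = {"public"}
jason_grants = {"ofac_clean"}
```

With these inputs, Jason can read `treasury_raw` and, through the local grant, `ofac_clean`, but not `plant_joined`, because the grant does not flow downstream.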

This was very much built from our own experience in the field as Palantir engineers. If you based security only on the location of data, in some file system or bucket, it became really hard to keep that security robust as the organization organically started to pull that data into other places. So data and security flow together with lineage, and that pairing is immutable as you work throughout the entire platform or interact through the APIs. That's the first thing to call out: security is baked right in as part of the metadata in Foundry.

The second piece I want to call out is this: "OK, I've built these pipelines, and they're refreshing all the time. How do I keep them healthy?" If I change to the data health view, we see the parts of the pipeline that I've instrumented with data quality checks, and I can pop open the panel at the bottom to see the checks we have across this part of the pipeline. I'll select one of these nodes and add a data health check here.

This pulls up a list of out-of-the-box health checks that we can assign to different points in our pipelines. Is this data updating on time, on the schedule I expected? Are the files the size I expect? Is the cardinality of different columns in line with the statistics I expect? All of these can be configured here through point-and-click, but can also be set through code. We'll look in a second at how we actually author these transformations, but the data health component is key to keeping these pipelines healthy as they refresh all the time. Having these checks right here saves you from going to a different platform to see what's going on and then coming back.
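The checks listed above can be sketched in code as simple predicates. This is a minimal illustration, assuming rows are plain dictionaries; the function names are hypothetical rather than Foundry's health-check API, and a row-count check stands in for the file-size check.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_updated, max_age):
    """Is the dataset updating on the schedule we expect?"""
    return datetime.now(timezone.utc) - last_updated <= max_age


def check_row_count(rows, min_rows, max_rows):
    """Is the data roughly the size we expect? (stand-in for a file-size check)"""
    return min_rows <= len(rows) <= max_rows


def check_cardinality(rows, column, min_distinct, max_distinct):
    """Does a column have the number of distinct values we expect?"""
    distinct = len({r[column] for r in rows})
    return min_distinct <= distinct <= max_distinct


def run_checks(checks):
    """Run named checks and return the names of the ones that failed."""
    return [name for name, check in checks.items() if not check()]


rows = [{"plant_id": "P100"}, {"plant_id": "P200"}, {"plant_id": "P200"}]
failures = run_checks({
    "fresh": lambda: check_freshness(datetime.now(timezone.utc) - timedelta(hours=2),
                                     timedelta(hours=24)),
    "size": lambda: check_row_count(rows, 1, 1000),
    # Expect at least 3 distinct plants; only 2 are present, so this fails.
    "plant_cardinality": lambda: check_cardinality(rows, "plant_id", 3, 10),
})
```

Wrapping each check in a callable keeps the runner generic, so point-and-click and code-defined checks could share one evaluation loop.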

All right. Let's switch back to our default view and look in more detail at what's going on in these individual steps. I'm going to open up one of these steps of logic and look at the repository to see how this particular result was built. This launches me into another Foundry application called Authoring, an integrated development environment (IDE) where I can make changes to this part of the pipeline with full change management built in.

The key thing here is that, as we saw in Monocle, there's a branch icon at the top. What this allows us to do is treat data the way we treat code. Instead of making changes on the master branch and propagating them through, which could cause all sorts of downstream issues if you're not careful, we've virtualized the entire change management process that would normally involve a UAT environment, and done it directly in one instance of Foundry using logical isolation in a cloud-native paradigm. So if I want to make changes, I open up a branched version of this part of the pipeline. When I've made my changes on that branch, I create a pull request to merge them back into master. If we go into some of the old pull requests, we can see line by line what the proposed changes were.

We can also get an impact analysis: if we were to merge this into master, what would be affected? And because security gets blended through different parent permissions and role paradigms, what security changes would occur? In this particular case, there weren't any. This is a huge difference from the normal process, where if I wanted to make changes, I'd have to clone all my pipelines and data over to a separate UAT environment, make some changes, say a prayer, port them back to master, and hope that everything works. Here, we can do that entire process with total logical isolation and sandboxing. We can iterate on branched versions of the data, use the analytics and visualization tools we'll look at later on that branched data, and then choose to merge it into master. The most powerful thing about this is that the semantics are Git-based. You'll see the clone icon at the top, and if we ever want to go back to a prior version of master or any other branch, we have a full commit log as well.
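The "what would be affected?" question from the impact analysis boils down to a downstream walk over the lineage graph. Here is a minimal sketch, assuming lineage is available as a hypothetical parent-to-children mapping; it covers only data impact, not the security-change part of the analysis.

```python
from collections import deque

# Hypothetical lineage: dataset -> datasets that read from it.
children = {
    "sap_raw": ["plant_clean"],
    "plant_clean": ["plant_joined"],
    "sensor_raw": ["plant_joined"],
    "plant_joined": ["plant_object", "inventory_report"],
    "plant_object": [],
    "inventory_report": [],
}


def downstream_impact(children, changed):
    """Breadth-first walk to every dataset that would rebuild if `changed` merges."""
    seen, queue = set(), deque(changed)
    while queue:
        node = queue.popleft()
        for child in children.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


impacted = downstream_impact(children, ["plant_clean"])
```

Here a change to `plant_clean` touches `plant_joined`, `plant_object`, and `inventory_report`, while `sensor_raw` is unaffected; a real tool would run this against the branch before merging.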

So we're never out of luck if we merged something that we later realize has to be undone. We can always move back to a previous form of the data, just as we can in Git with code. Being able to treat data like code, with health checks and integrated security, gives you a build system that lets you go really fast when assembling and growing these data pipelines organically, which is always how it happens. You never build these things upfront, waterfall-style, and then leave them unchanged; it's a constant state of iteration to keep up with the latest demands. I want to show you one more thing before we go into the ontology and move up a level.

We've talked a lot about authoring data pipelines here. By default, Foundry offers two out-of-the-box, auto-scaling compute engines: Flink running on Kubernetes for streaming computation, and Spark running on Kubernetes for batch computation.

You can basically use any language that has bindings to those two runtimes. If we go back to our repository, we'll see that we're using PySpark, but we can also use Spark SQL, Java, or Scala; we even have bindings for Groovy. We adhere to open standards all the way through, both in code authoring and, critically, in data formats. If you ever want to look at the resulting data from any transformation step in Foundry, through the preview or the History tab, you'll see that it's just data in Parquet, Avro, or whatever open format you'd expect.

So I can always interoperate easily with any of the data in Foundry. And critically, even though we use Spark and Flink as our runtimes, if we peek under the hood at Foundry's build service, we'll see it's just calling those two runtimes because we've registered them with Foundry. If you have a high-performance computing environment or some other external compute engine, such as another Spark environment or a proprietary runtime, we can register that runtime with Foundry as an external build worker. When we refresh these pipelines and run the orchestration, Foundry knows to federate the computation at certain steps to that build worker. That gives you a fully extensible compute framework with what we think are great starting defaults, and you're never locked in to just the compute that we provide as part of Foundry.
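The federation idea above can be sketched as an orchestrator that walks the pipeline in dependency order and hands each step to whichever runtime is registered for it. This is a minimal illustration under stated assumptions: the registry, `register_worker`, and `run_build` are hypothetical names, and the "workers" are stand-in functions rather than real Spark, Flink, or HPC clients.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical registry: runtime name -> function that executes one build step.
BuildWorker = Callable[[str], str]
registry: dict[str, BuildWorker] = {
    "spark": lambda step: f"spark ran {step}",
    "flink": lambda step: f"flink ran {step}",
}


def register_worker(name: str, worker: BuildWorker) -> None:
    """Register an external compute engine (e.g. an HPC cluster) as a build worker."""
    registry[name] = worker


def run_build(steps: dict[str, str], deps: dict[str, list[str]]) -> list[str]:
    """Run steps in dependency order, federating each to its registered runtime.

    steps maps step name -> runtime name; deps maps step name -> upstream steps.
    """
    log = []
    for step in TopologicalSorter(deps).static_order():
        runtime = steps[step]
        if runtime not in registry:
            raise KeyError(f"no build worker registered for {runtime!r}")
        log.append(registry[runtime](step))
    return log


register_worker("hpc", lambda step: f"hpc ran {step}")
log = run_build(
    steps={"ingest": "spark", "simulate": "hpc", "publish": "spark"},
    deps={"ingest": [], "simulate": ["ingest"], "publish": ["simulate"]},
)
```

Keeping the orchestrator ignorant of how each runtime works is what makes the defaults swappable: adding an engine is a registration, not a change to the pipeline.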

All right, one more thing to show you, and then let's move up a level. We talked about authoring and computing these pipelines manually. What about data systems that we've seen over and over again, like SAP and the other ERPs of the world, or the CRMs? There, we try to implement what we think of as software-defined data integrations, where you don't have to manually redo cleaning and transformation work that we think is quite standardized and can be done with ML-assisted techniques. I'm going to open up the data source for the SAP instance we're connected to internally. This is the SAP Explorer, which we provide as a rich connection interface.

You'll see it's showing me all the modules in my ERP estate, like MM and my data dictionaries, and it even pre-populates some workflows that are common for SAP. The power here is that instead of browsing through all the individual tables myself, we have a native connector at the application layer, through NetWeaver, that dynamically pulls out all the rich metadata, which tells us much more than the raw table information in SAP. We can see all the German acronyms auto-translated, and we can see the foreign keys and relationships. The idea is that we can derive that federated lineage and then auto-build pipelines, and even starting objects, in a matter of hours, as opposed to weeks or months of manually recreating SAP transformations on the Foundry side. Critically, this applies not just to standard modules like MM, SD, or DD, but also to Z tables that you might have customized, because we're not doing static mapping; we're dynamically inferring the metadata through NetWeaver. So if you have a very custom SAP or CRM estate, as many people do, it's very simple to install the SAP connector, which is certified native, browse around, and then, in a shopping-cart-like experience, pull the data that interests you into a set of pipelines in Foundry that you can start working with. That's actually how we created the green pipelines we saw before, and what was produced was PySpark.
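As a rough illustration of deriving joins from metadata rather than mapping tables by hand, the sketch below assumes foreign-key metadata has already been extracted. MARA, MARC, and T001W are standard SAP tables (general material data, plant data for material, and plants), but the metadata structures and the `derive_join_plan` helper are hypothetical; this is not how the SAP connector is implemented.

```python
# Hypothetical metadata, shaped like what might be read from the SAP data
# dictionary: English descriptions for terse table names, plus foreign keys.
tables = {
    "MARA": "General Material Data",
    "MARC": "Plant Data for Material",
    "T001W": "Plants/Branches",
}
foreign_keys = [
    # (child table, child column, parent table, parent column)
    ("MARC", "MATNR", "MARA", "MATNR"),
    ("MARC", "WERKS", "T001W", "WERKS"),
]


def derive_join_plan(root, foreign_keys, descriptions):
    """Generate join clauses for `root` by following its foreign keys."""
    plan = []
    for child, child_col, parent, parent_col in foreign_keys:
        if child == root:
            plan.append(f"JOIN {parent} ON {child}.{child_col} = {parent}.{parent_col}"
                        f" -- {descriptions[parent]}")
    return plan


plan = derive_join_plan("MARC", foreign_keys, tables)
```

Because the joins come from metadata, a customized Z table with its own foreign keys would flow through the same function without a hand-written mapping.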

So even if it gives us a starting set of pipelines, we can always expand on them; we're never given a black-box output that we can't change. That's an example of how, for common systems like CRMs and ERPs, we're investing in time to value for our customers: we don't want you to have to redo all those integrations if it's not required. All right.

So we've talked a lot about the first mile: getting data into the ontology. Now let's talk about the ontology itself in a little more depth. I'm going to go into one of these objects and pop the hood on what's actually happening. To do that, I'll jump into our ontology management application. This is where a data governance team member, or a part of the business working with them, might get involved and say, "OK, we've done all the integration work; now let's decide how this is going to appear to end users." For the plant object we've been working with: is it normally visible, hidden, or prominent? Is it something we're experimenting on, or an active object that people should be using daily in different workflows? Here we define, literally, how every element of those golden datasets we produced maps into object form. How are geospatial properties coming in? What are the API names and IDs for the different properties? We're going to use this with all of our different tools across many different architectures, and you'll see all of this information rolled up here, including link types and usage information. On the sidebar, we even have ways to apply additional security, such as restricted views.
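The mapping described above, from a golden dataset's columns to an object type with API names, status, and visibility, can be sketched as plain data. The classes and field names here are hypothetical illustrations of the concepts, not Foundry's ontology API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PropertyMapping:
    column: str          # column in the backing "golden" dataset
    api_name: str        # name applications and APIs use for the property
    display_name: str    # label shown to end users


@dataclass(frozen=True)
class ObjectType:
    api_name: str
    primary_key: str
    status: str          # e.g. "experimental" or "active"
    visibility: str      # e.g. "normal", "prominent", or "hidden"
    properties: tuple

    def to_object(self, row):
        """Turn one dataset row into an object keyed by property API names."""
        return {p.api_name: row[p.column] for p in self.properties}


plant_type = ObjectType(
    api_name="Plant",
    primary_key="plantId",
    status="active",
    visibility="prominent",
    properties=(
        PropertyMapping("plant_id", "plantId", "Plant ID"),
        PropertyMapping("plant_name", "name", "Name"),
        PropertyMapping("geo_point", "location", "Location"),
    ),
)
plant = plant_type.to_object(
    {"plant_id": "P100", "plant_name": "Houston Plant", "geo_point": "29.76,-95.37"}
)
```

Separating the dataset column name from the API name lets the pipeline change its column names without breaking the applications built on the object.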

I can do that here as well. I can also prime these objects for different types of workflows. For example, this plant object might primarily be used in simulation-based or geospatial workflows. We can preset some metadata here, which we could always set manually ourselves, that gets us to time to value faster.

Let's get these objects up and running so we can start to work with them. The idea is that you do a little configuration work here, which can be templatized to a large degree, and business users can then dive in and immediately make use of this data in a host of out-of-the-box Foundry applications. So let's look at some of the applications that come to life for a business user, somebody non-technical, who probably isn't starting their journey in the data lineage application, the coding environments, or the ontology management interface. They're probably starting in an application like Object Explorer, a point-and-click way to browse information in the ontology that should feel familiar to folks who search for information or use web applications day in and day out. You'll see that I have a lot of different objects surfaced to me in Object Explorer.

I've got aircraft, delays, and flights from my aviation demo, and some marketing objects called interactions, campaigns, offers, and so on. Searching is as simple as typing the type of object I'm looking for or any individual object property. In this case, I want to look at the plants we saw before, and this launches me into a pre-configured exploration so I can start to explore this data.

It's a very simple set of visualizations. It shows me where the plants are geospatially, based on the geo data we attached, along with some roll-up statistics like 30 days of inventory, production capacity, and so on. If I want a 360-degree view of one of these plants, I have a list here to dive in and look at this information at a higher level.

The idea is that it should feel familiar to folks who have worked with business intelligence or visualization software, and roll up all the information coming from all those different data sources into a single view. I can see the geospatial information, the plant's address, some unstructured data like a schematic, maybe some risk factors or material information coming in from the ERP and EMS systems, and even timelines. These 360 views can look very different depending on the user type or the business unit. You might imagine that certain users want to see IoT or video information embedded here, while others want to see financial information, for more of a CFO-style use.

So you can assemble different lenses or views on the same types of objects. The power of Object Explorer is that it lets people traverse the different objects in a completely security-compliant way, at a scale of millions or billions of objects, in a way that makes sense and isn't overwhelming, too granular, or unfamiliar for folks used to more business-centric tooling. It's important to realize, though, that this is just one view into the data: it's point-and-click and based on the object paradigm.

If I'm a more technical user, like an engineer, or an analyst who is used to working with time series data, I might use a sibling application of Object Explorer called Quiver. Quiver lets me chart time-dependent data, such as streaming data coming in in real time in an oil and gas or utilities context. As a user of an application like this, I'm probably thinking not just in terms of point-and-click, but in terms of formulas, integrals, interpolation, and scatter plots. And I can actually derive new data here.

I can do that just through point-and-click. I can say: give me the sum of these two series as another series. Or: show me the ongoing differential between these two pressures as a new pressure series. I can even do more sophisticated things, like searching across these series for any time the difference in values exceeds a certain threshold, or doing a point-and-click training of a first-approximation machine learning model. For example, as an engineer, I might build a classifier and tinker with it here.
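The derived-series operations described above (a sum, a differential, and a threshold search) can be sketched in a few lines. This assumes both series are lists of `(timestamp, value)` pairs on the same time grid, so no interpolation is needed; the helper names and sample values are hypothetical and are not Quiver's functions or real sensor data.

```python
def combine(a, b, op):
    """Point-wise combination of two aligned series, producing a new series."""
    if [t for t, _ in a] != [t for t, _ in b]:
        raise ValueError("series must share timestamps")
    return [(t, op(x, y)) for (t, x), (_, y) in zip(a, b)]


def threshold_events(series, threshold):
    """Timestamps where the value's magnitude exceeds the threshold."""
    return [t for t, v in series if abs(v) > threshold]


# Made-up pressure readings on a shared time grid.
upstream = [(0, 100.0), (1, 102.0), (2, 110.0), (3, 101.0)]
downstream = [(0, 98.0), (1, 99.5), (2, 100.0), (3, 99.0)]

total = combine(upstream, downstream, lambda x, y: x + y)
differential = combine(upstream, downstream, lambda x, y: x - y)
alerts = threshold_events(differential, 5.0)   # only t=2 has a gap above 5.0
```

Each operation returns a new series of the same shape, which is what lets derived series be chained or plotted alongside the originals.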

Then I want to collaborate with a data scientist on my team, so I can export my first approximation to Python code, with full library semantics for interacting with this time series data in Python. Again, the power here is that although it looks technical and custom, at the end of the day I'm just interacting with the objects in my ontology that we saw before, so I can browse for more objects and drop them onto these plots.

So that's another view into the ontology we built, served to another type of user. If I'm thinking geospatially, rather than IoT-centric or object-centric, I might use an application like the Foundry map, where I'm looking at all my plants and distribution centers and can toggle layers on and off. I might say, for this particular distribution center, show me all the customers connected to it in a graph-oriented way. It visualizes that here and lets me explore the flows, supply, or logistics information that pertains to the dependencies between different objects. This is another common view, again looking at the same ontology through a different lens.
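The "show me all customers connected to this distribution center" query is a link traversal over the ontology. Here is a minimal sketch, assuming links are stored as hypothetical `(link type, source, target)` triples; the object IDs and link-type names are made up, and this is not the Foundry map's implementation.

```python
from collections import defaultdict

# Hypothetical link instances: (link type, source object, target object).
links = [
    ("serves", "DC-EAST", "CUST-1"),
    ("serves", "DC-EAST", "CUST-2"),
    ("serves", "DC-WEST", "CUST-3"),
    ("supplies", "PLANT-100", "DC-EAST"),
]


def build_index(links):
    """Index links by (source object, link type) for fast one-hop lookups."""
    index = defaultdict(list)
    for link_type, src, dst in links:
        index[(src, link_type)].append(dst)
    return index


def neighbors(index, obj, link_type):
    """Objects reachable from `obj` over one hop of `link_type`."""
    return index.get((obj, link_type), [])


index = build_index(links)
dc_customers = neighbors(index, "DC-EAST", "serves")
# Two hops: which customers depend on PLANT-100 through its distribution centers?
plant_customers = [c for dc in neighbors(index, "PLANT-100", "supplies")
                   for c in neighbors(index, dc, "serves")]
```

The two-hop query is the kind of dependency question the map view answers visually: a disruption at `PLANT-100` reaches `CUST-1` and `CUST-2` through `DC-EAST`.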

This hopefully gives you an idea that if you do the work of rapidly integrating data from different sources, data lakes, or data warehouses, layering in health checks, and having security built in, you can quickly assemble this semantic model, which opens the door to immediate access and exploration for different types of users, whether in Object Explorer, in Quiver, or in a map-based application. That's the journey, in a very short form, of the first mile of the platform: data and ontology. Now, when we think about how digital twins, modeling, and data science come into this, it gets really exciting.

The first thing I'll say before we go into the data science part of Foundry is that if you ask four or five data scientists inside Palantir what tools they love to use, you'll get 10 different answers. People love to use Foundry's built-in machine learning and authoring capabilities, but they also love DataRobot, Dataiku, SageMaker, Azure ML, you name it. So we've built this part of the stack with a philosophy of "build anywhere," and then, when you're ready to deploy into operational applications, we give you a streamlined way of doing that. As an example of how I might build a model end-to-end using Foundry's existing data science tooling, I'm going to switch personas again. We talked about the data engineering persona and some of the business personas; now let's think about the data science persona. I'm now in an environment that again looks graph-like, or lineage-like, but is intended to feel very familiar to those who have used other data science tooling. If I pop open one of the steps in my model and zoom out, you'll see concepts like training sets and feature creation, all the way back to the objects I'm pulling data from. Every one of these nodes is a PySpark-based transformation step; I could also be using pandas or intermixing R or Spark SQL. The joke we have internally is that the most interesting thing, off the bat, for data scientists is the import data button: "Hey, you can get clean, consistent access to the data you've built into the ontology, or anywhere else in Foundry."

As a data scientist, I don't spend 80 percent of my time rebuilding these data pipelines myself. I can tap into the good work of my DataOps or data engineering colleagues. And if anything goes wrong with those data pipelines, like the ones we saw before, I'm alerted immediately because the health information flows down to me as well. So I have a stable, rich, healthy foundation to build on, and I can use all the packages and techniques I'm familiar with to build models end-to-end, with batch or live inference endpoints, and then plug them directly into operational workflows. Of course, I can make use of all the packages I would expect: we can mirror repositories like conda-forge or your own package managers. We have the added benefit that you can set up custom profiles with different compute profiles associated with them, and if you have particularly sensitive or license-restricted libraries that you want only certain users to be able to use, we can enforce that with the same security perimeter that enforces the data and ontology security we saw before. That lets people make secure use of commercial libraries as well.

So the idea is: build here, with what we think is a great, streamlined set of tooling, or connect in the same way these tools connect. You can connect DataRobot, Dataiku, and the SageMakers of the world directly to the ontology through open APIs or the Python clients we provide, and build your models in those environments. You can connect via REST, via JDBC, or via direct file access.
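The restricted-library idea above can be sketched as an environment profile that carries both a package set and the markings a user must hold to use it. Everything here is hypothetical: `EnvironmentProfile`, `resolve_environment`, and the `commercial_solver` package are illustrative names, not Foundry's profile configuration or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    name: str
    cpu: int
    memory_gb: int
    packages: frozenset            # packages this profile makes available
    required_markings: frozenset   # who may use this profile


def resolve_environment(profile, requested_packages, user_markings):
    """Return the packages a user may install, enforcing the profile's markings."""
    if not profile.required_markings <= set(user_markings):
        raise PermissionError(f"user may not use profile {profile.name!r}")
    missing = set(requested_packages) - profile.packages
    if missing:
        raise ValueError(f"not available in this profile: {sorted(missing)}")
    return sorted(requested_packages)


licensed = EnvironmentProfile(
    "licensed-solver", cpu=8, memory_gb=64,
    packages=frozenset({"numpy", "pandas", "commercial_solver"}),   # hypothetical
    required_markings=frozenset({"solver-license"}),
)
allowed = resolve_environment(licensed, ["commercial_solver", "numpy"], {"solver-license"})
```

Checking markings before resolving packages mirrors the point in the transcript: library access rides on the same kind of permission check as data access.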

We have a Foundry version of Hadoop FS, so it's very streamlined to get data in and out of Foundry, and not just data, but also lineage information, the semantic representations, and all the versioning information; all of that is available through the APIs. So the idea is that you build your models, some here and some in other tools, and then register them and use them in a variety of end-user applications.

What I'm going to do now is open up my model objectives library. This is mission control for all the models I've been working with, and have access to, in this instance of Foundry. A lot of this paradigm was informed by our work in the defense space, where there's a three-part approach to deploying operational AI. First, I define the modeling problem: not a particular model, but the objective I'm actually trying to achieve.

Then I'm probably testing dozens of models, if not more, against that objective. And then I'm figuring out how to deploy the winning models into production. So I'm going to open up a few of the objectives we have here so we can take a look at what's actually going on. The critical thing here is that this does not necessarily mean deployed in Foundry. Several of these models live in other environments, in Azure, in AWS, and in managed services like DataRobot.

So we're just registering these models here, and we get a holistic way to compare them as we decide which ones will be deployed into production. If I open up my customer demand forecast objective, you'll see that at the top level it shows me 360-degree information about the model: what are we trying to do with this, and what's the approach, broadly defined? There's information about the latest releases, the relevant files that were used to train the models and that were common to every model we tested, and even lineage information about the applications that will depend on this model. And, as we'll see a bit later, there's also information about how we're chaining this model with other models inside simulation environments. All of that is rolled up here, and I can go into the sidebar and look at all the models that have been proposed for deployment against this objective right now.

You'll see some from my colleague Matt and some from my colleague Andrew, and I can always look back in time. For this lasso model, for example, we can see the details about where it was built, when it was built, and, if it was rejected, why. So it's the full life cycle around the approval process. Just as we treat data like code, we're also treating models like code, including the metadata around the approval process. And if I switch over to another objective here, say my campaign model, I can go into the comparisons tab and say: for the different models we're assessing, we rejected one of them, but let's still add it to the comparison.
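The objective-centric bookkeeping described here (one objective, many candidate models, each with provenance and an approval status that survives rejection) can be sketched as a small registry. All class and field names are hypothetical, and the second candidate model is invented for illustration; this is not the Foundry modeling objectives API.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Submission:
    """One candidate model proposed against an objective (hypothetical schema)."""
    model_name: str
    author: str
    built_in: str            # where it was built, e.g. "Foundry" or "AWS SageMaker"
    built_on: date
    status: Status = Status.PROPOSED
    rejection_reason: Optional[str] = None


@dataclass
class ModelingObjective:
    """The problem definition; candidate models come and go, the objective stays."""
    name: str
    submissions: List[Submission] = field(default_factory=list)

    def submit(self, submission: Submission) -> None:
        self.submissions.append(submission)

    def get(self, model_name: str) -> Submission:
        for s in self.submissions:
            if s.model_name == model_name:
                return s
        raise KeyError(model_name)

    def reject(self, model_name: str, reason: str) -> None:
        # Rejected models stay in the history, together with the reason.
        s = self.get(model_name)
        s.status = Status.REJECTED
        s.rejection_reason = reason

    def approve(self, model_name: str) -> None:
        self.get(model_name).status = Status.APPROVED

    def proposed(self) -> List[Submission]:
        return [s for s in self.submissions if s.status is Status.PROPOSED]
```

Keeping rejected submissions rather than deleting them is what makes it possible to look back in time and still pull a rejected model into a comparison.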

We have a random forest model, an SVM model, and a regression model, and I want to compare the key criteria across these models: things like the ROC curves, and how the core dimensions of evaluation perform across the different models. You can easily customize what these core dimensions are, and you can even segment out things that are sensitive, such as personally identifiable information or anything else that has to be locked down.
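For the ROC comparison mentioned here, the area under the ROC curve (AUC) is a common single-number summary. A minimal pure-Python sketch, using the rank (Mann-Whitney) interpretation of AUC: the probability that a randomly chosen positive example scores higher than a randomly chosen negative one. The pairwise loop is quadratic and chosen for clarity; the labels and scores in the usage below are toy values, not results from the demo.

```python
from typing import Dict, List, Sequence, Tuple


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(random positive outscores random negative); ties count as half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def compare_models(labels: Sequence[int],
                   model_scores: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Rank every candidate (rejected ones included) by AUC on the same labels."""
    results = [(name, roc_auc(labels, scores)) for name, scores in model_scores.items()]
    return sorted(results, key=lambda r: r[1], reverse=True)


# Toy usage: the same held-out labels scored by three hypothetical candidates.
ranking = compare_models(
    [0, 0, 1, 1],
    {"random forest": [0.1, 0.2, 0.8, 0.9],
     "SVM": [0.1, 0.4, 0.35, 0.8],
     "regression": [0.5, 0.5, 0.5, 0.5]},
)
```

Scoring every candidate against the same labels is what makes the comparison fair; in practice a library routine such as scikit-learn's `roc_auc_score` computes the same quantity more efficiently.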

You can cordon off that feature comparison, or that dimensional comparison, inside a separate part of the objective that is only open to certain people or to people with certain permissions. So all this information is here, and once we've made our selection and tagged the model as ready for production, the key step, the coronation, is that we bind this model to the ontology. This is key because it gives us a type system for the models.

At the bottom here, with the modeling objective API, you see that the winning model, which in this case is a SageMaker model, takes two properties from our supply chain ontology, which we've been working with this entire time, as its inputs: the cost and the price. These go into a live inference endpoint. Then every time the endpoint returns values, we take those three values, demand, capacity, and inventory, and map them back onto the objects in our ontology as time-dependent properties or, in this case, model-dependent properties that could also be time dependent.

So the idea is that we have configured the ontology to be aware that it will be receiving values from a model. This is huge, because with this binding in place, I can now use this model: it has competed, it has won, and it's now bound to the ontology.
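The binding described here, where model inputs are read from ontology properties and model outputs are written back as model-dependent properties, can be sketched as a typed mapping. This is a hypothetical illustration: `OntologyObject`, `ModelBinding`, the `forecast_*` property names, and `toy_endpoint` are invented, and `toy_endpoint` is arbitrary placeholder arithmetic standing in for the SageMaker inference endpoint, not a real demand model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Mapping


@dataclass
class OntologyObject:
    """Hypothetical ontology object with source properties and model-written ones."""
    object_type: str
    primary_key: str
    properties: Dict[str, float]
    model_properties: Dict[str, float] = field(default_factory=dict)


@dataclass(frozen=True)
class ModelBinding:
    """Declares which ontology properties feed the model and where outputs land."""
    model_name: str
    input_map: Mapping[str, str]    # model input name -> ontology property
    output_map: Mapping[str, str]   # model output name -> ontology property
    predict: Callable[[Dict[str, float]], Dict[str, float]]

    def apply(self, obj: OntologyObject) -> OntologyObject:
        inputs = {arg: obj.properties[prop] for arg, prop in self.input_map.items()}
        outputs = self.predict(inputs)
        for out, prop in self.output_map.items():
            obj.model_properties[prop] = outputs[out]
        return obj


def toy_endpoint(inputs: Dict[str, float]) -> Dict[str, float]:
    # Placeholder arithmetic only; a real binding would call an inference endpoint.
    margin = inputs["price"] - inputs["cost"]
    return {"demand": 1000 - 2 * inputs["price"],
            "capacity": 800.0,
            "inventory": 500.0 + margin}


binding = ModelBinding(
    model_name="demand-forecast",
    input_map={"cost": "cost", "price": "price"},
    output_map={"demand": "forecast_demand",
                "capacity": "forecast_capacity",
                "inventory": "forecast_inventory"},
    predict=toy_endpoint,
)
```

Because the mapping lives in the binding rather than in each application, every application that reads `forecast_demand` sees the same model's output, and swapping the winning model means changing only `predict`.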

Through that ontology binding, I can now insert the model into not just one application or five applications, but dozens of different applications, and have it appear seamlessly to end users. As an example of how that comes together, and this takes us to our final mile, operational applications, let's open up a supply chain control tower example and look at how the data and the models weave together in an end-user application.

What we're opening up here is an example of an application that might be the starting point for many users in the enterprise. This is my top-level control tower. It shows me some roll-up statistics at the top, and I can see different objects here on a map.

As an operational user, I probably don't care what an ontology is or about all the things we've been through. I just know that I have a job to do. So I see that we have a plant here in Michigan that I need to look at: its production capacity is starting to dip, and I want to know why.

Maybe I received an alert about this. That alert could have come in via email, via text, or through any integration with a messaging system. I can go into my alerts tab now and look at a prioritized set of alerts that I can start to action. You'll see there are all sorts of things coming in here, and maybe I just want to look at the high-priority ones.

So I'm going to filter on the left-hand side and look at one of these alerts, a business interruption related to COVID. You'll see that this pulls together a lot of information from everything we've covered so far. This alert may have been generated by a model pipeline. It pulls in data from the supplier and the plant, which are linked objects in my ontology.

It shows me the impacted plant, and it also shows recommended actions, which in this case come from a model I've built. The critical thing here is that we don't stop at looking at this alert and saying, "Well, OK, looks like we have a problem here." We want to be able to take action now, to actually do something in response. You'll see that we've pre-assembled different actions for these users: canceling an order, partially fulfilling an order, or more advanced actions like reallocation, which we'll look at in just a second. If I hit this cancel customer order button, you'll see that it gives me a preconfigured action I can take on this particular alert or a related alert, and I can submit it in a way that is nondestructively captured back into the ontology. And you might be asking, well, how does this work? I don't want any user to be able to take any action on any part of the ontology and start updating things. That would be chaos.

We can't have people modifying values willy-nilly. It turns out that actions are part of the ontology at the core level. If we go back to the ontology configuration interface we saw before, when we were bringing our plant together with all of its connections, and return to the main page, we'll see there are object types, of course, which we're defining. There are link types, which we've seen all throughout. But the critical third element is actions. This is the dynamism that makes the ontology truly special: we're not just talking about semantics, we're also talking about kinetics, the kinds of actions that can modify the state of the ontology in a compliant manner.

If I search for that cancel customer order action we saw before, for instance, we'll see it's in our library of actions. If I open it up, you'll see all sorts of related information, as with other parts of the ontology: the API name for this cancel customer order action, the actual ID we can use to interact with it programmatically, and the actual logic used to define it. We see which objects it pertains to, which properties it will update, which parameters it takes, and even which validations it uses. All of this is configured here through point-and-click, but it could also be configured through a more robust, code-based interface, using the authoring tools we saw before in the data ops part of the stack. With this action defined, every time I want to cancel a customer order, I can do so in a reliable, repeatable, auditable way. That means application builders aren't each implementing their own version of what it means to cancel a customer order. They simply use this action from the library.

The action knows how to modify the relevant objects, and it knows what precautions to take. If I'm a junior employee, for example, when I click cancel customer order it's aware of who I am, and it has to get approval from my manager before the change is confirmed. The last step might be a webhook or some other write-back routine that synchronizes the change back to an ERP or MES system. That's a fairly complex flow for an action, and it's logic I don't want the application builder to have to think about. I just want them to embed that logic here as an action and let Foundry, at the system level, handle all the orchestration that follows.
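The action semantics described in these two paragraphs (declared parameters, validations, property edits applied nondestructively, approval routing for junior users, and a write-back step) can be sketched as follows. Everything here is hypothetical: `ActionType`, `ActionService`, the `cancel-customer-order` API name, the user names, and the `write_back` callback that stands in for an ERP or MES sync are illustrative, not Foundry's actions API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Obj = Dict[str, Any]
Check = Callable[[Obj, Dict[str, Any]], bool]


@dataclass(frozen=True)
class ActionType:
    """Hypothetical action definition: parameters, validations, and property edits."""
    api_name: str
    parameters: Tuple[str, ...]
    validations: Tuple[Tuple[str, Check], ...]
    edits: Callable[[Obj, Dict[str, Any]], Obj]

    def preview(self, obj: Obj, params: Dict[str, Any]) -> Obj:
        missing = [p for p in self.parameters if p not in params]
        if missing:
            raise ValueError(f"missing parameters: {missing}")
        for message, check in self.validations:
            if not check(obj, params):
                raise ValueError(message)
        # Nondestructive: return a new version and leave the original untouched.
        return {**obj, **self.edits(obj, params)}


@dataclass
class User:
    name: str
    is_junior: bool
    manager: str


@dataclass
class ActionService:
    """Routes submissions: junior users need manager approval, then write-back runs."""
    write_back: Callable[[Obj], None]   # stand-in for an ERP/MES sync webhook
    pending: List[Tuple[str, str, str, Obj]] = field(default_factory=list)
    log: List[Tuple[str, str, Obj]] = field(default_factory=list)   # audit trail

    def submit(self, action: ActionType, user: User, obj: Obj, params: Dict[str, Any]) -> str:
        new_version = action.preview(obj, params)   # validate before queuing anything
        if user.is_junior:
            self.pending.append((user.manager, action.api_name, user.name, new_version))
            return "pending_approval"
        self._commit(action.api_name, user.name, new_version)
        return "applied"

    def approve(self, approver: str, index: int = 0) -> None:
        expected, action_name, submitter, new_version = self.pending[index]
        if approver != expected:
            raise PermissionError(f"{approver} cannot approve this request")
        del self.pending[index]
        self._commit(action_name, submitter, new_version)

    def _commit(self, action_name: str, submitter: str, new_version: Obj) -> None:
        self.log.append((action_name, submitter, new_version))
        self.write_back(new_version)


cancel_customer_order = ActionType(
    api_name="cancel-customer-order",   # hypothetical API name
    parameters=("reason",),
    validations=(("order is already cancelled", lambda o, p: o["status"] != "cancelled"),),
    edits=lambda o, p: {"status": "cancelled", "cancellation_reason": p["reason"]},
)
```

Application builders only call `submit`; validation, approval, audit logging, and write-back all live behind it, which mirrors the division of labor the speaker describes.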

We've alluded to this, but what it means for the application builder is that even though the applications we've been looking at in this supply chain control tower example look very custom, they're actually all built using a low-code application builder, and in this case a no-code application builder. If I want to add something at the top, for instance, I can just point-and-click my way into adding a new widget in this view, such as an object table. It's super simple to start wiring different things into these views, because I'm not pulling data into a siloed database and doing things in a one-off manner. I'm connected directly to the ontology, so I can easily open up a set of objects we've been working with before, like the plants, pull them in here, and see how they get bound to the widgets we've set up.

So here, I'll type in our MSC plant, and it will pull in all that data in a way that is aware of who I am and my security profile. I can add all the properties here, and just like that, I've added a new widget. In the same way that I can add data, I can add actions as well. What this means is that, as an application developer, I no longer have to think about the full stack of considerations myself every time I want to deploy a new operational workflow or application. I don't have to think about setting up a new hosting environment: the databases, the indexing, the compute, the storage, including storage that may not be a database.
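The point that the widget pulls in data in a way that is aware of the user's security profile amounts to filtering objects before they are rendered. A minimal sketch, assuming a simple marking-based model in which a user sees an object only if they hold every marking on it; the marking names, plant records, and `object_table_rows` function are hypothetical, not how Foundry implements its permissions.

```python
from typing import Any, Dict, FrozenSet, List, Sequence

# Hypothetical object shape: {"properties": {...}, "markings": frozenset(...)}
PlantObject = Dict[str, Any]


def object_table_rows(objects: Sequence[PlantObject], columns: Sequence[str],
                      user_markings: FrozenSet[str]) -> List[Dict[str, Any]]:
    """Rows for a table widget: visible objects only, projected onto chosen columns."""
    rows = []
    for obj in objects:
        # Subset check: the user must hold every marking on the object.
        if obj["markings"] <= user_markings:
            rows.append({col: obj["properties"].get(col) for col in columns})
    return rows


# Toy records; the values are illustrative only.
plants = [
    {"properties": {"name": "Michigan plant", "capacity_utilization": 0.72},
     "markings": frozenset()},
    {"properties": {"name": "Restricted plant", "capacity_utilization": 0.9},
     "markings": frozenset({"export-controlled"})},
]
```

The widget itself never decides what the user may see; it only receives rows that have already passed the check, which is why the same widget can be reused safely across audiences.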

How am I capturing feedback from users? How am I binding models to the different application states? How am I capturing feedback from those models? All of that is taken care of below the level of the application, because of what we saw in the data integration, in the setup of the ontology and its actions, and in the wiring of models into objectives and their binding to the ontology. That means you can build fully featured compound applications in a couple of hours or a day or two, as opposed to spending months or quarters of effort. So we think this is a really powerful part of the platform, and this is just one example of where the operational capability gets surfaced. What we've been looking at here is one operational application builder, called Workshop. There are other application builders in Foundry as well.

There's another one called Slate, a sibling application. And the key thing, of course, is that you can build your own custom applications as well, in an object-oriented manner or in a tabular, more classical manner. There's a full set of documentation not just on interacting with existing applications, but on building your own applications using the Foundry APIs and every part of what we've talked about throughout this demo.

Awesome. Now I have one last thing to show you, and then we'll sign off for this demo: how these things start to come together in a more strategic, digital twin paradigm. What I'm opening up here is one final application in Foundry that we call Vertex.

Vertex is what you might think of as the graph application in Foundry. It shows me all the relationships between my different objects. If you had looked at this when we started, you might have thought it looked kind of nuts; there's so much going on here. But now we know exactly what this is: it's showing me the connections I've built into my ontology, where every one of these object types has a lot of data integrated into it, and where we've also overlaid the different machine learning models and data science approaches. This example is loosely based, anonymized of course, on one of our medical supplier customers and the work they did during the early phases of COVID, when they needed to map out the full flow of how they were building and shipping ventilators: from their upstream suppliers, to intermediate goods, to their plants, all the way down to their distribution centers and their end customers.

So what this shows is a bird's-eye view of the entire value chain, and I can scrub backwards and forwards in time and say: show me where alerts are firing, where inventory is reaching dangerous levels, where we're experiencing fundamental dislocations. I can scrub through different points in time to see where things were in the danger zone. That's useful, of course, for getting visibility into the whole connected chain, which was previously spread across dozens of different data systems and models. But the more important thing is that I now need to update my strategy and actually operate in this very volatile environment, which is what it was last year.

There were simultaneous supply and demand shocks, and every pre-built model was thrown out the window. So they needed to be able to simulate the different scenarios that were exigent day in and day out. What would happen if one of our suppliers went completely offline because of the pandemic? What would happen if one of our medical center customers had 10 times the demand for ventilators they normally have? How would we meet these requirements, given how we're currently doing allocation and our current supply tactics? What we can open up here is a simulation tab for running those scenarios directly on top of sandbox versions of your world, of the full ontology. Because we have the data and the models connected, we can simulate directly here what would happen if that supplier went offline, for instance, and run these simulations as contained case studies.

In this case, I can make a very trivial change: let's just update the valve price per unit. What if it dropped to four hundred? Let's now simulate across the entire chain what effect that would have on every dependent property modeled in the ontology. That's a very trivial example. We can have this modified scenario run continuously as new data comes in, and continuously assess its impact. As you can imagine, it gets much more complicated and sophisticated when you're testing compound changes to parameters across not just one model but potentially many. You can chain different models together and run a compound simulation across the entire state, the entire value chain.
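The valve-price scenario can be sketched as recomputing a dependency graph of derived properties on a sandbox copy of the inputs. This is a simplified, hypothetical model: the base valve price of 450, the cost structure, and every property name are assumptions (the demo only states the new price of four hundred), and each derived function stands in for what could be a chained model.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, Mapping, Tuple

# Derived property -> (names it depends on, function computing it from them).
Derived = Mapping[str, Tuple[Tuple[str, ...], Callable[..., float]]]


def evaluate(inputs: Mapping[str, float], derived: Derived) -> Dict[str, float]:
    """Compute every derived property in dependency order."""
    graph = {name: set(deps) for name, (deps, _) in derived.items()}
    values = dict(inputs)
    for name in TopologicalSorter(graph).static_order():
        if name in derived:  # plain inputs need no computation
            deps, fn = derived[name]
            values[name] = fn(*(values[d] for d in deps))
    return values


def simulate(base: Mapping[str, float], derived: Derived,
             overrides: Mapping[str, float]) -> Dict[str, float]:
    """Run a scenario on a sandbox copy; the base inputs are never modified."""
    return evaluate({**base, **overrides}, derived)


# Toy two-stage chain; either function could equally be a bound model.
BASE = {"valve_price_per_unit": 450, "valves_per_ventilator": 2,
        "other_cost_per_ventilator": 3000, "ventilators_planned": 100}
DERIVED = {
    "ventilator_unit_cost": (("valves_per_ventilator", "valve_price_per_unit",
                              "other_cost_per_ventilator"),
                             lambda n, p, c: n * p + c),
    "total_production_cost": (("ventilator_unit_cost", "ventilators_planned"),
                              lambda u, q: u * q),
}
```

Ordering the computation topologically is what lets a single parameter change ripple through every dependent property, and chaining more models amounts to adding more entries to `DERIVED`.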

This, I think, is where we really see the progression of customers working with Foundry: they first work with point applications or starter workflows, but really it's about the connected operations paradigm that unfolds as the ontology is built up incrementally. As an example, in oil and gas, initially they were doing point optimizations and simulations for one part of a connected value chain: just the subsurface. They had one team working on the subsurface, integrating data, building models, and looking at just that element of what was going on.

But of course, it's part of a network. The subsurface connects to the topside, which connects to the flow lines, which connect to onshore. Each of those functions had its own siloed simulation, optimization, and data regime, and to really understand how to optimize production as a whole, they had to connect all of those parts of the organization into one ontology and connect all the different models into a single chained representation. Then they could run full-scale continuous simulations and begin to optimize production at full scale. They started working toward really connecting, or reconnecting, their operations. We see this again and again, whether it's industrials, finance, retail, or health care, you name it, and the North Star for us is helping our customers re-engineer and reintegrate their operations.

I know we've covered a lot today, so I won't keep yammering on, but I want to recap a bit of what we've been through and leave you with this. We started at the top with data and ontology: data coming together from many different sources, structured, IoT, unstructured, geospatial, you name it, through over 200 out-of-the-box data connectors that we've built for Foundry. The set is extensible, so you can build your own. All of those different pieces of data come together into a semantic representation that lets people instantly start to look at, interact with, and wield that data.

The second piece is how we bring modeling, data science, and linear programming into that same frame, so we can overlay intelligence onto that data and build out not just a semantic representation but, as we said before, a kinetic representation. How do things actually flow in a business process based on what the models dictate? How can you build models within Foundry, and also outside of Foundry, and then bind those models to the ontology? That lets you insert those models into operations in a way that allows both very robust, safe deployment of models and very rich feedback to model builders, who know exactly how users are using those models in different applications, including external applications that use the APIs, because of the data lineage, the model lineage, and all the bookkeeping that Foundry does. And finally, we talked about how this all comes together where 70 to 80 percent of Foundry users live: operational applications, which you can build using low-code, no-code, or pro-code application builders, and which can also be surfaced directly via the APIs if you want to build external applications. There's a lot more we can cover in follow-on sessions. We've only shown you a glimpse of how all these different tools come together in Foundry into a cohesive platform.

We've only shown you a glimpse of all the different interoperability patterns that exist in Foundry. We've only shown you a glimpse of how you can deploy workflows from an out-of-the-box workflow catalog to get started really quickly, whether you're doing an alerting workflow, an entity resolution workflow, a plant 360, or a resource allocation workflow, with all the pre-built templates we have for getting started. But we'll save a lot of that for another time. Thank you everybody for listening, and I hope we can talk to you soon.

2022-04-30 03:58