Announcing Form Recognizer: Create real value in your business processes by - BRK2002

Good morning, everybody, and welcome to our session announcing the Form Recognizer cognitive service. I am a program manager for Cognitive Services Computer Vision.

My name is Neta Haiby, and I'm a principal program manager on the applied AI team. Together, today we'll introduce you to our new service, the Form Recognizer cognitive service. This is the first of several sessions happening at Build about knowledge mining, so consider watching or attending the other sessions. They're all about knowledge mining and how you can extract insights from your content.

Azure AI is comprised of three main solutions. One is AI apps and agents, where you can create intelligent conversational AI and bots. Another is machine learning, for creating machine learning models and managing them. This session is part of the knowledge mining sessions, where you can create insights from your content. Knowledge mining is comprised of Azure Search and Form Recognizer, and this session will focus on Form Recognizer, the new service.

There are lots of forms everywhere, and every customer and every company has forms: invoices, receipts, tax forms, financial forms, bank forms, medical forms, application forms, construction forms, you name it. Forms are everywhere. And forms come in different types. Keys can be on the top, as you can see with the address; keys can be on the left; there are multiple tables and checkboxes. You can see multiple different types of forms: tables that come with headers and different lines, headers spread across lines, and complex forms like this one with lots of tables and condensed data. What everybody is trying to do is extract key-value pairs and tables out of these forms.

Today, most people are doing it either manually, so they have a big team of people taking these forms and keying in the keys, the values, and the tables, or they have built machine learning models for every key and value, or created templates to extract key-value pairs from these forms. This is time-consuming, it's prone to error if it's manual, it's not efficient, and it's a very long business process today.

There are a lot of challenges in extracting key-value pairs and tables from forms. As we saw before, there are lots of different types of forms. The document quality varies: they can be scanned, they can be images, they can be faxes, they can be text PDFs. They can be printed or handwritten. They can have complex tables with nested tables, merged cells, tables that span multiple rows, and a lot of tables in one form. So there is a lot of complexity in extracting the key-value pairs out of forms, and what everybody is trying to do is extract the data, the key-value pairs and the tables, from the forms.

For that reason, we're introducing today the Form Recognizer cognitive service, which easily extracts key-value pairs and tables out of forms. As you can see, it takes the forms and extracts the key-value pairs and the tables out of them. The input for Form Recognizer is forms, and the output is structured JSON of key-value pairs and tables, where you get the keys, the bounding boxes, the values, and the confidence per key-value pair and table. For each key we provide its value, the bounding box, and the confidence. The bounding boxes can be used to overlay the results back on the form, and the confidence can be used to decide what happens next: if it's a hundred percent confidence, you can immediately transfer it to your system; if it's lower confidence, you can put it into human validation.

Form Recognizer is comprised of two parts. One part is tailored to your forms: it's custom, you bring your forms, and you train a model that is customized to your forms. The other is a pre-built system, where we train the models, and we are starting with pre-built receipts today.
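The confidence-based handoff described above (transfer high-confidence results straight to your system, send the rest to human validation) can be sketched in a few lines of Python. This is only an illustration: the dictionary field names, the sample invoice number, and the `route_by_confidence` helper are assumptions made for the example, not the service's actual JSON schema or API.

```python
# Hypothetical output shape: the talk only says that each extracted pair
# carries a key, a value, a bounding box, and a confidence. The field names
# and the sample values below are assumed for illustration.
SAMPLE_PAIRS = [
    {"key": "Bill To", "value": "Contoso",
     "bounding_box": [50, 80, 210, 100], "confidence": 1.0},
    {"key": "Invoice Number", "value": "0000",
     "bounding_box": [50, 120, 230, 140], "confidence": 0.72},
]


def route_by_confidence(pairs, threshold=1.0):
    """Split extracted pairs into (auto_accept, needs_review).

    Pairs whose confidence is at or above the threshold can go straight to
    the downstream system; everything else is queued for human validation.
    """
    auto_accept, needs_review = [], []
    for pair in pairs:
        target = auto_accept if pair["confidence"] >= threshold else needs_review
        target.append(pair)
    return auto_accept, needs_review
```

Calling `route_by_confidence(SAMPLE_PAIRS)` with the default threshold keeps only the fully confident pair for automatic transfer; a business could lower the threshold to match its own rules.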

The custom Form Recognizer includes two steps. In step one, you train: you bring five sample forms or an empty form. There is no human labeling work. You bring five sample forms or the empty form, and you train the system on your forms to create your model, so the system is tailored to your forms and creates a model based on your forms and your data. It's available as a hosted managed service or as a container. In step two, once you're happy with your trained model, you analyze: you send your documents and you extract the structured key-value pairs and tables. There is no human labeling work; you just bring the five sample forms or the empty form and you train a model.

So there are two parts to the system. The first part is that you bring the five sample forms or the empty form and you train the model. When you train the model, the system discovers what the keys are and what the tables are, and associates values to keys and entries to tables. Once you're happy with your model, you can analyze your forms: you input your forms and get the extraction, the structured JSON of key-value pairs and tables from your data.

In the future we'll also introduce the ability to customize. The first release is fully unsupervised: there is no human labeling work, and everything is automatic. In the future we'll introduce the ability for human input, for example labeling, telling the system "yes, this is a key" or "this is not a key," and providing inputs to the system so it can improve if it missed something.

So how does the system work when you train it or when you analyze? The first thing we do is ingest the data. The input can be PDF, either text PDF or scanned PDF, or images, JPEG and PNG, and the system extracts all the text and all the information from the files. The second part is that we cluster. Once we've extracted all the text and all the information, we cluster based on type, so here we cluster every form type based on the structure and the fonts in the file and split the forms into two different types. Once we've clustered, we discover what the keys and the tables are.

For every cluster, we give it its keys and tables, and then we associate the values to the keys and the entries to the tables and extract the structured data out of your forms, including the bounding boxes and the confidence.

So let's see a demo of that. Give it one second for the computer to start.

As you can see, we have a form here, and the form consists of key-value pairs and tables. For everything you see here, the system was trained with five sample forms and then analyzed a form, so the output you see here is the analysis of the form. You can see that the system discovered the keys for this form: "Bill To," and you see the value here is Contoso, then phone number and invoice number. If we scroll down, we can see that it also discovered the keys that are here, like tax rate, sales, other, and total, and even the terms here at the bottom.

It also discovered a table, where you can see that it discovered the item number and all its values, the description, the quantity, the unit price, and the discount. Notice that the system always returns empty cells, so you can always reconstruct the table with the full number of rows. The output you get is a JSON output which includes the key, for example "Bill To"; its value, Contoso; the bounding box for the key and the value; and the confidence. You will also receive in the JSON output a table with an ID for the table, then the header of the table, "Item Number," and all its entries with their bounding boxes and confidence.

This is one type of form. Another type of form that we can see is a totally different type: it's a mortgage interest statement. The system discovered "1 Mortgage interest," and you can see it here, along with "2 Outstanding mortgage," mortgage origination date, and the recipient's/lender's TIN number.
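Because empty cells are always returned, a table in the output can be turned back into rows without guessing at missing positions. The following Python sketch shows the idea under an assumed, column-oriented shape (a table ID plus one header and one entries list per column); the field names and the `table_to_rows` helper are hypothetical and are not taken from the service's documented schema.

```python
# Hypothetical table shape, assumed for illustration: each column has a
# header and a list of entries, and empty cells are present as "".
SAMPLE_TABLE = {
    "id": "table_0",
    "columns": [
        {"header": "Item Number", "entries": ["A123", "B456", "C789"]},
        {"header": "Description", "entries": ["Widget", "", "Gasket"]},
        {"header": "Discount", "entries": ["", "", "5%"]},
    ],
}


def table_to_rows(table):
    """Return (headers, rows) for a column-oriented table.

    Relies on every column having the same number of entries, which holds
    when empty cells are returned rather than dropped.
    """
    headers = [column["header"] for column in table["columns"]]
    columns = [column["entries"] for column in table["columns"]]
    if len({len(entries) for entries in columns}) > 1:
        raise ValueError("columns have different lengths; cannot rebuild rows")
    # zip(*columns) transposes the column lists into row tuples.
    rows = [list(row) for row in zip(*columns)]
    return headers, rows
```

With the sample above, the second row keeps its two empty cells in place, so the row count and column alignment match the original form.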
The information and the output is always a JSON, output with. The key value pair as the key and, its. Value. Bounding, box and confidence, so. For example one mortgage interest, it's. A value bounding, box and confidence. Another. Example is a table. And. You can see on this table that the, headers span multiple rows, like employee ID and this some discovered, employee, ID and all its value name. And all its values, hourly. Wage and all, the values and the, output basically is at Jason where, it shows the table ID, the.

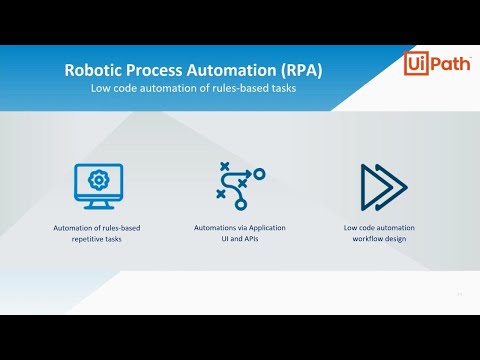

Date For the first row in bounding, box. Entries. Bounding, box and confidence. So, to show you how form, recognizer, is used in our PA system, robotic process automation system I would like to invite mark, vivillon. Ski. Sorry. About that. Mark. Vivillon ski a director. Of AI and. Document understanding, at UI path to. Show that excellent. Thank you mark thank you net I appreciate, it so good morning I'd like to share. With you a little bit about who you I path is if. You're unfamiliar, with us as an organization, real quick we are the fastest growing software company, in history, we, currently have over 2,100. Global enterprise, customers, who use our platform on a regular basis, to be able to automate their processes, from back-office to mid office to front office we. Have over 350, partners, in a very part rich partner, ecosystem, that allows our platform, to be as robust as it is and enable, a lot of new capabilities, on the platform, and, we, have over 2,500 employees inside, the organization, we're growing a very steady clip I just presented this I did not too long ago and these numbers change quite dramatically, we. Also have secured a billion dollars in funding we have a valuation, of seven billion dollars in the market today and, we're backed by companies like Excel kleiner, perkins Capital, G many, of our great partners who have entrusted. Their. Money with us as we continue to expand our business so. Beyond the company what do we do as a business why do our customers actually make investments, in our platform we. Are all about robotic, process automation, so, our PA is a category, and it really talks about or, it enables. Us capability, for software robots, to basically perform the tasks that humans do inside. An organization, on a highly, repeatable, basis, and at, scale so. What. We do is we then take these tasks, and we enable. Them through scripts. That the robots then process, day, and night 365, days a year and. 
We do that integration at the UI layer, hence UiPath, so we can actually integrate applications that users use on the screen and move the data from one application, like SAP, into Excel, or from Excel into another back-end system. A lot of that work that humans are performing can then generally be automated. The other key aspect of the platform is its low-code design. We have a lot of business users or technical people who begin working with the platform (you can download the bits and start working with it in your environment), and they discover that it's relatively easy to start building these workflows, then ultimately promote them inside the organization and get them to the stage where they can be running in production.

One of the beautiful things that's happening between these two worlds, robotic process automation and AI, is that they're coming together quite nicely. I talked about how RPA is really about emulating human task activity that's highly repetitive in nature. With AI capabilities, we're now starting to enter a new era of smart robots, where, with cognitive skills enabled through AI and machine learning, they can start to do more cognitive tasks like scoring, forecasting, or predicting some type of business outcome, incorporating these machine learning models and artificial intelligence capabilities into the workflow. That's a big pivotal point within the industry and a big pivotal point for our platform overall.

As Neta mentioned, we're looking at the forms processing service, the Form Recognizer service specifically. It's all about capturing the data that's locked up in your documents, being able to extract it quickly with a high level of efficiency and quality, and then making that data available for business insights and further operational updates to back-end systems. There are a number of steps involved with that, and this is really where the value of RPA and AI come together. We can use a robot to automate the ingestion process: a robot can, for example, look at an email that's coming inbound, see that there's an attachment like an invoice or a receipt, extract the invoice or receipt, automatically start the preprocessing activities for that document, make a call to the Form Recognizer service to extract the data, and get that return JSON payload with the key-value pair information, the table-level details, and the confidence information.
In addition to that workflow, you might want to have things like document classifiers. When we look at documents in an enterprise setting, we can have financial documents, benefits-based documents, HR documents, and legal documents, so we have classifiers that can detect, based upon some of the keywords in those documents, what type of document we're actually working with. In addition to that, you might want to leverage some more customized, fit-for-purpose machine learning models that are specifically designed for a particular document type. Maybe there is some data in the document you want to use for risk scoring: a vendor that's sending parts and supplies to another country where you haven't seen that type of behavior or activity before, which might be some potential risk or leakage, would be an example of that.

We also incorporate the capability to allow a human in the loop. Naturally, a robot can automate a lot of these processes, but what happens if that extraction isn't quite a hundred percent and we still need a human to see the extraction output and then validate it or make refinements to it? That's really where the human-in-the-loop process comes in. Finally, the robot can take that data and move it to back-end systems after it has been extracted with a hundred percent confidence, or based upon the minimum threshold values that have been set by the business rules in the organization, or it can enable that human-in-the-loop process to occur.

More specifically, I'd like to talk about a use case scenario where we've demonstrated this capability, working closely with Neta's team and with Chevron, a great partner and customer, to take a massive volume of reports that they receive on a daily basis, including drilling reports, completion reports, and workover reports.
These reports are quite large, so they can be 200 pages and up of content that needs to be parsed, and they receive approximately 50 of these documents per day, so literally thousands of pages of documents every single day that there's drilling or some type of activity occurring out in a well field or on a rig, for example. The key thing here is that these reports come from multiple providers or players within that market, other supermajor oil and gas energy companies that are mining or extracting in a particular field. So we see a lot of variability with respect to the content that's in these documents and how that content is laid out in the documents.

Today, the process requires a subject matter expert, a drilling engineer or a mud engineer, to go into that document, inspect the data, and then key it into a back-end system. The process can take weeks or sometimes a month to catch up and finally get that data into a usable format. So what we see are the C and the double V: we have complexity in terms of the content and the actual layout of that content, we have a lot of variability in how that content is reflected in the forms, and we have a lot of volume coming together.

I'll show you an actual demo of what we produced and how the end-to-end solution works, but essentially what we're doing is using a robot to pick up these paper or digital documents and ingest them very rapidly. The robot will then start to do some of the processing. If we have, for example, a 200-page document, we might want to parse that down into segments and process those individually; our document processing capability through a robot can enable that. We make the call to the Microsoft Form Recognizer service, we provide the document itself as input, and we receive back the JSON payload with the key-value pairs, the table extractions, and the confidence information. If it's required, and we want a human in the loop to see the results of that extraction as well as the source content, we can begin the validation process and display a UI where the human can go in and inspect the content and make changes to it. After that process is completed, the intelligent automation process can begin again: we can land that data in back-end systems like SAP or other operational systems, or in a data lake for further data analysis. Finally, what we'll present is how we can take that data and drive a Power BI dashboard, again unlocking that data very rapidly to get to the end game, which is using that data for business benefit and purpose.

I won't spend too much time here, because Neta already talked about what this intelligent content extraction is. The key aspects here are two things. One, we get the bounding boxes, so we have vertex information to paint a bounding box around where that data is on the actual document. But even more importantly, at the data element level, we get the confidence score information as well, and that's where we can set rules to say that if the extraction quality for a table has met 90 percent, it doesn't require the human-in-the-loop process, and if it's below 90 percent, we would invoke that human-in-the-loop process. This is a representation of the human-in-the-loop process itself: you can see side by side the source document on the right that was sent into the service and the extracted values that came out as a result. Finally, the whole purpose of getting the data unlocked from these documents is to drive better knowledge, insights, and management inside the organization, and we'll show the actual demonstration of the Power BI component.
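The idea of parsing a 200-page report down into segments that are processed individually can be illustrated with a small Python helper that computes page ranges. This is a sketch only: the `page_segments` function and the segment size are assumptions for the example, and the talk does not say how the robot actually splits documents.

```python
def page_segments(total_pages, segment_size):
    """Return inclusive, 1-based (first_page, last_page) ranges.

    The ranges cover pages 1..total_pages in order, each spanning at most
    segment_size pages, so each segment can be sent for extraction on its own.
    """
    if total_pages < 0:
        raise ValueError("total_pages must not be negative")
    if segment_size <= 0:
        raise ValueError("segment_size must be positive")
    return [
        (first, min(first + segment_size - 1, total_pages))
        for first in range(1, total_pages + 1, segment_size)
    ]
```

For a 200-page report and a segment size of 50, this yields four ranges; the last range is simply shorter when the page count is not a multiple of the segment size.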

Let me go ahead and start the demo; I think I can do that by clicking here. Yep. We're going to start with the completion report workflow, but real quick, what I want to show you is Taxonomy Manager, where you can go in and define a taxonomy for a particular document type. Here, for example, we're setting up a completion report. Because there's so much data in there, we may only want a subset of the data from the report, so we indicate that we want the well name and we want the start date. There's a table in there that includes time log data, the actual time-series information about what was performed during the day by the production engineer, and we want to capture that information as well. So we can very easily go into Taxonomy Manager and create this taxonomy, and then we can use it across all the documents of the same type that contain that type of data.

If we go into the workflow and look here in our RPA tool, this is the robot workflow that we've enabled. If we execute it, the robot will go and inspect the contents of a blob storage area, or an email that's coming in with an attachment, or a file system, pick up the documents, and do the processing that we talked about, which was, say, to take a 200-page document where about 10 pages are relevant content. It will then return the confidence score back to us, and here we show, for example, that a hundred percent confidence is what we are actually seeing in terms of performance with the forms that we are using. The human user can go in and very easily see the content through that UI display. If we get a hundred percent, we're showing the UI example here, but this could just be automated in the back end, with the robot automatically moving that data into Excel or Power BI, or actually making updates in a back-end system like SAP.

Here's another example of another report. You see again that the content varies quite significantly, but it's the same data we're trying to get: the time log data, the well information, the well location, the spud date for the well. These are key attributes that we have, and you can see all the key-value pair extraction as well as the table content itself. That's one way to view it. Naturally, once you've got that JSON payload, you can take that data and put it into something like Excel, if you have some Excel reporting that you want to use. We show as an example that all the individual data we get from each report can be tabbed up by the robot, which automatically produces the structure of the report and then normalizes the data into this format, so you can see essentially what the robot sees in something like Excel.

If we switch over and look at the drilling report workflow, a different type of report comes in that contains similar information. Here's the workflow that we're executing, and again a robot is performing the process to invoke the service and pull up the drilling report that you see here. This is a really good example of a complex form: very densely packed content, with side-by-side tables and even tables that span multiple pages. You can see our extraction quality is quite high: we're getting a hundred percent extraction on all the key-value pairs of interest, plus the table extraction detail that you see here, with the component-level detail as well. Again, the human user can very easily go in and make modifications to the key-value pairs, or even add new key-value pairs that they now want to add to Taxonomy Manager, as an example. Same thing here: we can take the data and move it into Excel.
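Normalizing the extracted key-value pairs from differently laid-out reports into one tabular format can be sketched in Python as follows. The report structure, the field names, and the `reports_to_csv` helper are assumptions made for illustration; CSV is used here only as a stand-in for a sheet that Excel can open, not as a description of what the robot actually writes.

```python
import csv
import io


def reports_to_csv(reports, fields):
    """Flatten per-report key-value pairs into CSV text, one row per report.

    Each report is assumed (hypothetically) to look like
    {"source": "<file name>", "pairs": [{"key": ..., "value": ...}, ...]}.
    Fields missing from a report are written as empty cells so that every
    row has the same columns.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["source"] + list(fields), lineterminator="\n"
    )
    writer.writeheader()
    for report in reports:
        lookup = {pair["key"]: pair["value"] for pair in report["pairs"]}
        row = {"source": report["source"]}
        row.update({field: lookup.get(field, "") for field in fields})
        writer.writerow(row)
    return buffer.getvalue()
```

The list of fields plays a role similar to the taxonomy in the demo: it fixes which attributes (for example well name and spud date) are kept, regardless of how each provider lays out its report.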

We can again take all these different reports and start to normalize this data into a common format. But what's really important is how we get this into the business users' hands so they can use the data in a meaningful way to make more intelligent decisions about how we are competing against other operators in the field. Across these different reports, coming from, say, 40 different providers that are drilling in the same field that Chevron is, they can see how much time was spent on non-productive time, time doing repairs, time involved with safety meetings, and these types of things. So there's a tremendous amount of intelligence that comes out of that type of capability. My time is up, but I wanted to share with you what an actual solution looks like, something that we've done in partnership with Chevron and with Microsoft. Thank you very much.

Thank you, Mark. To demonstrate how Form Recognizer is also used in the financial industry, I would like to invite Subhra Bose, CEO of Financial Fabric.

Thank you, Neta. Good morning, everyone. I'm Subhra Bose, CEO of Financial Fabric. We provide data hub services to the 74-trillion-dollar investment management ecosystem. We are a Microsoft Cloud Solution Provider, and we are based in New York City.

Our clients receive tens of thousands of documents, structured, semi-structured, and unstructured, in Excel, CSV, and PDF, with very rich data embedded in them. As I'm sure you all know, getting data extracted out of PDF documents, especially if they're semi-structured or unstructured, is a lot of work. A lot of organizations do that manually today; some write ETL code. So we picked a very simple problem to work on with the Microsoft form recognition team, simple on the surface but complex in terms of actually getting the work done: to extract data from semi-structured PDF documents and then derive insight from it.

Prior to form recognition, our solution used to be what probably a lot of you do today: writing ETL code for every different form type, which used to take weeks of development. Right, I see some shaking heads. It's costly and time-consuming, especially when you are dealing with a large variety of forms. With form recognition, the process became fairly simple. We feed five or more documents of the same type to the form recognition engine, let the engine learn, and then extract data as JSON, which you saw in Neta's demo and also in Mark's.

Let me walk you through our architecture, in terms of how we have integrated form recognition in our data hub.

We receive what you're seeing on the left-hand side, PDF documents from the banks, which come to our file drop, that is, the data hub file drop. Then the Smart Loader, which is a component of our data hub, picks them up and makes a decision based on the form type about where to route them. In the case of a PDF document where we already know the template, we route it to the Azure Form Recognizer engine, which produces the JSON output and stores it in blob storage. Once you have JSON in the blob store, you can feed it directly into Power BI, or, if you need to normalize the data further, you can store it in SQL or Azure Data Explorer or another sort of normalized database technology.

At this point I'll show you a quick demo of what we have done, sort of under the covers, so I'm going to switch screens here. There you go. On the left-hand side, what you are seeing is the end-user experience, and on the right-hand side I'll show you what is happening under the covers. Here we have five PDF documents coming from JPMorgan Chase for one of our clients. If I open one of the documents, let's say this one, in the browser, you see it's a fairly straightforward statement, which has one line with some values in it.

Now we are going to switch to our job processing server. Here I have pre-set up the form recognition engine with the sample set, so the learning is complete. I'm going to run the same five documents one more time, and you'll see the output coming in as "output one" here. So I'm going to invoke the curl command here, and it generated the output one. Let's open it in Notepad++ and see what happened. We'll format the JSON and go all the way to the top. As you can see here, the data came from the 2826 PDF. If we go to the 2826 PDF, that's the same PDF, and we are particularly interested in the total value here, so let's see whether we can find it or not.
We'll go down a little bit. Here you go: "Total CCY." It gives the bounding box, and if I go down a little bit more, US dollar 85. That's the same value. It didn't make it 84, which is a good thing; manual entry can. And the confidence level is 1.0, so we can certainly use the data. Then, in terms of the end-user process, of course, we feed the data from the JSON into Power BI. Here I have a very straightforward data table where you see the same data in Power BI. So: from document to data to insight, fully automated, without manually entering any data.

Let me go back to my deck. To recap the demo, what you saw is that the document came into the file drop, the Smart Loader invoked the form recognition engine, the JSON output went to the blob store, and then the Power BI report refreshed with the JSON data.

Not only does Form Recognizer make it easy, but it's literally no code: when you are receiving new instances of forms, you feed the recognition engine, it learns, and it generates the data. It comes with a very easy-to-use REST API, which we have used. Our time to market for a new form template was reduced from a few weeks of development activity, or ETL development activity, to a few hours of data engineering activity. That is a 90% decrease in time, or increase in productivity, with respect to our overall workflow.

The form recognition Cognitive Services stack is very easy to use, and the API is extremely easy to understand. It took us only a few days, working with the form recognition team at Microsoft, to get the initial solution up and running. The confidence level is very important, of course, because it tells you whether the data is usable or not. From an accuracy perspective, if you don't have manual entry, then the chance of error is much lower, and with the confidence level the data becomes much more usable. A lot of our clients had PDF documents where they were entering the data manually or comparing it side by side; with form recognition, that goes away.

So we are very excited about this technology. Congratulations to Microsoft on bringing this into the Cognitive Services stack. We believe that form recognition is a revolutionary stack in Azure AI and is going to change business users' lives for the better, where they don't have to spend four hours copying and pasting data and only the rest doing their actual analytics; they will spend more time doing the analytics. So thank you all, and enjoy Build. Thank you, Neta, for having me.

Thanks, Subhra.

So for the first 30 minutes this morning we've talked about Form Recognizer and the customization of being able to bring your own forms and tailor the model to work for your specific forms. Now we'll switch gears. Another flavor of Form Recognizer is pre-built models. We know that out in the world there are a bunch of documents that are universally structured, so they don't look too different from each other, and so we, Microsoft, can go and train models for you, so you don't even need to send documents for training. The first pre-built model we are releasing, in June, will be receipts. It will be off the shelf, so there's no need to train on your receipts; you can just send a receipt to the receipt API and get fields back. It'll be a managed service, and it'll be available this summer. Here's an example of the eight fields that we'll extract when it's released for preview.

There are two big buckets of scenarios that we're seeing. One, obviously, is expense management. I'm sure many of you here, coming to Build for your job, will have to fill out expense reports, and you'll have receipts from Top Pot and Starbucks and all these other places, where you have to take a picture and then enter it into a system. So expense management, and everything that has to do with it, whether it's entering receipts or auditing, is one big customer scenario we see around this use case. The second one that we've been seeing is around customer insights. You could imagine that if you were able to collect a bunch of different receipts from different customers and analyze them quickly, you might be able to understand their consumer behavior: how much did they pay for different things, how much did they pay when they went to this restaurant or that restaurant. For the first version, what we'll support is what we call US thermal receipts. You can think of those as the things you get at a restaurant, at a retail store, or at a gas station.

we're looking to see how we expand. The eight fields we support right now are merchant name, merchant address, phone number, transaction date, transaction time, subtotal, tax, and total.

At Microsoft, to test out new products that we have, we work with internal teams to pilot and refine our technology. There is an internal team at Microsoft that is using our services right now for receipts, and it's the equivalent of the expense management team within Microsoft. Where they've integrated the receipt API is in auditing the expense reports. Today, the employee will still enter their receipts and their expense reports in the current way, but where this team has started the integration is in audit. Traditionally, they can only audit about 5% of the receipts that come through, but with this technology they're able to increase how many they see, to find which receipts and which expense reports look like something's wrong with them, where they don't match, and to pull them out to have someone review them and make sure they're correct.

There are two main benefits to this. One is that low-risk reports, where nothing the employee entered looks off, can just streamline through and get done faster. So for the employee, you get your money quicker, and finance is able to just process those without any issues. Then, for the ones that do look suspicious, the reviewer can save time because they don't have to go through all the receipts or all the reports; they just get the ones that look suspicious or a little different. In the current system, along with other signals that the finance team has put into place, they use the receipt API, and if something looks anomalous then an alert will be sent, and it will go through to an agent and then through that process to figure out whether that is actually an OK expense report or not.
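The audit idea described here, comparing what the employee entered against what was read from the receipt, can be sketched in a few lines. This is only an illustration under stated assumptions: `ExpenseLine`, `needs_review`, the `"Total"` key, and the tolerance are all hypothetical names and values, not the finance team's actual system or the receipt API's real output shape.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class ExpenseLine:
    """One line of an expense report, as typed in by the employee."""
    merchant: str
    amount: Decimal


def needs_review(entered: ExpenseLine, receipt_fields: dict,
                 tolerance: Decimal = Decimal("0.01")) -> bool:
    """Return True when the entered amount and the receipt total disagree.

    `receipt_fields` is a hypothetical dict of extracted fields; the real
    receipt API output may be shaped differently.
    """
    total = receipt_fields.get("Total")
    if total is None:
        # No total could be read from the receipt: send it to a human.
        return True
    return abs(Decimal(str(total)) - entered.amount) > tolerance


# Made-up sample data: one matching report, one mismatch, one unreadable receipt.
reports = [
    (ExpenseLine("Coffee shop", Decimal("12.40")), {"Total": "12.40"}),
    (ExpenseLine("Restaurant", Decimal("95.00")), {"Total": "59.00"}),
    (ExpenseLine("Gas station", Decimal("40.00")), {}),
]
flagged = [line.merchant for line, fields in reports if needs_review(line, fields)]
print(flagged)
```

In a setup like the one described, the unflagged reports would flow straight through and only the flagged ones would reach a reviewer, alongside whatever other signals the finance team already uses.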
Let me show a demo. So here's one demo; I already ran it through, but you see a receipt and then you see the fields that were extracted. So you have merchant, address, date, the time, the subtotal, the tax, and the total. The output will be in JSON format with these items in it.

One of the great things that we can do with receipts, as well as forms in general, comes from our state-of-the-art OCR engine, which kind of powers a bunch of this technology. It's really robust and can handle things with lots of noise and kind of different angles. I'll show you an example of this one, where the receipt looks more real-world, right? When you take a picture it's not always straight, and we are still able to handle it. This is the Read API for OCR, which you can use separately from Form Recognizer, but it's what is powering forms and receipts. So here again you see the merchant name, the address, all the fields that we talked about, on an image that's a little more... So this will be available in June, and you'll sign up the same way as you do with Form Recognizer. Now I'll hand it back over to Neta and she will tell you how to get started.

So far we saw the solution, we saw what Form Recognizer does; let's see how you can get started. In order to start using Form Recognizer, the first thing you need to do is create a Form Recognizer resource in the Azure portal. Once you've created that Form Recognizer resource in the Azure portal, there are two commands that you need to use: first, like we said, is Train, and then Analyze. For Train, we're going to see a simple Python script showing how to invoke it. From the Azure resource you created, you need to take the endpoint (we'll see in a second how to do that), the SAS URL, and your subscription key to
call Train, and your data needs to sit on the blob: five sample forms, as we said, or an empty form. This will construct the POST train command. So let's see how it works.

So on my blob here we have five sample forms, where the forms look like this. They are PDF documents, text PDF documents. They're all the same type, and you can see they have some keys on the top, some keys on the left, and a table. These documents will be used first to train the model and then to extract the data.

So to train the model, we have here Python code, where from our Azure portal we took the endpoint and put it here, then the SAS URL from our blob to be able to access the data, and our subscription key. Then all you need to do is run this. Just click Run. OK.

So right now this is running, and what Form Recognizer is doing is everything we discussed: first clustering the data, so it's discovering which types of data we have there; then it's discovering the keys and discovering the tables; and once it has finished discovering the keys and the tables, it will create a model for this type of form so we can extract the data using this model.
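The script shown on screen is not reproduced in the transcript, so what follows is only a minimal sketch of what a Train call built from those three inputs (endpoint, SAS URL, subscription key) might look like. The path segment follows the "Form Recognizer v1 preview custom" wording used later in the session, and the `source` body field and the `Ocp-Apim-Subscription-Key` header are assumptions to verify against the API reference; the endpoint and URLs are placeholders.

```python
import json
import urllib.request

# Path segment as named in the talk ("Form Recognizer v1 preview custom").
# Treat it as an assumption and check the current API reference.
CUSTOM_PATH = "/formrecognizer/v1.0-preview/custom"


def build_train_request(endpoint: str, sas_url: str, subscription_key: str):
    """Return (url, headers, body) for the Train call without sending it."""
    url = endpoint.rstrip("/") + CUSTOM_PATH + "/train"
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": subscription_key,
    }
    # The body points the service at the blob container holding the samples.
    body = json.dumps({"source": sas_url}).encode("utf-8")
    return url, headers, body


def send(url: str, headers: dict, body: bytes) -> dict:
    """POST the request and decode the JSON reply (needs network access)."""
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Placeholder values; replace them with the ones from your own Azure resource.
url, headers, body = build_train_request(
    endpoint="https://example.cognitiveservices.azure.com",
    sas_url="https://example.blob.core.windows.net/forms?sv=placeholder",
    subscription_key="<subscription key>",
)
print(url)
```

Building the request separately from sending it keeps the sketch checkable offline; calling `send(url, headers, body)` with real values would be expected to return the reply containing the model ID.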

We'll give it a second to run all this and do all this processing for creating our model, our custom model that is fit to these documents.

And we can see the model is created, and the output is a 200 reply with the model ID, and then all the documents that were used to train and their status. We used Invoice 1: it had one page, no errors, and the status is success. You can see Invoice 2: the number of pages in that document, no errors, and the status is success. So we can take our model ID, and let's look at what we're going to do now.

So now, once we've trained and we have a model and a model ID, we want to analyze and extract the data. The next step is basically calling Analyze. For Analyze we need to take, again, the endpoint where our resource is, the file path of the document I want to analyze, the model ID that I just received from Train, and the content type: if it's a PDF then the content type will be PDF; if it's images, images. And the subscription key. This will create the Analyze REST API command. So let's see how this works.

So we'll go to Analyze. Same thing: we have the Python for the analyze command, where you can see the base URL is our endpoint, with the Form Recognizer v1 preview custom path added to it. Then we have the file path, which is a document. Here you can see the document that we're going to analyze; it's this invoice. We need the model ID, so let's take the model ID we just generated and paste it here. And the subscription key. So let's run the analyze.

And the analyze returned a JSON output of
the structured data. We'll see it in a second in a notepad, but you can see the key-value pairs in the output: you can see Address and its bounding box, and the value is 1 Redmond, and all the data. So you can see the output here, but let's also open the output as JSON so we can see it side by side with the document.

So we can see what the system extracted. It extracts the number of pages, so there's one page in this document. It extracted the page height and width, so you can reconstruct the page if you want. It extracted the cluster ID; we had one cluster built when we trained the model, which is cluster zero. And then the key-value pairs that were associated with this document and model. So Address: this is the address, and we can see that this is a multi-value. The first row is 1 Redmond, Suite, with its bounding box and its confidence. Then the next row is 600 Redmond Way, its bounding box and the confidence, and the third row is the zip code, its bounding box and confidence. Then Invoice For, same thing: the bounding box, the value Microsoft, then the confidence for that.

And the system also discovered a table, with the invoice number (this is the invoice number); the invoice date, 18 June 2017, and all the bounding boxes and confidences; the invoice due date; charges, with bounding box, value, and confidence; and the VAT ID. So this is what the system managed to discover from five sample forms. No human labeling, only five filled-in sample forms, PDFs in this case, and the system managed to discover all the keys and values and extract the table from these documents.

So to train a model for your data, basically you need to run Train and then Analyze. The API has some additional commands. For example, at the end of training a model you can do Get Keys to see the keys the system discovered, if
you want to look at what keys the system built for the model. You can also, per analyze, let's say if the form has a hundred keys and you want to extract only five, specify the specific keys you want to get on the analyze, so you don't need to get all the data; you can get only the data that is of interest.
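The Analyze step and the shape of its output, as walked through in the demo, can be sketched as follows. This is a hedged illustration rather than the script from the session: the path, the header name, and the JSON field names (`pages`, `keyValuePairs`, `key`, `value`, `text`, `confidence`) are assumptions modeled on what the demo describes, and the sample response is hand-written, not real service output.

```python
import os

# Path segment as named in the talk; an assumption to check against the API reference.
CUSTOM_PATH = "/formrecognizer/v1.0-preview/custom"

# The content type depends on the file being analyzed (PDF or image).
CONTENT_TYPES = {
    ".pdf": "application/pdf",
    ".png": "image/png",
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
}


def build_analyze_request(endpoint, model_id, file_path, subscription_key):
    """Return (url, headers) for the Analyze call; the body is the raw file bytes."""
    extension = os.path.splitext(file_path)[1].lower()
    url = endpoint.rstrip("/") + CUSTOM_PATH + "/models/" + model_id + "/analyze"
    headers = {
        "Content-Type": CONTENT_TYPES[extension],
        "Ocp-Apim-Subscription-Key": subscription_key,
    }
    return url, headers


def key_value_pairs(result: dict) -> dict:
    """Flatten a response into {key text: [(value text, confidence), ...]}."""
    pairs = {}
    for page in result.get("pages", []):
        for pair in page.get("keyValuePairs", []):
            key = " ".join(part["text"] for part in pair["key"])
            pairs[key] = [(v["text"], v.get("confidence")) for v in pair["value"]]
    return pairs


# Hand-written sample in the assumed shape; values are invented for illustration.
sample = {
    "pages": [{
        "number": 1,
        "clusterId": 0,
        "keyValuePairs": [
            {"key": [{"text": "Address:"}],
             "value": [{"text": "Line one", "confidence": 0.9},
                       {"text": "Line two", "confidence": 0.8}]},
            {"key": [{"text": "Invoice For:"}],
             "value": [{"text": "Example Corp", "confidence": 1.0}]},
        ],
    }],
}

url, headers = build_analyze_request(
    "https://example.cognitiveservices.azure.com/", "abc123", "invoice.PDF", "<key>")
extracted = key_value_pairs(sample)
print(url)
print(extracted)
```

A multi-row value such as the address in the demo comes out as a list with one entry per row, each carrying its own confidence, which is what makes a per-row threshold or overlay possible downstream.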

So we saw how to train and how to analyze and extract data from forms. Form Recognizer has a lot of benefits. First of all, it easily extracts data from forms with no manual labeling. You can tailor it to your forms, and we saw complex forms: we saw the drilling reports, we saw financial forms, we saw invoices. So for all the different types of forms we saw at the beginning, you can take Form Recognizer and train it on your data, on your specific forms. In addition, Form Recognizer includes pre-built models like receipts, which is our first model that we're releasing: just bring your forms and it will extract key-value pairs from them. It's enterprise-grade in terms of security and privacy. It's available as a managed service and as a container, so if all the data needs to stay on premises you can deploy Form Recognizer as a container; if you want to use it in the cloud you can use the managed service in the Azure cloud. And it's tailored to your forms: no manual labeling, just provide five samples or an empty form in order to train your model.

So to get started with Form Recognizer, you can go to the Form Recognizer portal (the aka.ms link for Form Recognizer) and request access to the service. There's also the Form Recognizer documentation, the Form Recognizer API, and a contact-us link if you have any questions. These are additional Build sessions that you can attend, and these are resources for all the knowledge mining and AI demos that you see at Build. And for now, if you have any questions, we're here to answer them. So if somebody wants to ask a question, you can come to the microphone and feel free.

Yes, of course, the Build sessions, yeah.

When we enter the country, you need to fill in a declaration form, so we had to handwrite and fill in a form. Is there any option to read those kinds of forms?

So handwriting is not supported yet in our v1; it's
in our roadmap for future versions. OK.

Thank you. We're a recent UiPath customer, and we're also developers. And so where does custom development using the forms recognition engine fit in an enterprise strategy?

Yeah, so thank you for being a UiPath customer. We actually integrate on the platform, so we have natural integration with the service itself. You can embed it in your workflows just naturally and define your own customized workflows, where you can call the service and get the extracted values out. Then if you want to work with that data programmatically, you're free to do that as you normally would on the platform, or you could get the benefit of using the Validation Station type of capabilities as well, for that human-in-the-loop type of process.

I'm not sure I fully... So, is there a case where we would not use UiPath but use the forms engine directly? Would you say UiPath is always the appropriate...?

Well, if you are expecting the benefit of automation, and you'd like to be able to automate some of the task of actually extracting that data, then naturally the platform enables that capability, to have a robot perform a lot of the tasks associated with picking up the document. Any type of pre-processing that needs to be performed on the document, as well as the extraction through the Microsoft Form Recognizer service, could all be done in the workflow. But the service is an individual endpoint, so you could elect to use that as well in custom code outside of the UiPath platform if you decide. OK.

Have you used Form Analyzer, or maybe even, do you believe you could use Form Analyzer and something else to extract data from less structured

documents, such as requests for proposals, which in theory have the same type of content but it might be in literally different orders, or even something like a restaurant's menu, which, again, all menus have the same content, but they're wildly laid out in different ways?

Currently, Form Recognizer is used for structured data. For training a model you need the five samples of the same type, or an empty form, where the structure is the same and the keys need to be explicit and on the documents. So right now the solution is a good fit for structured data, not for unstructured documents.

Have you seen anything that can work with the unstructured data, though?

Yes, so we have a solution in Azure AI that can also work with unstructured data: Cognitive Search. That would be a good session to go to, and the knowledge mining sessions will demonstrate how to work with unstructured data and get your insights out of unstructured data.

And so that's coming up later?

Yeah, so if we go back to the... let me go back to the first slide. It's easier doing it like this; give me one second. OK, one second, I'll go back through all the slides and we'll present the knowledge mining slides again. A quick run back, and here we go. So the knowledge mining sessions will explain how to create insights from your unstructured data. Form Recognizer in v1 is for structured data.

Can you expand that to more global, different languages?

Yeah, we're going to look to see, kind of after the preview, where the different requests are coming in. Our plan is, Microsoft is a global service, so it's figuring out where we should go next. Well, for your particular scenario, what do you care about?

How different can the layout of the document be when you're training your model? OK, take for instance pay stubs: they're all going to have the same data, but
they're going to be slightly different layouts. So how well will that work?

So for the training data, if you have different types of documents, you need to bring five for every layout: five samples, or an empty layout, for each layout that you have, and the system will train on that. You can put everything in one blob, so when you analyze, the system will basically first cluster, send the document to the right layout, and then extract the information. But you'll need to train it on five samples from each layout.
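Before uploading a mixed training set to one blob, it can help to check the "five samples from each layout" guidance locally. The helper below is a hypothetical pre-flight check, not part of the service: the layout labels are ones you assign yourself purely for counting, and the check ignores the alternative of supplying an empty form for a layout.

```python
from collections import Counter

MIN_SAMPLES_PER_LAYOUT = 5  # figure quoted in the session


def layouts_needing_samples(sample_layouts: dict) -> dict:
    """Map each under-sampled layout to the number of samples still missing.

    `sample_layouts` maps a file name to a layout label that you assign
    yourself; the service does not need or read these labels.
    """
    counts = Counter(sample_layouts.values())
    return {layout: MIN_SAMPLES_PER_LAYOUT - count
            for layout, count in counts.items()
            if count < MIN_SAMPLES_PER_LAYOUT}


# Made-up file names: five pay stubs from one employer, three from another.
samples = {f"employer_a_{i}.pdf": "employer_a" for i in range(5)}
samples.update({f"employer_b_{i}.pdf": "employer_b" for i in range(3)})

missing = layouts_needing_samples(samples)
print(missing)
```

Here the second layout is two samples short, so more filled-in examples of that layout would be added before training.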

To expand, to use Japanese?

Additional languages are in our roadmap. Our public preview starts with English and supports English, and it will also support English-character languages, but if there are accents on top and stuff like that, those may be removed. We're planning to expand the languages, as with all Cognitive Services. Thank you.

I think the guy just answered one of my questions, but just to confirm: if you've got multiple potential formats and you're not sure which one it is when you send in the document, it'll be able to tell which of the multiple trained forms this applies to?

Yes. So if you train your model on all these different samples, the first thing we do is clustering. When you send a document for analysis, we cluster it, we route it to the same type that it was trained on, and then we see what keys or tables were discovered for that type, and we will extract the data based on that template.

And if I may have a second question: the images, obviously for the training it totally makes sense to have them in Azure. But for operations, do those images have to be somewhere on Azure, or is there a way to API or pull them from other locations where the images may be residing?

So for the hosted service, the training data needs to be on a blob. If you want images on premises because they can't leave the company or the corporation, then we have a container solution, where you can deploy Form Recognizer as a container on premises, and then the data will stay within the container and on premises.

I think you just answered both of my questions too, so awesome, thank you. Thank you.

Maybe a bit of a crossover question. There are common documents used for customer onboarding, so driver's licenses and passports. They don't always have tags that say "name" or whatever, but they're all very common formats. Is there any thought to making those one of the standard...?

Yeah.
So those are things we're looking at. We started with receipts, and we're looking to see kind of where the customer signal is from you all. IDs come up a bunch, where, like you said, they don't have the key-value pairs and they're pretty standard-looking across the board. Yeah, some of them have keys and some of them don't. That's kind of how we're thinking through them.

Are there any APIs available to send documents in...?

Yes. So the APIs, everything is on REST API. The training data needs to sit on a blob, but the REST API calls the blob. OK. And all the services are in public preview, so if you go to the documentation you can see all the API references and try them.

OK, another question: what are the other models that you are going to work on after the receipts?

So we have others on our roadmap, but not any we can share right now.

And if we have a specific one? We process millions of invoices today.

Yeah, I think definitely contact Neta or me and we can see. I think invoices, we hear that a lot as another one that kind of looks the same across a lot of... Like, there are definitely unique invoices, but overall, like receipts, there are some invoices

that just look universally the same, and so that's definitely something we're looking at.

Is it possible to extract data, metadata, from files?

Form Recognizer extracts the key-value pairs and the tables out of the files. If you go to our knowledge mining Cognitive Search session, you'll hear how you can take files and put them into a pipeline and add metadata and search indexes to them to enrich your data.

Can we extract the metadata as part of the process, like file name, MIME type?

So Form Recognizer returns the file name as part of the output, but it doesn't return other properties from the file. OK.

Again, I think you might have answered my question, but I'll ask it anyway. You showed an example with Python. But as far as, like, Flow or Logic Apps, are there connectors, or is that just an API connector that is needed?

There are SDKs, C# SDKs, and there is a REST API that you can call in C#, Java, Python, or any other language to invoke the Form Recognizer service.

OK, so that is built in with Flow and Logic Apps?

Yes, yes, we have a solution that uses a Logic App to invoke Form Recognizer. Thank you.

You mentioned that handwriting is not supported yet. Is there a way to extract signatures or anything like that as an image?

So we're looking into that from the vision side. We don't have any offering right now, but that is something we're looking at. OK, thanks.

And last question, because we're out of time; then you can come to us offline.

So for the samples that you guys used, what would be the response time?

So the response time is very quick for documents. I don't have the number, but it's really fast. Is it, like, sub-second? Sub-second, yeah, sub-second. So totally sub-second. And we'll take your question offline because they need the stage for the next session. Thank you, everybody.

2019-05-09 22:29

odd.... https://youtu.be/7bvqegV9Fpg

When will it turn from preview to the release version?