AI @ Microsoft, How we do it and how you can too!

Okay, we'll go ahead and get started. Thank you for coming, and welcome to the session. We're going to talk about AI at Microsoft: how we do it and how you can too.

Let me first introduce myself. My name is Eric Boyd and I lead the AI platform team at Microsoft. The AI platform team is chartered with building the infrastructure, the tools, and all the main systems that help developers across the company scale out their AI systems and make it easy for people to do machine learning, and then with bringing all the lessons and learnings we've had internally to our tools for developers and making those available.

What I wanted to do today is walk through a little bit of the history of how we've done machine learning at Microsoft, some of the lessons that we've learned, and how we're bringing those into our tools for developers. We'll start by talking through how the AI platform was built from Bing and from Bing Ads and what we learned there, how we started to expand that across the rest of Microsoft, how we started to cooperate with the broader open ecosystem that's forming around AI, and how we're bringing those services together in Azure and making them available.

Microsoft has built several billion-dollar businesses that are primarily run on AI. There's a tremendous amount that you learn when you do something like that: the stumbles that you take along the way, the wrong paths, the understanding of what it is that really makes something tick, and how to make it work in a seamless, fantastic way. I wanted to start by telling you my story. When I joined Microsoft I started on the Bing Ads team, and I want to talk a little bit about the journey that we went through on the ads team and what we pulled out of that.

Now, there used to be charts like this that got shown in prestigious publications like Silicon Alley Insider. I don't think anyone's heard of it anymore; I think they're probably out of business. They liked the snarky "hey, look at how terrible Microsoft is, look at all the money they're investing in Bing, what a disaster, they don't know what they're doing." This chart is several years old, but even at the time they were publishing it, there was a clear shape to what was going on. Search is a tremendously expensive business to get into. To serve just a single user, you still have to crawl the entire web and index every single page, so that whatever query they might have, you have an answer to it, even if there's only a single user. But as you start to grow and learn and get more information about the system, you can start to improve it, and you can see the trends. An important fact: that's when I joined Microsoft. These are just facts; draw your own conclusions. I'm just giving you the information.

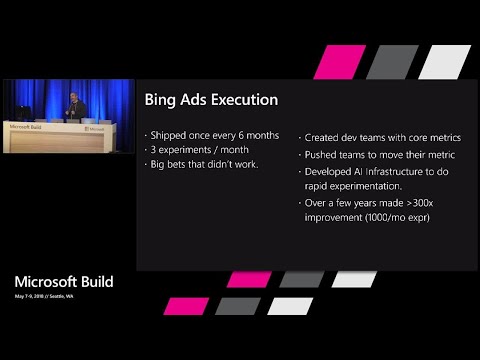

They stopped publishing this chart, and the reason they stopped publishing it in January of 2013 is that it became less interesting to have a snarky chart about how Microsoft is actually going to grow and do fantastically in this business. And step three, as every South Park fan knows, is profit. Bing is now a tremendously profitable business. It's not one whose numbers we talk about publicly, but it's a very big, profitable business. So what I want to talk about a little bit is how we got here: what are the things that we went through, what are the changes we had to make, and how did we accelerate and build the momentum to create such a strong monetization engine in Bing?

When I started at Microsoft on the ads team, we shipped our software about once every six months. That seemed crazy to me then and it seems totally crazy to me now, but remember where Microsoft was coming from. Microsoft was a company that shipped Office once every three years, and that was the model they thought about, so six months was pretty fast. They did about three experiments per month. An experiment, in this parlance, is anything that I might want to change: if I add a feature to my machine-learned model, if I change a color, anything like that. They did about three of those a month that they tried to learn something from. And they made a lot of big bets. They pushed on the click prediction engine, which is one of the most important pieces of technology in the advertising system. They came up with a new model that was going to completely replace the old one, and they spent about four months developing it. When they started to expose it to users and launch it, it tremendously underperformed the old model. We had done the wrong thing, and we needed to learn from that and figure out how to take those learnings to improve the systems going forward.

So what did we do? We started to focus our teams. In ads, you focus on revenue per thousand searches, RPM, and the more you can improve RPM, that's just free money that falls into your lap. But having every team try to improve that single metric was too diffuse, and it was really hard to tell what people were actually moving. So we focused each team on an individual metric. This is the selection team: given a query, you're supposed to go and pull all the ads out of the entire corpus that we've got, and your measure is recall; every ad that's relevant, I want to see it in the corpus that we selected. This team is focused on pClick, the probability of click: given a user, I want you to know how likely they are to click with this query and this ad, and really nail that. We pushed the teams to focus on their individual metric and move it.

And we really wanted to get them to experiment much, much faster. The rate of learning is the key thing in any online business, and particularly in a machine learning business. You have to find a way to try a lot of experiments really quickly, iterate, and learn. Over a few years we went from doing about three experiments a month to more than a thousand experiments every month. So if you go to Bing and look at the search result that you get, it is almost certainly unique, in some dimension that you probably can't even perceive, from that of anyone else who does the same search, because there are so many experiments running simultaneously across all of the different things, and we're learning each and every time.
Some of the key lessons that we took away from working through this and learning with our team: first, when you experiment, most of the ideas fail. 90% sounds like a lot, but that's been pretty consistent.
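
To make the per-team metrics mentioned above concrete, here is a minimal Python sketch of revenue per thousand searches (RPM) and selection recall. The function names, ad identifiers, and numbers are illustrative assumptions, not Bing's actual code or data.

```python
from typing import Iterable, Set


def revenue_per_thousand_searches(revenue: float, searches: int) -> float:
    """RPM: revenue normalized to one thousand searches."""
    if searches <= 0:
        raise ValueError("searches must be positive")
    return 1000.0 * revenue / searches


def selection_recall(selected: Iterable[str], relevant: Set[str]) -> float:
    """Fraction of the relevant ads that the selection stage actually returned."""
    if not relevant:
        return 1.0  # nothing to find, so nothing was missed
    found = relevant.intersection(selected)
    return len(found) / len(relevant)


if __name__ == "__main__":
    # Hypothetical numbers: 42.0 units of revenue over 6,000 searches.
    print(revenue_per_thousand_searches(revenue=42.0, searches=6000))
    # Hypothetical ad ids: two of the four relevant ads were selected.
    print(selection_recall(["ad1", "ad3", "ad9"], {"ad1", "ad2", "ad3", "ad4"}))
```

Giving each team one such number of its own is what makes it possible to tell which team moved what.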

We found that 90% of the ideas that we try do fail, so you have to have a culture that's going to embrace that and be content with it. At the same time, that really highlights just how fast you need to move to actually learn something and find the 10% that moves your business forward. The 90% figure is interesting. We'd run all these experiments that people worked very hard on, and about a third of them actually make things worse, which is really frustrating. About another third just don't make any difference whatsoever, and one third actually improve things. So why do I say 90% fail? Well, I could make the ads blink. Everyone remembers the blink tag from Netscape. The click-through rate would go through the roof, we'd make a lot of money, and we'd piss off every user. So we're not going to put the blink tag in there. You need to make sure that all of the metrics are moving in the right direction.

So you really need to focus on speed and iteration. But to do that effectively, you have to build infrastructure that makes it simple for people to experiment: to try their idea, get it into production, get the feedback from the users, and iterate and learn. This didn't work, let me tweak it; let me try this new feature; let me try another idea; I have ten ideas, let's try all of them at the same time. It's about really building that muscle with really great infrastructure.

As we focused on that, we started to see some pretty strong results. The graph on the right shows the number of Ether experiments. We'll talk more about Ether as we go, but it's the experimentation management system we use. You can see we added a whole lot more experiments and started running them at a much faster pace. The graph on the left-hand side shows the relevance increase over time.
It is a very strong correlation: increase the experimentation rate and you increase the relevance. We also saw it in our revenue per thousand searches. This graph shows revenue per thousand searches over three consecutive years, the red year being the oldest. You can see the spike at Christmas that we see every year. If you don't think ads run on Christmas, well, everything runs on Christmas; Christmas starts in September in ads. But you see the spike, and the red spike is lower than just about every one of the blue points. The next year our system was so much better that even the Christmas spike wasn't as good as what we were doing the following year, because we had improved the engine. It's a similar story for the green year. We keep improving the system each and every year, taking every idea we can find. We've done a lot more with deep learning and built a lot of new power by being able to leverage all those different models and make the system a whole lot richer. We built a whole lot of infrastructure with this and we learned a lot. The time period I've been describing is probably the last eight or nine years of lessons and growth that we've had in the Bing system.

What I want to do now is talk a little bit about how Bing is using this today in its relevance system. If you think about a search scenario, it has evolved. Search used to be very keyword based, so you'd frame your query as "canned soda expiration date". Of course nobody talks like that; that would be weird. You would say, "How long does canned soda last?" Increasingly, as there are more devices and ways that you're going to communicate with things, the natural language evolution is pushing you to want to use a natural language expression like "how long does canned soda last" when you're talking to Cortana on a speaker or on a PC, or when you're using HoloLens.

Across all of the different devices that we talked about this morning, the proliferation of devices is going to be huge, so how do you make this much more natural in all those scenarios? How do you ensure the best results when you have a natural language query like that?

One of the things that we did is we wanted to get a more semantic understanding of what the query actually means. So we built a deep learned model that translates each word and represents it as a 300-dimensional vector. We had a tremendous amount of data in the Bing query logs that we could train on to learn: these are the different words, this is the corpus we've got, these are the ones that are associated with each other. Part of the beauty of deep learning is that you don't have to have any real context or understanding of what the dimensions in the vector mean. You train a model and the system itself will extract the information and the features.

Here we took those 300 dimensions and projected them into two dimensions to make a graph, and you can see that things cluster together. Lobster and crab and meat and steak all sound like things that are roughly in the same area. You can see "McDonalds" with an s and "McDonald's" with an apostrophe almost right on top of each other. So you get some confidence that this has learned the right thing and the semantic knowledge about it.

Now you can move to a vector-based retrieval approach. You take the query that you've got, "how long does canned soda last", and convert it into this vector space by running it through inference on the model that you created and trained. You've also run all of your web results through that same model and scored them against it. So now you can do an approximate nearest neighbor search, looking for the vectors that are most closely aligned, and feed that into your ranking index. You also do the traditional reverse index and posting lists and gather the results that you get there, but now you've supplemented your results with a much richer set of data from this deep learning model.

So how does it perform? If we look at the Bing search results for "how long does canned soda last", a couple of interesting things jump off the page. First, we just answered the question right up top. You can see we found an article that has an answer to the question, and we highlighted the right answers: nine months, and three to four months for diet soda. It was news to me that diet soda doesn't last as long.
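
The vector-based retrieval idea described above can be sketched in a few lines of Python. This is a toy illustration only: the three-dimensional word vectors are made-up values standing in for the learned 300-dimensional ones, averaging word vectors is an assumed way to embed a whole query, and an exact brute-force scan stands in for the approximate nearest neighbor search.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]

# Hypothetical 3-dimensional word vectors (the talk describes learned 300-dim ones).
WORD_VECTORS: Dict[str, Vector] = {
    "soda": [0.90, 0.10, 0.00],
    "pop": [0.85, 0.15, 0.00],
    "drink": [0.80, 0.20, 0.10],
    "last": [0.10, 0.90, 0.00],
    "expire": [0.10, 0.85, 0.10],
    "lobster": [0.00, 0.10, 0.90],
    "crab": [0.00, 0.15, 0.85],
}
DIMENSIONS = 3


def embed(text: str) -> Vector:
    """Embed text as the average of its known word vectors (an assumed scheme)."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return [0.0] * DIMENSIONS
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(DIMENSIONS)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(query: str, documents: List[str], k: int = 2) -> List[Tuple[str, float]]:
    """Exact nearest-neighbor scan; a real system would use an approximate index."""
    q = embed(query)
    scored = [(doc, cosine(q, embed(doc))) for doc in documents]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


DOCUMENTS = ["when does pop expire", "how to cook lobster and crab", "carbonated drink"]

if __name__ == "__main__":
    for doc, score in nearest("how long does soda last", DOCUMENTS):
        print(f"{score:.3f}  {doc}")
```

In this toy setup the best match shares no known words with the query; it is found purely because "pop" sits near "soda" and "expire" near "last" in the vector space.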

We pulled that answer right up top. The other thing that jumps out is all of the different ways to express this. "Soft drinks" doesn't appear in your query at all. "Unopened room-temperature pop": to those few of you from the Midwest who use the word pop, we will still find your words as well. "Can of soda", "carbonated drink", and "unopened can of soda". Just by using the vector and the query similarity, you can pull all of that information out and get pretty fantastic results coming back.

The platform that we use, and this is what we're going to talk a lot about in this session, follows the way of thinking that Scott walked through in his keynote this morning. When you think about deep learning, machine learning, or any type of AI, there's a data prep phase, there's a build and train phase, and there's a deploy phase. In Bing, the infrastructure that we built starts with the web corpus. We've crawled the entire web and we store that in our big data store, which we call Cosmos. We also take the Bing history, all of the queries and things that people have done, and store that in Cosmos as well. Then we put that into Ether. Ether is a system that we've built internally for managing all of the experiments and all of the workflow that a machine learning developer needs to do. We'll talk more about Ether in just a minute, but they build and train the models on Ether.

When they actually want to do the training work, GPUs are unfortunately [inaudible], and we have clusters of thousands of them, but you need a very sophisticated management system to make sure you're allocating the jobs to the right places and that you've got the quotas managed appropriately. That's a system we call Philly internally, which manages the clusters of GPUs to do our training efficiently. Then you need to deploy the models. In Bing, for a while now, we've been deploying and inferencing on FPGAs. We talked this morning about Project Brainwave and some of how that FPGA work in Bing is now coming to see the light of day. So you'd run inference on FPGAs in Bing and get a lot of acceleration from that. That's the platform that we've put together for how Bing works. What I want to do now is have Youngji Kim walk through the Ether system that we talked about and how they use it in a lot of their systems.

All right, thank you very much.

Hi, my name is Youngji Kim and I work in Bing relevance and AI, but...

Wires. We've never had a problem with wires before.

There we go. All right, sorry about the technical difficulties, as always.

So I work in Bing relevance and AI. As Eric mentioned, we do a lot of deep learning and machine learning in Bing, and this is a platform called Ether that we use for our development. I must say, Ether really helps us keep our sanity, and you will see what I mean by that pretty soon.

This is the Ether client, which provides me with a graphical UI to manage my experiments. If you prefer programming, Ether also provides Visual Studio plugins so that you can manage your experiments programmatically as well.

This is a very typical Ether experiment: a graph. The purple nodes represent our data sources, and the green nodes are Ether modules. Ether modules represent any arbitrary executables: Python scripts, Cosmos scripts, command lines, and so on. On the right side of the Ether client there is a search pane for data sources and modules. These are data sources and modules published by others that I can use for my experiment, so I never really have to start from scratch.

Another thing I wanted to point out is that some of the modules encapsulate other modules. For example, look at this fuzzy match module when I click Details.

It shows the content of the module. When I go back to my original Ether experiment, this looks pretty manageable, but this experiment is actually composed of 560 modules. The other day I was looking at another experiment that was composed of 12,000 modules. So now you know what I mean by Ether actually helping our sanity.

Next, I want to briefly touch on the four steps that we go through as we develop our models. Those four steps are data preparation, training, validation, and deployment.

The Ether experiment that we have been looking at represents our data preparation step. It retrieves our data from Cosmos, which Eric mentioned earlier is our big data storage, runs a bunch of processes that also run on Cosmos, and produces our training data as well as our validation and testing data. Once this Ether experiment is completed, we have those data sets.

The next step is training, so I'll flip to our training Ether experiment. In this experiment, Ether grabs our Python source code and its dependencies from a Git repository, grabs our training data from Cosmos, packs them, and submits them to Philly, the GPU-based compute cluster that Eric mentioned earlier. Once this Ether experiment is completed, we have a newly trained model.

The third step is validation. This Ether experiment represents our validation process. It pretty much evaluates our newly trained model against our current model.

The last step is deployment. During the deployment experiment, we retrieve our newly trained model with its artifacts and submit it to our hosting environment, called DLIS, or deep learning inference system.

So these are the four steps that we take during our development, and each of the steps is represented by an Ether experiment.
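
The four steps above form a small dependency graph, which can be sketched as follows. This is not Ether's API: the module names, the toy one-parameter model, and the `run_experiment` helper are all hypothetical, and the sketch only illustrates the idea of running modules in dependency order and handing each module the outputs of the modules it depends on.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, List


def prepare_data() -> Dict[str, list]:
    """Stand-in for data preparation: split toy (x, y) points into two sets."""
    points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
    return {"train": points[:3], "validation": points[3:]}


def train(data: Dict[str, list]) -> float:
    """Stand-in for training: fit the slope of y = slope * x."""
    total_x = sum(x for x, _ in data["train"])
    total_y = sum(y for _, y in data["train"])
    return total_y / total_x


def validate(data: Dict[str, list], new_slope: float) -> bool:
    """Compare the new model against an assumed current model on held-out data."""
    current_slope = 1.5  # hypothetical model already in production

    def error(slope: float) -> float:
        return sum(abs(y - slope * x) for x, y in data["validation"])

    return error(new_slope) < error(current_slope)


def deploy(new_slope: float, passed: bool) -> str:
    """Stand-in for deployment: only ship a model that passed validation."""
    return f"deployed model with slope {new_slope:.2f}" if passed else "kept current model"


MODULES: Dict[str, Callable] = {
    "data_prep": prepare_data,
    "training": train,
    "validation": validate,
    "deployment": deploy,
}

# Each module lists the modules whose outputs it consumes, in argument order.
DEPENDS_ON: Dict[str, List[str]] = {
    "data_prep": [],
    "training": ["data_prep"],
    "validation": ["data_prep", "training"],
    "deployment": ["training", "validation"],
}


def run_experiment(modules: Dict[str, Callable], depends_on: Dict[str, List[str]]) -> Dict[str, object]:
    """Run every module once, after all of the modules it depends on."""
    outputs: Dict[str, object] = {}
    for name in TopologicalSorter(depends_on).static_order():
        inputs = [outputs[dep] for dep in depends_on[name]]
        outputs[name] = modules[name](*inputs)
    return outputs


if __name__ == "__main__":
    print(run_experiment(MODULES, DEPENDS_ON)["deployment"])
```

Expressing the workflow as a graph rather than a script is what lets a tool track each module's inputs and outputs separately, which matters once an experiment grows to hundreds of modules.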
Before I conclude the demo, I want to show two of my favorite features in Ether. I'll flip back to the first experiment and bring up this menu. Ether supports clone, so from any experiment I can clone the experiment, make modifications, and submit my newly modified experiment. You can imagine this feature is very heavily used in my team, because it makes iterating on and reproducing experiments much easier. Not only that, it really empowers us to build on top of each other's ideas. For example, we had an Ether experiment for Super Bowl prediction. With a few modifications, we could actually predict the Oscars using the same experiment.

To show you the second feature, which is my favorite, I'm going to make a quick modification to this experiment. I'm going to change the parameter from fifty to a hundred, save, update the description accordingly, and submit this experiment. Once it is submitted, I get a link to the Ether experiment, and this is how the Ether experiment looks. The gray color code means the module has not been executed yet. Once it is executed, it will turn green. Data prep usually takes a while because we're dealing with tons of data, so let's see how it is progressing. I'm going to refresh one more time.

This module has already been executed. We're dealing with terabytes of data, so usually it does not finish in a few seconds, but it could be completed this quickly because we're reusing the output from the previous experiment. This recycle ID means that the Ether back end used cached data, so that it doesn't have to rerun the module. That is pretty awesome, because I can save my time and the resources. Thank you.

Thank you. The experience that Youngji described, of a developer cloning a model, changing something in it, and then running it, is the experience that most of our machine learning experts go through every day, and that's really where the iteration speed comes from. You cache the history, you start with something that's already been done, and you can build abstractions: if I need to extract a particular data set and transform it in a particular way, I can really leverage that. A lot of power comes from that on the Bing relevance side.

Now, moving on beyond Bing, we took the platform that we built and started working with more and more of the groups across Microsoft. AI is really transforming every business in the world, and Microsoft is no exception. Each and every team that we look at is doing AI in some way, shape, or form, and many products that you wouldn't even think of as naturals for needing machine learning actually have a lot of machine learning in them. We're able to accelerate those teams by bringing them all of the infrastructure and the learnings about iteration speed, failure rates, and all of those things.

Culture change is always hard, and as we work with some of those teams it can take some time to warm up to those ideas. I mentioned that Microsoft had a history where it took a long time to ship things and people knew what they wanted to ship. One of the teams I was working with was about to do their first machine learning experiment. They had built a model, it was adding some new value to their feature, and they were very excited about it. We were going to do the experiment and get the learnings and the data back, and I got this email from a person I will not name: "Hey, we've decided to ship the XXX feature without running the experiment. We're quite confident in the feature. Our team has a rich history of shipping features without any experimentation, something that may be alien to folks from Bing."

Unfortunately, I'm a little bit snarky, so I replied: "I have a rich history of driving my car with my eyes closed. I'm quite confident I know the way to work, and I've only crashed a few times."

The key message there is that this is what it's like. If you're not going to run an experiment and gather this data back, why are you driving with your eyes closed? Open your eyes; there are amazing things that you can learn from this. I'm happy to say that I no longer receive emails like this. The company has really embraced the culture of gathering and learning from our users' data, iterating quickly, and experimenting on small sets and expanding from there. But culture change takes some time.

One of the next areas I want to talk about is PowerPoint Designer. PowerPoint is not a product that I think of as having a lot of machine learning in it. This is a traditional slide you might see. It's kind of boring: text and some bullets. Designer is the right-hand pane that's trying to suggest, hey, you can make this look better and nicer. As someone who creates a lot of PowerPoint myself, I know it is hard to come up with the right designs to make a slide engaging and interesting, and to find ways to capture what I'm trying to communicate in a nice, succinct way.

The PowerPoint team built this feature, and they built it first using a rule-based model. A rule-based model says: if I see these bullets, then make this suggestion. Humans curated the list of things we want to do. The blue line represents how the rule-based model worked. They then built a machine learning model. The PowerPoint team cares about two key metrics. The first one is how many of the suggestions were kept overall.
That is, out of all the suggestions that we show, how many times did people say, "That's a good one, let me keep that"? The second one is the keep rate. You can think of the keep rate as the percentage: of the suggestions I showed you, 3% were kept. The top line also factors in whether I showed a suggestion to every single person on every single slide, versus not on every slide.

You can see at the start that the machine-learned version, the red line, is not being kept as often as the blue line. They trained a model purely using offline data, and it wasn't performing particularly well. But then they started to use the user interactions. They started to feed back, hey, this user clicked on this one, so that's a positive label that this was a good interaction. Feed that back through, and you start to see the machine learning model improve its slide kept rate.

The next thing they did is move to a reinforcement learning approach. Reinforcement learning takes two key concepts. If I have a great answer, then I'm going to exploit that; this is something you see in machine learning all the time, we exploit what we know. But with reinforcement learning you also need some amount of exploration: when do I go and explore and try something new or different, to learn that this is actually an even better idea than what we had before? What you can see is that they spiked the slide keep rate, and then they turned up the volume on how often Designer triggered, which made the total number of suggestions being kept go up.

For the next step, they first had to change the scale of the chart so that it would show up, because they had made something work tremendously well. They started to feed in more and more data from all of the publicly available slides that they had, looking for all of the different combinations and ways that you can train this model. So now we have a really rich and robust feature as a part of PowerPoint, and it was really accelerated. The rule-based model is nowhere near the volume or the value to users, but by using the machine-learned model you can make it much, much richer. So this is an example of something I would not have guessed.

From the outside, it is not obvious that this should be a machine-learned feature, but it is a tremendous value to PowerPoint. And again, the infrastructure that was used was the infrastructure that we built and learned from in Bing, with the same stages: the data, the build and train, and the deploy. They had all of the PowerPoint user behavior, which slides people are clicking on and how they are using them, and the corpuses of slide decks that are available on the open Internet that they went and learned from. They did data prep using Azure Databricks and stored the data in Cosmos, as we talked about. They used Ether, which we just talked about, to manage all the models and the experimentation and to iterate quickly. They used TLC. TLC is an internal C# library that's been optimized to run ML algorithms at high speed. It is used tremendously all across Bing, and we'll talk more about TLC and how it's making its way out as well. Then they deployed it into their application, and the PowerPoint application that you're using today is benefiting from all of this. So what I wanted to do is ask Anand to come up and walk through some of the great things that we're doing with PowerPoint Designer.

See if we can get this right.

Thank you, Eric. Hello everyone, my name is Anand Balachandran, and I'm a program manager representing a big team of machine learning scientists, data scientists, and engineers that built PowerPoint Designer. How many of you are familiar with PowerPoint Designer today? Okay, not a whole chunk of the room, so I have the privilege of telling you what PowerPoint Designer is.

When the team brainstormed and created PowerPoint Designer, the one mantra that we took was: what does it take to address the gap between a deck that's just filled with boring slides and text and a deck that can help you close your deal? The one operating principle in PowerPoint Designer is to help each and every one of you be a better storyteller.

As Eric mentioned, we had the privilege of looking at a vast number of decks that are out in the wild, and we could label this data by looking at patterns of words that were present in PowerPoint. One of the privileges we have at Microsoft is that we share a bunch of natural language technology: traditional natural language libraries that apply word breaking and part-of-speech detection to words. So the rule-based model that Eric showed you first was able to understand which types of words describe a timeline and which types of words describe a process. We could infuse that knowledge into the product and, for the initial rule-based model, apply a certain set of word mappings to a certain set of designs. It is that system that has since evolved into a completely machine learning based system.

Let us look at some examples of how that works. Here is the same slide that Eric showed a little while ago, which talks about the legislative history of the country. When I launch the Design Ideas pane, you can clearly see that PowerPoint is able to detect the presence of a bunch of dates in the slide, which obviously represents a timeline, and it says: look what I have, a timeline-based view and representation.

For You so. I'm gonna go ahead, and choose, that design and. Doesn't. That make the slide more impactful, so. Similarly. I have, another situation where, I'm describing, just a simple process that takes. You. Know a description of how to make espresso you, buy some beans you grind them you categorize the beans tamp. Them well and then once, you get your brew going you have great espresso and guess. What PowerPoint. Is able to detect that. This. Is a process and it actually shows this as a flow diagram so. What. We've done through the process here is of course design ideas in PowerPoint, are invoked, both reactively, as well as proactively, in many, cases, when, you are in the Taiping loop and you finish typing a bunch, of text and your foreground let's say goes into idle, powerpoint. Designer says okay here is my chance to suggest an intelligent, design for this slide and it, pops right in in. You, know as least. Intrusive as possible, and offers you a bunch of suggestions, and if you're done with that suggestion, you can dismiss the design ideas pane and go back to working on your slide deck until. The next opportunity for us to prompt, you a more, intelligent and applicable design the. Reactive, way in which you can, invoke. Designer if the, automatic firing is not happening. For a particular slide for some reason is you can always go to the ribbon and launch, the design ideas pane and there. You have it there will be those designs. Lately. Like Eric pointed out after. We started seeing the uptick in the designers performance, we started thinking hey how do we introduce. Exploration. In order, to do better, designing, now, like. Eric said exploitation. Is when in a world of recommendations. You always want instant gratification so. You give the highest intensity instantaneous. Reward for, a particular, suggestion, but. Oftentimes we, notice that a machine learning system that is built on reinforcement, learning can, pick up when, you start to explore with. 
lower-ranked suggestions, by fitting them into your existing design suggestions. This is precisely what we did in the world of icons. Let's take a look at that. PowerPoint has a bunch of icons that we ship within the product, and so we could take the power of word embeddings and combine a bag of words that maps to a certain icon. And we said, how wonderful would it be if we took a slide that has text and gave an icon-based representation for that slide? Let me invoke Design Ideas one more time, and there you have it: I have an idea slide with an icon representation, taking one of our in-product icons to make the rendition better. This is a technique where we're using exploration: an icon is not something you may expect immediately when you have that text in the slide, but we were able to explore what our model gave as a lower-priority, lower-ranked suggestion and push it up in the stack. Just a moment for me to reflect back on what Youngji said about Aether and TLC: this is the system we have been using in PowerPoint Designer to train our models over the last year and a half. The great advantage of using tools like Aether and TLC is that they help us build workflows and graphs of chained models. Now, in PowerPoint Designer, the effects that you saw today are not the effect of one single model. There's a composition happening on the slide, and it's a combinatorial problem, because a slide deck has pictures, it has text, and it has SmartArt.
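The exploration idea mentioned above, occasionally pushing a lower-ranked suggestion up the stack, can be sketched as a simple epsilon-style re-ranking. The function name, the `top_k` and `epsilon` parameters, and the choice to replace only the last visible slot are assumptions for illustration; the talk does not describe how Designer actually schedules exploration.

```python
import random


def rerank_with_exploration(ranked, top_k=3, epsilon=0.1, rng=random):
    """Pick the suggestions to show from a best-first list.

    Most of the time this simply exploits: it shows the top_k suggestions.
    With probability epsilon it explores: the last visible slot is replaced
    by a randomly chosen lower-ranked suggestion, so the system can learn
    whether users like it. (Hypothetical policy, not Designer's.)
    """
    shown = list(ranked[:top_k])
    tail = ranked[top_k:]
    if shown and tail and rng.random() < epsilon:
        shown[-1] = rng.choice(tail)
    return shown
```

With `epsilon=0` the function always returns the top suggestions unchanged, which is pure exploitation; raising `epsilon` trades some immediate reward for information about lower-ranked ideas such as the icon layouts.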

SmartArt is where you take an image or a particular piece of art that tells the story and you emboss the words on top of it. So you have machine learning models that are predicting the appropriate SmartArt and the best way to crop and position the picture, and then you have the overlay of text on top of the SmartArt, and that's a combinatorial problem. So we have a layer of models that are running in a workflow, and then we have a ranker that runs on top of all of that to rank all the suggestions resulting from these models. This is where a system like Aether and TLC helps us tremendously. So if I have one call to action for you all, it is to use PowerPoint, rejuvenate your presentations, become that better storyteller, and stop creating boring slides.

Factory. Hey, come on. I always worry about telling the PowerPoint Designer story in the middle of my own PowerPoint presentation, because if you don't like the slides, I clearly should have used Designer a little bit more. But yeah, there's a lot that we've learned in taking, again, the infrastructure that we built and bringing it to other places across the company. And so that's really the story that I want to land with you: looking at AI across Microsoft, really every business is investing in it. This is one of the great things about being at Microsoft: we are such a big company that we have thousands of data scientists and AI developers building models every day, and they use basically every technique and idea and tool and platform out there, from classical machine learning to new and advanced deep learning; from working on very compliant customer data, where you need to be very careful about how you manage it and who's allowed to access it, to public datasets, to publicly crawling the web. We
use lots of frameworks we've developed, we use virtually every open-source framework that's out there, and we need to deploy to basically everywhere. So it really has set us up well to learn all of the different things that people might want to do to be productive as an AI scientist, as a machine learning expert. This is how Scott framed it earlier today: the work of a machine learning developer, a data scientist, really comes in three phases. You prepare the data; you then build and train the model, and there's a lot of iteration there as you think about the changes that you might need; and then you have something that works and you look to deploy it. I want to walk through the tools that we've built and how we use those internally in building models. For the prepare-data phase, we talked a lot about the system that we've called Cosmos. Cosmos is a massive MapReduce system. It has exabytes of data, and it receives millions of events every second from billions of devices across all of our different products and platforms.

Of course, it has been a fun project for me: it's GDPR compliant, and we've learned all about the privacy rules and regulations and controls. It's super important for our company and for enterprises around the world to make sure that we're handling data in a respectful manner. Being able to have this as one of the first building blocks means we can go to any group in the company and they get so accelerated by not having to think through all of these questions and concerns. In the next step they need to build and train, and we talked a lot about Aether. This is a system that we use for managing ML pipelines: optimizing, prototyping, managing your data use. One of the main things that we do as computer scientists is create abstractions. You have something that's really complex and you wrap an abstraction around it. Youngji talked about how we can have 500 modules, and you wrap an abstraction on that: extract data from this, get it into this particular format, and run all these transforms on it. That just simplifies the workload for people. We have millions of pipelines that we run on this and lots of active data sources. This is a tremendously valuable tool, and virtually everyone who's doing machine learning at Microsoft is using it. Then the next phase is deploying. We've had to work a lot on how to deploy in a really smart and sophisticated manner. If you send a query to Bing, about 600 machine-learned models need to fire, and they need to fire in milliseconds. We have about 50 milliseconds to go to the index, pull all the relevant results out, and give them back to the ranker. And, you
know, so the system we use is a system called the Deep Learning Inferencing System (DLIS). It takes all of those deep learning models and can run them really, really fast. It works at 600,000 requests a second, at super low latency, and it deploys constantly, something like six times a day. Anytime a data scientist has a new model, they deploy it onto DLIS. It will automatically run your tests and verify that this model doesn't break things: if you search for something obvious, it gives you the obvious answer. If you search for Microsoft, it had better give you microsoft.com as a search result. It'll also look at the core metrics and compare: is this performing better than the old model that you're aiming to replace? It'll flight it: it'll start at two percent of traffic and ramp it up through the stages to make sure that we're not breaking things or doing things that we didn't intend. So it's just taking all of that complexity away from the developer and saying you can just push the button and deploy this again.
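The deployment gates just described (sanity tests on obvious queries, a metric comparison against the model being replaced, then a traffic ramp starting at two percent) can be sketched as follows. This is a minimal illustration, not DLIS itself: the function names, the `is_healthy` callback, and every ramp stage other than the two percent starting point are assumptions.

```python
def passes_sanity(model, cases):
    """Every obvious query must return its obvious answer."""
    return all(expected in model(query) for query, expected in cases)


def rollout(model, cases, new_metric, old_metric, is_healthy,
            stages=(2, 10, 50, 100)):
    """Return the percentage of traffic the candidate model ends up serving.

    stages beyond the initial 2 percent are hypothetical; is_healthy(pct) is
    a stand-in for whatever live monitoring decides a stage went well.
    """
    if not passes_sanity(model, cases):
        return 0  # fails the obvious-answer tests: never flighted
    if new_metric <= old_metric:
        return 0  # no better than the model it would replace
    for pct in stages:
        if not is_healthy(pct):
            return 0  # something broke while ramping: roll back
    return stages[-1]
```

A candidate that returns microsoft.com for the query "microsoft", beats the old metric, and stays healthy at every stage would end at 100 percent; any failed gate leaves it at zero.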

Dramatic acceleration, started in Bing and now used widely across the company. Additionally, we've built other things to accelerate the work that people do in deep learning, and in all of machine learning, but particularly in deep learning. Managing the number of hyperparameters is a really challenging thing for people to do. Hyperparameters, for those who aren't tremendously familiar with machine learning: if you think about what deep learning traditionally does, you come up with some error function, and then you do gradient descent to take steps down it and get closer to the optimum performance. One of the key questions is, well, how big a step should I take? There's no real science to that, which is one of the interesting things in machine learning. It's basically guesswork, and so you generally try ten different values. How many layers should my convolutional net have? There's again no real science to that, so you try different numbers to see what's going to work best. Those are all the different hyperparameters, and to really have an effective model you need to explore the space. But it's very expensive: you have to train a model each and every time you do that. So we've built a system that will walk through the hyperparameter space and try all the different options, and it will even quickly prune jobs: if it has tried some job and sees that it is not converging as fast as the others it has had, it kills it, which saves you a lot of time on your GPUs. And it's a machine-learned algorithm itself, so it makes much better predictions than just randomly walking through the space. So it's another really important piece of technology that we've learned from and are now bringing to all of our different teams. And, you know, bringing it together, it's hard.
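To make the step-size question and the pruning of slow jobs concrete, here is a small sketch that sweeps learning rates for gradient descent on a toy error function, f(x) = x squared, and kills the worse half of the trials after each round. Note the substitution: the system described in the talk is itself machine-learned, whereas this sketch uses plain successive halving, a much simpler stand-in. The function names and all parameter values are assumptions.

```python
def train_steps(x, lr, steps):
    """Run a few gradient descent steps on f(x) = x**2 (gradient is 2*x)."""
    for _ in range(steps):
        x = x - lr * 2 * x
    return x


def sweep(learning_rates, rounds=3, steps_per_round=5, x0=10.0):
    """Try every learning rate, pruning the slower-converging half each round.

    Returns (best_learning_rate, final_loss).
    """
    trials = {lr: x0 for lr in learning_rates}
    for _ in range(rounds):
        # Give every surviving trial a little more training.
        trials = {lr: train_steps(x, lr, steps_per_round)
                  for lr, x in trials.items()}
        # Rank by current loss and keep only the better half (at least one).
        ranked = sorted(trials, key=lambda lr: trials[lr] ** 2)
        keep = max(1, len(ranked) // 2)
        trials = {lr: trials[lr] for lr in ranked[:keep]}
    best = min(trials, key=lambda lr: trials[lr] ** 2)
    return best, trials[best] ** 2
```

On this toy problem a step that is too small converges slowly and a step above 1.0 diverges, so both are pruned early and the remaining compute goes to the promising values, which is the saving on GPUs the talk refers to.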
It's hard to do machine learning, it's hard to do it at scale, and we've had to build a lot of really rich infrastructure to make it easier, to make it approachable for developers across the company to really go and do this: to collaborate on large models, to do their individual models, and really pull all that together. Now it's providing tremendous acceleration to all of our different teams. I wanted to talk about Project Kinect for Azure, which we announced this morning (Satya talked about that), as another area. They've taken this really cool fourth-generation Kinect camera, and it takes an image, but the cool thing that it does is that for each pixel it can calculate the depth of that pixel: not just the brightness but the depth. So now you can do things like change your point of view and actually get a 3D model from a single camera that's sitting in a single place. This is amazing, really cool stuff.
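The step from per-pixel depth to a 3D model can be illustrated with a standard pinhole back-projection, sketched below. This is a generic textbook technique, assumed here for illustration; the talk does not say how the Kinect pipeline builds its point cloud, and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical camera parameters.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) points.

    depth is a 2D list of distances along the camera axis (0 means no
    reading). fx, fy are focal lengths in pixels and cx, cy the principal
    point, all assumed known from calibration.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels with no depth measurement
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Once every pixel has a 3D position, rendering the same points from a different virtual camera is what lets you change the point of view from a single fixed sensor.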

And they want to figure out: all right, we want to use this in a lot of different scenarios, but the picture on the left just shows you the point cloud and the depth cloud; it doesn't tell you what anything is. What you really want is the picture on the right, where the walls are labeled, the floor is labeled, the chairs are labeled, the table. Now I can take this depth-cloud picture that I just created and try to do something more interesting, because I know all the information about it. But to do that effectively, you have to figure out how to train the model to take this image on the left, and the depth and all those things with it, and actually put the labels onto the different pieces. So what the Kinect for Azure team did is this: they first took the input from the camera, and then they built their own custom labeling tool, where they would take a particular image and scene and create the labels for it; this is the ground truth. Then they take those labels and split them, as you traditionally do in machine learning, into the training set and the test set. Then you train your machine-learned model, again on Philly, the GPU cluster that we have. They produced a CNTK model that they then converted to an ONNX model, which they used to deploy and go from there. Then they took that same CNTK model and validated it against the test set, and they built an analytics client on top of it, which helps them understand where it's performing well: does it understand that this is a table really clearly, but we're missing that it's the floor, or things like that? And they really put all of that together into a rich model. So I wanted to invite Michelle to come and walk you through some of the things that they've been doing in Project Kinect for Azure.

I am
on the perception team, and I'm going to tell you a little bit about what we've been doing here. Which one do you want? Sorry. Of course. There you go.

So like Eric said, we have been working on training a DNN to take the raw output from Project Kinect for Azure and build, essentially, environment understanding on top of that. This example focuses on common building and furniture elements like chairs, tables, and floors, which could be used for things like navigation and obstacle avoidance, but as we walk through this you can imagine using it for any type of object detection. The first thing we had to do is actually build out that training set. Traditionally, image classification is done in 2D over the entire image, but what we're looking to do here is classify individual components of the image. So we had to produce an image like what is on the left, or sorry, on your right, my left, so that we can tell the DNN what parts of the image we actually care about and what their labels should be. One of the biggest challenges we had was the amount of data that's required to train your DNN reliably. Our training set right now is approaching a million labeled frames. Obviously we can't do that manually for every image, so I'm going to show you how we scaled that process. Maybe. Okay. So this is a fully reconstructed 3D scene from a real room scan, and what we're able to do with this is create a tool that lets us go in and label it in 3D, basically selecting areas of the image and labeling those. You then go from many different perspectives and project this into 2D. For a scan this size, we

can get approximately a thousand images that have now been labeled and that we can plug into the DNN. Once you have all that data ready to go, we use Visual Studio Tools for AI to help manage some of the experiments that we're running. We've got a main driver script, all of our parameters in one configuration file, and some utility classes for shared functions. We start out running this locally on a very small data set, and when we're ready to scale we can take advantage of some of the tools that Eric was talking about, specifically Philly, which lets us manage GPUs and scale out. I can deploy to Philly directly from Visual Studio: I can pull it up, select the cluster I'm interested in, and scale out how many GPUs I want to use right here. Once I submit that job, I can manage and monitor everything directly from Visual Studio as well. I can see my full job history, and I can watch in real time as my job is running and that model's being produced. The other thing Philly provides for us is elastic compute. It's very important for our ability to scale. You can see even for this job: this was running across eight GPUs and it still took almost two days to process. With Philly we're able to run many of these jobs all at once or, when we're not using the GPUs, share them out with other teams at Microsoft who might want to take advantage of that compute. And last but not least, once you have the model trained, we run the test image through the DNN, and we've produced our own tools for running metrics on top of this. Once you run the test image, you want to visually compare the original GT, or what we wanted the result to be, with the actual predicted image from the DNN. Having this visually side by side lets us drill into issues that are identified and see exactly where our model's failing.
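Comparing the ground-truth labels with the predicted labels can also be done numerically, one class at a time. The sketch below computes intersection over union per class on small label maps. The choice of IoU is an assumption made for illustration (the talk does not name the metric the team's tool uses), and the function name is hypothetical.

```python
def per_class_iou(gt, pred, classes):
    """Intersection over union for each class on two same-sized label maps.

    gt and pred are 2D lists of class labels, one label per pixel. A class
    that appears in neither map gets None instead of a score.
    """
    scores = {}
    for c in classes:
        inter = 0
        union = 0
        for gt_row, pred_row in zip(gt, pred):
            for g, p in zip(gt_row, pred_row):
                inter += (g == c and p == c)  # both maps agree on class c
                union += (g == c or p == c)   # either map says class c
        scores[c] = inter / union if union else None
    return scores
```

A per-class breakdown like this is what lets you say that one class is doing well while another, such as chairs, still needs work, even when the overall number looks fine.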
This tool helps us calculate metrics across the whole data set, or we can also look per class. You can see that for this model, ceilings and doors did pretty well; for chairs, we have a little bit more work to do. You can check out other AI-ready IoT devices in the expo hall today, and I'm looking forward to seeing what else gets built on Project Kinect for Azure by you guys. Thanks.

Ari, thank you. So, yes, a lot of very interesting, exciting things are happening really all across the company as we build AI models in almost every product. So we started with Bing, we expanded across the company, and we've now built a platform that we think works really well across our company internally. The next thing we wanted to work on is making sure that we had open and interoperable AI. As we think about the world of AI, it's really gone fast; it's been developing so rapidly. One of the things that's powering that has been this commitment across the industry to keeping things open: not lock-in, not competing vendor platforms and things like that, but really making it available to every developer. We started to face this problem internally, as we had several different internal platforms. With all the different teams across the company, everybody kind of made their own decisions, and I alluded to this earlier: we support just about every single platform and every single combination, because someone's using it somewhere, from TensorFlow to CNTK to PyTorch, really across the spectrum. And then what my team is expected to do is take all those different platforms and make them work really, really well on all of our different hardware and accelerators, as we need to go and deploy or do training or all these different things. And, you

know, what that leads to is really something of a mess, where each framework now needs to connect to each of the different hardware platforms in a combinatorial explosion. We were really starting to struggle with how to manage all of this complexity, and we started talking to other companies and found that they're facing the same challenges as well. So the approach that we want to take is to just wipe all this mess away and come up with a much cleaner and simpler model: let's bring everything into a single format and then have that single format connect to all the end devices. This is informed a lot by compilers. Compilers have multiple front ends for different languages that all compile down to the same intermediate representation. Then all the optimization, the common subexpression elimination and all the things you do there, happens at that intermediate representation, and then you know how to go from that IR to each of the hardware layers. Machine learning is actually fairly similar: each of these frameworks will output a graph, and the graphs are all relatively similar and can be represented by a common model description. You can then do a lot of optimizations, even a lot of the same compiler optimizations, and then deploy directly on all of the different pieces of hardware in the most effective way that you can. This is a project that we've called Project ONNX, and we've partnered with other companies across the ecosystem to make this happen. The simple perspective is: tools should work together, everything should just work, and you shouldn't have to make a particular choice that locks you into a particular direction.
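The compiler analogy above has two parts that a small sketch can make concrete: a shared format cuts the number of connectors from frameworks times hardware targets down to frameworks plus hardware targets, and an optimization such as common subexpression elimination only has to be written once, against the shared graph. The graph encoding below (a list of name, op, inputs tuples) is a toy assumption made for this example and is not the ONNX format; the pass also assumes every op is a pure function of its inputs.

```python
def adapter_counts(num_frameworks, num_hardware):
    """Connectors needed without and with a shared intermediate format."""
    return num_frameworks * num_hardware, num_frameworks + num_hardware


def eliminate_common_subexpressions(nodes):
    """Remove duplicate computations from a toy graph.

    nodes is a list of (name, op, inputs) tuples in topological order.
    Returns (new_nodes, alias), where alias maps each removed node name to
    the earlier node that computes the same value.
    """
    seen = {}    # (op, inputs) -> name of the first node computing it
    alias = {}   # removed node name -> surviving node name
    out = []
    for name, op, inputs in nodes:
        # Rewrite inputs that pointed at nodes already removed.
        resolved = tuple(alias.get(i, i) for i in inputs)
        key = (op, resolved)
        if key in seen:
            alias[name] = seen[key]
        else:
            seen[key] = name
            out.append((name, op, resolved))
    return out, alias
```

With three frameworks and four hardware targets, `adapter_counts(3, 4)` gives twelve pairwise connectors versus seven through a common format, and the gap widens as either side grows.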
As we built this project, we were really looking for interoperability, and there are two key dimensions we look at. On the one side, you can take any framework and connect it to any particular hardware layer on the other side, make any connection through there that you need, and do that really effectively, performantly, and simply. The other challenge that people run into is that they develop a model in, say, PyTorch and they want to make it run in Caffe2, or even just convert different models from one place into another. So how do I really bring that together? Again, ONNX provides you a nice way to take a model in one framework and effectively show it up in another framework and make that work very well. This has been a broad-based industry cooperation. We started with Facebook and created the project, then we invited Amazon and they are now participating, and then we've got a host of hardware partners as well: Qualcomm,

NVIDIA, Intel. Basically the whole industry is seeing this as a really valuable thing that they need to go and do, and it's good for everybody. It's good for the hardware vendors, because they want to optimize for all the frameworks and they don't want to have to provide 20 different libraries. It's good for the framework makers, and it's good for developers. This is a community project. It's open source, so please feel free to get involved (it's on GitHub, ONNX), and feel free to use it in all your tools and all your platforms. There's a lot coming: this is a very active development project, and one that we're very committed to as a company. We've basically said all of our tools are going to standardize on ONNX. Windows ML is a runtime in the April release of Windows, so you can now get that ONNX runtime, a hardware-accelerated machine learning runtime, just baked right into the operating system. So how can you use ONNX in your applications? Let's talk through this a little bit. There was some pretty interesting work done at Stanford, where they took a publicly available set of chest x-rays and trained a machine learning model on top of it to predict certain diseases based on the chest x-ray. They got very good results, high-quality prediction and high fidelity, and it was a pretty interesting research paper that they wrote. The data set was public, so a researcher at Microsoft went and tried to reproduce their results, and actually was able to perform even better than the results from Stanford, building on everything that they'd done.
So now I have a pretty interesting tool: a tool that, given a chest x-ray, can advise a doctor, hey, maybe these are the diseases that you should go look at a little more deeply. But one of the key challenges we often see in the machine learning ecosystem is that I've got a data scientist who really understands the model construction and the model building and how to tune it and make it work really well, and then I have developers whose job it is to take this model and actually deploy it to lots of different platforms and make it work performantly. There often is this divide that we see between them, and we really want to make sure we've got the tooling to simplify that.

If we think about, again, our standard data prep, build, train, deploy, the standard model that we're talking about across all of these systems: we had a publicly available National Institutes of Health chest x-ray data set, on which we then built a model, a very rich convolutional DenseNet-121, a whole bunch of jargon that you can go and read a paper on if you want to know exactly how they did that. Then we trained it. We used Visual Studio Tools for AI to build and create the model, and we used Azure ML to manage the training on our deep learning GPU clusters. And now it's up to the developer: how do I get this thing into a lot of applications? You can see on the far right-hand side how we want this to work. We want this to work on everything from the cloud, to a Windows PC, to an iPhone or an Android device, to an IoT edge device. I don't want to have to train and rebuild this model every time; I just want it to work. So it's about coming up with the tools that can really manage the whole life cycle for a machine learning developer: how do I take this model, how do I put it into a Docker container, how do I convert it through the different model formats and really make it work? So I wanted to invite Chris Lauren up to come and walk us through exactly how we did that, where that model is, and how it works.

Thanks, Eric. So what I have here is an iPad, where we've taken that trained model that he mentioned. You can imagine a doctor somewhere in rural America, or somewhere else in the world, where they don't have ready access to trained radiologists but do have access to a portable x-ray machine, maybe with Doctors Without Borders, who's traveled somewhere. They're
able to take chest x-ray images, and now they're trying to triage and diagnose and determine whether it's worth a patient actually taking time out of the day and traveling to the big city to get the expert care that they need. So we've deployed the model on this device here, and we can simply click Import Image and select one of the x-ray images, and it's done. So sitting in a doctor's office, you can imagine going through and having the trained AI model on the device providing guidance to the doctor as to where to look and how to interpret this. It might catch some other diseases, where a doctor might find something pretty obvious in one part of the x-ray but then there are, of course, maybe some other things going on in that x-ray as well. And so augmenting

the skills and capabilities of every human through AI, on devices that are scoring locally or in the cloud, is something that I'm super passionate about. But as a developer, I want to show you how you can actually build something like this. So let's toggle over to my Windows machine here, where I can show you we've got that exact same model in the Windows ML app, and so we can again detect the diseases that might be affecting this patient. Now, to build these applications, I use Visual Studio. For training the model, as you saw earlier, we can work together with the data scientist. You see I've got a Python project down in the lower right here, where the data scientist is training using Keras and TensorFlow. They can right-click and submit their job to Azure Batch AI or Azure GPU VMs and use the power of Azure Machine Learning to keep track of their experimentation. But as a developer, I'm going to let them worry about that stuff. I'm going to collaborate through Git, like I do for all of my other projects with my other developers, and show you how we can take that trained ONNX model and incorporate it in my application. Now, not all data scientists start with training their models and understanding what ONNX is, but it provides a mechanism by which data scientists and developers can work together in a common way. If they've used another framework like TensorFlow or scikit-learn, et cetera, right here in Tools for AI you can convert from Core ML, TensorFlow, scikit-learn, et cetera, to produce your ONNX model. Additionally, you can create a new model library project, which will take in a trained TensorFlow or ONNX model and, using our new Microsoft.ML.Scoring library, wrap that in a consistent way to provide an interface where a service like the deep learning inferencing service that Youngji mentioned earlier can load-balance lots of models across a cluster, like in Azure.
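The idea of wrapping different kinds of models behind one consistent scoring interface can be sketched in a few lines. Everything in this example is hypothetical: the `Scorer` protocol, `FunctionModel`, and `ScoringHost` are names invented for illustration and are not the API of Microsoft.ML.Scoring or of the inferencing service, which the talk does not show.

```python
from typing import Callable, Dict, List, Protocol


class Scorer(Protocol):
    """Anything that maps named inputs to named outputs (hypothetical)."""

    def score(self, inputs: Dict[str, List[float]]) -> Dict[str, List[float]]:
        ...


class FunctionModel:
    """Adapter that exposes a plain function through the common interface."""

    def __init__(self, fn: Callable[[List[float]], List[float]],
                 input_name: str, output_name: str) -> None:
        self.fn = fn
        self.input_name = input_name
        self.output_name = output_name

    def score(self, inputs: Dict[str, List[float]]) -> Dict[str, List[float]]:
        return {self.output_name: self.fn(inputs[self.input_name])}


class ScoringHost:
    """Serves many models by name without knowing how each was built."""

    def __init__(self) -> None:
        self._models: Dict[str, Scorer] = {}

    def register(self, name: str, model: Scorer) -> None:
        self._models[name] = model

    def score(self, name: str,
              inputs: Dict[str, List[float]]) -> Dict[str, List[float]]:
        return self._models[name].score(inputs)
```

Because the host only ever calls `score`, a model converted from one framework and a model trained in another look identical to the serving layer, which is what makes load balancing many models across a cluster practical.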
So regardless of what type of model is being used, the way that you can exercise or score those models is always common. Now I'm going to show you how to add the model to an existing project I have here. I'm going to import my model, and I'm going to import this trained ONNX model that detects diseases from the chest x-rays. When I do that, I'm going to traverse the graph and look at all of the inputs and outputs. I'll give this a name here, and I'm going to give this a description so I can remember what this does. Now, because this is generating code, I want to be able to control the names of my classes and methods, so I can change my input names and my output names and hit OK, and now this will generate C# code in my project that I can use. This particular project contains the model as a reference, just like any other asset that you would add to your project, and I've got the model code here as well. You can see it already imported the Microsoft.ML.Scoring that I mentioned earlier, and you can see all the code is here to take the inputs and the outputs and actually score and provide a prediction. Now, as Eric mentioned, I need to take this model and deploy it not only in this particular application, which is going to be used in an Azure Function, but also on IoT Edge, so that we can perhaps do the scoring right on the edge, in an x-ray machine itself.

So I'm going to show you how to deploy using VSTS, which as a developer I'm using all the time anyway. Notice that here I've got a dependency on the ONNX model file, so when the data scientist iterates on the model training and has a new version of that model, they can simply do a git commit and push to the repo, and VSTS will watch for that change. It'll trigger my test cases, and it will then go ahead and do the next great stuff, which is prepare the Docker image: using the Azure Machine Learning CLI, it will prepare the Docker images and roll those out to IoT Edge, as well as update the NuGet package that I had created in Visual Studio. I then set up my build process to update every time the model is updated. So this is monitoring that inferencing project and updates my Azure Function. That Azure Function uses that NuGet package, just like any other NuGet package, which includes my libraries, my DLL, and my ONNX model, and this will distribute that across Azure in a serverless manner, so it will scale up all the inferencing power that I need to score those x-ray models in the cloud. So regardless of whether I'm taking the x-ray on t

2018-07-19 00:54
