Webinar: Introducing Varjo Base 2.4 – Varjo Insight Session

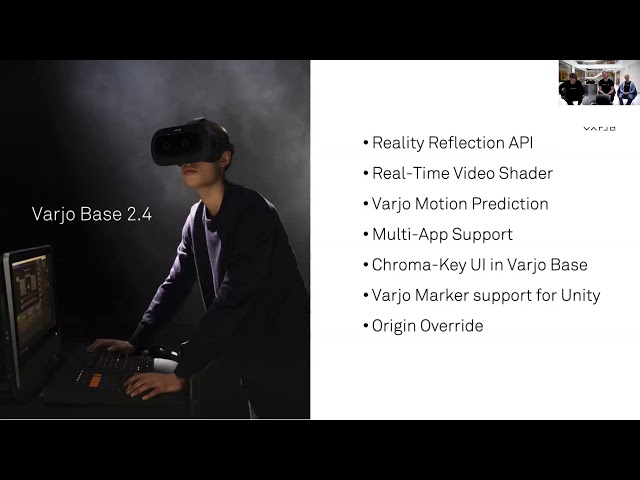

Hello and welcome to the Varjo Insight Session webinar. My name is Carolyn Miller and I'm the event marketing lead here at Varjo HQ. You just saw a glimpse of our Helsinki office, as we've had a lot of questions on our whereabouts. So yeah, this is where we're located. This is actually an old office station, indeed, renewed a little bit. So at this stage I would like to thank everyone for joining us, and I would like to get a confirmation on the audio and the video feeds, that you're actually able to hear me and see me properly. If you could just raise your hand so I could get a confirmation. Yes. Okay, great, thank you so much.

So what is the Varjo Insight Session? The Varjo Insight Session is an introduction to Varjo's upcoming features, which in this case is the 2.4 software update. Varjo is often perceived as a hardware company, which makes sense given that our beautiful headsets draw a lot of attention, but in reality we are, I would say, 50/50 a software and a hardware company. One of our key differentiating factors is our dedication to regular software updates. As a B2B company, these are often driven by our customers, the current and the future ones. So this is also what the Insight Session is: a channel where we're announcing these software features, but also a forum for us to get feedback from you, your insights, your questions, your asks, for us to understand how to develop these features better.

So I would love to kick off the Varjo Insight Session this afternoon here in Helsinki, and I would like to introduce today's presenter crew. So let me just share my screen with you. Lovely. So for your delight and confusion, we have two Anttis today; Antti is a very common Finnish name. We have Antti Peuhkurinen, whose team was actually leading the development of the new upcoming features of 2.4. Then
we also have Antti Laskowski, who is a product manager here at Varjo HQ, and he's working very closely with our training and simulation customers. In addition to that, we also have Marcus Olsson, another product manager of ours, joining us; Marcus is a dedicated professional working with Varjo's industrial design customers.

For the agenda: we'll talk a little bit at the beginning about Varjo's software philosophy, then we will move on to actually introducing the exciting list of software features, and then we'll have a proper amount of time for your questions, insights, feedback, whatever you have on your mind. So, as mentioned, let's start with Varjo's software philosophy, introduced by Antti Peuhkurinen.

Okay. Yeah. So as Kara mentioned, let's go first to our software philosophy. I think one thing you already heard is that we are not just a hardware company but also a software company, but our thinking is actually

maybe deeper than that. Basically, when we are building mixed reality features, we are not thinking that they should be done with hardware or that they should be done with software; we are asking how we can do the new things as well as we can. Some people at Varjo deeply understand the hardware, some deeply understand the software, and maybe some actually understand both. There's an often-quoted line from Alan Kay, the US computer scientist, that people who are really serious about software should make their own hardware. So we are really doing this very thing, and we also believe that the best way to predict the future is to invent and build it yourself.

Then one other thing, quite fundamental to how we think about software, and which actually differs from many other companies, is that we are not thinking that we are in our own silo, creating the future of mixed reality and the computing paradigm of the headsets. Instead, we think that we are creating this future together with our great partners. There are a few listed here, and there's a link at the bottom of the slide where you can see more of the partners we are working with. So there's a lot of this kind of shared love in how we are going to build the future of mixed reality and the future of these headsets used in computing.

Our software is currently called Varjo Base, and then there's a feature set that was shown in an earlier webinar, called Varjo Workspace, which enables you to work with desktop and mixed reality or virtual reality software simultaneously. So basically there's no need to take your headset off; you can work with your desktop and XR software simultaneously. And maybe
you guys can share something about our partners, because I think you know it better than me.

Yeah, I mean, we work a lot with different customers in different verticals. For me, working on the creative side, VRED for example has been something that we're working really closely with, developing features and getting feedback on APIs and so on. And of course Unity and Unreal are also close to us, and Autodesk and [unclear] are all examples of these kinds of engines that we are integrating really tightly with. That is, of course, at the request of our customers, such as you, based on what kind of engines you need for the experience that you're building.

And you can see here the names of the partners we currently have, and we would love to hear more from you: is there something that is missing, which we could then approach together to get support for the Varjo headsets? Because we are fully focused on B2B, and we

know that there's a huge number of different software engines and platforms used by our customers. So we want to be as broadly compatible as possible with anything that is out there. So please get in touch with us on those missing parts, and let's do those together.

And I think one thing that we've been hearing is that you guys are pushing out new features at Varjo very rapidly, at a very high pace. This industry is moving so fast, and even we don't know how to use these headsets and the technology in the best way. So we try to listen as much as possible to the feedback we get from you, our customers, put it into our roadmap, get it out fast so it's available for everyone, and then iterate and keep on perfecting those features and adding more. We want to keep this up, and that's the way we want to be. So please keep the feedback coming; we love it, we want it, and that makes us react and deliver the best products out there.

Then, about the next software release: we are releasing at a fast pace, keeping the existing features working and adding new ones without any feature creep, so that everything works perfectly in what we are releasing. Next we'll go through the feature set that we are going to release with 2.4. We'll go through the features one by one; basically one of us explains a bit more about the feature, how it works and in which cases you should enable it, and then at the end we'll also try to remember to say when the release is available. If we don't, please remind us. Yeah.

So, the first one here is the real-time reflection API. Some of you might have seen this feature being demoed at one of our events during last year specifically. It's also built into our
Volvo demo that is downloadable on our web page, to some extent, but that was still a private API. What we've done now is make this publicly available as an API. On the video you see a recording from the Varjo XR-1, so this feature is available for the XR-1. As you know, the XR-1 has two cameras in the front of the headset that capture reality, so that you can see the real world and then have virtual objects appearing in the real world. What we're introducing now with this API is that the virtual objects can also reflect the light from the real world and cast shadows on the real world. As you can see on the video, you have a real ball on the left side that is physically on site, and then a virtual ball that is introduced as a virtual object on the right side. The lamp that you see is casting light, and that is captured by the cameras on the XR-1, and the XR-1 is scanning the environment continuously

when you're moving your head around. It does that in the background without you noticing it, and it is also able to change the exposure so that you can create an HDR version of this 360 capture, which we call a cubemap. The cubemap can then be used for creating light sources that cast shadows from the virtual objects onto the real world. This cubemap can either be scanned first and then used as a constant background for your light probes, or it can be done in real time so that it scans continuously. What you see here is the real-time version: when you turn on the lamp here, for example, the virtual object actually casts soft shadows on the underlying real floor, or table in this case, and as you move the light source you can also see that it's reflected on the virtual ball.

This is basically an example of our gorgeous hardware, with the really high-resolution cameras of the XR-1 working together with the human-eye-resolution displays that we have in the headset, and together with the software, that creates the most advanced mixed reality experience in the world: photorealistic mixed reality.

One more example here is the Volvo demo that you can see here. This demo was actually shot from within the headset in this very space that we're sitting in. The car here is virtual, and you can see that as we turn on the lights in the ceiling, it's reflected on the car. That's an example of the real-time reflection API in use. This Volvo demo will be updated and released as an updated version on our web pages in the coming days, so that you can try it out for yourself if you have an XR-1 at home or in your office. So yeah, super excited to launch this and see what you do with it and how you can integrate it in your experiences and engines. So
keep the feedback coming; we're excited to launch this one. I think it's a super cool feature. It is. It's really blurring the boundaries again between what's real and what's not real, so that

you cannot even distinguish them anymore. Yeah, I think the video you're now seeing doesn't do full justice to having the same experience with the headset. Of course with the headset it's totally immersive, and it's really hard to say, when you see the lighting and the reflections actually changing and the realities actually matching each other; it really blurs the barrier between the realities. So it's really awesome stuff.

Actually, when we did this live at the Unite Copenhagen conference, we had a real Volvo car next to the virtual car, and some people were commenting that the real car was not reflecting the virtual car. So yeah, this real world is broken. Yeah, I'm usually opening the door just to scare people, because you actually think that it looks real, and you actually step back when the door opens. And it's also funny that when you have the car there, people are afraid to walk into the car, even though they know that it's not there. You try to walk into it as if the car is really there; usually people try to dodge it, of course. It just looks so real, even though it's not there, of course. Yeah.

The next one. All right, so you saw how you're now able to get reflections from the real world onto the virtual objects. We are now also introducing what we call the real-time video shader. This allows you, for the first time ever, to manipulate the video feed that you get from the XR-1, and you can do various things with this. We actually got a lot of feedback asking how we could do nighttime training, for example, and we started experimenting with this and basically came up with the feature, and here it is now. What you see here on the video is actually a combination of multiple Varjo enablers. So we actually have the DCS World flight
simulator, which has the native integration done with Varjo headsets, so you get the full human-eye resolution with our Bionic Display. Then we also added chroma keying there, so you can see the person's real hands and the real controls. And then we added a real-time shader: inside the DCS World flight simulator you have a night-vision-goggle mode, and that's enabled now in this video, and then we use the real-time video shader to match the

video feed to that night-vision-goggle rendered view in DCS World, and the end result is what you see there. So with the click of a button you are now able to go from day flying into night-vision-goggle flying. This is an example of what you can do with this technology.

And if we check the setup here: with the real-time reflection API you saw Petteri playing around with the setup at the office, but this is now actually his setup at home. It's a quite funny setup that he has there, so he's all in when it comes to Varjo. He put a spinach-colored canvas there; he didn't use green or blue, he selected that color for a really professional setup. Really professional. He put the controllers there, he has the XR-1, and then he used the shaders to do the matching and modify the video footage, and the end result is there. So you don't need to have a hugely complex setup; you can do this even with very, I would say, low-cost setups, like you can see here.

Yeah, so it's really cool that you can actually do this development even with this kind of really simple setup. You don't need a studio or anything like that, not even professional lighting; it's really enough that you have something which is close enough, and we can manage the rest on our side. So this feature makes development easy.

What about this feature? Exactly. All right, so what can you do to get the real-time video shader into use? This is such a new thing for us as well that we are not 100% sure that the API we've developed is the final one, so we are really looking forward to getting feedback from you. That's why we are now releasing the real-time video shader as a kind of experimental feature, and that means that there will be a separate experimental SDK available for download at developer.varjo.com.
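
As a rough illustration of the kind of per-pixel manipulation described above, here is a minimal sketch in plain Python. It is not the Varjo shader API (the webinar does not show that API), and the function names and the gain value are made up for the example. It turns an RGB camera pixel into a green-tinted, night-vision-style pixel.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel 0..255


def luminance(pixel: Pixel) -> float:
    """Perceived brightness of a pixel, using Rec. 709 luma weights."""
    r, g, b = pixel
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def night_vision_pixel(pixel: Pixel, gain: float = 1.5) -> Pixel:
    """Map a camera pixel to a green-tinted pixel (hypothetical look, not Varjo's)."""
    # Brighten by an assumed gain and clamp to the valid channel range.
    level = int(round(min(255.0, luminance(pixel) * gain)))
    # Keep most of the energy in the green channel, a little in red and blue.
    return (level // 4, level, level // 4)


def apply_to_frame(frame: List[List[Pixel]], gain: float = 1.5) -> List[List[Pixel]]:
    """Apply the same per-pixel function to every pixel of a frame."""
    return [[night_vision_pixel(p, gain) for p in row] for row in frame]
```

A real video shader would run a function like this on the GPU for every camera frame; the list-based version here only shows the mapping itself.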
So you need to grab that SDK, and together with the 2.4 release you are then able to play around with it. We've also gotten feedback that when Varjo releases new features, instead of just providing the API, could you also provide demo applications or examples, something that makes it easier to try out? This is the first time that we are doing this: together with the experimental package you will also get a demo application, and there's a screenshot of it on the right-hand side. With this one you can easily manipulate the video feed with a graphical user interface. This is just a subset and an example of the things that you can do: you can change the color, you can add blurriness to the feed, and kind of test and try it out. But when you start doing the real implementation, of course the best way is to use the API and inject the shader into our compositor directly, and then you are able to do it in real time. We really hope that you try this out, and please give us feedback on this one. We are super excited about this one as well. Yeah, this is a really cool feature.

The next one is then motion prediction. Many applications have a low-FPS scenario where you basically see the individual frames coming from the application. Normally, if you have 60 FPS, it's rather good, but if you have movement, even at 60 FPS, which is fast enough, there might be a problem that you see stuttering. So you can see the frames even if the FPS is high enough, but especially in a low-FPS case it is obvious to see the frames, and especially when you have moving objects, you can pretty much see each frame drawn by the application. For this kind of problem we introduced a feature called motion prediction, where we estimate the motion from
the frames sent by the application, and then we predict the outcome per frame for the displays. Doing this makes this kind of low FPS look really like high FPS, so basically we can have 60 FPS coming out of your low-FPS application by predicting the motion. Here on the right-hand side you see the 15 FPS case with no prediction. This is real footage from an application, and you can clearly see that the

object is moving slowly; of course, this is slow motion. And then in the animation on the right you can see the same case where we have the motion estimation and prediction happening. So basically we can produce the 75% of the frames which were missing, and the movement looks much smoother. This is real recorded footage, so it's a much smoother movement, and of course when it is happening at normal speed you can still see the same thing.

Next there's the video of the feature. Maybe because of the web stream you cannot see the actual effect, though here it was already so clear, but you can check the video later when we send out the recording; maybe it's clearer there. But let's see the video next. First, the animation is rendering at a full 60 FPS, so the animation and the movement of the object are totally smooth. Then next we force it to 15 FPS, so you can see that the frames are coming slowly and you see stuttering in the movement. And then in the same case we enable motion prediction, and the movement is smooth. So basically the end result looks pretty much the same as if the application had been rendering at 60 FPS.

So this is a really cool thing, and basically this works with any application. We have a toggle so you can try it out in your application and see whether it really helps. Of course there might be some corner cases where you can see some artifacts and it's maybe not working that well, but in most cases it should help if you have low FPS and some motion. The motion you see will be much smoother, and the perceived quality is much higher. Yeah.
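
To make the 15-to-60 FPS arithmetic concrete, here is a minimal sketch of the general idea of motion estimation and prediction. It is only an illustration under simple assumptions (one object, constant velocity, 2D positions), not Varjo's implementation, and all names in it are hypothetical.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def estimate_velocity(prev_pos: Vec2, curr_pos: Vec2, app_dt: float) -> Vec2:
    """Estimate object velocity from its position in two consecutive app frames."""
    return ((curr_pos[0] - prev_pos[0]) / app_dt,
            (curr_pos[1] - prev_pos[1]) / app_dt)


def predict_display_frames(prev_pos: Vec2, curr_pos: Vec2,
                           app_fps: float = 15.0,
                           display_fps: float = 60.0) -> List[Vec2]:
    """Positions shown on the display until the next application frame arrives.

    The first entry is the real application frame; the rest are predicted by
    extrapolating along the estimated velocity.
    """
    vx, vy = estimate_velocity(prev_pos, curr_pos, 1.0 / app_fps)
    display_dt = 1.0 / display_fps
    steps = round(display_fps / app_fps)  # display frames per application frame
    return [(curr_pos[0] + vx * i * display_dt,
             curr_pos[1] + vy * i * display_dt) for i in range(steps)]


def predicted_fraction(app_fps: float, display_fps: float) -> float:
    """Share of displayed frames that the application did not render itself."""
    return 1.0 - app_fps / display_fps
```

With an application running at 15 FPS and a display running at 60 FPS, each application frame is followed by three predicted frames, so `predicted_fraction(15.0, 60.0)` gives 0.75, which matches the 75% of missing frames mentioned above.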
And I especially like that we have two modes for it. Like Antti mentioned, we have the automatic mode, so you don't need to do anything in your application; it just works, so please try it out. And then there's also the possibility that the application can provide the velocity buffer, and using that, we pretty much know that it will work a lot better. But that then requires the application to do a bit of changing, so you have two modes. Yeah, so there's this kind of really novel thing that the application can also send a velocity buffer. It's optional, but it can send it. Of course, when the application can send the exact velocity from

the application side, then we get a more precise estimation, and then the prediction will be more precise, so the quality should in most cases be better. But basically no changes to the application are needed to use this. Please try it out; we are really interested to see the feedback, because it is still beta in 2.4, and most likely we'll still improve it a bit in the upcoming releases.

And we've had a lot of requests from our customers saying that even though they try to hit 60 frames per second, especially with image generators that have very highly detailed visual databases, the frame rates will ultimately go down when you move your head and there's a lot of movement. So now with this one, if you have fast-moving objects flying in, for example, it's much easier to follow those, and it's a lot smoother experience for the person wearing the headset. So we're really looking forward to getting your feedback on this one.

Okay, chroma key. We introduced this feature around the February timeframe and provided a native API for it. This is again one of those features where we got feedback from our customers: hey, I would like to try this out, but I wouldn't want to spend time doing the native API integration, so is there any way I can try chroma key in an easier way? So now in 2.4 we are adding the chroma key setup to the Varjo Base UI, so it's a super simple way to try out the feature without any programming skills. You go to Varjo Base, you go to the chroma key color, and then you hit Pick color. You put the headset on, you look at the canvas, and with the click of a button you're able to take multiple different estimations, because there's always a bit of a different color, the lighting changes, and the shadows are different on the canvas. So you take multiple different estimations. We take that input, we
store it, and then you have the chroma key setup done. We also added a third function here, which is the global chroma key toggle. You don't need to do any work in your application; you just put that toggle on, and after that chroma keying will work in any application. For example, here on the screen we have this very professional canvas setup in our office. We're going to fix that, but I just did that yesterday and took a couple of estimations from the canvas, then I put one of our Unity applications up and running, and you can see it now on the screen. So it's a super easy way, and you can go now and try chroma key and put it into your development.

Another feature that we introduced earlier this year was Varjo Markers. Varjo Markers, as you might know, is a way to easily create trackable objects: by printing a code (big or small, different sizes exist) you can track that marker and replace it with a virtual object. So it's a very simple alternative to the Vive puck, for example, without the need for active power or cables or anything. You can just print this with any printer; there are instructions on exactly what kind of printer or ink you should use, preferably with matte paper. Previously they were available for Unreal and for our native API, but now in 2.4 we are also making them available for Unity applications. Here is an example of this in action, with a small marker. You can see how the virtual object follows and is overlaid on top of the marker. So it's available for Unity now, and we're looking forward to seeing people try this out and show examples of what they do with it. Also, as a reminder, you can go to developer.varjo.com and download these markers
as PDFs, and there are also the printing instructions on how to print them.

Another feature that we're introducing in 2.4 is the override origin. We've gotten feedback, and also experienced it ourselves, that when you're moving around and setting up at a new place, for example setting up a VR setup at an expo or a demo, or if you have to move it to another room, then the lighthouses and the origin are different, and when you start the VR app the origin will be in a different place than you want it to be. If you wanted to align the virtual world, what you've done previously is go to SteamVR and do the room setup, where you place the headset, and that can sometimes be a bit tedious. So we've now introduced a very fast way to do that by integrating a button that overrides the origin; it basically snaps the origin of your virtual world to the place where you have placed your headset. So that's going to be super simple to use. It's also great both for virtual reality cases, where you want to place the virtual starting point or an object in a certain place, and for a mixed reality setting, for example placing a car in

a room so that it fits the place. This function also comes with an API that allows you to get the transformation matrix out of the API, so that you can also use it for other trackables, like controllers or so.

Yeah, and I mean, at least I'm super excited about this one, because we do a lot of demos on Varjo's side and I'm sure that you do a lot of demos as well. Whenever you go to a new location, you just put the base stations wherever there's space, they can be at different heights, and you never have enough time to do the proper setup. You're usually in a super hurry, you just put everything there, and then you need to start running the demo. You start the application, and the car that you're supposed to show is up there, 10 feet high, and you need to start scrolling it and putting the car in the right location. Now with this one, you just put everything up and ready, you put the headset, for example, on the floor, you orient the headset the way you want the starting orientation to be, you click override, and boom, you have everything set up. You start the application and you can go directly into business. So this will make at least demoing super easy and faster.

Okay, and then multi-application support. This has also been requested by multiple partners: how could we have multiple VR or XR apps running on top of each other? There are cases where this makes a lot of sense, where you have two experiences running at the same time to form a single consistent experience for the user. In this video, on the left-hand side, we have unmodified Google Earth in the background and, running on top of it, our own Unreal example with the cockpit. So it's a really great example where two totally different apps are forming
a single experience, and it would be really hard to say from the visuals that they are actually not running in the same app. Basically, we have a priority that you can set per application. By default it's zero for any application, whether it's something you get from the Internet and run on our headset or your own app; by default it runs at zero. So you can put apps on top with bigger numbers, or even below with smaller numbers, and then you use opacity to make the see-through areas. So it's easy to use, basically only a one-liner is needed. One cool thing is that you can also have this running with existing apps, like Google Earth here, so you can use some existing experiences to create a new one without any changes to the existing experience.

Exactly, exactly. So as an example, if you want to do a training solution and you have an IG that you want to use for the synthetic terrain, and that IG can do nothing but show the terrain, then you're in a situation of: okay, how do I now add interactions,

or how do I draw a cockpit on top of that? So now you can, for example, take the IG and then put a Unity or Unreal application on top, which provides the cockpit, and you can have the avionics there, and it's also probably easier to do interactions in the Unity or Unreal app. So now it's possible to kind of merge these together, and like Antti said, it's a super easy way to do it.

Yeah, I think it's super exciting. It's a new kind of architecture that allows us to widgetize applications, so to speak. I mean, why would you have just one app when you could have multiple developers doing different apps and combining them and overlaying them? One example could be that you have one existing app, it could be any app that you cannot modify, and then you're layering, for example, an avatar or some other functions on top of it, so that it all of a sudden becomes a social application. So it's exciting to see what you're going to do with this, and yeah, it opens up a lot of new opportunities.

Next we are going to go to the questions and answers session. Ah, when is 2.4 available? We almost forgot it. Yeah, I think we are getting that question as well, most likely, to be answered now, or, I don't know, let's check the questions. Yeah, let's take questions. You need to remember this. I'll try.

All right. So Alan is asking: in the real-time video shader, you are modifying the daytime video to simulate a night vision mode, is that correct? If so, is there a way to add additional nighttime elements, such as runway lights [unclear], or even better a night simulation?

Right, so what the real-time video shader does is that it only modifies the camera feed. All the other elements that would need to go there to simulate a night would then need to come from rendered content, so
that's something that we cannot really control. This is more about matching the video see-through capability to the rendered content. That's something that we would definitely want to hear more about: how to build those, and whether there are additional needs that you would have from us to make that whole system setup work. So Alan, please be in contact with us, and we will definitely talk more about that and what can be done there. Yeah, great question.

And the next one is by Anonymous. If you can, please use your name, so then it's a bit more human, but yeah, we'll answer this. How is motion prediction different from time warp? Yeah, so time warp is a term used for reprojecting based on the headset movement. So it's the headset movement and the reprojection of the existing last frame; that's time warp. But then if in the frame you have something moving, like a plane flying or some ball

bouncing around the space, motion prediction is helping on that, so we can basically estimate the animation happening in the frame and then predict that movement. So basically motion prediction works next to time warp. They are different features, maybe sounding a bit the same, but they're totally different: time warp is reprojecting the last frame based on the headset pose, and motion prediction is estimating the movement in the frame, the animation or movement of the objects, and then moving those in the frame. Right, so it takes into account the actual content visible in the rendered content. Yes.

Then we have a question from Dan Weeks from BSI. He says he is wondering: if there is a customer that may not want to chroma key due to their space, is depth masking still a viable method? Yeah, indeed, there are two methods of doing layering. Chroma key is good when you have the ability to color things around you. Depth is still just as valid, and it works automatically out of the box for your hands, but has a limited range. So we are recommending depth specifically for hands and things that are nearby, versus chroma key for things that are further away. Exactly, exactly. And I mean you can still use either one of those, so both are still available; chroma keying is definitely not replacing depth.

Then Anonymous, hello again, is asking: how many applications, basically layers, can you support at the same time? I think this is about the multi-app case. Yeah, so it depends on your computing resources, basically, in the end, and your system setup. Of course it's really hard to give an easy answer, but I'm thinking maybe in the context of a single PC with a single GPU, depending on how heavy the applications are; for example, it's quite easy
To run two or three or even four apps, which are rather why saying. By months they get, a really good experience but it depends bit from the case but, I think, the aim for the picture is that like, it's. Used only with. Few apps but we haven't been intentionally, limiting. The maximum amount, so of course if you have a case but. You have like 10 or 100 or even more apps like we. Would be really happy to hear about it and then, maybe there's. Some other ways or other technologies. How. We could actually even enable those so there's, many other technologies, which, can enable even more than ten or even hundred apps running, at the same time and visualize, them for the headset, good. Question by the way yeah all. Right then. Matthew, McKinney is asking, you hey, guys new features look great thank, you, question. On marker, tracking, I tried testing, the marker tracking yesterday, with XR one tracking. Is good but there's a significant. Lag between the marker, and the virtual object, this, means objects, don't appear to be fixed to their markers, what is the source of this lag I. Think. There. Is now the extrapolation, mode, available, that. We launched. In 2.3. So, you can actually have two modes for, the markers, we have what is, more for static, markers. Or static objects, that don't move around and then, kind. Of an extrapolation mode, that, then takes into account the. Marker, movie so. It could, be good that you check that which mode did you actually use. And if. You didn't use the extrapolation mode, please try that out and if that still doesn't do. The trick then please, get in touch with us again and let's start digging into that and a bit more yeah. Man. Roy Matthew, asks when, is the launch of two point four and you want to take that out yeah okay auntie yeah. I think, I mentioned earlier, today like, like. Roughly, end of this month at least like, end part of this month so, yeah. 
I think that's the answer: a couple of weeks away. We try to get it out by the end of this month. Yeah, yep. Alon is thanking us, so we thank you also for joining.

And then we have a question from Jeff Hansberger. He asks: what are the plans for introducing Ultraleap in the XR models, like in the VR-2 Pro versions? So this is about the Ultraleap hand tracking, having that built into the XR. We don't have any specific plans on that. I mean, the hardware as we are selling it, the XR-1, does not include Ultraleap; for that you need to buy the VR-2 Pro. However, we've seen customers adding the Leap Motion, and we've done that ourselves as well. So please send an email to us, and if you have a specific request on that, we can give you guidance and help, like where to place the Ultraleap module on the XR-1 to make it work. Because, like I said, we've tried it out and we have a video of that one as well, and it's kind of mind-blowing when you can see your real hands and then interact with the virtual. Yep.

Okay. Matthew is actually asking about the real-world video shaders: can I use my own pixel shader, and can I have access to the depth buffer in my shader? Yeah, that's actually a good question. So, the pixel shader part: yes, you can actually make a full pass on the image, and it's injected into our compositor, so it's really low-lag. Then the depth buffer part, I'm not actually totally sure. I think you have the depth... well, it's actually not in the post-process API, is it? Yeah, so I think that's something we need to really check. That's a good question. We need to come back on this. Most likely it's available in the same place in the compositor, but how usable it is, that we need to really check. Yeah, that's a good thing to add also to the developer documentation for the package.

Alex A. is actually asking: do you have any news regarding development of hand tracking support for the XR-1? The website, I think, is saying it can be done with depth maps, but, yeah... So it's similar to the earlier question, right? Because now with the depth map you can get your hands visible, and the occlusion is right, but you cannot do interaction with your hands; you just get them visible. In order to do interactions as well, you need to have a separate Ultraleap module next to the XR-1, or some other source. And we see a lot of our customers using real haptic devices, like physical controls. Yeah. [inaudible] That's one way.

Roy: is documentation about version 2.4 available prior to the launch? I think you can send an email to us so we can look at the case, but I think the documentation will be coming with the release. Correct.

And Neal Christianson says: fantastic work, initial override is such a simple solution that will save many headaches. Nice, thank you so much. Do you have any plans for integrating object recognition? Object recognition... We have been in discussions with third-party tracking solution companies, such as VisionLib, for example, and they do object detection and object tracking. I don't think there is any solution that works out of the box right now. We would love to hear more about what the use cases are and see if we can support that. We do have connections in the industry for this; like I said, VisionLib has been used by many of our clients. So that's something that we could look into. For example, is that loaded geometry that matches an external object, using computer vision, for overlaying information? Yeah, exactly, so you'd use a 3D shape, like from CAD, and then match a virtual object on top of that. So, object-based tracking: I don't think we have it right now, but we have contacts; I have actually spoken this week with VisionLib about that. So it's something we're looking into, and we would love to hear more about your use case, Neal, so please get in touch with us and let's talk more. Yeah, reach out.

Joe is asking: will any of these APIs be available to us without the headset? Yeah, that's a good question. So basically, most of the features are available, so you can actually develop with them without the headset. We have this kind of analytics view where you can even simulate the XR-1, so to some extent you can develop everything we were showing even without the headset. But of course, having the headset helps a lot to understand how it really looks in the end and how it really feels, whatever you are working on. So of course we recommend that you buy the headset to really test the software well, but yeah, all
the APIs are public when we release, and then basically it's possible to use the stack even without the headset to actually develop the applications.

And then Harold Gunderson asks if it's possible to get GPS location, so as to place CG objects with known latitudes and longitudes based on your own position, considering movements over time. So this sounds like it's on the content side; it should be possible to do. We are not providing the GPS location from our headset, but you'll have to build the application logic for that, I guess. Yeah.

Bondo is asking: is there any plan to make the XR-1 more affordable? Mmm, it's a question for our sales team. Yeah, I think in the long run, of course, yes, but for the time being, no real plans. I suggest that you please contact our sales to get more information on that. Yeah, okay.

There are a few more questions. From Simon: is this video going to be available after the event? I think yes. Yeah, so there will be a video that we are sending to all our attendees, a video where you can see this same exact webinar. It's the only way to, for example, check the motion prediction. Yeah. Okay, I think that was it. Are there any more questions? Yeah, please shoot, we are still in here.

Okay, I guess we don't have any more questions at this stage. Hey, gents, thank you so much for joining us; I know your time is very valuable. And thank you, everyone, for joining this session despite different time zones. I think it's also a good overview, if you're not a Varjo customer, to understand what is really driving the VR/XR professional market at this stage. So if you're interested to hear more about our features and interested in validating your use case, I recommend you get in touch with our Varjo experts, who are located in different geographical locations, such as Finland, Germany, and again Finland. We have, for instance, Marco Speedman, in charge of design and engineering applications in Europe; here are his contact details. We have our research and medical application expert Ville, his email, and also Marcus Hahnemann, in charge of training applications. And we also have in North America Brandon Turnage, working with the design and engineering customers, Jeff Bond on research and medical sort of things, and also our training expert John Birbal.

So thank you so much for joining us, and we're hoping to see you in our next session. This recording will be shared afterwards with everyone who registered for this session. So have a beautiful day or evening, and stay healthy. Thank you.

2020-09-20