Irene Alvarado // Art && Code Homemade 1/14/2021

Hello everyone and welcome back to Art&&Code Homemade: digital tools and crafty approaches. I'm thrilled to welcome you now to the session with Irene Alvarado, who is a designer and developer at Google Creative Lab, where she explores how emerging technologies will shape new creative tools. She's also a lecturer at NYU's Interactive Telecommunications Program. Irene Alvarado.

Hi everyone very excited to be here. I'm going to talk today about a behind-the-scenes story of a particular tool called Teachable Machine. So as Golan said I work at a really small team inside of Google. I mean we're less than you know 100 people which is small for Google size. And some of us in the lab, the work that we do includes creating what we call experiments to showcase and make more accessible some of the machine-learning research that's going on at Google. And a lot of this work shows up in a site called Experiments With Google.

And time and time again, we're sort of blown away by what happens when you lower the barrier for experimentation and access to information and tools. And so today I want to tell you about one particular project and especially the approach that we took to creating it. So why am I speaking at a homemade event when I work at a huge corporation? I think you'll see that my team tends to operate in a way that's pretty scrappy, experimental, collaborative. And this particular project happens to be a tool that other people have used to create a lot of homemade projects. So to begin with let me just talk about what it is.

It's a no-code tool to create machine learning models. So, you know, that's a mouthful. So I think I'm just gonna give you a demo. So I believe you can see my screen. This is sort of the home page for the tool. It's called Teachable Machine. And I can create different types of what we call models in machine learning.

Basically a model is a type of program where the computer has learned to do something. And there's different types. I can choose to create an image one, an audio one, a pose one. I'm just gonna go for image, it's the easiest one.

And I'm just gonna give you a demo so you see how it works. I have this section here where I can create some categories. So I'm gonna create three categories. I'm gonna call this like neutral or I could call this just like face or something like that. And I'm gonna give the computer some examples of my face. So I'm going to do something like this, give the computer some examples of my face. Then I'm going to give the computer some examples of my left hand. And then yeah that should be enough. And then I'll give the computer some examples of a book. Happens to be a book that I like.

And then I'm gonna go to this middle section to sort of train a model. And right now, it's taking a while because all this is happening in my browser. So the tool is very very private. All of the data is staying in my browser through a library called TensorFlow.js. So one of the main points here was to showcase that you can create these models in a really private way. And so now the model's trained. It's pretty fast, and now I can try it out.

I can see that you know it can detect my face. Let's see, it can detect a book, and it can detect my hand. And, you know, some interesting stuff happens, you know, when I'm half in half out, you see that the model is trying to figure out okay is it my face, is it my left hand, you know. You can sort of learn things about these models like the fact that they're probabilistic.
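To make the "probabilistic" point concrete, here is a minimal sketch of how a classifier of this kind typically turns raw scores into per-class probabilities. It assumes the final layer is a softmax, which is common for image classifiers; the scores, the label list, and the helper names `softmax` and `topPrediction` are made up for illustration and are not taken from the tool.

```typescript
// Labels from the demo; the raw scores used below are invented for illustration.
const labels = ["Face", "Left hand", "Book"];

// Softmax turns arbitrary scores into probabilities that sum to 1.
function softmax(scores: number[]): number[] {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Pick the most likely label together with its probability.
function topPrediction(scores: number[]): { label: string; probability: number } {
  const probs = softmax(scores);
  let best = { label: "", probability: -1 };
  probs.forEach((p, i) => {
    if (p > best.probability) best = { label: labels[i] ?? "unknown", probability: p };
  });
  return best;
}

// A frame that is clearly a face, and one that is "half in, half out".
const clearFrame = topPrediction([4.0, 0.5, 0.2]);
const ambiguousFrame = topPrediction([1.1, 1.0, 0.1]);
console.log(clearFrame, ambiguousFrame);
```

In the second case the winning class gets less than half of the probability mass, which is the wavering you see on screen when the subject is only partly in frame.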

And, you know, so far maybe not so special. I think what really unlocked the tool for a lot of people is that you can export your model. So I can upload this to the web and then if you know how to code, you can sort of take this code and then essentially take the model outside of our tool and put it anywhere you want. And then you can build whatever you want with it, a game, another app, whatever you want. So let me go back to my slides here.

We also have sort of other conversions, right. So you can create a model and not just have it on the web. You can put it on mobile. You can put it, you know, in Unity, in Processing, in other formats, and other types of platforms. And just a little note here of course this is not just myself. I worked on this project with a lot of colleagues, a lot of very talented colleagues of mine. Some of them are here. And I'm going to showcase a lot of work from other people in this talk. So as much as possible I'm going to give credit to them, and then at the end I'll share a little document with all the links in case you don't capture them.

So okay I want to emphasize that a lot of our projects have really humble origins, you know. It's just one person prototyping or sort of hacking away at an idea, even though it might seem like we're a really big company, or a really big team. And just to show you how that's true, you know, the origin of this project was actually a really small experiment that looked like this. The interface sort of looks the same, but it was really simplified.

Like you have this sort of three panel view. And you couldn't really add too much data. It's a really really simple project. But more so than that, even though it was technically very sophisticated, I think our ideas at the time were very- we were kind of exploring really fun use cases.

And just to show you how much that's true I'll show you a project that one of the originators of this idea Alex Chen tried out with his kid. So let's see [organ sound effect] [bird chirping sound effect] [quiet organ sound effect] [bird chirping sound effect] So you can see he's basically creating these paper mache, you know, figurines with his kid. And so he's training a model that then triggers a bunch of sounds.

So it's really kind of fun at the time, just trying a lot of different things out. And then we started hearing from a lot of teachers all over the world who are using this as a tool to talk about machine learning in the classroom, or talk about sort of the basics of data to kids. And then we finally heard even from some folks in policy. So Hal Abelson is a CS professor at MIT, and he was using the tool to conduct sort of hands-on workshops with policymakers. So we had a hunch that maybe the silly experiment could become something more.

But really really didn't know how to transform this into an actual tool. And we also didn't know necessarily what the best use cases would be. And this is where the project took a really really interesting turn, because essentially we met the perfect collaborator to help us and to push us into making it a tool.

And that person, his name is Steve Saling. He was introduced to us by another team at Google who had been working with him. And Steve is this amazing person. He used to be a landscape architect and he got ALS which is Lou Gehrig's disease. And he sort of set out to completely reimagine how people with his condition get care. And he created this space where everything is API-fied.

So he's able to order the elevator with his computer or turn on the TV with his computer. It's really amazing. And so he actually found the original Teachable Machine, and someone else sort of was using it with him. And, you know, we basically got introduced to him, and the question was well, you know, can we figure out if this could be useful to him, and in what way? And, you know, how do we just get to know Steve, and what he might want?

So a little pause here to say that, you know, folks like Steve they're not able to move or you know in Steve's case he's not able to communicate. So he uses something called a gaze tracker or like a head mouse. And he essentially sort of looks at a point on the screen and then can type into- can sort of press click and type a word or a letter. So he's able to communicate but it's really really slow. And so the thought was: okay can we use a tool like Teachable Machine to train some, you know, train your computer to detect some facial expressions and then trigger something. And this of course is not new.

The thing that was sort of new was not for me to train a model for him, but for him to be able to load the tool on his computer and train it himself. Like sort of put that control on Steve. And specifically you know, we basically went down to Boston and worked with him quite a lot. He became sort of the first big user of the tool. And we made a lot of decisions by working with Steve, and sort of like following his advice. And one of the things that the tool sort of allowed us to explore was this idea of immediacy. So what were some cases where Steve wanted sort of a really quick reaction, and how could the tool help for this.

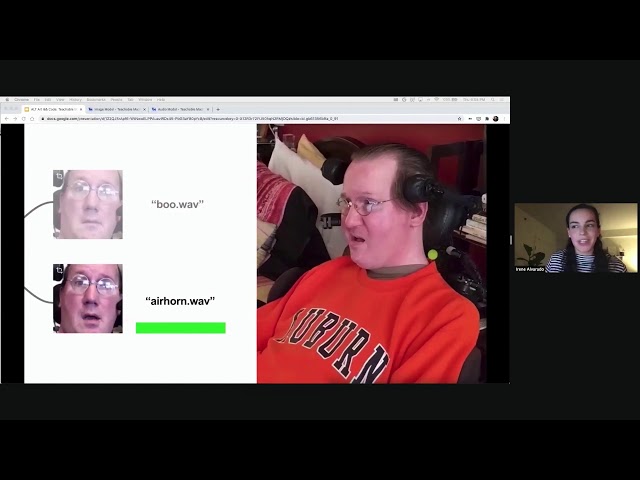

And one use case was he really wanted to watch a basketball game. And he really wanted to be able to cheer or to boo depending on what was happening in the game. And that was something that he was not able to do with his usual tools, because he had to be like really fast at cheering or booing when something happened. And so he trained a simple model that basically, he could sort of open his mouth and you know trigger an air horn. So that was one example.
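As a rough sketch of the kind of logic that sits between a trained model and an action like the air horn: fire when one class is confident enough, then wait out a cooldown so that a single open mouth does not trigger the sound on every frame. Everything here is an illustrative assumption rather than the code used with Steve: the `{ className, probability }` shape, the class names, the 0.9 threshold, and the 2-second cooldown.

```typescript
// Assumed shape of one per-class prediction coming out of an exported model.
type Prediction = { className: string; probability: number };

// Returns a function to call once per frame. It runs `action` when the target
// class is confident enough and the cooldown since the last firing has elapsed.
function makeTrigger(
  targetClass: string,
  threshold: number,
  cooldownMs: number,
  action: () => void
): (predictions: Prediction[], nowMs: number) => boolean {
  let lastFired = -Infinity;
  return (predictions, nowMs) => {
    const hit = predictions.find((p) => p.className === targetClass);
    if (!hit || hit.probability < threshold) return false;
    if (nowMs - lastFired < cooldownMs) return false;
    lastFired = nowMs;
    action();
    return true;
  };
}

// Stand-in for playing the air horn: just count how often it would sound.
let hornCount = 0;
const cheer = makeTrigger("Mouth open", 0.9, 2000, () => { hornCount += 1; });

// Simulated frames: [probability of "Mouth open", timestamp in ms].
const simulatedFrames: Array<[number, number]> = [[0.2, 0], [0.95, 100], [0.97, 200], [0.96, 2500]];
for (const [p, t] of simulatedFrames) {
  cheer(
    [
      { className: "Mouth open", probability: p },
      { className: "Neutral", probability: 1 - p },
    ],
    t
  );
}
console.log(hornCount);
```

The cooldown is a design choice for immediacy: the first confident frame fires right away, and only repeats are suppressed.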

So you know we kind of immersed ourselves in Steve's world. And by getting to know him, we got to know that, you know, maybe other ALS users could find something like this useful. So we started exploring audio. Like could we have another input modality, audio, to potentially help with people who sort of had limited speech. And that led us to incorporate audio into the tool. So I actually have a little example here that I also want to show just so you guys see how this works.

I'm loading a project that I had created beforehand from Google Drive. So let's see if it doesn't, you know, this is- this is some data that I had collected beforehand, some audio data. So there's three classes, there's background noise, there's a whistle, and there's a snap. And let's see if it works. [whistle noise] As you can see the whistle works.

[snap sound] You can see the snap works. So you know, same thing here. I can kind of export the model to other places. So you know, but the interesting thing here is that the audio model itself actually came from this engineer named Shanqing Cai. And he created that audio model for people like Mark Abraham who also have ALS through exploring with him the same idea, like how can I create models for people like that so that they can trigger things on the computer. So the technology itself, you know, also came from this exploration of working with users who have ALS.

And, you know, you can't see what's happening here, but essentially Dr. Abraham has sort of emitted a puff of air and with that puff of air, he's been able to turn on the light. So you know, we decided that okay this could be useful to other people who have ALS. And we decided to essentially open up a beta program for other people to tell us if they had other similar uses, and trying to think about maybe other analogous communities that could find you know, some interest in Teachable Machine. And that's how we met the amazing Matt Santamaria and his mom Kathy.

And Matt had come to us and told us that, you know, he was playing with the tool and he wanted to try to use it for his mom. So he actually created a little photo album that would sort of, you know, change photos for his mom, and he could sort of load them remotely. But his mom because she didn't have really too many motor skills, because she had a stroke, she wasn't able to control the photo album. So we actually worked with Matt. And, you know, you can't see it here because it's just an image, but we actually worked with Matt to create a little prototype of, you know, his mom being able to say previous or next, and then training an audio model that then was able to sort of like change the picture that was being shown on the slideshow. And that was just sort of like a one-day hack exploration that we did with Matt. And then ultimately he sort of kept exploring potential uses of Teachable Machine with his mom.

And he created this tool called Bring Your Own Teachable Machine, which he open-sourced and has shared online. And what it allows you to do is to put any type of audio model, and then link that to basically a trigger that sends texts- that sends a text to any phone number. So pretty cool to see what he did here. And then finally, you know, just seeing that the tool sort of ended up being useful in a lot of analogous communities once we launched it publicly. We saw a lot of uses outside of accessibility. So I wanted to show you a few of my favorite ones today.

This is a project by researcher Blakeley Payne. She used to study at MIT Media Lab, and she was really interested in exploring how to teach AI ethics to kids. So she open-sourced this curriculum. You can find it online and it just has a bunch of exercises, really interesting exercises that she takes kids through. So this one for example explains to them the different parts of a machine learning pipeline. So in this case, you know, collecting data, training your model, and then running the model. She sort of gives them different data sets. So you can see here in the picture, it's a little blurry, but you can see the kids got 42 samples of cats and then seven samples of dogs. And so the idea is for them to train a model, and then see okay maybe the model's working really well in some cases maybe it's working really poorly in some cases. Why is that? And have a conversation with them about AI ethics and bias and sort of how training a model is related to the type of data that you have.
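A small worked example of why the 42-versus-7 split matters: a "model" that never looks at the picture and always answers with the majority class already scores well on overall accuracy while failing every dog. The counts come from the exercise described above; the always-guess-the-majority baseline is an illustrative assumption, not part of the curriculum.

```typescript
// Sample counts quoted in the talk for this exercise.
const counts: Record<string, number> = { cat: 42, dog: 7 };
const classNames = Object.keys(counts);

// A "model" that ignores its input and always predicts the most common class.
const guess = classNames.reduce((a, b) => ((counts[b] ?? 0) > (counts[a] ?? 0) ? b : a));

const totalSamples = classNames.reduce((sum, k) => sum + (counts[k] ?? 0), 0);

// Overall accuracy looks respectable (42 of 49 correct)...
const accuracy = (counts[guess] ?? 0) / totalSamples;

// ...but per-class recall shows the minority class is never recognised.
const recall: Record<string, number> = {};
for (const k of classNames) recall[k] = k === guess ? 1 : 0;

console.log(guess, accuracy.toFixed(3), recall);
```

Looking at results per class rather than as a single number is one way to surface the kind of data bias the exercise is built around.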

Here's another example of her workshop. She sort of asks kids to sort of label their confidence scores. And you'd be surprised, you know. We were invited to join one of the workshops and these kids sort of established a pretty good kind of insight into the connection between how the model performs and the type of data that it was given.

It's not just Blakeley. There's other organizations that have created lesson plans. This one is called readyAI.org and, you know, again they kind of use these simple training samples. In a lot of schools, you can't use your webcam, so a lot of them sort of have to upload picture files or photo files in order to use the tool.

And that was a use case that we found out through education that we had to sort of enable, you know, not having a webcam. And then more so in the realm of hardware, there's a project called Teachable Sorter created by these amazing designer technologists Gautam Bose and Lucas Ochoa. And what it is is it's a very sort of powerful sorter. So it uses this- oops. It uses this accelerated hardware board, it's kind of like a Raspberry Pi. And they essentially train a model in the browser and then they export it to this hardware. And they both were super super helpful in sort of creating that conversion between web and, you know, this little hardware device. Now this is a very complex project. So they made a simple version that's open-source and you can find the instructions online. And it's a tiny sorter, it's a sorter that you can put on your webcam.

And so again you can train a model with Teachable Machine in the browser, and then export it to this little Arduino, and then sort of attach this to your webcam and sort things. In a different vein this is a project called Pomodoro Work and Stretch Chrome extension. And what it is is it's a Chrome extension that reminds you to stretch. And the way it works is that this person trained a pose model that basically detects when people are stretching, right. There's a team in Japan that integrated Teachable Machine with Scratch. So Scratch is a tool for kids to learn how to code.

It's a tool and a language and environment for children to learn how to code. And unfortunately a lot of the tutorials are in Japanese. But the app itself sort of works for everybody. So you can take any model and run it there. And then a lot of other just really creative projects like this one is called Move The Dude.

I can make this probably a little bigger. So it's a Unity game that you control with your hand. And your hand essentially is what's moving the little character around. So again because these models work on the web, you can kind of make a Unity WebGL game and export it. And then just one last example. A lot of designers and tinkerers started using the tool to just play around with funky designs.

So Lou Verdun created these little sort of explorations. In this one he's doing different poses and matching those poses to a Keith Haring painting. And then in this one it's sort of like a cookie store, and then you can draw a cookie and get the closest looking cookie. And then this GitHub user SashiDo created a really awesome list of a ton of very inspiring Teachable Machine projects in case you want to see more of them. So just to sort of go back to the process of how we made this tool. We took a bit of time to think about this way of working and informally started calling it Start with One amongst ourselves, amongst my colleagues.

And it's not a new idea, you know. This is inclusive design, I'm not inventing anything new. Just the phrase Start with One sort of was a way to remind ourselves of, you know, the tactical nature of it. Like you can just choose one community, one person, and sort of start there. And we're really just trying to celebrate this way of working, this ethos.

Start with One was just an easy way to remember that. So just coming back to this chart for a second. This idea of like a tool that we created for one person ended up being useful for a lot more people.

But I want to clarify that the goal is not necessarily to get to the right of this chart. A lot of the projects that we make, they just end up being useful for one person or for one community and that's totally okay. And it's not necessarily the traditional way of working at Google but it's okay for my team. And, you know, this idea of sort of starting with maybe the impact or the collaboration first rather than the scale, it doesn't mean it's the only way of working. It doesn't mean it's the best way of working. It's just a way of working that has worked really well for- for my very small team. So there are a lot of other projects that sort of fit into this bill.

And if you're curious about them, you can see some of them in this page google.com/co/startwithone. It's also a page where you can submit projects, right. So if any of you have a project that fits into this ethos, you're welcome to submit there. And, you know, right now that times are really hard for people I think it's easy to be crippled by what's going on in the world.

I've certainly felt that way, maybe even a little powerless at times. And for me when I think of Start with One I remember that, you know, even small ideas can have a big impact if you apply them in the right places. And I don't just mean pragmatic, right. Like human need is also about joy and curiosity and entertainment and comedy and love, you know.

So it's not just a practical view of this. So just a little reminder for all of you I suppose to look around you, to collaborate with people who are different than you, or even people you know really well: your neighbors, your family. I think the idea is to offer your craft and collaborate with a One and solve something for them. You know, even if you're starting small, you'd be really surprised by how far you can get.

That's it. There's this link, a tiny url digital machine community. I'll paste it in the Discord. And you can find all the other links in my talk through that link in case you didn't have time to copy it down. Thank you very much.

Thank you so much Irene.

Yeah I'll keep this up for a little bit just in case-

Yeah that's great. Thank you so much. It's beautiful to see all these different ways in which a diverse public has found ways to use Teachable Machine in ways that are very personal and often, you know, single-scale, kind of scaled to what a single person is curious about.

Like a, you know, father and a child or, you know, people with different abilities who can use this in different ways to make easements for themselves. It's really amazing. I've got a couple questions coming from the chat. So you mentioned this Start With One point of view. Is this a philosophy that's just your sub-team within Google Creative Lab or does Google Creative Lab have a manifesto or set of principles that guide its work in general? And if so, what sort of guides the work there, and how do you fit into that?

Yeah great question. No I wouldn't say it's like a general manifesto or even of the lab itself.

I would say it's a way of thinking within like my sub-team of the lab. And again like I do want to say like it's not like I'm talking about anything new. Like inclusive design and co-design have been talking about this for ages. It's really just like a short keyword for us to refer to these types of products, but I would say like the Creative Lab does pride itself in basically embarking on close collaborations. So we tend to see that projects where we collaborate very closely, like not making for people but making with people, end up being better. So not all projects can be done in that way necessarily.

But I would say like a good amount are, yeah.

And so I know that there's a sort of coronavirus themed project that uses the Teachable Machine by I think it's Isaac Blankensmith which is the ANTI-FACE-TOUCHING-MACHINE, where he makes a machine that whenever he touches his face it says 'no' in a computer synthesized voice. It's super homemade and it sort of like trains him to stop touching his face. But I'm curious how has the pandemic changed or impacted the work that your team is doing or that people are working with Teachable Machine, or that the Creative Lab is doing? How have things shifted in light of this big shift around them?

Yeah I mean that's a good question. I don't know if I have an extremely unique answer to that except to say that we've been impacted like anybody else.

I mean luckily I am in an industry where I can work from home. So I feel incredibly lucky and privileged to be able to be at home safe and not be a front line worker. It's changed the fact that certainly collaborations are harder to do. Like you saw in the pictures with Steve, like we like to go to where people are, and like sit next to someone and actually talk to them and not be on our computers. And that has certainly gotten harder. But we're trying to make do with things like Zoom and just like everybody else.

I'd say the hardest thing is not collaborating with people in person. And it just takes like a shift, because there's so much Zoom fatigue and you don't get to know people outside of your colleagues. Like when I'm trying to know a collaborator or someone outside of Google like anybody else, you know, you benefit from going to dinner or grabbing a coffee or just like being a normal person with them, having a laugh. And that doesn't really exist anymore. Like Steve actually the person that I was talking about who has ALS, he's so funny. Like he says so many jokes. He's so restricted with the words that he can say because it takes him a long time to type every sentence, you know, it takes two minutes to type a sentence, but he's so funny, like he has such a great personality and humor. And I think that would be very hard to come across through Zoom starting with the fact that it's very hard for him to use Zoom because someone else has to sort of trigger it for him. So certainly that type of collaboration would have been very hard to do this year.

Thank you so much for sharing your work and this amazing creative tool. I like to think that there are several different gifts that each of our speakers are giving to the audience. And one of them is the kind of just the gift- of permission to be a hybrid, right.

Like, you know, you are a designer and a software developer and, you know, an artist and a creator and an anthropologist and all these other kinds of, you know, things that bridge the technical and the cultural, the personal, and the design-oriented, and all this together. Another gift is just the gift of the tool that you're able to provide to all of us, you know, me, my students, and, you know, kids everywhere and other adults. Thank you so much.

Yeah I mean final words is that it's a feedback loop, right. Like I was inspired by your work Golan. Like you give a lot of people in this community permission to be hybrids. And I didn't know that that was possible. And then everyone making things with Teachable Machine inspires us to do other things, or to take the project in another direction.

So I think we have the privilege and the honor of working in an era where the internet just allows you to have this two-way communication. And I think it makes so many things better. So thank you Golan, and thank everyone for organizing the conference like Leah, Madeline, Clara, Bill, Linda. You guys all make hybrids around the world possible.

2021-05-03