The Rise of Confidential Computing, What It Is and What it Means to You

- [Stephanie] Excellent. Good morning everyone. I'm very excited to talk to everyone today about a new paradigm in computing called confidential computing. Each of you in this room is charged with the very difficult task of governing the security of your businesses.

So whether you're offense or defense, or whether you're InfoSec or product security, each of you brings with you a toolbox of techniques and technologies that you use to drive impact in your world. My goal today is to empower you with a new tool to put in your toolbox, and that tool is confidential computing. Standard disclaimer. Now, data exists in three states. We think of data at rest, in transit, and in use.

As data zooms around your network, right? It's in transit. As it sits in storage and waits to drive impact, it's at rest. And while you're doing something meaningful with your data, it's in use. In today's world, we generate and use a tremendous amount of sensitive data. So whether it's something like credit cards, protected health information, or it's simply business proprietary or sensitive information, we need to understand how to protect it in all three of these states.

Now, cryptography is the commonly accepted way to provide things like data confidentiality, preventing unauthorized parties from accessing it, and to provide integrity, both preventing and detecting potential unauthorized modification of that data. Now for data at rest and data in transit, we have pretty mature understandings of how to leverage cryptography to protect data in these two states. The new frontier is how we more efficiently protect data in use. And that's why I'm excited to talk to you about confidential computing, because this is the exact problem that confidential computing is trying to solve. No security presentation would be complete without a slide with scary headlines, and so I promise I only have one of those.

But the reason every presentation has these is because headlines are a great way to drive home why you should care, right? Attacks on data in use aren't some theoretical future state that we're thinking of. The threat landscape is already here. Data in use attacks are already happening. We already have examples of high impact memory scraping and stealing important things like usernames and passwords directly out of memory while that data is being acted on. Because remember our three-legged stool: we're mature in protecting data at rest, we're mature in protecting data in transit. But typically, when we're using the data, we're decrypting it.

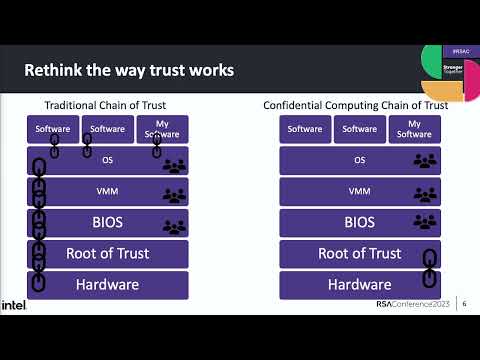

So in memory, that data is often in a vulnerable state. To further drive this point home: confidential computing is not some academic aspiration, and it's not some niche version of computing that's going to stay in specific use cases. Mark Russinovich, the CTO of Microsoft Azure, has a goal, and he cares very much about it, that all hardware purchases Azure makes by 2026 will support confidential computing. It is generally agreed upon by all of the large tech companies out there that confidential computing is not just the future of some niche version of compute, but it's actually the future of all compute. So let's talk about and think about the way trust works in a system. From the second you hit the power on button, trust is built inside of your system by starting at the lowest level and every layer builds on top of that, building a trust and a security relationship with the layer that comes before it.

This means that at any specific layer in your trust stack, its security can only be as strong as the culmination of all the layers that came below it. So something like a vulnerability in your operating system has the ability to potentially undermine the security of your software that is running on top of it. Beyond just vulnerabilities, people who have access to privileged layers like your BIOS, your virtual machine manager, your operating system, potentially then have the access to also introspect or modify the way your software might be running at the higher level layers. I feel confident in saying most of you in this room work for businesses who both run in-house compute, but also leverage things like cloud service providers. So it should keep you up at night thinking about all the different potential insiders, both internal to your company and in the cloud service provider that have access to these privileged layers of your system. So whether it's through one of these rogue insiders potentially doing something to nefariously modify your software, again, both internal to your company or in your cloud service provider, whether it's honestly just something like a security patch at one of these privileged layers that wasn't applied promptly enough, or it's just an honest mistake in configuration that happened at any one of these layers.

The whole idea is if something happens fundamentally lower in your chain of trust, it has the ability to affect or erode security that happens at the higher level layers. So what if there was a different way of looking at how trust works in a system? So I'm still going to have the same fundamental building blocks. But one of the goals of confidential computing is to reduce what we call the trusted computing base or the TCB. So you can think of the TCB or your trusted computing base as all of the things in your chain of trust that you have to trust at that higher level layer. So on the left hand side here, my trusted computing base is essentially all of the layers.

I am establishing trust and trusting in those layers to provide me holistic security. In confidential computing, my goal is to say, "I don't want to have to trust all of these layers. I want to remove them both from the sense that if they have vulnerabilities, I would like to be isolated from them, or if there are rogue insiders who potentially get access to one of those other layers, I would like to be shielded from them." So in confidential computing, my goal is to take that chain and reduce the amount of things that are in it. So trusted computing bases come in a number of different flavors and shapes depending on which of the technologies use and depending on the use case. So I'm gonna show you what the chain of trust your trusted computing base can look like with one of the technologies that specifically is focused on protecting applications and is focused on minimizing your trusted computing base.

So I'm still gonna have to trust my hardware, right? The immutable layer that sits at the bottom the second I hit the power button. I'm gonna trust my root of trust, but after that, I'm gonna bypass the rest of it. This now means that if there's a potential vulnerability in something like my operating system or my virtual machine manager, my VMM, I'm potentially now isolated from that if I'm using confidential computing, because the trust, protection, and security I'm deriving from the system, my trusted computing base, no longer includes them. This also means it's excluding those people who have access to those layers. One of the reasons that cloud service providers also see the future of compute as confidential computing is 'cause the truth is they don't want to be in your trusted computing base. They don't want to have the ability to potentially negatively impact your compute, right? They want to reduce their risk and it's a win-win because you no longer have to trust them having access to these layers and they no longer have to be worried about their potential insiders doing something that might affect your compute.
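To make the chain of trust and the reduced trusted computing base a little more concrete, here is a minimal Python sketch. It assumes each layer is folded into a running hash before it runs, which is a common way to model a measured boot. The layer names and the `extend` and `measure_chain` helpers are illustrative assumptions, not any vendor's API.

```python
import hashlib

def extend(measurement: bytes, layer_image: bytes) -> bytes:
    """Fold the next layer's image into the running measurement."""
    layer_digest = hashlib.sha256(layer_image).digest()
    return hashlib.sha256(measurement + layer_digest).digest()

def measure_chain(layers: list) -> bytes:
    """Measure every layer in order, lowest layer first."""
    measurement = bytes(32)  # start from a known all-zero value
    for image in layers:
        measurement = extend(measurement, image)
    return measurement

# Hypothetical layer contents, lowest layer first.
full_stack = [b"hardware root of trust", b"BIOS v1", b"VMM v1",
              b"OS v1", b"application v1"]
tampered_stack = [b"hardware root of trust", b"BIOS v1", b"VMM v1",
                  b"OS v1 (modified by insider)", b"application v1"]

# Traditional model: the application's trust depends on every layer below it,
# so a change in the OS changes the final measurement.
full_ok = measure_chain(full_stack)
full_tampered = measure_chain(tampered_stack)

# Reduced TCB: only the hardware root of trust and the application are measured,
# so the same OS change no longer affects what the application has to trust.
def reduced_tcb(stack: list) -> bytes:
    return measure_chain([stack[0], stack[-1]])

print("full chain changed:  ", full_ok != full_tampered)
print("reduced TCB changed: ", reduced_tcb(full_stack) != reduced_tcb(tampered_stack))
```

The sketch only models what is and isn't in the chain. It says nothing about how the hardware actually enforces the isolation.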

So we want to remove both those layers from a vulnerability aspect and from the potential of those who have access to those layers. So when is this useful, right? It's interesting to think about how we could redefine trust inside of a system, but where would you actually use this? So first and foremost, quite literally any workload where you just want more security, right? You look at that workload and you say, "It would just be nice to know that it had extra security and that I had data in use protection." But more specifically, there are a lot of use cases where we find that there's a lot higher adoption because there's a lot more urgency and a lot more desire to drive that new paradigm of trust. So one of those is moving sensitive workloads to managed infrastructure.

So I mentioned the cloud service providers, they don't want to be in your trusted computing base and you have the ability to remove them. We're gonna talk a little bit more about attestation and how we drive confidentiality. But the goal is, or what you can accept for the moment, that confidential computing gives you the ability to attest to the integrity and the confidentiality of your data and code in use. You're removing that infrastructure owner, right? You're potentially removing that cloud service provider from having the ability to affect your compute or introspect on your compute while it's happening. And it's hardware-based, right? That immutable layer at the hardware level.

We're gonna talk about new paradigms like multi-party sharing scenarios where this isn't just about providing additional security to workloads that already exist, but we're actually seeing an emergence of new types of workloads that you can have around multi-party sharing where if you leverage confidential computing, you can collaborate on data without actually sharing it with other participants. And then also we can't forget about the regulatory landscape around security and privacy. So confidential computing goes a long way to helping build and meet privacy and security regulations both domestically and around the world. So what does it actually do, right? We're gonna peel this onion slowly, so don't worry, we'll get into the tech details later. But at the high level, what it's doing is it moves all of your compute that's happening in confidential computing into what's called a trusted execution environment.

You'll see these referred to as TEEs. So TEEs happen at that most fundamental hardware layer. And why should you care, or why should you want this to happen at the hardware layer? Remember, the hardware layer represents the lowest level, the immutable piece that starts when you hit your power button.

Also, the way that chain of trust works, hardware is the lowest level on it. So if you can reduce your trust to having to be in simply that hardware, you've reduced the amount of things that you have to trust, you've reduced your attack surface against your potential software application. Now, if you're thinking of data in use protection and you're familiar with this space, you may be familiar with a couple other technologies in this space. So I wanna acknowledge and talk about two of the other things that exist in the data in use space to help you understand how confidential computing and hardware TEEs are different from what's currently out there and how they solve a different use case. So some of you may be familiar with what's called a trusted platform module or a TPM.

It's typically something that sits in your hardware or sometimes you can find soft TPMs. And their goal inside of a TPM is to protect your encryption keys so you can load keys into your TPM. And then what you can do is you can feed data into your TPM. It will do that encryption for you. That way, your keys are protected from being exposed at the software layer. So TPMs do provide data in use protection, but it's very specific to the use case of just encryption that's happening at the hardware layer.
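The "keys go in, only results come out" idea can be sketched in a few lines of Python. This is a toy model, not a real TPM interface: the `ToyKeyModule` class and its methods are hypothetical, and an HMAC from the standard library stands in for the cryptographic operation the device would perform. A real TPM enforces the boundary in hardware; here it is only a naming convention.

```python
import hashlib
import hmac
import secrets

class ToyKeyModule:
    """Toy stand-in for a TPM-like device: callers hold handles, never keys."""

    def __init__(self):
        # Name-mangled attribute; real isolation comes from hardware, not Python.
        self.__keys = {}

    def load_key(self, key: bytes) -> int:
        """Store a key inside the module and return an opaque handle."""
        handle = len(self.__keys) + 1
        self.__keys[handle] = key
        return handle

    def mac(self, handle: int, data: bytes) -> bytes:
        """Run the fixed, built-in operation on caller data with the stored key."""
        return hmac.new(self.__keys[handle], data, hashlib.sha256).digest()

module = ToyKeyModule()
key = secrets.token_bytes(32)
handle = module.load_key(key)

# Software only ever passes the handle and the data across the boundary.
tag = module.mac(handle, b"payment record")
print(tag.hex())
```

Notice there is no method for loading your own code into the module: the operations are fixed, which matches the point about a TPM's code not being mutable by you.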

So the keys that you load into it are protected, that data is protected, but it's purpose-built for only those encryption keys. Also, the code that runs inside of a TPM is not mutable by you. You cannot custom load a bunch of your own code into the TPM. So while it does provide code integrity and confidentiality, understand that that code's not typically mutable by you. Homomorphic encryption is a really interesting new paradigm where you're able to perform compute on data that is still encrypted.

So you never actually have to unencrypt it to do compute on it. So this is a really interesting and emerging area, and I'll go on record as saying I think it's still in the early maturity stages, right? Not commercially viable right now for some of the performance and code explosion reasons, but it is a really interesting technology and it is used in some use cases. So homomorphic encryption, understand though, is specifically focused on data confidentiality because the data is being operated on without ever being unencrypted. So you get confidentiality from that.
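As a small illustration of computing on data that stays encrypted, here is a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields a ciphertext of the sum. The tiny primes and the function names are assumptions chosen for readability, so this is insecure and only meant to show the idea. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import secrets

# Toy Paillier key with tiny primes (insecure, illustration only).
p, q = 293, 433
n = p * q
n_sq = n * n
g = n + 1
lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
mu = pow(lam, -1, n)  # valid shortcut because g = n + 1

def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < n with fresh randomness."""
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq

def decrypt(c: int) -> int:
    """Recover the plaintext using the private values lam and mu."""
    x = pow(c, lam, n_sq)
    return ((x - 1) // n * mu) % n

def add_encrypted(c1: int, c2: int) -> int:
    """Add two plaintexts by multiplying their ciphertexts; no key needed."""
    return (c1 * c2) % n_sq

c_total = add_encrypted(encrypt(1200), encrypt(345))
print(decrypt(c_total))  # the party doing the addition never saw 1200 or 345
```

Because `add_encrypted` needs no key, anyone holding the ciphertexts can run it. The scheme hides the values but does not, by itself, tell you which computation was performed on them.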

But the integrity of that data actually is only derived if the code operating has integrity measures in place. Homomorphic encryption itself does not actually directly provide data integrity coverage that actually comes from the code. And then homomorphic encryption is specific to data so there's no protection associated with the code. So that's where the hardware TEEs come in. So hardware TEEs provide both confidentiality and integrity protections for both the data that you are operating on and the code that you load inside of the hardware TEE. And they do that through something called attestation.

And we'll get into a little bit later how or what attestation is. But recognize all of them support this notion of attestation, but their value propositions are different. And the one I don't have on this slide, but I sometimes get asked about is this notion of sandboxing. So we talked about protecting your application. So I don't have it in here because it's not really a data in use protection, but people do ask me about it, so I'll explain.

Sandboxing is the notion that the operating system actually wants to protect itself from your application, right? In the notion of a sandbox, the application is the thing that is not trusted. The operating system wants to protect itself from you and wants to keep you from getting out of your sandbox. Confidential computing is actually the exact opposite of that.

In confidential computing, I'm saying, "I trust me. What I don't trust is everything else on the operating system." So confidential computing is about protecting things from getting into me, and sandboxing is protecting things from getting out. So let's talk about some use cases. So I told you this isn't just an academic pursuit. This is actually being used, right? We're seeing a lot of increase in adoption.

Understand this technology is only a couple years old, right? So this is emergent. So where are some of the use cases that we've already seen this adopted in some interesting ways? And there's lots of different use cases where we've seen this adopted. I'm gonna cover three that I think help illustrate essentially new ways of thinking about how to provide compute, and so I like them for that reason. So the first one is this new notion called Zero Knowledge Solutions.

Zero Knowledge Solutions fascinate me because the idea is that I can provide a business value to somebody without having or wanting access to any of their data. So I wanna provide you a service, I wanna benefit from that in some way, except I don't want any of your data. So Signal is a really popular phone app, and the reason it's popular is because security of the messages that it has is its highest value proposition. People who adopt Signal the messaging app are typically doing it because what they're concerned about is security.

So Signal, their claim and verifiable, they're all open source, right? You can go through and see that your messages and content are only visible to you. Signal designed from the ground up a solution where they didn't want to have access to any of your stuff. They don't have access to see your contacts, they don't have access to see your messages. It's not just they're providing you security, they architect it in such a way that they don't have access to it 'cause they don't want it.

So let's talk about a specific thing that Signal used this for, and Signal runs on a lot of confidential computing, but we'll talk about one specific feature that they implemented. So when you first connect to Signal, if any of you have used Signal, one of the first things it wants to do is do contact discovery, and wants to discover, of the people in your contact list, which other ones are using Signal. Remember, they don't want any of your data.

They want to do this in a way that doesn't allow them to see who your contact list is. So they don't want to know about your user contacts, but somehow they have to connect you to other users inside of Signal. So the way that Signal does this is they do it inside of a TEE. So when you start up Signal for the first time, Signal is going to attest to that TEE on the other end of that connection.

It is going to make sure that it is running the code as expected, that the permissions are set up the way it wanted, that it has protections in place, that the data's never gonna leave the safety of that TEE. Once it does that, it'll send your contact list inside of that. It'll do that comparison to figure out which of your contacts are on Signal, and then it'll tear all that down. You get back on your side a list of your contacts that are inside of Signal, and Signal itself never had access to that data. So Signal never sees the requests, they never see who was in your contact list, and they never see which of their users were in your contact list.
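The flow just described (attest first, then send the contact list, then get back only the matches) can be sketched roughly as follows. This is not Signal's actual protocol or code. The `ToyEnclave` class, the phone numbers, and the idea of comparing a bare hash of the enclave code are all simplifying assumptions; in a real TEE the measurement is signed by the hardware and the channel into the enclave is encrypted.

```python
import hashlib

# Hypothetical enclave code that the client has reviewed (for example, open source).
ENCLAVE_CODE = b"def discover(contacts, registered): return contacts & registered"
EXPECTED_MEASUREMENT = hashlib.sha256(ENCLAVE_CODE).hexdigest()

class ToyEnclave:
    """Toy stand-in for a TEE running a contact discovery service."""

    def __init__(self, code: bytes, registered_users: set):
        self._code = code
        self._registered = registered_users

    def report_measurement(self) -> str:
        # A real TEE would return a hardware-signed measurement of the loaded code.
        return hashlib.sha256(self._code).hexdigest()

    def discover(self, contacts: set) -> set:
        # Only the intersection leaves; the full contact list is not stored.
        return contacts & self._registered

def discover_contacts(enclave: ToyEnclave, contacts: set, expected: str) -> set:
    """Client side: refuse to send anything unless the measurement matches."""
    if enclave.report_measurement() != expected:
        raise RuntimeError("enclave is not running the expected code")
    return enclave.discover(contacts)

registered = {"+15550001", "+15550002", "+15550003"}
my_contacts = {"+15550002", "+15550009"}

enclave = ToyEnclave(ENCLAVE_CODE, registered)
print(discover_contacts(enclave, my_contacts, EXPECTED_MEASUREMENT))
```

If the service were started with different code, `report_measurement` would return a different value and the client would stop before any contact left the phone.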

They don't see any of those results. And for those who didn't see it, there's a blog down there, if you wanna go in, they go into a lot of nitty-gritty detail on how they do this, the code, everything. And they've got several blog posts about how they leverage confidential computing. This was one specific feature example where they do this. But I highly recommend reading their blog. So the next one is another new compute paradigm that I just find incredibly fascinating.

Privacy preserving machine learning. So AI and machine learning, I mean, you literally can't open any type of news site without seeing something about machine learning or AI. And all of us being security people, we recognize there's a growing need for security in the AI and ML space. So privacy preserving machine learning is a very interesting way of doing machine learning in a way that never actually exposes the data that you're training the model on.

So one of the first public use cases of this was Bosch. So Bosch wanted to train some AI autonomous vehicle models with camera data that was collected from tons of vehicles. So the problem they were facing, and they were facing this predominantly in Europe, but there are obviously corollaries here in the U.S. with our privacy laws.

Privacy regulations like GDPR require companies to minimize the collection of personal data, protect it at all times, and delete it as soon as possible, or, I mean, you have to do all those security things also. But there's also a notion that if you can get permission from every single user to use it in a specific way that that can help you in specific use cases. But in autonomous vehicle data, it is quite simply infeasible for you to get permission from every single person or thing that was in that autonomous vehicle data in those camera recordings. So what they did is they used the TEE in two spots and we'll walk through a flow diagram in a second.

They used a TEE to do de-identification of the data and training of the model in such a way that that allowed them to more easily meet the GDPR privacy regulations and to maintain the efficacy of the model by training it on the raw data and not modified data. So let's see what privacy preserving machine learning looks like. So in the case of autonomous vehicle, my vehicle is capturing a whole bunch of data, right? In this case we're worried about the visual data.

That visual data is rich in stuff that is considered protected information. So you have things like people, you have street signs, faces, license plates, all sorts of things that are identifiable. So personal data under privacy regulations must be removed or protected with all those stringent requirements. But in this case, we want it to be easier to use the data, so our goal is to try and remove it. But the problem is, if we remove it with either things like black boxes or we gray stuff out, in truth, we're affecting the efficacy of that model. Take a safety-critical model trained on data that has grayed out things: what if a grocery bag floats by? And because the model was trained on this grayed out data, that grocery bag looks like somebody's head.

You can't have that in safety-critical models. So what we really need to do is we need to train on the real data, but we need to do it in a way that's maybe not so burdensome, but still protects the privacy and does all the right things from a security perspective. So the first TEE, so the first trusted execution environment. The autonomous vehicle is going to make an encrypted connection to that TEE and do all that great attestation I mentioned before. It's gonna make sure before it sends any data that it's what it expected, that the code running inside of it is authentic, that the integrity is still in place.

So once the vehicle is satisfied that that TEE is set up to its liking, it'll send the data over encrypted inside of the TEE. Inside of the TEE, the data is split into two different pieces, so it's the de-identification phase. So the data is split into the protected pieces of information. So on the top there you can see, I cut out the faces, the license plates, things like street signs. And in the bottom piece, I have the data that is left.

And in the cases of most images, you'll find the protected information is usually like less than 10% of the data, right? It's a subset of it but it's the piece we have to be extra careful about. So we split it into these two. Now the protected data is encrypted, sent to a nice encrypted database, and treated with the utmost of care.

The de-identified data can then be sent out. You can store it unencrypted if you want, right? You can still store it encrypted for best practices. But the idea is here now that you've removed the protected information from it, this is no longer regulated data and you can share it, you can send it out for labeling. It's a lot easier to use and share amongst collaborators because I've de-identified it.

And all that de-identification happened inside of a TEE. So I feel confident that the data came encrypted from the vehicle, it went into the TEE, which we'll talk about how it protects the data, but it is essentially at the boundaries still all encrypted data, and when it left the TEE, it was encrypted and went into a database to be protected. After you've got all the labeling and all that stuff done, you're going to use your second instance of a TEE. You're recombining the data inside of the TEE back into its original form, but now you've got all of the de-identified data that was labeled however you wanted to, and again, that was most of the data, so you could send it out to be labeled by third parties, it's a lot easier to work with.

The protected information is brought back in and it's reconstructed. So now I have the original raw data unmodified and I'm able to authentically train my model on the real data having preserved privacy throughout the entirety of this process. And understand, TEEs are not some silver bullet that means you don't have to do the rest of your security practices. You still have to do the rest of your security practices. It's just that this is a really powerful way to protect that data, right? To meet those regulations that say you have to show security due diligence, right? You have to show that the data was encrypted. TEEs are a really powerful way of doing that.
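The split and recombine steps can be sketched in plain Python. This is only a model of the data flow, not Bosch's pipeline: the frame is a small grid of numbers, the bounding boxes are hypothetical detector output, and the encryption and the TEE boundary around both steps are left out.

```python
import copy

def split_frame(frame, protected_boxes):
    """Separate protected regions from the rest of a frame.

    frame: 2D list of pixel values.
    protected_boxes: list of (top, left, bottom, right), end-exclusive.
    Returns (deidentified_frame, protected_patches).
    """
    deidentified = copy.deepcopy(frame)
    protected = {}
    for box in protected_boxes:
        top, left, bottom, right = box
        protected[box] = [row[left:right] for row in frame[top:bottom]]
        for r in range(top, bottom):
            for c in range(left, right):
                deidentified[r][c] = None  # the real pixels now live only in `protected`
    return deidentified, protected

def recombine_frame(deidentified, protected):
    """Rebuild the original frame inside the second TEE before training."""
    restored = copy.deepcopy(deidentified)
    for (top, left, bottom, right), patch in protected.items():
        for r in range(top, bottom):
            restored[r][left:right] = patch[r - top]
    return restored

# A 4x6 "image" with two hypothetical protected regions (say, a face and a plate).
frame = [[r * 10 + c for c in range(6)] for r in range(4)]
boxes = [(0, 0, 2, 2), (2, 4, 4, 6)]

deidentified, protected = split_frame(frame, boxes)
restored = recombine_frame(deidentified, protected)
print(restored == frame)
```

In the flow from the talk, `protected` would be encrypted and stored carefully, `deidentified` could go out for labeling, and the recombination would only ever happen inside a TEE.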

So this notion of privacy preserving machine learning is a really interesting emergent use case that simply didn't exist before because we didn't have a way to do these types of calculations with data in use inside of these hardware TEEs. Another interesting one is what's called data clean rooms. So you can think of multi-party sharing scenarios where I want the ability for multiple parties to collaborate on the same data without anyone actually having access to the data, right? For a lot of regulatory and data sovereignty reasons, it becomes really difficult to share data amongst other parties that could benefit from that collaboration. So I'm gonna show an example here, and I know the URLs are small, but the use cases have a lot more detail at the URLs at the bottom. So this one, in this case, it's a healthcare example. So healthcare is one where the data is so heavily regulated that it often becomes difficult for collaboration to take place.

But we all know that bringing healthcare data together to drive some of these collaborations is actually a really powerful way to drive impressive outcomes. So this particular example, a healthcare consulting firm wanted to find a way to build a bunch of basically survey data, right? They wanted to build trends and outcomes associated with a bunch of protected medical data. They've tried to run these types of collaborations in the past and found that it was really hard to get people to collaborate, not because they didn't believe in the outcomes, but because it was such a headache and a hassle for them to go through all the due diligence necessary to make it okay for them to share that data. So this privacy preserving collaboration, we call it data clean rooms, multi-party data sharing, they wanted to collaborate on sensitive data across geos. And third, collaboration amongst third parties while mitigating or preserving privacy risks. So privacy regulations and security concerns over data sharing makes this traditionally very hard.

Sharing data like this, especially protected health information, is really difficult with privacy and compliance regulations all over the world, right? Every country has some form of data privacy and sharing regulation that can make this really difficult to do. So one of the solutions they found that helped ease this burden was performing this collaboration inside of a trusted execution environment. So how does this work? How do data clean rooms work? So imagine a scenario where a bunch of hospitals got together. They wanted to all put their data inside of a data clean room.

This data clean room is a trusted execution environment. So the same steps as before. Before they put anything in this data clean room, they have the ability to attest to the integrity, to the confidentiality that it's up to snuff with all of their concerns associated with where they're putting their data. They're gonna encrypt their data and move it inside of the trusted execution environment.

Inside that trusted execution environment, it's gonna be combined with all of the other people's data, right? But it came in encrypted, right? And now it's inside the trusted execution environment which nobody has the ability to introspect inside of. And through my attestation, I was able to confirm the permissions and things associated with it, that people can't access this data once they put it in there. So then what they're able to do was then there was a software wrapper put inside of that trusted execution environment. All of the data put in obviously has to adhere to some sort of agreed upon format, standard, however you wanna phrase it, but all of the data from the various hospitals conforms to some kind of a format. So a software wrapper application around that is then used, so those outside of it, now all of the collaborators have the ability to hit that data without ever having access to it.

So you could ask things and you could see trends like, well, how many patients were male, right? What was the readmittance rate after a certain number of surgery across all of these hospitals? And because of the TEE and all those attestations, I have confidence that the data's protected in all of its stages, and that nobody actually has access to the data. I, as hospital A, don't get any access to hospital B's data. In this case, there are companies, third parties that run these data clean rooms and they allow you all of the attestation probing you want to see but they don't have access to it either, 'cause remember, you removed them from the trusted computing base. They're providing you a zero knowledge solution. They don't get access to your data either.
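The "software wrapper" idea, where collaborators can ask questions of the combined data without ever reading it, might look something like this in miniature. The `ToyCleanRoom` class, the record fields, and the sample records are all made up for illustration. A real clean room would run this inside an attested TEE with encrypted ingest, which is not modeled here.

```python
AGREED_FIELDS = {"sex", "surgery", "readmitted"}

class ToyCleanRoom:
    """Toy stand-in for the wrapper running inside the TEE."""

    def __init__(self):
        self.__records = []  # pooled rows; no method returns them directly

    def ingest(self, hospital: str, records: list) -> None:
        """Accept records only if they match the agreed format."""
        for record in records:
            if set(record) != AGREED_FIELDS:
                raise ValueError("record does not match the agreed format")
            self.__records.append(dict(record, hospital=hospital))

    def count_where(self, field: str, value) -> int:
        """Aggregate query: how many pooled records have field == value."""
        if field not in AGREED_FIELDS:
            raise ValueError("queries are limited to the agreed fields")
        return sum(1 for r in self.__records if r[field] == value)

    def readmission_rate(self, surgery: str) -> float:
        """Aggregate query: share of patients readmitted after a surgery."""
        matching = [r for r in self.__records if r["surgery"] == surgery]
        if not matching:
            return 0.0
        return sum(r["readmitted"] for r in matching) / len(matching)

room = ToyCleanRoom()
room.ingest("hospital A", [
    {"sex": "male", "surgery": "knee", "readmitted": True},
    {"sex": "female", "surgery": "knee", "readmitted": False},
])
room.ingest("hospital B", [
    {"sex": "male", "surgery": "knee", "readmitted": False},
    {"sex": "male", "surgery": "hip", "readmitted": True},
])

print(room.count_where("sex", "male"))
print(room.readmission_rate("knee"))
```

Hospital A gets trends across both hospitals, but there is no call that hands it hospital B's rows. With very small data sets, aggregates alone can still reveal individuals, so a real deployment would need more safeguards than this sketch shows.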

So that's all interesting, right? I hope that that section has helped you understand. There are really interesting ways to use confidential computing I mentioned earlier, 'cause if you want more security, by all means, confidential computing is a great opportunity. But what I find really fascinating is that it opens up new compute paradigms.

These things that I've shown were things that don't exist, well, without confidential computing, right? It's emerging new ways that we can collaborate and offer solutions. Okay, so how does it actually work? So there are two main value propositions associated with confidential computing. From that table, if you recall, it's integrity and it's confidentiality.

So how do we do confidentiality? And we're getting into some of the tech here. So for those of you who are on the edge of your seat wondering, well, what are the solutions that are out there? On the right hand, no, left hand side are the technologies that exist out there in the confidential computing space. And I mentioned earlier, and I'll show you in a later slide, each of them is a little different. It depends on your use case and what you're trying to achieve, which one's going to be the best solution for you. So confidentiality is the notion that I want to protect both the data and the code from being accessed by those who should not have access to them.

So that confidentiality is provided at runtime by a couple different isolation techniques. The first technique is CPU addressability isolation. So these are techniques and things that the CPU is capable of doing to provide isolation associated with compute.

I'll talk through a couple of 'em in just a second. The second one is memory isolation. So beyond just the CPU enforcing and trying to provide you isolation, we also have the memory and RAM doing some stuff too.

So the first CPU addressability isolation technique is access control validation. So all of these technologies use these techniques a little differently. So it's gonna build out kind of a spider chart so you can see. So access control validation is quite literally just the notion that when you go to access something, I'm putting controls in place that say, "If you are not the right confidential computing process, if you are not the TEE, I am not going to grant you access to this other TEE memory space, so I'm quite simply going to just not let you access it." The next is address translation. So for those of you who've ever dug into how virtual addresses are translated to physical addresses, it's a super interesting process.

But all you need to really understand about this is that the CPU is doing that address translation. And what they're doing in some of these technologies is basically saying, "I will not allow you to translate this address because you are not a TEE and that is not your memory space." So when you go to try and translate, even if you somehow knew maybe one of the addresses associated with a trusted execution environment, the address translation simply won't allow you to perform that translation if you do not have permission to do so. The third technique we use in CPU addressability is paging control. So paging control is quite literally this notion that pages associated with confidential computing will not be loaded in the CPU at the same time as the pages that are not in confidential computing.
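Here is a rough model of the first two techniques, access control validation and address translation. It assumes the translation step knows which TEE owns each page and simply refuses to translate for anyone else. The `ToyMMU` class and the owner tags are illustrative assumptions; real CPUs implement this in hardware and each vendor does it differently.

```python
class AccessDenied(Exception):
    """Raised when a requester may not translate an address."""

class ToyMMU:
    """Toy address translation that checks page ownership first."""

    PAGE_SIZE = 4096

    def __init__(self):
        # virtual page number -> (physical page number, owning TEE or None)
        self._page_table = {}

    def map_page(self, vpn: int, ppn: int, owner=None) -> None:
        self._page_table[vpn] = (ppn, owner)

    def translate(self, requester: str, virtual_address: int) -> int:
        vpn, offset = divmod(virtual_address, self.PAGE_SIZE)
        ppn, owner = self._page_table[vpn]
        # Access control validation: TEE-owned pages translate only for their owner.
        if owner is not None and owner != requester:
            raise AccessDenied(f"{requester} may not access memory owned by {owner}")
        return ppn * self.PAGE_SIZE + offset

mmu = ToyMMU()
mmu.map_page(vpn=1, ppn=7, owner="tee-A")  # page belonging to a TEE
mmu.map_page(vpn=2, ppn=9)                 # ordinary, unprotected page

print(mmu.translate("tee-A", 4096 + 16))   # the owner gets a physical address
print(mmu.translate("os", 2 * 4096))       # ordinary memory works for anyone

try:
    mmu.translate("os", 4096 + 16)         # even knowing the address does not help
except AccessDenied as err:
    print("denied:", err)
```

The last call is the case from the talk: the operating system knows an address inside the TEE's memory space, but the translation is refused because it is not the owner.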

So I'm providing isolation by quite simply saying, "If my confidential computing pages are loaded, I'm not loading the other ones," right? So there can't be any cross contaminations because they're existing at different times. I mentioned memory isolation. So memory isolation, there's one main technique here and it's quite literally RAM encryption. So some of the attacks you see against data in use are things like just scraping memory or side channels trying to get memory to leak information.

So RAM encryption means that while all of your confidential computing process exists in RAM, it is also encrypted. So if they were to scrape, if they were to try and side-channel leak memory information, all of that information is encrypted. So all they're getting is encrypted information, and that one's used by most of them. So confidentiality was one value proposition. And this applies to both the data and code, right? Because the data and code exist in that memory space and exist in the CPU at the same time.
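To make the RAM encryption idea concrete, here is a minimal sketch, assuming a toy cipher. Everything in it is hypothetical: `ToyEncryptedRAM` and `keystream` are illustration-only names, and the SHA-256 keystream is a stand-in for the dedicated hardware encryption engine a real platform would use. It is not a secure design.

```python
import hashlib
import secrets


def keystream(key, address, length):
    """Toy keystream derived from the key and the memory address (NOT secure)."""
    out = b""
    counter = 0
    while len(out) < length:
        block = key + address.to_bytes(8, "big") + counter.to_bytes(4, "big")
        out += hashlib.sha256(block).digest()
        counter += 1
    return out[:length]


class ToyEncryptedRAM:
    def __init__(self):
        # Assumption: the key is generated and kept inside the CPU package.
        self._key = secrets.token_bytes(32)
        # What physically sits in RAM, i.e. what a memory scraper would see.
        self.cells = {}

    def write(self, address, plaintext):
        ks = keystream(self._key, address, len(plaintext))
        self.cells[address] = bytes(p ^ k for p, k in zip(plaintext, ks))

    def read(self, address):
        ciphertext = self.cells[address]
        ks = keystream(self._key, address, len(ciphertext))
        return bytes(c ^ k for c, k in zip(ciphertext, ks))


ram = ToyEncryptedRAM()
ram.write(0x1000, b"user=alice;password=hunter2")
print("scraper sees:", ram.cells[0x1000].hex())
print("TEE reads:   ", ram.read(0x1000))
```

Only the code path that holds the key gets plaintext back; anything that reads the raw cells gets ciphertext.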

So all of these, and this is another one where, this diagram, if you wanna lift the true version, I have the PDF source down there of where this diagram is from, and I'll give you some resources later for more of these tech diagrams. So these are the techniques used to provide the confidentiality of both the data and the code. So the other piece I mentioned is integrity, and I've mentioned this word attestation a few times. So integrity is performed or achieved in confidential computing through attestation. Attestation is quite literally trust but verify.

Now, attestation already exists; confidential computing is not the first technology to use this notion of attestation, this ability for you to somehow challenge something for it to give you a response that you can trust. So you have a verifier, you have an attester.

You have somebody who wants to confirm that this is something I should trust. Because confidential computing technologies come in several different flavors, understand that what you're attesting to depends on what the technology is. So some of the confidential computing technologies, which I'll show you on the next slide, protect an application. So in that case, you're attesting to the application. Some protect a VM, some protect a process. Whatever it is that that confidential computing technology is built for, that's the thing you're attesting to.

So how it works. Quite literally, it's a challenge-and-response exercise. I as the verifier am going to challenge with something.

Now in confidential computing, the way this challenge typically takes place is things like measurements. I want measurements of the code that was used. I want measurements of specific hardware registers. I want measurements of things that I consider critical to knowing that that TEE is in a state that I should accept. So it's gonna be measurements about the system itself. But then measurements also about that specific TEE, how it started up, what version it's running, any of the code that you've loaded into it, hashes associated with any of that.

So attestation in confidential computing is measurements. And so you can set up policies saying, "Well, here's the measurements that I accept," right? If the measurement says it's version X, I can set a thing saying, "Well, do I accept version X?" I can choose whether or not I accept those measurements. Now some things like measurement of the startup code, that's stuff that the manufacturer's going to say, "This is what you should look for." And obviously, you should follow that. But then some of them are up to your discretion, like whether or not there are certain versions, how long the TEE has been powered on, when it was last rebooted.
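The challenge-and-response flow with measurements and an acceptance policy can be sketched roughly as follows. This is an illustration under assumptions, not any vendor's protocol: the report fields, `attest`, `verify`, and the policy layout are hypothetical, and the shared `HARDWARE_KEY` with an HMAC is a stand-in for the hardware-rooted signing that real attestation relies on.

```python
import hashlib
import hmac
import json
import secrets

# Assumption: stand-in for a hardware-protected attestation key.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(blob):
    """A measurement here is simply a hash of the thing being measured."""
    return hashlib.sha256(blob).hexdigest()


def attest(nonce, startup_code, app_code, version):
    """Attester: bind the verifier's challenge to the current measurements."""
    report = {
        "nonce": nonce.hex(),
        "startup": measure(startup_code),
        "app": measure(app_code),
        "version": version,
    }
    payload = json.dumps(report, sort_keys=True).encode()
    tag = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    return report, tag


def verify(report, tag, nonce, policy):
    """Verifier: check authenticity, freshness, then the acceptance policy."""
    payload = json.dumps(report, sort_keys=True).encode()
    expected = hmac.new(HARDWARE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag, expected):
        return False                      # report was not produced by the hardware
    if report["nonce"] != nonce.hex():
        return False                      # stale or replayed response
    return (
        report["startup"] == policy["startup"]        # manufacturer-supplied value
        and report["app"] in policy["apps"]           # code we expect to be loaded
        and report["version"] in policy["versions"]   # our own discretion
    )


startup, app = b"vendor startup code", b"my workload v2"
policy = {"startup": measure(startup), "apps": {measure(app)}, "versions": {"2.0"}}

nonce = secrets.token_bytes(16)           # the challenge
report, tag = attest(nonce, startup, app, "2.0")
print("accepted:", verify(report, tag, nonce, policy))
```

The policy is where the discretion the talk mentions shows up: the startup measurement is pinned to a reference value, while the accepted versions are a set the verifier chooses.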

Those are things you have at your discretion. So this is an eye chart, and again, I have the link down there. But this eye chart is trying to show you what I mentioned earlier about all of these different confidential computing technologies being different.

And it depends on the use case of what you're trying to protect. So what you see here is kind of a yellow and a red box. And at the top in the gray, think of that as sort of the legend of what you are trying to protect.

Basically, what is that small trusted computing base that you want to have? So you can get real small or you can protect a whole VM. So from left to right, it's kind of small stuff getting bigger. So the yellow is your package, right? That's your software, that's the thing you are deploying in confidential computing. And the red is the piece that's then in that trusted computing base that's being protected.

So the difference between the red and the yellow, we think of it as kind of the shim, right? It's the thing responsible for setting up and configuring the TEE before it puts the red stuff in the TEE. So you will always have some kind of a shim. The OS might be doing something depending on which of these technologies you're using to set up the TEE. And then what's in red in the diagram is the thing that is then protected. So all of the protections we've been talking about, those are extended to the part of the diagram that is in red. So you may be thinking, how does this work with accelerators? I talked about privacy preserving machine learning.

So accelerators like GPUs are the really common way of doing things like machine learning. So I'll admit that this is emergent in confidential computing. I told you confidential computing is a pretty new paradigm.

So the first wave of confidential computing is focused on the CPU. So that means accelerators can be used, but in a way that's called bounce buffers, which I'll just go ahead and say, is not the most performant thing out there. But everyone, again, all the big tech companies, we believe this is the future of all compute, so we recognize the need to bring accelerators in. So there are two standards that you should pay attention to if you wanna understand how confidential computing will leverage accelerators. So TDISP and SPDM are two of the standards being developed and have now reached a certain level of maturity where they're now being transitioned into hardware.

So you will over the next couple years start to see hardware coming out that now supports these, that will allow the notion of confidential computing to extend those memory protections and CPU protections to an accelerator more seamlessly. So I mentioned bounce buffers. So it is possible right now to use accelerators with confidential computing. But what has to happen is the data that is encrypted in RAM has to be basically re-encrypted, moved into a memory segment that the accelerator can access, 'cause remember, all those memory isolation techniques, the GPUs and accelerators cannot access the memory space of those TEEs. So the data has to be encrypted, moved out, and then moved into the accelerator and then decrypted, and then that process has to happen. So those bounce buffers are possible right now, they're not the most performant, obviously depending on your use case if you're willing to accept the performance hit, you can do this now.
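As a rough picture of the bounce buffer flow described above, here is a toy sketch. All names (`xor_stream`, `send_via_bounce_buffer`, `accelerator_receive`) are hypothetical, the session key is assumed to have been agreed between the TEE and the accelerator beforehand, and the SHA-256 keystream is again only a stand-in for real encryption.

```python
import hashlib
import secrets


def xor_stream(key, data):
    """Toy symmetric cipher (NOT secure): XOR data with a hash-derived stream."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


def send_via_bounce_buffer(tee_data, session_key, shared_buffer):
    """TEE side: re-encrypt private data and copy it into shared memory."""
    shared_buffer[:] = xor_stream(session_key, tee_data)


def accelerator_receive(session_key, shared_buffer):
    """Accelerator side: copy out of shared memory and decrypt before use."""
    return xor_stream(session_key, bytes(shared_buffer))


session_key = secrets.token_bytes(32)   # assumed to be pre-shared with the device
bounce = bytearray()                    # memory both sides are allowed to touch

send_via_bounce_buffer(b"training batch 0001", session_key, bounce)
print("shared memory holds:", bytes(bounce).hex())
print("accelerator sees:   ", accelerator_receive(session_key, bounce))
```

Each transfer costs an extra encryption pass, a copy, and a decryption pass, which is where the performance hit mentioned in the talk comes from.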

But you'll also see over the next couple years an expansion with these standards to allow those memory and CPU isolation techniques to actually seamlessly add the accelerator into that protected memory space. So where can I learn more, right? There's a bunch of diagrams I've seen people taking pictures of. Those all come from the Confidential Computing Consortium. So if this is something that you're interested in and you're trying to think of, how could I learn more? How could I potentially bring this knowledge back into my business? Confidential Computing Consortium is my number one recommendation for where you go. It is a consortium where you'll see, of course, all the logo slides that they have, all the big tech companies that are trying to push this new paradigm and mature it in a way that benefits the entire industry, raise all boats to say, "How do we bring confidential computing to solve the real problems out there?" So all of the diagrams you saw are things from Confidential Computing Consortium.

So what should you do as somebody learning more about confidential computing? I'm gonna challenge you with going back to your organization and thinking about any security or privacy critical workloads that would benefit from confidential computing. So just generically, are there workloads that would benefit from data in use protection? But then I'd also challenge you to think about those new compute paradigms, right? Are there new things that your business could actually be doing, like the privacy preserving machine learning, the data clean rooms, like Zero Knowledge Solutions? Are there actually new things you could be doing now that you have this tool in your toolbox? And what does that potentially mean? Also, intercepting any planned cloud transitions: if you're already in the process of transitioning something into the cloud, you might as well go ahead and put it inside of a confidential computing environment because you're already going through the heavy lift of moving it, knowing that over the next couple years, you're gonna see all compute in the cloud move to confidential computing.

So be one of those adopters who says, "I don't wanna have to move it again later," right? If you're already in that transition, see if there's a way to intercept it and add confidential computing to those plans. All the main cloud service providers already support confidential computing, those technologies you saw. So in the first three months, just better understand how to leverage it, identify those workloads that might benefit. And then the last one there, figure out which technology suits your workload, right? There's no one-size-fits-all. It depends on the use case of what you're trying to solve, which technology might be better for you, right? You saw things like ARM that are in mobile, there are server ones, there are cloud and edge, there are client, all of these are different.

So try and figure out which ones suit the unique needs of your workload. And so the final takeaway is understanding that a number of us are challenged with this three-legged stool. We need to protect data in all of its states.

We have mature processes for at rest, we have mature processes for in-transit, and confidential computing is now the way that I think you should start to approach data in use protection. So make sure that your stool has all three legs. So not only does confidential computing enable just quite simply raising the security bar on any workload, but it's also enabling these new types of compute paradigms, which I think are really interesting to see how things like privacy preserving machine learning, Zero Knowledge Solutions, and these data clean rooms really start to unfold to pave basically the new compute paradigm.

And with that, thank you. I don't know how much time I have. Thank you. (audience applauding) Five? Okay, I have five minutes for questions. And I'm told I have to make you guys go to the microphone, so make sure you go to the microphones.

So I see your hand up, but you gotta walk to the microphone.

- [Participant] Hello. Thank you for the presentation. It was interesting. Here's my question. You talked about memory isolation and RAM encryption.

So if the RAM is encrypted, where is the encryption key installed? And who has the right to know the RAM content? Thank you.

- [Stephanie] So I will say it depends on the technology. And so just to repeat all that.

So the RAM is encrypted, right? And where is the key that is being used, right? Who has access to the key? For most of these technologies, and I'll put this big asterisk, right? It depends on the technology. Each of the vendors has implemented everything slightly differently. So in general, the goal is to protect that key with the utmost protection, right? So it's going to exist inside of the CPU in hardware in general because we need to protect that key, right? The encryption is only as good as your protection of the key, right? So the notion is that key also never leaves your trusted computing base, right? That hardware and your root of trust. Those are the only things you're trusting, so those are the only things that potentially access your key.

And for the different technologies, the number of keys and everything varies, but they all follow something similar.

- [Participant] Excellent presentation. Thank you. So realizing this is emerging technology, obviously, but there are, as we know, certain countries around the world that are interested in the data that is being processed in those locations and have certain legal mandates. Have there been any concerns raised at this point where it may be infeasible for statutory or regulatory purpose to use confidential computing in some legal jurisdictions that may have very permissive requirements for governmental access?

- [Stephanie] Mm-hmm, yeah.

So this is a really interesting one because what we've actually seen is sort of the opposite of what you would expect, right? Some of the countries that have a lot more of what we'd call this permissive access desires, they've actually been some of the biggest supporters of confidential computing, right? We're seeing those countries actually be some of the biggest adopters and the biggest ones who are really interested in this notion of getting the cloud service provider out of the trusted computing base. So it's actually sort of the exact opposite of probably what you would expect where you would want those permissive data laws so that you can harvest your citizens' data. But we're actually seeing some of those countries, and I won't name any specific ones, but everyone's probably got some at top of mind. Some of those countries are actually some of the ones we're seeing with the increased knowledge and adoption of confidential computing because they take security very seriously, so it's kind of interesting. I would say we've seen sort of the opposite of the trends you would've expected, yeah.

- [Participant] Oh, thank you.

- [Stephanie] Awesome, well, if there's no more questions, I'll be around after this. We do have to clear the room, so anyone who has questions, please see me in the hallway or find me on LinkedIn. Thanks everyone. (audience applauding)

2023-06-14 23:29