Avoiding Digital Bias - Adam L Smith

Hello. My name is Adam Smith. I'm chief technology officer of Piccadilly Labs, which is a machine learning and AI company based here in Barcelona, and also of Piccadilly Group, which is a software quality consultancy based in London. I think the presentation is on the other screen, so if you'll just give me a second. Yeah, great.

So I'd like to start by telling you a story. In 2014, two people were arrested in America on the same day for very similar crimes. One of them was a young girl who had just turned 18. She found a kid's bike lying on the street, picked it up and started to steal it. Then she changed her mind, but it was too late: the police had already been called. On the same day, another person, a middle-aged man, was arrested for armed robbery. He used a weapon to go into a shop, stole power tools from it and held up the shop, if you like.

Both of these people committed a crime with a value of around 80 US dollars. Both went to court and pled guilty. But they received starkly different sentences. The reason is that in nine states in the US, the judge receives a report before they sentence someone, and this report gives the judge a recommendation on the likelihood of the individual offending again. We know four years later that the algorithm got this completely backwards: it recommended that she was sent to prison and that he was not, and in the four years since, he has committed a series of violent offences.

What's interesting about this is that there's no appeal. It's a case of "computer says no". It can be very difficult to challenge reports and the output of algorithms when things are not transparent. I'll go into this example a bit more shortly, but first of all I want to explain why this is becoming an increasingly big issue. I want to give you some examples of the different ways this issue can manifest in software, and I want to outline a few ideas for how you can evaluate your systems to see whether they contain any bias that you don't want them to.

The reason I'm doing this is that, as well as working in a couple of companies that look at AI and at quality, I'm also a member of the IEEE's ethical design standards group, which is looking at a range of issues from autonomous cars, to robots in healthcare, to algorithmic bias. Personally, I think that as software becomes more human, we as engineers have a duty to become aware of the ethical issues that arise once we release something into the world.

So I'll start by talking a little about artificial intelligence. A lot of people try to define AI, and there are a lot of definitions. Some are as simple as "it's everything we haven't done yet". Some people believe it's about machines being perceived as human. But a lot of it really comes down to the research goals of AI. If you look at the Venn diagram on the screen, you can see some of the things that will resonate with you as areas you read about a lot in the press. It's difficult to pick up a newspaper or a magazine without artificial intelligence being mentioned.

The reason artificial intelligence has gained traction over the past few years is not as simple as some of the articles make out. There hasn't been a massive breakthrough in research; in fact, the number of research papers written about artificial intelligence has been declining steadily since 2012. The first factor that has driven us to where we are now, and to this stage of the hype curve, is big data: the variety of sources that data is consumed from, and the volume of data being consumed. There's a statistic from early 2017, produced by IBM, that 90% of the data in the world was produced in the previous 24 months, and that will only have increased in scale since then.

But as many of you will know, that's not the only thing that's changed. We have access to compute in a way we certainly did not ten years ago. It's very easy to spin up a server, or ten servers, and process huge amounts of data in the AWS cloud or Azure. That compute can also come with GPU farms strapped on for specific types of modelling and specific types of work, and it can come with pre-built knowledge. Developers are often using it as AI as a service: you connect to a web service that provides specific machine learning or other capabilities. This means that the experts in some of these areas and algorithms become further and further detached from the engineers and the end users of each piece of software. So what has really changed is our ability to gather and process data.

What isn't on this slide, though, is general intelligence. Artificial general intelligence really means the ability of a machine to learn something in one context and transfer that knowledge to a completely different context. There are about 40 teams of researchers around the world working on this, but none of them will claim they are close to anything ready for any kind of production rollout.

So what do we do when we can't implement intelligence? Well, as with any problem we can't solve, we break it down into smaller problems. Looking at the previous presentation just now on intents, the way you use intent classification in chatbots is the perfect example of this. When you talk to Amazon Alexa, you get the perception that you're talking to something with a level of general intelligence, whereas in fact there are a thousand separate intents, each of which has its own little AI. So by joining lots of narrow AI together, we create the illusion of intelligence.

By the way, for those of you who live here in Spain, Amazon Alexa may not be familiar to you, but there is a team working very hard at the moment on getting it working with the Spanish language and rolled out this year.

So my overriding point here is that we need to be careful about the information and power we give to machines, and not be fooled into thinking they are more intelligent than they actually are.

Drilling down a little into machine learning: machine learning is another thing that has significantly changed and enabled the technological developments we have made in the last few years. It is very different to coding, because the majority of the work is achieved by training a model with data, not by explicit coding. You analyse data, you extract features from that data, you rank them by importance, and you train the model to behave as you wish.
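
To make the contrast with explicit coding concrete, here is a minimal sketch of a rule that is learned from labelled data rather than written by hand: a one-feature threshold (a "decision stump") that scores every feature and candidate threshold by how many training mistakes it makes, and keeps the best. The feature names and data are hypothetical, and real systems use far richer models; the point is only that the behaviour comes from the data.

```python
def train_stump(rows, labels):
    """Learn the rule 'predict True if row[feature] >= threshold' from data.

    rows:   list of dicts mapping feature name -> number
    labels: list of bools, one per row
    Returns (feature, threshold, training_errors) for the best rule found.
    """
    best = None
    for feature in rows[0]:
        # Try every observed value of this feature as a candidate threshold.
        for threshold in sorted({row[feature] for row in rows}):
            errors = sum(
                (row[feature] >= threshold) != label
                for row, label in zip(rows, labels)
            )
            if best is None or errors < best[2]:
                best = (feature, threshold, errors)
    return best


# Hypothetical training data: nobody wrote "hours >= 8" by hand.
rows = [
    {"hours": 1, "age": 30},
    {"hours": 2, "age": 25},
    {"hours": 8, "age": 31},
    {"hours": 9, "age": 24},
]
labels = [False, False, True, True]
print(train_stump(rows, labels))  # ('hours', 8, 0)
```

If the labels themselves encode a historical bias, the learned rule reproduces it just as faithfully, which is the theme of the rest of the talk.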

We're moving away from explicit programming and into a training mode. Another interesting thing about machine learning is that it infers absent attributes: data it doesn't have, it infers within the algorithms. Ultimately, it's good at classifying things, grouping things and counting things. But just because it can learn doesn't mean it's self-aware; it's not the same as cognition. Machine learning can't be fair or just unless it's given fair and just data.

A few other issues with machine learning, or rather not issues but differences. The first is the approach to development. You don't necessarily follow the workflow you would follow when developing software against a hypothesis or against requirements; you typically spend a long time playing around with data, trying to get it to output the right things without necessarily understanding exactly how it's working. It can be a black box, and it can be very difficult to explain after the fact exactly how it has come to any particular conclusion. The other difference is that developers working with machine learning models tend to use as much data as possible to get the model to output the result they want, rather than someone sitting down and deciding what data is actually relevant.

So what's the problem I'm really getting to here? AI technology is moving forward really fast, but not at the level of general intelligence, not at transferring knowledge between contexts. We humans have a set of moral values, and those moral values can't currently be added to any kind of algorithm. There's research going on, but it's not possible right now.

Machine learning algorithms find patterns. We humans do this as well: rules of thumb, heuristics, common sense. These are imperfect methods, where we consume data rapidly and make imperfect decisions.
This is all well and good the, way you combine this with the data that defines us our, personal data and by that I really mean our identity. Our. Political opinion, our sex life our, color, our gender, all of these things make us make. Up our identity, and when.

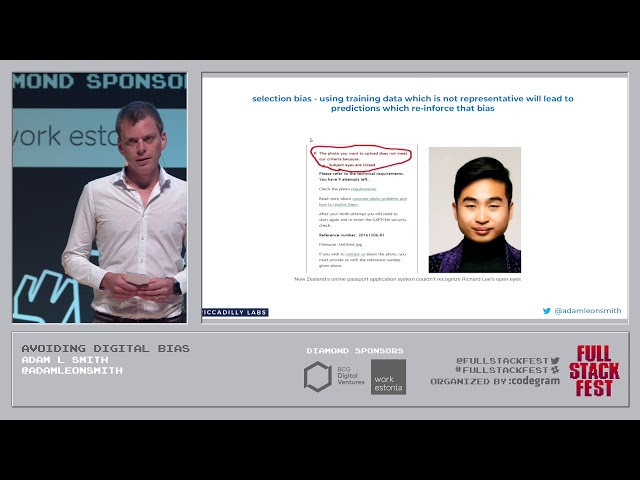

You Use machine learning with this kind of data there are some very significant. And unique risks, that can manifest. So. Just. Talk a little bit about bias so. Bias is a statistical. Concept, all. Machine learning is biased, towards, the data which, it has observed, whether. That's in training. And. Testing, maybe, even in the production environment. The. First, relevant type of bias is selection bias. Where. The data that it's given is skewed, it's. Incomplete, it's. Not representative, of what is the, reality in production, an. Example, of how this can occur is taking an algorithm, that's been trained in one environment maybe for the UK market and, trying to apply that to the Spanish market. Another. Type of bias is confirmation, bias. So. For, me I started. My life as an independent tester. Or I started my career as an independent tester, and this is something people do people. Are very aware of is. Developers. And testers, that, can if you don't have some kind of separation and at least the thought process is there you, can confirm, things work without really, proving that they do. One. Particular risk here is overfitting. So, overfitting, it's, where you, tune the models so much to, work on the dataset that you've got in front of you then, it works perfectly, but you expose it to different data and, it all falls down you essentially. Over tuned the model. Another. Type of bias is training bias is, when the bias in the engineer. Affects. The the, model when, they believe it is right because. They have brought bias to the equation themselves. But. As I say all machine learning is biased this. Is a fact what. We're looking for is inappropriate. Bias bias. Has a negative impact on, the. Stakeholders, of the system. Some, level of biased is natural. Minorities. Are always going to be underrepresented, in. Production, datasets for example so, great care is necessary in preparing, the data and stripping out unwarranted. Bias. So. 
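
One way to look for selection bias before training is to compare each group's share of the training data with its share of the population the model will serve in production. The sketch below assumes you have, or can estimate, those production shares (for example, for the market you are deploying into). The group labels, shares and the 0.5 tolerance are illustrative assumptions, not a standard.

```python
from collections import Counter


def representation_gaps(training_groups, production_shares, tolerance=0.5):
    """Flag groups that are under-represented in the training data.

    training_groups:   iterable of group labels, one per training record
    production_shares: dict mapping group label -> expected share (0..1)
    tolerance:         flag a group when its training share is below
                       tolerance * its expected production share
    Returns {group: (training_share, production_share)} for flagged groups.
    """
    counts = Counter(training_groups)
    total = sum(counts.values())
    flagged = {}
    for group, expected in production_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < tolerance * expected:
            flagged[group] = (observed, expected)
    return flagged


# Hypothetical example: data collected in one market, deployed in another.
training = ["group_a"] * 90 + ["group_b"] * 10
production = {"group_a": 0.6, "group_b": 0.4}
print(representation_gaps(training, production))  # {'group_b': (0.1, 0.4)}
```

A check like this only catches groups you thought to label; it says nothing about proxies for those groups, which come up later in the talk.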

Let's take a look at some examples. This is a computer vision system. When you upload your picture to apply for a passport in New Zealand, an algorithm is run over your face, and for this man the algorithm said his eyes were closed and denied him a passport. This is a perfect example of machine learning being trained on one type of data and then, when applied to different data, coming to very different conclusions. These examples can often be very newsworthy, and they typically affect minorities. It isn't an issue if the bias applies equally to different groups of people, but often that isn't the case, and that's when this stuff really hits the press.

Let's take another example. Researchers used browser plug-ins to look at the ads being served to different people, and they found that Google would disproportionately advertise higher-paid jobs to men. Now, there are counter-arguments throughout a lot of this presentation, and there's one here: that women click on the higher-paid jobs less, and the model had trained itself not to display those adverts to women because they didn't actually click on them. This is interesting, because the bias that actually exists in the real world is then being reflected within the machines.

Another example is face recognition, which is notoriously unable to recognise darker faces as effectively as it recognises white faces. One of the interesting things here is that there's work going on in China to make sure there is a freely available dataset of Chinese people's faces to train algorithms effectively, because at the moment the data is heavily skewed towards the US and Europe.
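
The point that bias matters when it doesn't apply equally to different groups can be checked directly by comparing an outcome rate per group: for example, how often each group is shown a high-paid job advert, or how often face matching fails. This is a minimal sketch with made-up data; the 0.8 cut-off borrows the US "four-fifths" rule of thumb purely as an example acceptance criterion.

```python
from collections import defaultdict


def rate_by_group(records):
    """records: iterable of (group, outcome) pairs with a boolean outcome.
    Returns {group: fraction of that group's records where outcome is True}."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical log of who was shown a high-paid job advert.
ad_log = (
    [("men", True)] * 40 + [("men", False)] * 60
    + [("women", True)] * 20 + [("women", False)] * 80
)
rates = rate_by_group(ad_log)
ratio = impact_ratio(rates)
print(rates)               # {'men': 0.4, 'women': 0.2}
print(ratio, ratio < 0.8)  # 0.5 True
```

A gap like this does not say why the rates differ (the click-through counter-argument still applies); it only tells you the outcome is not equal across groups, which is the trigger for a closer look.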

What's the solution to this? Is it more data? Research has shown that if you quadruple the amount of data used to train an algorithm, you double its effectiveness. But one big risk here is that people take data from the internet, and using data you find on the internet to try to remove bias from your model is a terrible idea. This is what you get if you type "CEO" into Google Images: whilst there are at least one or two women on there, it's skewed towards white men. It has been shown repeatedly that taking publicly available data disproportionately reinforces existing bias, and this is important, because it's like money laundering for prejudice. You're taking bias that exists in society and packaging it up all nice and clean into an algorithm, and it may be years before anyone detects or corrects that bias.

The University of Virginia found that using Google Images to train image recognition systems teaches them to associate women with kitchens. Similarly, researchers at Princeton University found that an unsupervised learning system given different people's names, black-sounding and white-sounding names in America, started to associate pleasant words with white-sounding names and unpleasant words with black-sounding names.

So it should be clear now how data can influence systems and introduce bias. However, there's a further complexity to this problem. This is a screenshot from my Twitter profile. Twitter has decided that I'm male without me actually telling it; that's great, they've got that one right. It has also decided that I'm not under 13 and not between 55 and 65. But how does it know this? It's looking at the different things I do and making assumptions, and that can in fact lead to a kind of indirect discrimination, because once data items are inferred from other data items, as an engineer you start to lose control of what you're actually dealing with. Things like your location, your postcode or zip code, encode a huge amount of information about you: your political opinion, your ethnicity and your socioeconomic class can be guessed from your zip code. Your shoe size, for example, can tell people a lot about you.

Let's take a look at some examples where this has happened in the real world. Staples, if you don't know them, are a huge stationery retailer in the UK and the US. They decided to reduce the prices on their website for people who lived near competitor stores. It seems like a completely logical decision, but what it led to was consistently higher prices for people in lower-income groups. That seemed less appealing to Staples, so they quickly changed their system and put the prices back.
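
Inferred attributes are hard to test for because the model never sees them. One way to surface them, which the talk later recommends in connection with the Staples and Amazon cases, is to join the model's outputs to external, area-level data such as census figures keyed by zip code, and compare outcomes across areas. The sketch below assumes a hypothetical census table with a `median_income` field; the zip codes, prices and income threshold are made up.

```python
from statistics import mean


def outcome_by_area(outcomes, census, attribute, threshold):
    """Compare average model outputs between areas below / at-or-above a
    threshold on a census attribute that the model itself never received.

    outcomes:  dict zip_code -> model output (e.g. a quoted price)
    census:    dict zip_code -> dict of area attributes (hypothetical schema)
    attribute: census field to split on, e.g. "median_income"
    threshold: areas with attribute < threshold go in the "below" bucket
    """
    below, at_or_above = [], []
    for zip_code, value in outcomes.items():
        area = census.get(zip_code)
        if area is None:
            continue  # no external data for this zip code, so skip it
        if area[attribute] < threshold:
            below.append(value)
        else:
            at_or_above.append(value)
    return {
        "below": mean(below) if below else None,
        "at_or_above": mean(at_or_above) if at_or_above else None,
    }


# Hypothetical prices quoted online, keyed by the customer's zip code.
prices = {"11111": 15.0, "22222": 15.0, "33333": 12.0, "44444": 12.0}
census = {
    "11111": {"median_income": 30_000},
    "22222": {"median_income": 35_000},
    "33333": {"median_income": 80_000},
    "44444": {"median_income": 90_000},
}
print(outcome_by_area(prices, census, "median_income", 50_000))
# {'below': 15.0, 'at_or_above': 12.0}
```

The pricing rule never looked at income, yet lower-income areas pay more on average; that is the pattern behind the Staples example.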

There's a rational reason why they did it, and it's not necessarily unjustified.

Amazon as well: Amazon were found by Bloomberg to be offering differential services, in fact excluding people from services, in predominantly black areas. Amazon doesn't know your race, but it is effectively extrapolating it from your zip code. There's a counter-argument again: they're a delivery company, so of course they can decide which zip codes they deliver to, and maybe there are some safety issues packed into this as well. But for us as engineers, what's important is that it wasn't what Amazon wanted at all; they did not like this press attention.

So let's go back to a scarier example, which is the one I started with. Nope, Facebook first, sorry. Facebook have patented figuring out your socioeconomic group from the things you click on on Facebook. Now, Facebook gets a lot of flak for things they've done, but this is one of the more worrisome ones, I think. Do you really want advertisers to be able to pick your socioeconomic group and decide whether to show you something?

So, going back to the example I started with: COMPAS. This was revealed in an investigation by ProPublica, who have also done a lot of the recent work revealing what's going on in some of the refugee camps in America. COMPAS predicts reoffending risk and is an input to sentencing. It uses 137 different data inputs, things that criminologists look at, like whether you're a drug user or a single parent, and it makes predictions about you. What has been found is that you are 77 percent more likely to be predicted to commit a violent offence if you are black. Going back to the story at the start: the young girl was black, and the middle-aged man was white. You can see clearly up here that there's a relatively normal distribution of risk scores for black defendants, but for white defendants the lower risk scores are disproportionately allocated.

This is a proprietary algorithm; it is not accessible to the general public. There have been numerous legal challenges to this specific system. There have also been attempts to remove some of this bias by mathematically injecting different types of bias, which ended up having unintended consequences for other groups of people. There's a whole level of complexity in trying to get systems to behave according to values we think are acceptable. But where this is really risky is where there's no error feedback, where people can't appeal to a human. If they go to prison, they can't prove that they weren't going to violently reoffend, because they're in prison.

So let's take a look at some other risk areas. This slide is from the French data protection authority.

I've added a couple of items and evolved it a little. These are some of the areas where people are getting concerned about how algorithms can be used, and two of them have come up in the UK over the past two or three months. Predictive policing, predicting who will commit crimes, is being rolled out in the UK. And we have a case in the UK where university admissions nationally pass applications through a fraud detection algorithm.

So you apply for university, the form gets uploaded, and an algorithm decides whether it is a fraudulent application, and you're five times more likely to be deemed a fraudulent applicant if you are black.

There was a festival in the UK over the summer, with lots of people enjoying themselves. The police hooked up facial recognition to a laptop and started scanning the crowd, looking for people on their database of fugitives. They found a hundred people, of whom six or seven were actually fugitives, so around ninety-four people were incorrectly identified as fugitives. They were stopped, they were searched, they were subjected to interrogation.

So these are real use cases where there is a high risk, because data about people's identities is being combined with algorithms that we don't necessarily understand or sufficiently regulate.

So what's the answer? Is it more regulation? I don't think it is. In Europe we have some pretty good human rights legislation that already stops us from discriminating against people because of their identity and their protected characteristics. We also have GDPR, which people have different views on, but it does include the right not to be subject to an algorithmic decision that has a legal or similarly significant effect on you. So if it could affect your life, your freedom or your legal status, you have a right to challenge the algorithm's decision. So we've got enough legislation.

Let's recap. Machine learning is a key part of AI, and its use is significantly increasing. Wherever personal data is processed, there is a risk of unfair bias. Protected features like race, gender and political opinion don't need to be in the inputs: you're not just looking at the input-output domain of the algorithm, because other things can come into play. Bias can emerge not only when datasets don't reflect society, but also when they do accurately reflect society and so reflect an unfair aspect of it that you and your company don't want to replicate. This bias can certainly be unlawful, especially within the EU.

So how can you determine whether an algorithm you have built discriminates against people who belong to these specific subgroups? The first way is easy. You can take the inputs to an algorithm and tweak some of them: change the race, change the date of birth, change the shoe size. That's simple and easy to achieve, but it's done on a very small scale.

The next thing you can do is group inputs. You can take a whole set of inputs that belong to people of a specific subgroup and run them through the algorithm, then run a different subgroup through and compare the results. That gives you a wider picture, but it doesn't detect where external data and latent variables are affecting the outcome.

The most effective way, and this is exactly what happened with the Staples and Amazon examples I gave earlier, is to use external data sources, for instance a census. A census is where the government gathers, every ten years, a list of everyone who lives in a zip code and all their different characteristics. You can bring that data into your evaluation process and start to determine whether there is any kind of latent variable affecting the outcome. I'm not sure how you could approach this with shoe sizes, though.

So, a few thoughts about how you can approach this kind of problem throughout the lifecycle, and make sure you bake your values into the algorithmic system you're designing.

The first step is to identify your stakeholders. Who are the people that matter? Who is going to decide what is warranted? Who is going to be affected? How is society a stakeholder in your algorithm?

You need to consider the risk and impact. 90% of the algorithms we build hold very little risk and have very little impact on people. But we've all seen how things like political advertising can be misused.

Think about the diversity in your team. If there are four young white guys in a shared building, some kind of startup, then maybe they're not going to have the perspectives that a wider team would have.

Maybe you should be using production data. A lot of people are very against using production data to evaluate code, but this is one case where the bigger the data source, the more effective your evaluations will be.

Maybe you should determine some acceptance criteria: what's acceptable bias for you?

Which of the test techniques I showed on the previous slide is appropriate for the level of risk you have? What sort of tools do you need to build? Because this sure isn't manual testing.

What consents and notifications do you need to give users? If you're profiling people based on their personal data, you have to tell them. It's the law; it's in GDPR.

And lastly, once you take an algorithm live, you need to constantly re-evaluate it. It's constantly getting new data, constantly having new experiences and changing. As I said earlier, if you're applying it to markets it hasn't been trained in, you need to consider that as well. And of course you need to monitor whether social norms have changed. This year has seen the MeToo campaign, for example; people's attitudes about certain things change on an annual basis, and you need to continually monitor that kind of thing.

So why am I here talking about this? As I said at the start, I'm working with the IEEE on a whole bunch of ethical standards for technology. I'm really interested in use cases where people have had problems in this area, concerns people have, and ideas people have about how to reduce the risk of algorithmic bias within the systems we develop. I think I'm just about on time, so thank you for your time, and I'll take any questions.

It's great that you are so involved in this kind of stuff. How do all these considerations affect your everyday job? Do you have profitability issues? Does being attentive to these things really slow your business down, or how does it affect it?

That's a good question, and it's why we originally started looking at this. We were looking at how we allocate work to different engineers, how we allocate the most effective work to the best engineer to complete a task, and we took a step back and thought: aren't there some issues we need to think about here, some ethical issues? That's when we started looking into this. Since we started talking about it publicly, quite a number of companies, big companies in the UK, have come to us wanting to talk about assistance with developing their own data ethics policy. I think in 2018 the ethics around data has suddenly become a much bigger issue, and we're starting to see it become a real revenue stream, from people who want to invest in this area to make sure their reputations aren't damaged in the way that some of the companies I just flashed up on the screen have been.

Yeah. So it's basically the market asking for things to be done the complete way, not just the easy way. And do you think we will one day find the right way to do it, or will it be readjusting and readjusting all the time?

As technology develops, we need to keep our eye on the ball; we need to be constantly assessing our practices. At the moment, to be honest, we're a long way from having a set of tools and techniques that engineers can pick up. There isn't a book about this out there. This is new stuff, and it's evolving every single week, every single month, during this year in particular.

So you kind of need to be, in some way, a sociologist as well as a developer.

And so some of these working groups have psychologists, politicians and machine learning experts from around the world. There's a real focus on cross-disciplinary examination of how we develop software now.

Very interesting, thank you.

Thank you.
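
The first evaluation approach described in the talk, taking one person's inputs and changing a single field such as race, date of birth or shoe size to see whether the output changes, is easy to automate. The harness below is a minimal sketch. `toy_model` is a hypothetical stand-in for any scoring function, deliberately written to ignore race but penalise one zip code, to show how a protected attribute can pass the test while a correlated field still moves the decision.

```python
def counterfactual_flips(model, record, attribute, alternatives):
    """Re-run a model with one input field swapped for each alternative value.

    model:        any callable that takes a dict of inputs and returns an output
    record:       one person's inputs (dict); it is not modified
    attribute:    the field to swap, e.g. "race" or "date_of_birth"
    alternatives: values to try in place of the original
    Returns {alternative: new_output} for every swap that changed the output.
    """
    baseline = model(record)
    changed = {}
    for value in alternatives:
        variant = dict(record)  # shallow copy so the caller's record is untouched
        variant[attribute] = value
        output = model(variant)
        if output != baseline:
            changed[value] = output
    return changed


def toy_model(inputs):
    """Hypothetical risk model: never reads "race", but penalises one zip code."""
    score = inputs["prior_offences"] * 2
    if inputs["zip_code"] == "99999":
        score += 3
    return "high" if score >= 4 else "low"


person = {"race": "black", "zip_code": "11111", "prior_offences": 1}
print(counterfactual_flips(toy_model, person, "race", ["white", "asian"]))  # {}
print(counterfactual_flips(toy_model, person, "zip_code", ["99999"]))  # {'99999': 'high'}
```

Passing the race flip here proves little, because the zip code can carry the same information; this is why the talk pairs this test with group comparisons and external data.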

2018-10-13