LEARN with IFTF: Playbook for Ethical Technology Governance

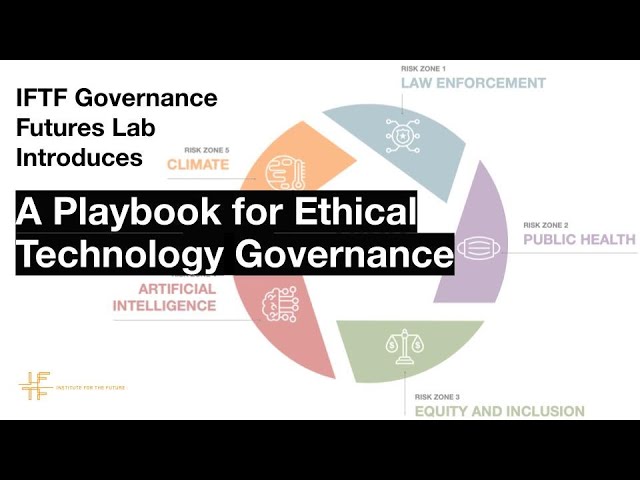

Welcome, everyone, to the Playbook for Ethical Technology Governance webinar. My name is John Clamme. I am the director of strategy and growth here at Institute for the Future. Just to set the table for everyone and go through a little bit of the agenda: we have a 45-minute window planned for this webinar, and for the majority of the time Jake and Ilana will be walking you through this playbook. Note that we will set aside 10, maybe 15, minutes for a question-and-answer period at the end, as I'm sure many of you will have some questions. So please write those questions in as you think of them, in the Q&A section below or the chat, whichever you feel more comfortable with.

So again, thank you. We appreciate you spending some time with us this morning. I also wanted to first give a quick shout-out to the Tingari-Silverton Foundation, who is a funder for this program. They're an Austin-based foundation. And also thanks to Lane Becker, who is an IFTF affiliate and the co-author of this. So I wanted to say thank you to both Lane and the Tingari-Silverton Foundation.

Before we start, we'll ask you, in addition to your name and where you are from, to enter into the chat what words come to mind when you think about ethics and technology. One or two or maybe three words. Jake and Ilana will pick a few of these out throughout, perhaps not by name, just the words, so we don't embarrass anyone. So if you could put those words into the chat box, that would be very much appreciated. There are no kids present, so any words are acceptable; there are no ratings on this.

One other thing to note for the group: after the webinar ends, hopefully at some point today, we will send a follow-up email to everyone who has registered for this webinar, and it will include the video of the webinar. So please forward that on to your colleagues or any other team members who perhaps were unable to
attend this webinar today. So expect that email from us later today.

It's great to see all these phrases coming in. I recommend saving the chat. It's kind of interesting to look at this word cloud of ideas that come up from different people all over the world. So thank you for adding those.

Yeah, so I will quickly just give the overview of who we are. Institute for the Future: we are the world's leading and longest-running futures organization, at least that we are aware of. We're a 53-year-old nonprofit based in Palo Alto, California, and we were started in the 1960s by a handful of guys who left the RAND Corporation and who were looking at what the internet might be used for someday. So, pretty fascinating stuff that they were working on back in the late '60s. But today we work with many of the world's leading organizations: for-profits, nonprofits, foundations, government agencies, NGOs, across the board. Our goal and our mission, and probably why we are a nonprofit, is that we really want to help organizations think systematically about their long-term futures, their preferred futures, and help them become future-ready organizations. And with that, I will pass the mic on to Jake, who will tell you about today's goals.

Yeah, we want to introduce you to the Governance Futures Lab, who we are and what we're up to. We're going to dive into the playbook itself, go through the structure of it, and also do some demos together to look at how it's used, how we designed it to be used as a tool to help think through ethical issues and governing those issues. And, as I said, get some hands-on practice doing that. And then we'll have some time for questions at the end.

So the Governance Futures Lab is, I think, maybe the oldest lab that we've created at the Institute. It started about eight or nine years ago, and our goal really is to educate and arm a movement of social inventors, people who are inspired and
tasked and responsible and mandated to help rethink and recreate our systems of government. So we want to catalyze, educate, and arm a movement of social inventors with tools and concepts to help build better governing systems. We've done that through things like the toolkit we created, which is a step-by-step process for redesigning government. So if you want to know how to do it, here's one structured way to think through it from beginning to end: what do you need to think about, in what order, and how do you even begin such a crazy, audacious idea as redesigning or reconstituting our society? So we have some structures and platforms and participatory processes that we use to do that.

We also have been working with the US Conference of Mayors for the last few years, doing foresight training for mayors and city leaders, and we created a series of posters from the future, working with different mayors around the world. Here are some examples from Rochester Hills, Michigan; Tempe, Arizona; and Oakland. And we work with Santa Fe and Austin and Portland and other places. So we are kind of opportunistic in finding ways to help futurize those working in government, and to help provide structures for thinking through, at a grassroots level, how we get involved, not just in voting or these one-off ways to participate in politics, but in really rethinking the architecture of our political systems.

Okay, Ilana is going to talk through the structure and outline what's in the playbook, and then we'll go diving into some of the examples.

Great. So the playbook that we're going to walk through today contains risk zones, scenarios, the decision tree tool that Jake mentioned, which we're going to practice, some discussion questions, and further reading. So, diving into that, what are these? We have five risk zones contained in this playbook,
which are areas that we've identified as either current or future significant threats, as well as areas that are ripe for technological disruption. Through the research and through interviews that we did with a number of people working in government, we identified these five risk zones that we think people need to be paying attention to. Those are law enforcement, public health, climate, AI, and equity and inclusion.

Within each of these risk zones, we have two scenarios that we've created: one near-term, from 2022, and one from a further-out future, 2032. Each of these scenarios paints a picture of where you might find yourself in that year within this risk zone, and then of some decisions that you might have to make that inherently include some dilemmas, or choosing between trade-offs, or choosing between things that might butt up against your civil service and civic values.

To help with the decision-making architecture, we created this decision tree, which is actually an evolution of the futures wheel, which we simplified and bifurcated into these binary positive and negative choice points of what you do in these scenarios. The idea is to think through the potential positive and negative outcomes of your decision-making and your policymaking, and some ways that you can anticipate unintended consequences of the policies that you're creating and the regulations that you're making, and either remedy them or use that hindsight that you've gained to create different and better policies.

Then, after each of the decision trees, there is a series of questions for consideration that you can use within your teams and within your departments to think a little bit more deeply about these scenarios. In particular, if this scenario or this issue area doesn't feel immediately relevant or tangible for you or your department, these questions are designed
to help you think about how you might apply that decision-making architecture to things that you are actually dealing with on a day-to-day basis. So that's the basics of what's in the playbook. We think the most exciting part comes from actually walking through the scenarios and using the decision tree, so Jake is going to walk us through an example.

Yeah. We have these scenarios, and I will give a little bit of background. We built the Playbook for Ethical Tech Governance kind of on the back of, or as the complement to, the Ethical OS piece that IFTF did: Sam Woolley and Jane McGonigal, colleagues of ours at the Institute for the Future, working with, and targeting, an audience of tech companies. How do you get ahead of your products being used and abused, and destroying democracy or creating injustice? How do you think ahead so that the things that you're building don't end up having these negative outcomes? We presented that at one of the meetings of the US Conference of Mayors, in Austin, and it really resonated with the mayors and city leaders. So, inspired by that, we thought, okay, let's do a version of this toolkit or process of thinking through the ethical implications, adding foresight to that, for city leaders, for mayors, and for civil servants. That is how we ended up creating and designing it in certain ways, and why the scenarios are crafted the way they are: for an audience of civil servants or people in policymaking, making these decisions from a government point of view.

So, as Ilana said, we have five different zones. One of them is law enforcement, and this is a near-term scenario that we created. I think these issues will be familiar to many people. It's in the news every day, and that was part of our
design as well: to do something that's now-ish, something where you might face, or have faced, these ethical choices right now, and then other things that push further but have the same structure of thinking, so that we can get ahead of those things.

So, law enforcement. Here's the kind of scenario that you would be working with in this case. Your county recently began using a facial recognition system to identify individuals caught on surveillance cameras. Recently, on the recommendation of this system, your department arrested a Black resident at his place of work, drove him to a detention center, and interrogated him for several hours before determining the system had misidentified him. You can find many examples of this kind of scenario already. Yes, Coded Bias, Sheila; too many examples of this. So in this scenario, activist groups are lobbying to ban every police force in the state from using any facial recognition technology. We're not trying to add any value judgment ahead of time; facing choices is what we're trying to put forward with these scenarios. So, to counter this movement, you've been tasked with proposing publicly available, legally enforceable guidelines on the acquisition, implementation, and oversight of the algorithms that facial recognition systems use when making criminal justice decisions in your county. These are the sort of ethical choices. Maybe you're being told to, and maybe you agree or disagree with this, but this is your task. So how would you go about this? How would you think through this? Let's go to the next slide.

Okay, so the decision tree, as we said, is in a way a somewhat more confined or directed version of the futures wheel, where you think about what-if questions: if you do this, what happens next? If that happens, what happens next? And we have this positive and
negative outcome in this case, just to put a finer point on thinking through some of the things that could happen that you hope for or intend with this, and some of the things that maybe you wouldn't want to see happen, and how you deal with those things.

We went through this with a colleague of ours to run through the tool itself. In this case the suggestion was: okay, let's create an oversight office, a sort of citizen group that would approve any AI and facial recognition warrant or arrest. So before you make an arrest or issue a warrant, it has to be vetted by an advisory group. All right, what's a positive thing that could happen? Well, if it goes well, this oversight office will prevent false arrests based on algorithmic bias. Okay, great. And then we go over here: what's something negative that might happen? Well, it might drastically slow down police work and become too expensive, and therefore be rarely used. So maybe the benefits of this technology are thwarted because of this bureaucratic oversight.

And then from there you build on. We ask: okay, now what would you do? What would you do if oversight slowed down this tool and made it too expensive? What you could possibly do is examine court systems to see how warrants are being issued. You could review other programs that could be leading to a drop in crime instead. So it's taking a more systemic view of that, and maybe there are other angles to doing police work. That's how we go through it, and you can see each one of these prompts helps you think systematically: okay, let me do this, let me do that. And then, ending up at the top: what are things you could have done differently in the first place? Maybe you go back now and think about that first choice and say, okay, let me get
ahead of this. What could I have done differently from the start that maybe wouldn't have led to some of these negative outcomes that we had to backtrack and fix later? So that's the idea. That's the kind of thinking process that we're trying to scaffold with this tool.

As we said, in the structure of this you're given questions. You go through this process, through these prompts, and through the lens of civil service values: are the choices we're making at every point embedding those values? One of the things we found in our interviews and research, which was very interesting, and I don't know if surprising or not: we talked to many people working in government and civil service, and they were saying, yes, public values and civil service values are so much there that they're not talked about. You're expected to act on them, but we don't have a systematic way of going through this. I think what we're trying to do with this is contribute to that. Okay, if you have these values, how can you use them in a systematic way to think through your decisions if you don't have a formal process for doing that? So this is, hopefully, a contribution to that: a more systematic or more structured process for thinking through civil service values and ethics.

Okay, Ilana, you want to take them through? It's your turn now, everyone.

Great, thank you, Jake. So, as I mentioned, we have a number of different risk zones, and now we're going to take you into the risk zone of climate in the year 2032. Again, we recognize that not every person working in government, or in every department, is going to have to address each of these issues in each of the risk zones that we're talking about. So we're trying to embed within the scenarios
a number of different questions and values and ethics, as Jake was mentioning, and thinking about how the decisions that we're making impact or reinforce those values. So we'll talk through a little bit of what that means in this particular scenario.

In 2032, citizen scientists have created an open-source pollution map of your city, along with early-warning drones that alert residents when pollution levels rise, telling them to stay inside. Recently, these drones swarmed a neighborhood with a warning before the city informed residents of potential toxic exposure. So, understandably, people are upset. They feel like the city has betrayed them. There's a loss of trust, because the city is not telling them what's going on in their neighborhood before these drones do. So the department of public health is proposing a new, lengthy regulatory process for the drones: the drones have to be approved before they can be used. Residents are protesting this, because they say they're not getting health information when they need it from the department of public health, and so they want these open-source drones. So you, as a regulator at the department of public health, what do you do about this?

We're going to walk through this decision tree, which is welcoming us in with an aloha and hello. We're going to ask you to put your ideas in the chat, and Jake's going to call out some of your responses. In this scenario, where the department of public health wants to regulate these drones, but the citizens, who feel like they're getting more accurate information from these open-source drones, are protesting, what do you as a regulator with the department of public health do? So feel free to just put your ideas in the chat.

So we have one from Aaron here: regulation could prevent possible panic due to errors in notification algorithms. That's, I think, an observation on that. Are there
other choices? Maybe we should go, you want to go back? Okay, from Sheila we have: call an investigatory panel with all represented, and investigate the quality of health information and communications. Maybe that would be a good initial start for this proposed idea.

Great, thank you, Sheila. So we have an investigatory panel that's going to look at the results. So what is a positive outcome that might occur from creating this panel?

Yes, add it to the chat. What are the positive outcomes, the best-case outcomes, for this proposed idea? More participation and involvement. The drones could become more efficient at detecting pollution and alerting the public. Create new outcomes. Transparency fosters trust with residents. Lots of minds considering the benefits for a wider population. The panel will be more transparent.

Great, these are great. It seems like there are a lot of very immediate positive outcomes when thinking about this investigatory panel. So now we're going to switch our mental gears, which is part of the point of this decision tree: to force us to think in two very binary directions. So what are some negative outcomes that might happen because of this investigatory panel?

Polluters shift their emissions to avoid drone detection. Right. A discussion of who wasn't able to participate in the panel, who was left out. Selection becomes a politicized battle. Yes, thanks, Vanessa. Governments start using drones for other purposes. Right, yeah: what other data is being gathered, and how can that be used or abused? Industries could lobby to heavily regulate the drones and render them useless, or borderline useless. Right. These are great. The negatives are really coming. Or even the perception that the drones are doing that, right?

So let's go with that, the last one that you just read, about governments using
drones for other data collection purposes. Right, or even the perception, which was part of it, which could undermine this as well: even just the hint, or the conspiracies that might be associated with this, that the government is using it to spy on people or gather other data. Yes, thank you. So, the use of it and the perception of it. So again, in your shoes as a regulator with the department of public health, what do you do in response to this potential negative outcome?

Yeah, how would you respond to that? Either the government is using it, or people perceive that the government is gathering data and using it as a surveillance mechanism. How would you deal with that? You could do a series of public information notices on the use of drones and what their purpose is. Right. Drone data could be made available open source. Yeah, that's great; anyone can look at it. The optics. Release guidelines on use. Find out what the prevalent narrative is for the drones in the public, and create communication plans based on that. Set up an oversight board inviting civil society orgs.

You know what I love about this? Sometimes there's an oversight infinite regress that happens, right? Who's policing the police, who's overseeing the overseers, and all of that. That is why I love the kind of ethical dilemmas and choices that this process brings out. How do you deal with that? Do we just keep adding other layers, or are there other ways to look at that? Just thinking through those layered consequences helps us see that sometimes.

Clear information about what happens with the data. Consequences for regulators if they abuse it. Right, so some real teeth to that. Look at how bad actors could hack the system. Yeah, even black hat hackers, which some governments and organizations use to see how the system can be abused or broken into, or, you know,
do that ahead of time. Right.

Great, so these are great, and as you can see, they have taken us multiple layers past this initial decision of creating an investigatory panel. What we would then do is think about: okay, what could you have done differently in the first place to anticipate some of these negative outcomes? What would you have put in place? We would walk through it that way, but for the last interactive part I want to bring it back to the positive side. What we also want to think about is that things that seem to have an initial positive outcome might also have a negative outcome for certain populations, or down the line. So after we thought about what positive outcomes might occur, we would then prompt: okay, well, what are some negative outcomes that might occur because of these positive outcomes? I know we had a lot of great ones about improving trust and transparency, but what we really want to force you to think about is what negative outcomes might occur from that. Some of those conversations came up in box number three: certain people aren't included in the panel, or changing the way that the drones operate, or certain lobbying effects. So I want to open that up to a conversation too. Just enter in the chat again: what are some negative outcomes that might occur after this initial positive outcome?

One size fits all might not be the case. Some language groups excluded. Yeah, exclusion, I think, is a big part. Participatory is one of the positive outcomes, but how wide is too wide? If everyone is involved, when does it become too unwieldy? That's always an issue with government participation.

Yeah, great. So these are great, and as you can see, we went really quickly from trust to lack of inclusion, or from openness to not being able to have control or
efficiency. These are some of the conversation points that we really want to encourage people to have about these trade-offs, about these dilemmas that are embedded within each of the decisions. So you can get a sense of how the decision tree works. You could obviously continue to have these brainstorm conversations for multiple hours or weeks on end about policy decisions.

And then again, after we go through these multiple layers of consequences and potential outcomes, we have these questions for consideration. As examples with this one: if the wisdom of the crowd is trustworthy, is it your place to regulate how it spreads information? So again, this question of openness and open source versus regulation. Or: as a government agent, how would you work with the citizen scientists to rebuild trust in your agency? And again, we saw some of those conversations around, well, the openness or the investigatory panel can create trust, but then there are issues of inclusion and openness. So really, again, we want to encourage these conversations within your departments.

And just to add a little meta-commentary on this: you can go through all of this and then get to the point where you go back and say, this doesn't work. That's a perfectly fine outcome. The point is to try to get ahead of these things and not learn the hard way, as with any foresight work. So the process is really to look at the outcomes and then to weigh those choices. There are going to be negative outcomes for almost any policy, right? There are going to be people left out, or those at a disadvantage for certain policies. So it's about getting ahead of that, thinking through that.

There was a question from Bogdanna earlier which was really great: what is the process of going through it? Does each civil servant go through it by themselves, or do they do it collectively? And then, you know,
how do you process that information? I think you could use this individually, yes; that's one use case. Another is to do it in your group, your policy group, or with city leaders and decision makers. And I really think, and we talked about this with a lot of our interviewees, that when policies are made, when decisions are made, there are these trade-offs that are happening, whether they're made explicit or not. I think this could be a great tool to go through with citizens, to say either: here's what we thought, how we got there, and here are the trade-offs that we made; or: let's go through it in a public way to think through this, and where are the places that we're willing to give a little bit on the ethical trade-offs, or on who's left out, and try to deal with that. We know there are going to be issues, and there's no perfect answer, but I could see this being a much more transparent way to talk through these ethical issues, not only for foresight but for public communication and buy-in. I think it could be used in that sense as well.

Yeah, thank you. So John is going to talk about what we have. This playbook is publicly available. You can use it; we designed it to be self-facilitated. And, speaking of trade-offs, that's why we made some trade-offs in the kinds of things you could think through: the different implications in a broad sense, which we narrowed into this limited, but hopefully enabling, constrained version of positive and negative, to help you think through that in a systematic way. So it's there; it's going to be given to you, I think, in an email, and you're welcome to share it with anyone. But we also want to provide facilitation services. We can run workshops with you; we can work with a group or
an organization or agency. So we have some offerings here where we can come in and collaborate with you to think through this and use the tool for different occasions.

Thanks, Jake. Yeah, as Jake mentioned, the playbook itself is free, and we will be sending that as part of the follow-up email. But there are three offerings that we would like to walk through briefly, and we can answer any questions that you might have if you would like to implement this playbook within your own organization.

There are two different workshop options, a half-day and a one-and-a-half-day. As you can see on the slide, the half-day workshop would simply be where our group comes in and gives an introduction or overview of the playbook, the risk zones, and the scenarios. We would provide you with dedicated time to practice using the tools, both the decision tree and additional prompt questions that you could use, in small breakouts, and this would be accompanied by expert facilitators. I have a feeling you're looking at two of them right now.

The second offering is the day-and-a-half offering. This is much more of a specific deep dive into the playbook. It includes everything that we mentioned above, so that introduction and overview and how to do it, but we will take you deeply into two of the risk zones specifically. We'll walk through that decision tree with feedback and input from the facilitators on site. Even more than that, there will be much more hands-on application, so you can practice using these methods and applying them to your own work, with feedback and input, again, from the IFTF facilitators.

For both of these sessions, we can provide various discounts for government agencies and other entities, so please talk to us or follow up on that. The half-day session would run somewhere in the 20,000 to 30,000 dollar range, and the day-and-a-half workshop is more in the 60,000 to 75,000 dollar range. Again, what we
propose is that you let us know what your level of interest is, and we can provide a specific one-on-one follow-up call and meeting with you to walk through how you can use it specifically for your organization.

Then the last piece is a custom speech. We can create a customized speech for your organization, where Jake or Ilana or someone else here at the Institute can come and give that speech to your group, and that is in the five-thousand-dollar range. So those are the three offerings that we wanted to propose to this group. Again, I will outline these in that follow-up email, and hopefully we'll have an opportunity to talk with you specifically about your needs and how IFTF can help.

And now I wanted to walk through some of the questions that we received during the webinar. Again, feel free to keep typing them in as they come up. One that we wanted to tip off with was from Francis, and she asked: what have you done, or do you do, to make certain no bias is present in this process and the questions asked? The main premise is that there's something inherently wrong with protesting.

That's a great question. We're trying, as best we can, to simulate what a civil servant or a policymaker might face, and we're not necessarily trying to make a value judgment on protest ahead of time. We want to provide a scenario, a plausible use case, for civil servants to think through. So we don't necessarily have an opinion about the protest, but it's something that might happen, right? And maybe the protesters have a valid point that you need to think through and then bring in to the decision tree process. Okay, what are the negative outcomes? Expanded protest, or more aggressive protests: how do we deal with that? Or maybe the protesters have a valid point, and you make a decision that addresses some of their complaints
ahead of time through this so we're just trying to lay out the kind of agents and dynamics of a plausible situation and then you know the decision tree there is really there to help think through those what-if questions and to keep doing that and looking at the layers of intended and unintended consequences that could happen and yes garrett uh protest and riot and insurrection these terms are very fluid and uh the way we have typically thought about them and stereotyped them i think are changing very rapidly as well great thanks jake and actually another question from francis um asks so when you do this do you have multiple positive and negative outcomes but you create something like a risk log to mitigate that yeah i don't know ilana if you want to take that one um yeah i think part of the like jake was saying you know when we get to the end of the decision tree and go through these questions part of the idea is okay well what do you do now how do you take this foresight how do you take this kind of um future hindsight and apply it to what you're doing now and part of that can be yes looking at the various risks if you want to create a risk log and kind of weigh them against each other and think about how does that impact your decision making now and how are you going to weight some of these risks versus the others and think about these trade-offs think about the dilemmas and is that going to affect your policy decision is that going to change the policy decision that you're making so we don't have a like a specific chart for logging these risks but i think that's a really great way of putting it because that is essentially what you're doing every time you think about the potential negative outcomes of your initial decision or even the negative outcomes of a positive outcome is um logging those risks and then using them to weigh against the decisions that you're making or
the dilemmas that you're trying to address so also imagine just in that use case of the climate and the drones and the you know the data gathering one of the negative outcomes was that people feel like the government is going to you know gather other data and that you know there's this sort of conspiracy side of things that may be generated right whether it's valid or not um i think there's a lot that's valid about how you know the powerful use data but imagine you've weighed these trade-offs and you say okay yeah we think this is still valuable it's a really good thing even though you know it might cause some of these issues get ahead of that narrative you know start building your campaign your anti-conspiracy campaign ahead of time before you ever launch it or engage groups that you think might have skepticism toward it ahead of time so you know i think these kind of things help you not only you know maybe make different decisions but prepare to launch in a way or to engage different groups and create processes that will alleviate potential barriers and hang ups and backlash that might happen ahead of time get ahead of that great thank you thank you both um a question from ashish uh the question is a sidebar question when working with city officials how are you navigating their tendency to retain control versus having openness to be more experimental or collaborative learning oriented decision making i ask considering the background of trust deficit and quasi-authoritarian or adversarial governance behavior i want to pass that to ilana too that is a great question um so the first way that i would answer that is um i think similar to what jake said about using this with citizens to kind of establish that open rapport and see where each group is coming from and kind of see your different priorities and how you might decide to do something or what your priority is
based on where you're coming from i think that's just as valuable internally um and to kind of see where those edges and boundaries are of what can be open what you know where there can be innovation where there can be creative prototyping and who has the uh who has the autonomy to do that within a government um and sometimes there might be somebody at a lower level who has the ideas who has the desire to do something but they run up against um the structure of the bureaucracy and the red tape and they can't make those decisions and so um if you're walking through this with a department or with an elected you can kind of see where people's different um edges are in terms of what they're able to do and how they're able to make decisions um and so of course walking through a decision tree isn't necessarily going to overcome an authoritarian government but i think it might help within a department or within an agency or even across agencies in getting a bit of an understanding of where some of these decisions or innovations or prototypes might actually take place where there's openness for it where there's space for it um and how you can get creative with doing some of these things thanks ilana i have a handful of questions that i'll sort of try to combine here but um the general theme is who would be the best candidates to take a workshop like this in other words what types of organizations or people or roles or job titles um would be the best fit and kind of what is the ideal size how many people within the workshop yeah very good practical questions i mean we wanted this to target government workers policy makers decision makers but we also wanted it to be as best we could applicable to civil servants working in different roles but i would say you know having been spurred by mayors we were thinking about kind of key decision makers uh as one audience city managers um you know uh the
people that are doing uh policy city councils uh chief technology officers uh internet security types uh that might be making these decisions looking at outcomes looking at if i roll a policy out what are the impacts going to be even i mean as we've really started to highlight in this conversation public relations and uh you know the messaging that goes along with a rollout of policy too this is very important as part of that communication side of things as well so you know broadly uh anyone working in the civil service who is wrestling with and making these decisions and kind of crafting policy um you know from the top to other people in layers of the bureaucracy that are implementing and rolling this out ideal size uh you know i hesitate to put hard numbers on things but i tend to like a kind of working group in the 15 to 25 range i have to say um but as we said you could do this by yourself you could think through this on your own or you could have it as a kind of public meeting i could see this being you know implemented in a virtual setting where people could comment on each one of these things and you could kind of roll it out in a virtual way where there could be dozens or hundreds of people contributing in an asynchronous kind of way so um hopefully you know as we said it's made and designed to live on its own but you know we can see these other use cases where you could come together in a sort of condensed way and do it in a room or maybe spread it out asynchronously and virtually all of those i think are valuable with this great thanks jake um question from elizabeth how do you help policy makers use their values to weigh risks or is that something you leave to them smiley face uh you know in our research and interviews the civil service values i mean going back you know 80 years and being written in these old british tomes about integrity and fairness and all of those things
they hold true in a way but we didn't want to over prescribe exactly what those are they'll be slightly different so to answer your question yeah you know your values should weigh in on the kind of ethical choices what are the trade-offs what are you willing to accept as this decision are you willing to accept some manner of uh you know non-participation to get this rolled out because this is so important right or do you need to you know uh go a different way because your values say that's too much we're not going to do that that's too unfair for this uh you know uh or it might lead to less trust and lack of integrity for what we're doing so yeah those are kind of embedded in the ethical thinking that each group would bring to those choices in those trade-offs thanks jake um quick housekeeping note we are at time um so understand if you do need to jump and then go to your next meeting for the day but we do have a few more questions so we will continue to answer those um and again this will be in the video recap as well so i just want to give you a heads up on the time yeah for all of those who are leaving thank you so much uh and yeah we're going to stay on for a few more questions and thanks again to tingari silverton foundation here in austin and hopefully this will live on in many different um uh cases and you know hopefully you find it very useful all right let's get into some of these other yeah yeah jake i had another one from mcdonough who asked i'm curious about the risk zones do you think the risk zones affect the decision tree structure in some way because of the different nature of the risks i think that's an interesting question um the decision tree itself is designed to be applicable across all risk zones ones that we've considered for this particular playbook and any that might come up in the future so ideally it's a tool that was created neutrally so
that it can be applied to a number of different risk zones and issues and threats and opportunities that come up again with the idea being that we really want to encourage or force people to think about both positive and negative outcomes of something and so um structuring it that way allows us to get into those nuances of these zones of the risks of the threats that we're facing got it um here's one that's anonymous but how far e-governance can achieve fairness and accountability in our communities and how can we use e-governance to strengthen risk management another good question i mean i think our default setting is very skeptical about technology silver bullets and e-governance you know has seen great advances with participation with speed of government with how information is gathered and disseminated you know estonia for example is always brought up as a great example of how you integrate that a kind of digital version digital transformation and governance lots of positive benefits there but you know adding e to a broken bureaucracy is not going to work either or a corrupt one or you know um so it takes a sort of good will and commitment to put these into practice and even still you know data overload we've seen that just having more information doesn't necessarily lead to better outcomes oh yeah we're going to be transparent but you know that data can be manipulated it can be reconceived you know all of those things happen once it gets out into the wild and woolly public sphere uh it's open game um but you know just basic stuff speeding up uh bureaucratic processes um you know do i need to get pulled over sorry for a speeding ticket uh you know uh those kind of things maybe we don't want those processes sped up but i do want to get my driver's license faster i want to get my fees paid or my license to do other things in a quicker fashion where i don't have to go down to
some sort of long line to work in so those kind of efficiencies i think are a nice promise of e-government but uh you know i um it's not some sort of magic transformation um that automatically happens without a whole lot of other things happening around the system and um just embedded in that question the person who asked it was asking about fairness and accountability and of course whenever you're talking about anything digital there are questions of access there are questions of you know who has broadband or who has the ability to access or use the technology and then of course any platform that is built upon which the e-governance system is being more efficient or is being used um no platform is actually neutral because people built the platform so there is likely going to be some sort of challenge or bias or some sort of issue embedded in the way that the platform itself was built and so there then becomes this whole slew of questions of how do we consider how those platforms are being built so that the e-government system or the different ways that we're using it do have that fairness and accountability built in or have some checks along the way to make sure that we are accounting for those things yeah i love the example from some uh some folks we know in brazil who have used the government uh sort of uh reimbursement process which is open to the public uh for elected officials and civil servants so they took that information and started to suss out corruption that was happening in double billing so like okay yes uh you know mr decosta you uh you charged your lunch in sao paulo at 12:01 and you also charged you know this other thing in brasilia 10 minutes later for another you know 300 um dollars or whatever uh so you know that kind of accountability if we have that data there's some oversight that can happen as well i like that part of it maybe we got a little bit of corruption i had another question from ashish uh what was the biggest
facilitation adjustment you all needed to make during these sessions these seem like very valuable points of contact to participate and support leaders yeah that's a great question i'm so glad you asked it because we really went through an evolution with this um you know we had the ethical os which existed which had a structure to it we worked with mayors we talked to them about what messages are resonating with them what do they need to think through this and as we've uh kind of intimated here the original idea was to do a futures wheel which is if you've seen it it's just like what's the prime mover what thing is happening okay what's the first level what's gonna happen right if you shut down the internet what are the things that are going to happen to people how are they you know they're going to communicate they're going to protest okay so you go to the next level what if that happens then what happens and then the third level if that happens what happens next and so it's kind of an open-ended way of doing that and when we were uh doing play testing with this and demos for people and we just gave them that tool to say okay you know basically what you've done but think through that people kind of it was too slippery and a little bit too amorphous to think through all right i'm gonna do an oversight committee i don't know i had you know some people had a hard time uh self-facilitating that and we wanted this to be something that kind of lives on its own so the trade-off that we made of doing this positive and negative is really to constrain that and of course it's more complicated than that i mean you know for us like oh are we putting it too much in a box to think about positive and negative um but i think it helps i think it just helps you think through in a way that just kind of forces your hand a little bit to make it a little bit more constrained and a little bit more directed to
think through those kind of consequences um but still being useful and i you know i think still reflecting a kind of plausible reality of how things might go so that was our trade-off um we have a thing at the institute called the foresight engine which was an online uh participatory forecasting tool one of the architectures of that was a you know you had a scenario and then you start thinking about what is kind of a dark thing that could happen and what's a positive thing that can happen so that was in our mind as well if you want to go back into the mental weeds of where we came up with this but yeah that's the idea is to limit it enough to make it useful and self-facilitated but not so limited that it doesn't reflect reality to some degree great and just we'll finish with these last couple of questions which are again more of a practical nature but um so folks wanted to know who would run or facilitate these workshops and are they remote or in person and what would each of them look like in practice well jake and i would facilitate them um at the beginning and we would work with you closely to figure out exactly what your needs are um we could do them in person that would be very exciting it's been a while but we could do them in person we could do them remotely and we really like them to be hands-on with small breakout groups so you can have these deeper discussions and get into the nuance of the questions um and the dilemmas and the trade-offs and the values and the ethics that aren't necessarily explicit but are so important to be thinking about when you're talking about these questions so um depending on what the structure is whether that's the half day or the day and a half um we would again dive into some of the risk zones and walk through the decision tree and really spend time with each decision point about what you know what are the
implications what does this mean what might you have done differently how do you change your policy um and then if it is the day and a half we would get a little deeper into who are you and what are you working on what are the things that are most relevant or um most urgent for your department or for your city or for your municipality and so how do you then take this thought process and this decision-making architecture and apply it to the specific issues and policies that you're considering and um working on right now great well thank you i think we'll wrap it up now jake ilana thank you very much for walking us through the playbook um thanks to everyone that joined us and is still on the call because we are a few minutes over um but yes in closing um thanks to tingari silverton um who funded this project and lane becker who helped to co-author it um and we would very much like to work with you and your organizations and set up a workshop with you so um i'll remind you that we will send an email that will cover all of this information as well as the video um and if you are interested in doing this please forward that video on to other folks in your office your team your colleagues so you can all get on board with this happy to have individual meetings with you as well so um we'll look forward to communicating post webinar but again thanks to everyone for joining and most importantly jake ilana thank you thanks john thanks everybody for your great questions i mean this was our launch meeting we had good confidence we've done the work but to see it you know in the wild and get reactions to it and your questions were great and deep and challenging and we really appreciate your time today thank you so much reach out if you want to talk more thanks all

2021-07-21