NLP for Requirements Engineering: Tasks, Techniques, Tools, and Technologies

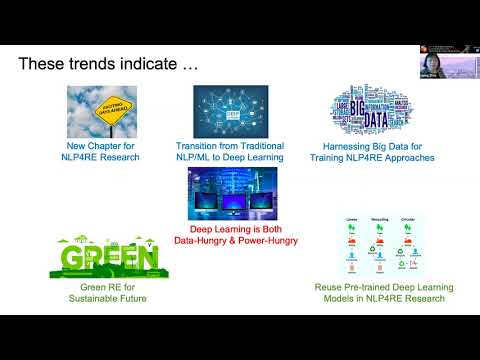

Okay, let's continue. I'm not introducing ourselves again here, so let's start with a brief overview of what this technical briefing is about. I will start the presentation by providing you an overview of NLP tasks for requirements engineering, or NLP4RE for short. Then I will hand over the talk to Liping, who will speak about our mapping study of NLP4RE that we recently published in ACM Computing Surveys. She will also speak about tools and resources. Finally, there will be an actual tutorial from Waad, who will teach us something about transfer learning for NLP4RE.

So first of all, let's give some basic definitions. What is natural language processing? We all know what language processing is, but here is an intuitive definition: natural language processing covers all technologies enabling the extraction and manipulation of information from natural language, intended as English, Italian, Swedish, Spanish, etc. As you know, natural language techniques and applications are everywhere, and we use them every day. Sometimes we do so voluntarily, as with Google Translate, and sometimes there are applications, like all the NLP tools behind the different social media that we use, that manipulate and use our language although we are not aware of it, for example to give us advertisements and such. So we have natural language processing techniques in our everyday life, basically.

On the other hand, we have to deal with requirements in our work life. But what are requirements? Well, there are several definitions out there for requirements, and they are not always in agreement; they look at different aspects of requirements. I'm not going through all the definitions here, but in some cases a requirement is a condition over phenomena of the environment, in others a requirement is a goal, and it can be a particular type of statement or an expression of a need. So overall there is no agreed intensional definition of what
a requirement is, so it is good to give some examples and an extensional definition instead. Here we have a few examples of typical requirements. The first example is a user story, which is typically used in agile. The second example is a so-called "shall" requirement, the very typical requirement specification, although it is debatable whether the word "specification" is appropriate or not. You can have higher-level shall requirements and lower-level shall requirements, or you can have a structured text expressing the needs to be satisfied by the software. In other cases you have longer structured text, like in the form of use cases.

But you can also have other forms. We have already seen, even in the presentations this morning, some papers working on user feedback, and in particular on app review analysis. App reviews, and user feedback in general, even from Twitter, represent a form of requirement, because they include information about the needs of the actual users. Bug reports are requirements too, because they specify what needs to be changed in the current software and what the problems with the current software are. And finally, even regulations can be regarded as requirements: for example, any time I am writing down a privacy policy for an app, I have to abide by the GDPR, which is a regulation, and its requirements are requirements for my app, with which my app should comply. So in this talk, anything that resembles what I have described up to now is considered a requirement.

So why are requirements so special? I have mentioned that NLP techniques are used quite frequently in different applications, so why do requirements need special attention and a technical briefing? Well, first of all because, as you have seen, they are very heterogeneous: we pass from very structured and formal texts, almost close to code, to informal text like
the one in the app reviews, so we need different approaches to deal with that. In addition, requirement specifications, for example, use a very restricted vocabulary, and a very different vocabulary with respect to common text. By comparing the text of requirements with that of common texts, we have seen that 62 percent of the words used in requirements do not appear in generic texts. This suggests that NLP tools that are trained on generic texts may need to be tailored for requirements.

So let's give an overview of the requirements tasks to which NLP can be applied to provide some automation. This is a non-exhaustive list; it is just to give you an overview before we go into more technical stuff.

A typical case is requirements classification. Requirements classification is what the name says: I have a large set of requirements and I want to partition them, for example into functional categories like user interface or communication. Or I may want to do other types of classification, for example simply distinguishing between what is a requirement and what is just informative text. This can be useful for several things, but in general classification is useful for later retrieval of the requirements and also for apportionment of requirements to specific software modules or models. NLP, of course, can be useful for the classification through the identification of relevant words and their association with classes.

Directly associated with requirements classification is user feedback classification and analysis. Here the task is more about distinguishing, in a large set of app reviews for example, what is a requirement and what is just a general opinion or a bug report. This has another function: the utility could be for refactoring the software, for example, or for updating it in general. I have written not just classification but also analysis, because this has been a very
lively and hot field of research recently, and also summarization and other typical NLP tasks have been applied to user feedback.

Retrieval is another typical task. How does it work? We all know what information retrieval is from our searches in Google. Applied to requirements, looking here at the picture (I have no idea who has the microphone on; okay), imagine I am a software company and I have a lot of existing requirements that are linked to previously developed products. A new customer comes and wants to express new requirements, and I want to understand, for example, which pieces of software I can reuse. Very frequently requirements are used as a proxy for software similarity, and therefore information retrieval systems can be built that, given a new requirement, retrieve old requirements and in addition allow retrieving software for reuse.

The other case that is strictly related to this is tracing and relating. As we know, the software process is not just made of requirements; actually the requirements are just a little part, and not always written down. We have models, we have code and other artifacts, and all these artifacts are traced to each other. This is another task for NLP4RE. We also had a distinguished paper award this year on tracing, by Jane Cleland-Huang and her team. For tracing and relating, again, the issue is finding relationships between the requirements and the architecture models, the design models, and the actual code, or lower-level requirements, or requirements at different degrees of abstraction. The use of automated tracing, of course, is for refactoring and impact analysis, or also, for safety-critical products, for external assessment.

Defect detection is another typical activity for safety-critical products. It is similar to classification, because given a requirements document I may want to automatically identify those requirements
that contain, for example, ambiguity or some expression of vagueness, passive forms, etc., to be reviewed later on by a requirements analyst.

Information extraction is related, for example, to the extraction of relevant terms from a set of requirements. For example, here I have a large set of requirements and I identify glossary terms like "train", "automatic train supervision", etc. The usage of information extraction technologies is varied, in the sense that I can use them in their basic form, for example for the construction of glossaries, but also to support categorization, that is, the classification that I mentioned at the beginning, or for model synthesis, that is, to identify relevant entities that allow me to generate models from the requirements.

Model synthesis is another task that can leverage NLP techniques. Here you have two types of model synthesis. In the first case, you may have some early requirements and user stories, and you want to create a higher-level model of the content of those stories, for example for problem scoping. So you extract the entities, you extract the relationships between these elements, and you visualize them. On the other hand, you may have very detailed requirements, possibly belonging to different documents, and you want to generate, for example, a feature model or a more detailed model like a sequence diagram, as here in the picture, because visual models provide a more comprehensive view of requirements and they can really help in documentation and also in analysis and reuse.

And finally, another complex, composite task that makes use of information extraction is regulatory compliance. We have the case in which I have the GDPR, for example, or a set of regulations in general; I want to extract the relevant entities that are important for the requirements of my product, and I want
to develop the requirements for the actual product. Conversely, I may have a privacy policy for a certain app, and I want to understand whether it is ambiguous or not, or whether it abides by the current regulation.

So these are all tasks that are suitable for the application of NLP techniques. You have seen just a snapshot of a subset of the possible tasks, but I think it gives you a general idea.

Some observations. Most of the RE problems, most of the tasks that I described, could be solved top-down. I could enforce tracing while writing requirements, so I would not need any automated system for finding traces. I could use a constrained natural language to improve quality, so that I would not need to detect defects automatically. I could tag classes in advance, so I would not need any automated means for classification. Or I could write a glossary in advance, so I would not need to do term extraction. I could do everything that has been mentioned manually. Unfortunately, this does not happen, because the requirements process is iterative: I cannot know everything in advance, and therefore I cannot do everything in advance. That is why we need NLP. We also need NLP to recover from errors when RE problems are addressed top-down by fallible humans. So we need NLP to support us requirements analysts while we perform our activities.

So now I will hand over the talk to Liping, who will speak about techniques, tools, and resources, and will tell you more about our systematic mapping study. Thank you.

Thank you, Alessio. I will now share my screen. Right. Good afternoon, everyone. As Alessio just mentioned, in this part of the talk I will mainly be reporting the results of our mapping study, focusing on the technologies used by NLP4RE research.

Our mapping study set out to investigate the landscape of the NLP4RE research area. We reviewed five aspects of this area, including publication status, the empirical maturity of the research, and the research focus. We looked specifically at the RE phases
addressed in this research area and the RE tasks developed, and we also looked at the input document types used in the research. We also looked at NLP4RE tool development and the NLP technologies adopted to support tool development and NLP4RE research in general.

To understand the publication status of NLP4RE research, we reviewed a total of 404 relevant papers. We found these papers were published over four decades, starting from 1983. As shown in this diagram, the publications before 2004 are irregular and patchy; however, since then we can see a year-on-year increase in the number of publications. You may notice that the number of publications in 2019 was smaller than in previous years. That was because our database search ended in April 2019, so the number of papers for that year was not complete in our study. Based on our literature search, we concluded that NLP4RE is an active and thriving research area in RE, and it has produced a large amount of literature.

However, the state of empirical research in NLP4RE is less satisfactory. We found that the majority, about two-thirds, of the papers we reviewed are solution proposals, typically involving the development of a new technology, a new solution, or a new technique. We found that about a third of the solution proposals are not evaluated at all, but only illustrated using examples, discussion, or simulation. We further found that the remaining solution proposals are only evaluated in a lab environment, using either students or software subjects. Only a very small number of studies, about seven percent of our reviewed articles, were conducted in an industrial setting via a case study or field study. Our observation is that a typical NLP4RE paper reports a solution proposal, possibly evaluated only internally through experiment or example, but without evaluation in the real world. Evidently, industrial uptake of NLP4RE research is very limited. We take comfort, however, that this trend seems to be common in the fields of software engineering and requirements engineering.
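To make the nested fractions above concrete, here is a minimal back-of-the-envelope sketch in Python. It only turns the proportions quoted in the talk (404 papers, about two-thirds, about a third of those, about seven percent) into rough counts. The function name `estimate_counts` and the rounding are assumptions made for illustration; the results are approximations, not figures taken from the mapping study itself.

```python
# Rough paper counts implied by the fractions quoted in the talk.
# Assumption: the quoted proportions are applied literally and rounded;
# the exact numbers in the mapping study may differ.

TOTAL_PAPERS = 404  # total reviewed papers, as stated in the talk


def estimate_counts(total):
    """Convert the quoted fractions into approximate paper counts."""
    solution_proposals = round(total * 2 / 3)      # "about two-thirds" of all papers
    not_evaluated = round(solution_proposals / 3)  # "about a third" of the proposals
    lab_only = solution_proposals - not_evaluated  # the remaining proposals
    industrial = round(total * 0.07)               # "about seven percent" of all papers
    return {
        "solution_proposals": solution_proposals,
        "not_evaluated": not_evaluated,
        "lab_only": lab_only,
        "industrial": industrial,
    }


counts = estimate_counts(TOTAL_PAPERS)
for name, value in counts.items():
    print(f"{name}: roughly {value} of {TOTAL_PAPERS} papers")
```

Read this way, the industrial studies are roughly an order of magnitude fewer than the solution proposals, which is the gap the speaker is pointing at.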
Let's now take a look at tool development in NLP4RE research. We identified a total of 130 tools developed specifically to support RE tasks such as those mentioned by Alessio, but only 17 of them are still available online. Furthermore, there is no evidence that these 17 tools are still in use. Therefore, as you can see, the state of tool development in NLP4RE research is very poor. In other words, there are no tools available at all for NLP4RE research, I mean domain-specific tools.

Without NLP4RE tools, NLP4RE researchers have to rely solely on general-purpose NLP technologies to solve RE-specific tasks. We identified an extensive collection of NLP technologies that have been used to support NLP4RE research. This includes 140 NLP techniques, 66 NLP tools, and 25 NLP resources.

In our mapping study we differentiated NLP technologies into three categories. What we mean by NLP techniques are those that support basic NLP tasks, such as part-of-speech tagging, parsing, or tokenization. What we mean by NLP tools are software systems or libraries for supporting NLP pipeline operations. NLP resources are linguistic data sources for training or testing NLP tools, and we further classify them into two categories: lexical resources, such as WordNet and VerbNet, and annotated corpora or datasets, such as the British National Corpus and the Brown Corpus.

Of the 140 NLP techniques we identified, only 32 have been used frequently. What we mean by frequent use is that they have been used at least 10 times. Among these 32 techniques, as shown in this diagram, the vast majority are what we call word-based or syntactic techniques, which means they process the lexical or syntactic aspects of the language. We noted that baseline techniques such as POS tagging, tokenization, and syntactic parsing are the most used, and there are only very few semantic techniques. In this diagram, the three techniques that are marked purple are the semantic techniques.

As you know, NLP techniques are often used in combination, in a pipeline
fashion, to perform some specific task. There are many different NLP pipelines for processing requirements text, but a typical one may be depicted as in this diagram: an input text undergoes a transformation of tokenization, POS tagging, dependency parsing, lemmatization, and stop-word removal, and this transformation produces different information about the input text.

As just mentioned, we have identified 66 NLP tools; of them, only one in five is frequently used. As shown in this diagram, the top five most used tools are well-known NLP tools: Stanford CoreNLP, GATE, NLTK, OpenNLP, and WEKA. Most existing NLP tools are open source, including these top five most used tools. I am listing them here, but I am not going to go through them. I just want to say that none of these tools is new: they were all developed in the late 90s; Stanford CoreNLP, for example, has been around for a long time, and some of them were probably developed in the early 2000s. These NLP tools provide application programming interfaces for ease of use and to support application development.

For NLP resources, we found 25 of them in our reviewed papers, but only about half of them are in frequent use. As shown in this diagram, WordNet is clearly the most used. We also noticed that there are only two RE-specific datasets, which are MODIS and CM1, and from this list of frequently used NLP resources we can clearly see a lack of RE-specific datasets. I'd like to point out that our mapping study has omitted many important NLP resources related to RE, due to our exclusion of short papers from the review, and such short papers tend to report datasets. Nonetheless, the availability of RE-related resources is still scarce.

Here I give a few RE-related resources. At the top we have the PROMISE repository, which is a very famous one. It was created by Sayyad Shirabad and Menzies from the University of Ottawa in 2005, and it contains 20 publicly available datasets, including MODIS and CM1; and
the PURE dataset, created by our own Alessio in 2017, contains 79 publicly available requirements documents collected from the internet. The user stories dataset, created by Fabiano Dalpiaz in 2018, contains 22 datasets, and each dataset has 50-plus requirements. FN-RE, created by our own Waad in 2018, contains a dataset of requirements annotated with FrameNet semantic elements. Finally, app reviews have a lot of datasets, including the 13 annotated ones reported in the paper given here, and we also found a mobile app market dataset.

An important observation from our mapping study is that, since the publication of the landmark work by Jane Cleland-Huang and her colleagues in 2007, there has been a consistent rise in developing machine-learning-based approaches to support automatic requirements classification. We also noticed that, since the early 2010s, there has been an increase in using non-traditional requirements texts in NLP4RE research, such as app reviews, user stories, and so on. Then, last year, we saw an upsurge in developing deep-learning-based approaches, such as using BERT and BiLSTM, for supporting automatic requirements classification. Some representative references are given here, but I am not going to go through them; if you are interested, I will make them available later.

So what do these trends tell us? First of all, they tell us that NLP4RE research has entered a new chapter. Secondly, they show that NLP4RE research is undergoing a transformation from traditional NLP and machine learning to deep learning. Third, they tell us that researchers in RE have started to use big data to help train NLP4RE approaches and tools. However, deep learning is both data hungry and power hungry, because it takes a lot of energy to train machines to learn how to perform some tasks. It is also computationally expensive and financially expensive. So we must find a way to reduce the large carbon footprint of developing such AI technology. In the wider picture of RE, that means we really need to
think about how we can develop more sustainable technologies. Specifically for NLP4RE research, however, the question is how we can reuse pre-trained deep learning models and technologies to serve the purpose of requirements engineering. On this note, I will hand over to my colleague Waad, who will give us a short tutorial on transfer learning, the idea of which might provide a potential solution for us and may help us learn how to adopt existing deep learning technologies for RE. Thank you, Waad.

Thank you, Liping, and hello everyone. I will share the screen now. In this part of the technical briefing I will give a short introduction to the basics of transfer learning and the use of language models in natural language processing. Then I will go through a friendly tutorial on how to use, for the first time, one of the recent and most promising language models, namely BERT.

As humans, we have the ability to transfer knowledge across tasks. What we acquire as knowledge while learning one task, we can utilize in the same way to solve a related task, and the more related the tasks, the easier it is for us to cross-utilize our knowledge. For example, if someone knows maths and statistics and has good programming skills, she will be able to build machine learning models. So we do not need to learn things from scratch; all we need is to learn the new aspects of a topic, based on our past knowledge.

The same concept is also applicable to machines. The goal is to train a model to learn from one type of problem and then use that model, that is, its knowledge, to solve a new but related problem. For example, if we have a model or a learning system which has been trained to recognize different types of small cars, the same model can be reused to recognize trucks. With this ability to transfer or reuse existing pre-trained models, we are not only saving the time of building models from scratch, but also we don't
need anymore to collect or label large datasets as we used to do before. So transfer learning, in the form of pre-trained language models, has contributed a lot to the state of the art in a wide range of NLP tasks.

If we want to simply define what a language model is, we would say it is a way to represent the relations or the meanings between words in a language. Let's take, for example, the word "woman". If we consult our language model, let's call it X, and ask what the most likely related words to the given target word "woman" are, it will retrieve a list of words with a similarity score that indicates word relatedness. So it will retrieve "queen", "princess", "daughter", and "mother". This helps many downstream NLP tasks, such as question answering, machine translation, and more.

There are many types of language models, and the idea of a language model is not recent. One of the most recent techniques for building language models is the use of deep learning, giving what are known as neural language models or contextual embeddings. This type of language model has been designed to overcome context ambiguity and language variation. For example, the word "bank" will have a different numerical representation depending on whether it is a bank of a river or a bank as in a bank account. One example of this type of language model is BERT, which is a transformer-based model.

BERT is a pre-trained language model which was originally initiated by Google AI to enhance the user experience while using the Google search engine. There are two papers that describe the architecture of the BERT model. I will skip this part and go to the tutorial section to explain how we can use BERT. However, the first of these is the "Attention Is All You Need" paper, which describes the attention architecture, or transformer architecture, needed to build the BERT model, and the details of the BERT model are in this figure.

So what can we do
with BERT? Well, we can use the BERT model as it is, which means using the pre-trained BERT model. Or we can fine-tune, that is, update, the weights in the BERT model on our domain-specific dataset, and then feed this model, or its weights, into a new classifier. Or we can extract the features and train a new supervised machine learning classifier.

The tutorial will be about classifying a set of non-functional requirements, divided into usability and security aspects. The dataset can be downloaded from here. Let me share the Colab notebooks with you so you can follow me during the tutorial.

In the first part of the tutorial I will explain how we can use the pre-trained BERT model, without any training, on unseen datasets, with something called a zero-shot learning classifier. It is a classifier built on the Sentence-BERT model. In the second part of the tutorial I will explain how we can use BERT to extract features from a given dataset and train a logistic regression classifier. The final part will not be fully covered due to time constraints; it is about fine-tuning BERT with a specific or limited set of non-functional requirements and using it with a single-layer neural network classifier.

Starting with the first part, the zero-shot classifier: we start by installing Hugging Face Transformers. It is a package provided by Hugging Face; it contains the BERT models and other pre-trained language models. Then I import the requirements data needed for this tutorial. From this dataset I selected only the usability and security requirements to conduct the experiment. I have already run the notebook, but you can do it by yourself; I would advise you to set the runtime type to GPU to make it faster.

So, after we installed Transformers and included the needed libraries, including the pre-trained Sentence-BERT model, by using the AutoTokenizer, that
means the tokenizer used to turn a sentence into input for this BERT-based classifier, and the AutoModel, to actually classify the sentence, I created a function, or module, called the zero-shot classifier, that takes one requirement statement at a time. The BERT model takes three inputs: the input IDs, the attention mask, and the tokens themselves. Then it creates two different representations: one is the sentence representation, that is, the sentence embedding, and the other is the label embeddings. Then the similarity is computed, and I reorder the similarities from the highest score. As you can see from the output here, if we have this requirement, let's say "the projected data must be readable on..." and so on until the end of the sentence, it classifies this mostly as a usability requirement, not as a security requirement. If we check the scores here, it is a usability requirement with a score of about 25 percent, against eleven percent for a security requirement.

As for the evaluation, I have already evaluated the dataset that I have here. It scores almost, sorry, 75 percent for the usability class and 73 percent for the security class. The original paper which presented this dataset scores 88 percent as an F1 score, using a fine-tuned BERT model called NoRBERT. So this is for the zero-shot classifier. Sorry, I just ran the code by mistake.

Anyway, the other way of using the pre-trained model is to use it to extract features and train a logistic regression classifier. As before, I started by importing the Transformers package and the other needed packages. Then I used the same dataset that I used with the previous classifier, and I checked the distribution of the classes that I have in the dataset. As you can see, the usability and
security classes are equally distributed here. Then I imported the DistilBERT model and also the BERT base model. We also have the BERT large model, which is another type and much larger than these two models. BERT has been extended into different models. One of these models is called DistilBERT, which is provided by Hugging Face and is lighter and faster than the original model provided by Google. But in this example I used the BERT base model by Google.

Anyway, I applied the tokenizer, and here I should mention that the tokenization process for BERT is different from that of other models. It uses something called the WordPiece tokenizer, and it tokenizes by adding special tokens. These special tokens indicate where we have a separator in a sentence, and there is also something called CLS, a class token, added at the beginning of each sentence. This CLS token is used by BERT for the classification task, and it will contain the embedding that we will need later to train our classifier.

So here I have loaded the BERT model with pre-trained weights, and then I use it to classify, or rather to predict the weights for, our requirements set. Then here I have identified the maximum length, which means that we need to include something called padding. In our dataset we have requirement statements of different lengths: one statement may have five words, another statement six words, another ten words. So we have two ways: either to specify a fixed size, such as the smallest one, or to consider the highest, the maximum, length. Because we need to make sequences of equal length, we pad the remaining tokens with zeros. So for example, for a sentence with five words and a maximum length of ten, we will pad the remaining five places with zeros. This is called the padding phase. Then we add something called masking, the attention
mask. If we send the padding directly to BERT, it will confuse it, so we need something called the attention mask, and this layer is explained in detail in the paper. Then, as I said, after the text is tokenized and processed, I take only the first token of each sentence, which is the CLS token. The CLS token will contain the embedding of the entire sentence, and that is what we need as a feature to classify that sentence. So from the last hidden state, which is the contextual embedding resulting from the BERT model, I use the first token, the CLS token, as the feature, and then I use these features to train our logistic regression classifier. So, simply, here I have divided our dataset into a train set and a test set, then looked for the optimal C parameter, and then applied it with the logistic regression model. There are several options that you can try by yourself, including different classifiers. Then I printed the score: it was almost 88 percent, which is reasonable enough compared to the result reported in the original paper on NoRBERT.

The final part, which is fine-tuning, is about how we can utilize the last layer of the contextual embedding and fine-tune it, that is, update the weights in the BERT model according to our customized dataset. There are so many details in this part of the tutorial, so I would like to leave it to you, but there are some comments that could help you to change things and to test different ways of training the embedding layer. So in this part of the BERT architecture, this is the layer we use to fine-tune the model itself.

So language models really assist in identifying contextual information, and this is really helpful for NLP4RE tasks. It could help to classify requirements on the fly by
defining predefined labels, and also help to autocomplete requirements during, for example, the requirements collection phase, or enable the reusability of requirements from large resources. However, using the pre-trained model directly without fine-tuning might not bring the best results, so fine-tuning is highly encouraged. I would like to refer to this resource by Hey: he developed a fine-tuned BERT model last year, and the paper was presented at the RE conference last year. He has done really good experiments on how to fine-tune a BERT model to classify functional and non-functional requirements.

However, there is an issue with the use of language models, and it relates to the ethical use of such pre-trained models. Yes, they are large; yes, they can help to identify meaning and context; but they were trained on unfiltered text datasets, so there are some biases in these language models that need to be carefully detected. That's it, thank you so much. Now I will leave the conclusion to Alessio.

Thank you very much, Waad, for the very, very nice tutorial. Okay, so here is a summary of this presentation. I want to conclude by showing you this slide that I used three years ago in a technical briefing about NLP4RE at ICSE. Here I tried to draw a picture of the progress in solving NLP4RE tasks, and as you can see, I partitioned them into "mostly solved", "making good progress", and "still very hard". At that time nothing was mostly solved, some tasks were making good progress, and the still very hard tasks were tracing, model synthesis, and regulatory compliance. I did this exercise again this year, reflecting on our mapping study and the recent publications at RE and ICSE. As you can see, nothing has been fully solved in a way that can have large-scale use in industry, but there are
several tasks that are making good progress, especially in the fields of classification and feedback analysis, thanks to the several datasets that came out in the last years and also to the transfer learning technologies that Waad just presented. Still, there are tasks that are very hard, and the overall scenario is changing slowly, but we see that progress is being made.

So when we have solved all these issues and applied all the techniques that are available, what's next? Well, we hope to see something that goes beyond NLP4RE and takes into account the fact that requirements are not normally expressed in natural language, although everyone who has written a paper on NLP4RE has probably written "requirements are normally written in natural language". They are mainly written in natural language, but they are not expressed at the source in natural language: we do interviews, so we do requirements elicitation in different forms that are often verbal, or we do focus groups and video RE meetings, and we also have requirements in the form of models. In addition, with data-driven RE, we have requirements coming from data analytics, associated with the usage of products. So the next challenge, the next goal, is to be able to use this automatic technology to go beyond natural language and analyze multi-modal requirements that come from different sources. This is a challenging avenue that we believe we should all explore.

So that's it for this presentation. I briefly presented at the beginning a set of task families, let's say a set of typical tasks for NLP4RE; then Liping gave an overview of the current status of NLP4RE research; then Waad gave us some pointers to what can come next and what is actually coming now with BERT, transformers, and these transfer learning technologies; and just now I gave a few reflections on
where to go next with multi-modal requirements. So we are now opening the floor for questions or clarifications, or if you want to see more of the tutorial. And yes, please, go ahead. Thank you.
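
Editorial sketch: the padding, attention-mask, and CLS-feature steps described in the tutorial part of this talk can be illustrated without loading any model. The code below is not the presenter's notebook; it is a minimal pure-Python sketch under stated assumptions. The helper names `pad_and_mask` and `first_token_features` are hypothetical, the token ids are made up (only 101, 102, and 0 correspond to `[CLS]`, `[SEP]`, and `[PAD]` in the `bert-base-uncased` vocabulary), and the "hidden state" is a stand-in list rather than real BERT output.

```python
PAD_ID = 0  # the talk pads with zeros; 0 is also the [PAD] id in bert-base-uncased


def pad_and_mask(token_id_lists, pad_id=PAD_ID):
    """Pad every sequence to the longest one and build the matching attention mask.

    The mask holds 1 for real tokens and 0 for padding, so the model can
    ignore the padded positions.
    """
    max_len = max(len(ids) for ids in token_id_lists)
    padded, mask = [], []
    for ids in token_id_lists:
        n_pad = max_len - len(ids)
        padded.append(ids + [pad_id] * n_pad)
        mask.append([1] * len(ids) + [0] * n_pad)
    return padded, mask


def first_token_features(last_hidden_state):
    """Keep only the vector at position 0 (the [CLS] token) of each sentence."""
    return [sentence_vectors[0] for sentence_vectors in last_hidden_state]


# Hypothetical tokenized requirements: 101 = [CLS], 102 = [SEP], the rest are made up.
batch = [
    [101, 7, 8, 9, 102],
    [101, 5, 6, 102],
    [101, 4, 102],
]
padded, attention_mask = pad_and_mask(batch)

# Stand-in for a model output of shape (sentences, tokens, hidden size = 2).
fake_hidden = [
    [[float(i), float(j)] for j in range(len(padded[0]))]
    for i in range(len(padded))
]
cls_features = first_token_features(fake_hidden)
```

With the Hugging Face `transformers` library, the same idea would typically mean passing the padded ids together with `attention_mask` to the model and slicing `last_hidden_state[:, 0, :]`; the resulting vectors could then be fed to a scikit-learn `LogisticRegression`, with `C` tuned for example via `GridSearchCV`. Whether the tutorial notebook does exactly this is an assumption.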

2021-06-11 11:31