NETINT Technologies about Streamlined Streaming with THEO Technologies

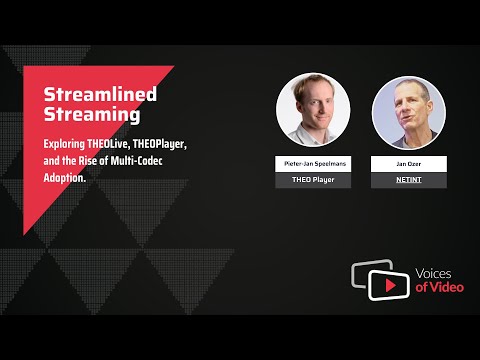

Welcome to NETINT's Voices of Video. Today, we talk with Pieter-Jan Speelmans, co-founder and CTO of THEO Technologies, the developer of THEOplayer and the THEOlive low latency streaming service. We'd love to talk about codecs and CDNs and encoding ladders when we talk about streaming, but it's the video player, like THEOplayer, that the viewer interfaces with, that largely controls the viewer experience, and that certainly controls the analytics we get back from the viewer. Of course, there are many different options for obtaining a player. There's open source, there's commercial, and Pieter-Jan will talk about choosing between open source and commercial and also the factors to consider when choosing a commercial player. Then we'll turn to low latency, which is a streaming mode of interest to many video engineers. THEOlive is a service that uses a unique protocol called HESP. Pieter-Jan will detail general approaches to low latency and compare those to HESP, the types of applications or productions that need low latency, and then how to produce a low latency production with HESP in THEOlive. If we have time, we'll cover what Pieter-Jan is seeing regarding codec usage in the player analytics he's getting back from his customers.

Pieter-Jan, thanks for joining us. Why don't we start with a quick overview of your background and your history before THEO?

Before THEO, I actually did not work for that long. I started as a software engineer after studying software engineering, and then after about a year we said, "We can do something else. Let's start a company." That's basically it. There's no more magic behind it.

What was the big idea behind THEO?

Originally, it wasn't called THEO yet. The big idea was actually aggregating content. We saw that there was a lot of good content out there. YouTube was there, but this was before a lot of the other services, and we basically asked ourselves how we could make all of it easier to discover. So, sort of a recommendation engine and aggregation idea.

Okay. So how did this evolve into a player and a live-streaming service?

Especially back in the day, there were a lot of walled gardens, so it was not very easy to aggregate all of that content. And to get some money in, we started doing some consultancy work, helping others bring their streams live. So basically, getting the cable from the OB van, plugging it into a server, and getting that stuff out there. Very soon we noticed that just making it play everywhere was more complex than you would want it to be. So that's why we started thinking, "Can't we make this simple? Can't we just remove the need for Flash, remove the need for Silverlight?" Stream it in one protocol, because back then it was Adobe HDS, Microsoft Smooth Streaming, the whole shebang. And that's where the idea came from.

And what about HESP and THEOlive? When did those come into being, and what was the big idea behind them?

After we had our first player customers, at one point we were working together with Periscope, which is now part of X. I don't know if you even remember that, but I think it was in 2015 or 2016 that I did a talk at Streaming Media West together with somebody from Twitter. I know you were there, Jan, though I don't know if you remember. Back then we were working on the first low latency HLS, the LHLS approach, together with Twitter. We made the player for that, and we started thinking, "Can't we also improve this?" Improving on the HLS protocol was absolutely possible, but for us it was a bit like repurposing something that had been built for something else. So we asked ourselves: if we took a blank sheet, what could we do to push user experience and quality forward, so that user experience improves in general, not only through latency but also through channel changes and all that kind of stuff?

What types of applications are you seeing migrate towards THEOlive and low latency technologies?

If we're honest, today most of the services and use cases that really benefit from low latency are still in what I would call the user engagement segment. That's very often things like interactive TV shows, but also, and mainly, sports betting, and things like webinars as well; if you're mass distributing webinars, they get quite some benefit out of low latency too. You could still see these as niche use cases, because it's not like premium content being streamed as a TV channel. I don't see the big value there yet. It'll get there, but today it's mostly the user engagement kind of streams.

So, what latency are you delivering in those types of applications?

With THEOlive, we are delivering sub-second latency. On average, it's about 800 milliseconds, but with THEOlive you can tune how low you want the latency to be, and we have customers who tune it to 1.5 or two seconds, depending on where in the world they are delivering. If they are delivering globally, it's not always achievable to hit 800 milliseconds over a shaky network connection in Brazil, for example. That's going to be hard.
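Tuning a target latency implies that the player actively holds its distance from the live edge. A common technique, assumed here rather than taken from THEOlive's documentation, is to nudge the playback rate a few percent above or below 1.0 in proportion to the latency error. The function names, the 0.1 gain, and the ±5% clamp below are hypothetical choices for illustration.

```python
def playback_rate(measured_latency_s: float, target_latency_s: float,
                  gain: float = 0.1, max_adjust: float = 0.05) -> float:
    """Hypothetical proportional controller for holding a target latency.

    Too far behind live (latency above target): play slightly faster.
    Ahead of the target: play slightly slower. The adjustment is clamped
    to +/- max_adjust so the speed change stays hard to notice.
    """
    error = measured_latency_s - target_latency_s
    adjust = max(-max_adjust, min(max_adjust, gain * error))
    return 1.0 + adjust


def simulate(start_latency_s: float, target_latency_s: float, seconds: int) -> float:
    """Step the controller once per wall-clock second and return the final latency."""
    latency = start_latency_s
    for _ in range(seconds):
        rate = playback_rate(latency, target_latency_s)
        # In one wall-clock second the live edge advances 1 s while the player
        # consumes `rate` seconds of media, so latency changes by (1 - rate).
        latency += 1.0 - rate
    return latency


if __name__ == "__main__":
    # Two viewers who joined at different latencies both steer toward a 1 s target.
    for start in (3.0, 0.6):
        print(f"start {start:.1f} s -> {simulate(start, 1.0, 120):.3f} s")
```

Because every player steers toward the same target, viewers also end up roughly synchronized with each other, which is the behavior Pieter-Jan describes later for betting customers. A production player would also have to handle buffer health, stalls, and jumps in the measured latency, which this sketch ignores.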

Okay. And low latency HLS and DASH are practically in the four to six second range; is that accurate? Is that what you're seeing?

It's absolutely accurate. It also really depends on the scale. You can go very low with low latency HLS and low latency DASH as well. I've seen very impressive demos by other people in the industry, but in my experience, once you really start going to scale, with hundreds of thousands of people watching live, it just becomes very complex to do that with low latency HLS or low latency DASH, and you end up in a more realistic scenario with broadcast latency: six seconds, eight seconds, that kind of ballpark.

Let's dig into the protocols. You've got a PowerPoint slide for us that compares your technology, HESP, with DASH and HLS and some others.

I'll actually start by sharing a different slide, which is one that a lot of people probably know. This specific one was made by Nicolas Weil; I think he presented it at Segments, the SVTA conference. But historically, I think most people know this kind of slide from the people at Wowza. I think they made one of the first ones really showing this.

The classic slide.

It's the classic slide. Similar to what I said earlier, it shows the really interactive streams that benefit from low latency, but it also shows that low latency HLS and low latency DASH are really around broadcast latency. If you really need to go lower, you have to look for other alternatives. And that's what we wanted to do with HESP as well: really make that lower, sub-second latency range possible.

And the other thing that is probably relevant is what really kicks in when you start asking, "Which protocol should I use, or what kind of service should I use?" At that point, it's not just about latency, or at least that's my opinion. It's about how much it really costs to get this out to the audience you want to serve. How is the picture quality? Are there trade-offs that you need to make? Take HLS and DASH, for example: these protocols are very, very good at delivering a high-quality stream to a massive audience, but they compromise on latency. On the other hand, if you go to low latency HLS or low latency DASH, very often you are trading something in: shortening GOP sizes, all those kinds of things. That's stuff you know way better than I do, but it's an important trade-off that needs to be made there.

So, zooming in on quality and bandwidth for low latency HLS or DASH, you're saying the reduced GOP size is going to restrict quality, or are there other factors you're referring to?

Often the GOP sizes are also part of the trade-off with channel change times. That's at least what we are seeing. A lot of solutions want to make sure that you can tune in fast, and I've seen a lot of people move towards GOP sizes of a second. In my experience at least, that's cutting it a bit short. I don't know what your experience is on that.

For most content, a GOP size smaller than two seconds starts impacting bitrate versus quality. And somehow you're avoiding that; you're still using a larger GOP size. Is that why your quality is better on this slide than low latency DASH and low latency HLS?

What we can do with HESP, and I don't have a slide on exactly how it works, is this: with HESP, you actually have two streams. There's a stream that contains only key frames, from which we can take a key frame to inject into the normal stream at any point in time. This gives us the ability to change channels very quickly, but also to change qualities very quickly, so if there's a need for an ABR switch, we can execute it in a very short amount of time. Because of that, we've decoupled the latency and the channel change time from the GOP size. This is basically the secret sauce of HESP, let's say. Well, it's not secret; it's publicly published at the IETF. And it allows us to do a very nice thing: to completely decouple GOP sizes even from segment sizes, or from anything else you're used to in today's popular streaming protocols.

I wrote about low latency technology, so there's a pretty good description of HESP on the NETINT website, as well as of low latency DASH, HLS, and WebRTC. So there are two streams. What are the names of the streams?

The normal, baseline stream is what we call the Continuation Stream, and that stream could be identical to a low latency HLS or DASH stream. It's a CMAF-based stream. And then there's the Initialization Stream, which is the special one: it allows us to select a single frame as a key frame at any point in time.

That's the all-I-frame stream. And the Continuation Stream can be encoded with whatever GOP size you want, typically two to four seconds.

Yes, or I've even seen somebody implement it without a fixed GOP size, really looking at scene changes and at the most optimal bandwidth usage, and if he was reaching, I think, 10 seconds or so, he would insert a key frame just to make sure the GOP didn't become too long. That was a very interesting idea, to be honest.

And looking at WebRTC, what are the restrictions on quality and bandwidth for services like that?

It really depends on how you implement WebRTC. The implementation I usually see is a single encode that you then distribute to the entire audience. That's not how you would do WebRTC in a video conference call, but I think that's fine. There, though, the problem is the channel change time, and also the way the network really works. A complaint that we often hear is that WebRTC is made to drop packets; it's designed so that it can drop frames occasionally. But if you drop a frame, you need a new key frame to basically restart, and that often pushes these services to reduce the GOP size so significantly that quality starts becoming an issue.

When you talk about feature completeness, what features are lacking in the typical WebRTC implementation that you're seeing?

One of the big ones is listed on the slide as well: DRM. But this slide is a bit older. I hope we are getting there, but I don't think we're really there yet. It's not really standardized yet or available across the board, and that's an important one. Also, WebRTC is strong in metadata carriage, but it's not very strong in things like subtitles and all those kinds of things.
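To see why decoupling channel change time from GOP size matters, here is a simplified back-of-the-envelope model. It assumes a conventional player that joins at a random moment must wait for the next key frame of the stream, whereas a HESP-style player can take the current frame from the all-I-frame Initialization Stream and then carry on with the Continuation Stream. It ignores network round trips, buffering, and decode time, and the function names are hypothetical; this is not the HESP specification's algorithm.

```python
import random


def conventional_join_wait(join_time_s: float, gop_s: float) -> float:
    """Seconds a conventional player waits for the next key frame.

    Assumes key frames sit at exact multiples of gop_s and decoding can only
    start on a key frame.
    """
    offset = join_time_s % gop_s
    return 0.0 if offset == 0 else gop_s - offset


def hesp_join_wait(join_time_s: float, frame_duration_s: float) -> float:
    """Seconds a HESP-style player waits in this simplified model.

    Every frame of the Initialization Stream is a key frame, so the player only
    waits for the next frame boundary, then continues on the Continuation Stream.
    """
    offset = join_time_s % frame_duration_s
    return 0.0 if offset == 0 else frame_duration_s - offset


def average_wait(wait_fn, period_s: float, trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo average of wait_fn over uniformly random join times."""
    rng = random.Random(seed)
    return sum(wait_fn(rng.uniform(0.0, 100.0), period_s) for _ in range(trials)) / trials


if __name__ == "__main__":
    for gop in (1.0, 2.0, 4.0):
        print(f"GOP {gop:.0f} s: conventional avg wait {average_wait(conventional_join_wait, gop):.2f} s")
    print(f"HESP-style at 30 fps: avg wait {average_wait(hesp_join_wait, 1 / 30) * 1000:.1f} ms")
```

In this model the conventional average wait is half a GOP (about 2 s for a 4-second GOP), while the HESP-style wait is about half a frame interval (around 17 ms at 30 fps) regardless of the Continuation Stream's GOP length. The same reasoning applies to ABR quality switches.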

I was once told, "You don't have a product until you have subtitles." And to be honest, I feel that, especially for premium use cases like premium content, that's absolutely a thing. Accessibility and subtitles are very important if you really want to go after that kind of segment.

But that's going to be available on a service provider by service provider basis, yes or no? Some services, I think, do provide captions, others don't. Is that accurate?

That's accurate, but the problem is that it's not standards-based. It's the same with DRM. I believe that anything can probably be built. The question is how portable it is to other vendors or other solutions.

Tell us about the production schema. What do you need on the origination side if you're going to use HESP with your THEOlive service?

If you're going to use HESP together with THEOlive, what you basically need is to provide us with an SRT or an RTMP feed. We see the THEOlive product as an end-to-end video API. We take in whatever feed you have available, and we give you a player embed that you can drop anywhere: website, native app, whatever is needed. That's our strength, right? The player side. So we allow you to drop it anywhere, and that's it. It's fully API-driven; you start and stop whenever you want. Production-wise, we tried to make it as simple as possible.

So it's kind of an end-to-end service: you scale up as needed, and you provide the CDN-type delivery services and the player? Basically, I send you a stream and you take care of the rest?

That's the idea behind it.

Tell me about device support. I guess that should also be a strength of yours, but if I'm going to use the HESP service, what devices can I support on the playback side?

Basically everything, but I need to add one small asterisk. When you look at player support, THEOplayer already supports HESP almost everywhere today. On the platforms where we have standard support for HLS and DASH, we cover HESP as well, with one exception: Roku. We have an internal POC for Roku, but it's not as low latency as we want yet. I think we hit three to four seconds, which is not our target. I know it's better than any other protocol on Roku, but it's not something that we are bringing to production yet.

Talk to me about monitoring capabilities. When I'm producing a live event, I want to know in real time how the signal's getting through and what audience engagement is. What type of analytics do you provide within the THEOlive service?

We don't call it analytics, because that's not one of the things we really focus on, but of course we do have all of the monitoring that we deem necessary for live production. On the ingest side, for example, we have insights into how good the incoming signal is. Are any frames being dropped? Are the frame rates okay? Is the audio there? Those are what we call the basics, and we absolutely have them on the ingest side. Similarly, on the egress side, we have insights into the average latency being delivered, what types of devices your audience is using, whether any stalls are happening, and what qualities people are getting. But these are more what we call operational metrics, and anybody can add whatever analytics solution they want on top of THEOlive as well.

So HESP is, I guess, a group standard; it's not an ISO or similar standard, is it?

No. We started working on it around 2015 or 2016, but a few years ago we started the HESP Alliance together with Synamedia, and we've been evolving the standard from within that. We've published it to the IETF as a draft. So it's not an official RFC; who knows, maybe one day we get there. I don't know how long it took for HLS to become an RFC, but we'll see.

Are there royalties involved with using the technology? I know that the organization's page talks about royalties. Give us a high-level view, tell us where people can go for more details, and tell us how that applies if I use THEOlive.

If you use THEOlive, there's nothing to be concerned about; that's something we take care of. If you use HESP directly, then yes: within the HESP Alliance, a pool was started so that anyone who wants to claim royalties can simply join it. And that pool is focused on the player side.
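The egress-side operational metrics Pieter-Jan lists (average latency, device mix, stalls, delivered qualities) essentially come down to aggregating per-viewer reports from the players. The following is a minimal sketch assuming a hypothetical report format; the `ViewerReport` fields and the `summarize` function are illustrative and do not reflect THEOlive's actual schema or dashboard.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean


@dataclass
class ViewerReport:
    """Hypothetical per-viewer sample sent periodically by the player."""
    device: str
    latency_s: float
    stalls: int
    rendition: str  # e.g. "1080p"


def summarize(reports: list[ViewerReport]) -> dict:
    """Aggregate player reports into a few operational metrics."""
    return {
        "average_latency_s": mean(r.latency_s for r in reports),
        "viewers_with_stalls": sum(1 for r in reports if r.stalls > 0),
        "devices": Counter(r.device for r in reports),
        "renditions": Counter(r.rendition for r in reports),
    }


if __name__ == "__main__":
    sample = [
        ViewerReport("android", 0.8, 0, "1080p"),
        ViewerReport("ios", 1.0, 1, "720p"),
        ViewerReport("android", 1.5, 0, "1080p"),
    ]
    print(summarize(sample))
```

Keeping the aggregation separate from the player reports is what makes it possible, as mentioned above, to layer a different analytics solution on top of the same data.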

So the royalties would need to come from whoever develops the player side. We try to make it simple for people, but all of the details are on the HESP Alliance website, so that's probably the best source for this.

What's the URL? HESP.org, or?

I think it's HESPalliance.org.

And who are the other service providers? You're not the only provider of HESP-driven live streaming, are you? Or are there others?

No, within the Alliance there are a bunch of other companies that have already implemented it. Synamedia, which I already mentioned, has services available around it, but also, for example, Scalstrm and Ceeblue, who demoed it at IBC a few weeks ago; they have solutions that are available end-to-end. And similarly DRM partners, like EZDRM and BuyDRM, have sample streams up and running as well, with DRM included.

What about other player vendors at this point?

Not yet. We are hoping that others will start developing players for this as well. But from THEO's perspective, we obviously have THEOplayer, which is available.

So let's switch gears and talk about THEOplayer. At a high level, what do you see as the primary functions of the player?

It depends on how you define a player. If you look at a lot of the open source players, what they define as the player is actually a streaming pipeline. You give it a stream, it renders it out on the screen, it does some stuff around subtitles and some stuff around multiple audio tracks, and that's about it. If I talk with customers about what they see as a player, it includes the UI, and it includes integrations with analytics, with DRM, with advertisements, and with all of that kind of stuff. So in my opinion, and that's also the scope of THEOplayer, all of those things are part of the player as well.

Open source versus commercial: what are the big decision points? A lot of people use open source and develop some of the features you talked about themselves. If you're talking to a major corporate customer, what are the pros you see of commercial as compared to open source?

I usually ask them a bunch of questions, and the first question that I think any company should ask itself is: does this really differentiate you, if you base it on open source and build everything else around it yourself? Very often you don't really get a competitive edge by integrating an analytics solution, building a very complex UI yourself, or doing any of what I would call the repetitive baseline work that others have already done hundreds of thousands of times. And in a lot of cases, building it yourself doesn't generate any additional revenue. So for me, that's usually the first question people need to ask themselves.

The next question is usually about manpower. Do you really have all of the people in-house to build all of this, to add the integrations with DRM, ads, and analytics, and to do the maintenance on it? And do you have all of the budget that's needed for that as well?

And the last thing, where people usually get convinced, "Yeah, we should really not be building this in-house anymore," is this. A lot of the companies that switch to THEOplayer from building it internally or using open source found that at some point, and this will happen with a commercial player too, without a doubt, you will suffer from some kind of issue or some kind of limitation. If that limitation is with a vendor of yours, you get on the phone and you yell, and normally it gets fixed, or you switch to a different vendor. But the point is usually that it should get fixed. If that happens with an open source solution, you can't really call anybody; you can't really yell at them. And if you submit a ticket, usually the answer is, "Well, we're open to pull requests," and you need to dig in and understand how that beast works. That knowledge is extremely rare; we're hiring, or trying to hire, people who know these kinds of things.

What about the compatibility side? At the most basic level, the player is in charge of making sure the video plays reliably on a platform. How much time do you devote to that within your engineering team, and how does that compare to an open source type player?

Most open source players and most in-house developed video players usually have it a bit easier. They follow the standard very strictly, or they follow their own stack very strictly, and they know exactly what they will get. We don't. We have hundreds of different customers, and they all do something slightly unique. As a result, we have to be very, very robust, very redundant, and that's one of the things that takes a lot of time for us.

But on the other hand, as you mentioned, the player is largely responsible for the user experience, and it is also the most visible part. If something goes wrong somewhere in your streaming pipeline, the player can probably compensate for it a bit and try to smooth out the user experience. But if the player goes wrong, you can have an amazing pipeline and it'll just be destroyed from a user experience perspective.

What industries have you been particularly successful in penetrating with your player?

That's a lot of different industries. If I really look at it, I think there are a few major verticals. One very clear one is the telcos, the operators, and the cable companies, which historically everybody already worked with to distribute their content. For example, companies like Swisscom and Telecom Argentina are customers of ours. Then, obviously, the broadcasters, companies like TV 2 or Rai, which are trying to tap new markets by going direct to consumer and cutting out the telcos a bit; that's an interesting story. And of course also the OTT platforms.

Sometimes they're linked to the broadcasters, sometimes to the operators, but think of companies like Peacock. Also, a lot of major sports leagues. They're usually a bit protective about their brands, so we can't always name them publicly, but if you name a few major sports brands, probably a few of those are customers of ours. And then even corporates: NASDAQ and CERN are customers of ours as well. Those are very different use cases, because they're of course not doing premium content, but we really cover the spectrum from subscription-based services, to FAST channels and advertisement-based services, and even legislatively mandated streams, the European Parliament kind of thing, where the stream is obviously free but usually not watched that often.

What percentage of your customers are DRM-protected?

That's actually the bulk of them. Most customers do have DRM protection. Obviously, it's required once you have some kind of premium content, or at least most rights holders require it. If I had to guess, I would say it's probably 70 to 80%.

Is DRM as complicated as it looks, with the different DRM families you have to use for different targets, or is there an easy button you can push?

It's a good question. Today, in my opinion, it's not that hard anymore. Four or five years ago, yes. But for example, in THEOlive we implemented this as a checkbox. You just check the box and your stream is DRM-protected. That's the level we think it can get down to if you really want it to.

Well, do I have to choose a certified provider like EZDRM or BuyDRM?

With THEOlive? No. Of course, if you want to set it up yourself, then yes, you get one of those providers. Today they take care of most of the complexity. And players like us have all of those integrated, so you just load it up and it's done.

So if I check the box, you as the player vendor are going to handle the DRM and send me an invoice, which is fine. I know I've got to pay, and as long as it's simple, I don't really care.

That's the goal, yes. We try to make it as easy as possible. Streaming is hard enough already.

Yeah, of the major DRMs that you're... Why don't you give us a two-minute overview of which DRMs you support on which platforms?

Off the top of my head: obviously all of the Google platforms, Android, Android TV, Fire TV, Chrome, and similar, meaning all of the Chromium-based browsers these days, including Edge. A lot of the smart TV platforms will do Widevine. A lot of the older smart TVs will also do PlayReady. And Apple will always be Apple; that will probably always be FairPlay.
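The platform-to-DRM mapping above can be captured as a small lookup table. The sketch also encodes the point Pieter-Jan makes next: one CBCS-encrypted stream can serve Widevine, PlayReady, and FairPlay, but only if every target device supports CBCS, while devices that don't need a separate AES-CTR (`cenc`) copy. The platform entries and `supports_cbcs` flags below are illustrative assumptions, not a compatibility matrix; `cenc` and `cbcs` are the Common Encryption scheme names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    drm: str             # DRM system used on this platform
    supports_cbcs: bool  # illustrative flag; verify per device model in practice


# Illustrative mapping based on the interview; not an authoritative compatibility list.
PLATFORMS = [
    Platform("Android / Android TV", "Widevine", True),
    Platform("Chromium-based browsers", "Widevine", True),
    Platform("Apple devices", "FairPlay", True),
    Platform("Older smart TV", "PlayReady", False),
]


def encryption_schemes(targets: list[Platform]) -> set[str]:
    """Return the Common Encryption schemes needed to cover all targets.

    If every target supports 'cbcs', one encrypted stream suffices for all
    DRM systems; devices without CBCS support need an extra 'cenc' (AES-CTR) copy.
    """
    return {"cbcs" if p.supports_cbcs else "cenc" for p in targets}


if __name__ == "__main__":
    print(sorted(encryption_schemes(PLATFORMS[:3])))
    print(sorted(encryption_schemes(PLATFORMS)))
```

The second call shows why the older devices matter: a single non-CBCS target forces a second encryption of the content, which is the cost a move to all-CBCS would remove.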

The more interesting thing these days is that if you approach it the right way, you can actually start combining all of those with CBCS encryption. The only disadvantage is the older smart TVs. And I'm thinking not of really old ones, but of smart TVs you bought in the store a year or two ago; those will not do CBCS yet. But the difference between Widevine, PlayReady, and FairPlay is, for me, becoming more of a brand compatibility kind of thing. The real question, I think, will soon be whether it's going to be CTR or CBCS encryption, and soon it'll probably all become CBCS.

And what are you seeing in terms of CMAF versus HLS and DASH? How quickly is CMAF making an impact in the analytics you're getting back from your customers?

CMAF itself is, of course, fully compatible with HLS and DASH, so that's great. But if I look at HLS itself, at how many segments have become CMAF compared to how many are still transport stream: most of the VOD archives are still transport stream, and I don't expect that to change anytime soon. Even though it could be very easy to migrate those, it's just a cost. But today, I think most customers are already using fragmented MP4 and CMAF. So it's an evolution, and especially on the live side, I think it's moving in the right direction.

I was going to ask: what are the trends you're seeing on the live-streaming side? Mostly CMAF at this point?

Mostly CMAF. I am also noticing a trend towards more HLS compared to DASH, which I found interesting. The reason is probably the mandate from Apple, or at least Apple's tight coupling of HLS with its platforms. But beyond that, I don't really see any big advantages between HLS and DASH. HESP-wise, of course, it's CMAF-compatible as well. Of course I'm cheering for it to become a standard one day that everybody uses, but we'll see.

So let's finish off with a look at codecs. What type of analytics do you get back from your customers on which codecs they're using?

We don't harvest the analytics ourselves, of course; we leave that up to our customers. But obviously we do get insights from our customers.

What are you seeing?

Historically, of course, everything was H.264, and that's still very much the case. But especially on smart TVs these days, more and more customers are looking at mixed ABR ladders. HEVC is definitely on the rise for smart TVs. Let's see whether the recent lawsuits involving Netflix and others will change that or not; who knows? And AV1, surprisingly, has also been getting a little more traction over the last year. It's not that commonly deployed yet. I actually still see VP9 a bit more than AV1, but there is a clear trend that those codecs are also on the rise.

Give us a percentage for AV1 and tell us who's using it, if there's any concentration you can identify.

Across the bulk of the video we're handling, I would think it's probably still less than one percent for us.

Pieter-Jan, your comment on hybrid encoding ladders raised a question. How much detail do you know about what people are doing on the hybrid side? If I'm offering H.264 and HEVC, do I do it in two separate ladders, like Apple recommends? Or do I have a hybrid ladder with H.264 on the bottom rungs and HEVC on the top rungs? What are people doing?

We see both. In the past, most customers did separate ladders, but of course that's not always economically interesting. So these days we're seeing more and more companies switch to HEVC for the higher rungs and H.264 for the lower rungs. Not every platform allows for it, so that's an asterisk to make, but on most platforms today you can seamlessly switch between H.264 and HEVC. That's a very relevant change that we've seen.

On the platforms where a seamless switch is not possible, what we do as a player, or at least attempt and try to offer our customers as an option, is start with whatever codec is best for the current bandwidth and the current device. If we see that it would be possible to switch to the other codec to get better quality, or because we need to switch down, we can make that switch at that point, depending on customer configuration. If a customer configures, "I want to stay with H.264 and that's it," then we will not switch dynamically. But if they say, "Yes, you're allowed to switch dynamically," even though it might degrade the user experience because a black screen is inserted during the switch or the switch is very noticeable, then that's an option we provide for devices that don't let you switch smoothly.
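A hybrid ladder like the one described, H.264 on the lower rungs and HEVC on the higher ones, together with the per-device switching policy, can be sketched as a codec filter followed by a bandwidth-based pick. Everything here is hypothetical: the rung values, the `allow_codec_switch` flag name, and the simple "highest rung that fits" rule, which real ABR logic refines considerably. The function also reports when a codec change would be visible on a device that can't switch seamlessly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rendition:
    height: int
    bitrate_kbps: int
    codec: str  # "h264" or "hevc"


# Hypothetical hybrid ladder: H.264 for the lower rungs, HEVC for the higher rungs.
HYBRID_LADDER = [
    Rendition(360, 800, "h264"),
    Rendition(540, 1800, "h264"),
    Rendition(720, 3000, "hevc"),
    Rendition(1080, 4500, "hevc"),
    Rendition(2160, 12000, "hevc"),
]


def pick_rendition(ladder: list[Rendition], bandwidth_kbps: int, device_codecs: set[str],
                   current_codec: Optional[str] = None, seamless_codec_switch: bool = True,
                   allow_codec_switch: bool = False) -> tuple[Rendition, bool]:
    """Return (rendition, visible_switch) for the next segment.

    visible_switch is True when the codec changes on a device that can't switch
    seamlessly, i.e. the viewer may see a black frame or a noticeable jump.
    """
    candidates = [r for r in ladder if r.codec in device_codecs]
    if current_codec and not seamless_codec_switch and not allow_codec_switch:
        # Customer opted to stay on the current codec for this kind of device.
        candidates = [r for r in candidates if r.codec == current_codec]
    if not candidates:
        raise ValueError("no playable rendition for this device")
    fitting = [r for r in candidates if r.bitrate_kbps <= bandwidth_kbps]
    if fitting:
        choice = max(fitting, key=lambda r: r.bitrate_kbps)
    else:
        choice = min(candidates, key=lambda r: r.bitrate_kbps)  # fall back to lowest rung
    visible_switch = (current_codec is not None and choice.codec != current_codec
                      and not seamless_codec_switch)
    return choice, visible_switch


if __name__ == "__main__":
    both = {"h264", "hevc"}
    print(pick_rendition(HYBRID_LADDER, 5000, both))
    print(pick_rendition(HYBRID_LADDER, 5000, both, "h264", False, False))
    print(pick_rendition(HYBRID_LADDER, 5000, both, "h264", False, True))
```

The last two calls mirror the two customer configurations: staying on H.264 keeps playback smooth at a lower rung, while allowing the switch reaches the 1080p HEVC rung at the cost of a visible transition.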

How much of that is 4K and how much is 1080p? Most of the time, when it's about HEVC or AV1, the discussion almost always starts with 4K. For 1080p, yeah, I still see a lot of H.264.

What are you seeing in terms of 10-bit versus 8-bit, and HDR versus SDR? And if you're not getting data back, let me know. But how much 10-bit usage is there outside of the premium content field? Outside of the premium content field, I think it's zero, at least as far as I know. If I look at the premium content side, services like Peacock, for example, obviously serve HDR as well; they do the whole Dolby Vision, Dolby Atmos, all that kind of stuff.

I got a question in about THEOlive. You talked about maintaining low latency with large audience sizes. What audience size are you talking about? What's the largest production, in terms of viewers, that you've achieved with THEOlive, and at what latency? I would need to check, and I know there's a very big one coming up in a few weeks that a lot of people are very happy about, but also want to monitor closely. I think today it's, yeah, tens of thousands, 100,000, that kind of ballpark we've already seen. And that's usually at latencies of, let's say, 800 milliseconds to a second. It depends a little bit on location and on device; there are always some users who go to a second and a half, and always some users who are a bit below 800 milliseconds as well.

Services usually talk about synchronizing viewers so everybody's at the same place. How does that work with THEOlive? You set the target latency you want. If, instead of "go as low as possible," you set it to one second or to a second and a half, then all of the players will try to synchronize themselves, because they will all try to achieve that same latency. If you just say "go as fast as possible," then of course it's not synchronized, but it's as fast as possible.

What do your customers typically do? If I'm an auction house or a gambling house, how synchronized do I have to be? Most of the betting customers basically set the target to synchronize around a second, a second and a half. That's at least the experience I have today. That's simply to level the playing field a little bit, and it also makes the integration with the metadata slightly easier, because in those cases it's highly important that all of the metadata is in sync. But yeah, that's more or less the ballpark I see there.

A couple of questions about origination streams with HESP and THEOlive. What are the recommended configurations for the origination stream in terms of GOP size, B-frames, profiles, and codecs? B-frames and low latency: always a bad idea. That's not just my opinion, I hope. So that's something I would not recommend for the origination stream. Beyond that, it really depends on what kind of output you want. When people want to output a 1080p stream, it doesn't make a lot of sense to send us a 4K feed. Similarly, there are customers who want to output 720p, which is very common as well, and sometimes even, what is it, 576p for some of the betting, or when they don't have the rights to go higher than that. If they run it at two megabits or something, don't send us a 16 megabit stream. It makes a lot more sense to send us a two or four megabit stream, and we can take it from there. And obviously, with frame rates, don't force us to transform 25 frames per second to 30 or vice versa. That's not something you should be doing.

A question about HEVC versus H.264: any preference? We take both, but most people still send us H.264 today, which is fine. We can take either.
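
The origination recommendations above can be expressed as a simple pre-flight check on a contribution stream. This is a hypothetical sketch, not a THEOlive API: `StreamSpec`, `check_contribution`, and the default 2× bitrate threshold are assumptions chosen to mirror the examples in the answer (a 2 Mbit/s output fed by a 2 to 4 Mbit/s source). B-frames are flagged because the encoder must hold frames back until their future reference frames are coded, which adds reordering delay.

```python
from dataclasses import dataclass
from typing import List

# Codecs the answer says are accepted for origination.
ACCEPTED_CODECS = {"h264", "hevc"}


@dataclass(frozen=True)
class StreamSpec:
    codec: str         # "h264" or "hevc"
    height: int        # vertical resolution, e.g. 1080
    fps: float         # frame rate, e.g. 25.0 or 30.0
    bitrate_bps: int   # target bitrate
    b_frames: int = 0  # consecutive B-frames configured in the encoder


def check_contribution(source: StreamSpec, top_output: StreamSpec,
                       max_bitrate_ratio: float = 2.0) -> List[str]:
    """Return warnings for the source stream; an empty list means no issues found.

    max_bitrate_ratio is an assumed threshold, not a documented limit.
    """
    warnings = []
    if source.codec not in ACCEPTED_CODECS:
        warnings.append(f"codec {source.codec!r} is not H.264 or HEVC")
    if source.b_frames > 0:
        warnings.append("B-frames enabled: they add reordering delay at low latency")
    if source.height > top_output.height:
        warnings.append(f"source is {source.height}p but the top output is {top_output.height}p")
    if source.bitrate_bps > max_bitrate_ratio * top_output.bitrate_bps:
        warnings.append("source bitrate is far above the top output bitrate")
    if abs(source.fps - top_output.fps) > 0.01:
        warnings.append(f"source frame rate {source.fps} differs from output {top_output.fps}")
    return warnings
```

For a 576p25 output at 2 Mbit/s, a 576p25 H.264 source at 4 Mbit/s passes without warnings, while a 2160p30 HEVC source at 16 Mbit/s with B-frames triggers four warnings.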

And I guess the last question is about hardware versus software encoders on the origination side. How many people are sending you a stream from Wirecast or OBS versus the hardware encoders that are out there? That's a good question. I do know that a lot of people on the event side are still using tools like OBS or Wirecast. I think most of the customers with more serious content have dedicated devices for this kind of contribution, so probably, well, part of it is still going to be software, but a good share is probably hardware as well.

Which protocols are you seeing streamed to you, RTMP and SRT, or what's the mix now? Most of it is still RTMPS, and the reason for that appears to be relatively straightforward.

With SRT, there are a lot of good tools, but very often there's not much flexibility in how big you want the buffer sizes to be. As a result, we sometimes see that using SRT actually adds latency on top of RTMP, so if the network connection is stable, there's not always a real benefit to using SRT.

One other question popped up. You were one of the first implementers of LCEVC. What are you seeing on that front? Are you seeing increased adoption, or is it about to explode? What's your sense of what's happening with that codec? It's an interesting story, I think. Are we seeing increased interest? Absolutely. Are we seeing a lot of adoption today? I think the answer is, unfortunately, no. But interest-wise, it's definitely increasing. What does that mean? Is it about to pop, or do you still not know whether it's going to be successful? It's difficult to say. If there's one thing still holding it back, I think it's the DRM question, which makes it very difficult, especially for most of our customers. You can't do DRM with LCEVC today, or at least definitely not hardware-based DRM, and for most premium content that's still a limitation. For some user-generated content, it's obviously not an issue, and as a result I do see a lot more interest coming from that corner. But I know a lot of the big telcos and similar customers have looked at it; they're interested in it and want to test with it. But once they hit the DRM wall, that's usually when interest goes to sleep again, until they can solve that as well.

Well, give us a couple of websites, I guess, for THEO Technologies. What's your website? Is it THEOplayer.com? Yes. THEOplayer.com is still the place where you can find almost all of the information. HESPalliance.org is probably a good source for HESP information, but those are at least the two places I'm most active on.

Listen, thanks for your time today. This was a lot of fun and pretty interesting stuff, so thanks for agreeing to chat with us. It was a pleasure for me as well.

2023-10-11 15:45