Measuring Online Distance Learning

>> Saima Malik: Welcome, everyone, to our webinar today. My name is Saima Malik. I am the Senior Research and Learning Advisor at the Center for Education at USAID, focusing primarily on foundational skills. And I have had the pleasure of working very closely with some of the folks you'll hear from on the webinar today. Our topic today, as I know you're all familiar with, is Navigating the Distance Learning Roadmap: Monitoring and Evaluating Online Modalities. At the Center for Education, following COVID-19 and school closures, we have tried our best to respond to some of the needs from our programs but also partner programs on the ground around sort of navigating this new world of distance learning.

So at first, there were a lot of questions around how do we do this, how do we pivot from in-person to distance learning, what are some of the modalities that have been tried and tested, what even are some of the terms that are used. So we worked very closely with folks you'll hear from on the call today to develop and share some documents, some reports, some guidance tools to help folks through some of that journey. We had a distance learning literature review that came out earlier on.

And that was one that provided an overview and background to the world of distance learning and helped folks to navigate between in-person and online. Shortly after we sort of had that pivot between in-person to online, a lot of questions we heard from our partners, as well as from our own programs on the ground, were around measurement. And so the next question was, when implementing these programs, how in the world do we measure whether or not we are reaching our target audience, whether or not they're engaging in the ways that we hope they are? And then finally, of course, are they learning from the programming that we are providing? So the document that we're going to go over today, the tool that we have developed together with colleagues on the call, who you'll hear from just very shortly, is a roadmap that helps guide folks through this process of measuring distance learning programming. We had a call at the beginning of the week on Tuesday that focused very much on how to measure distance learning programming that's being delivered through radio and television. And the focus, as you all know today on this particular webinar, is on online modalities.

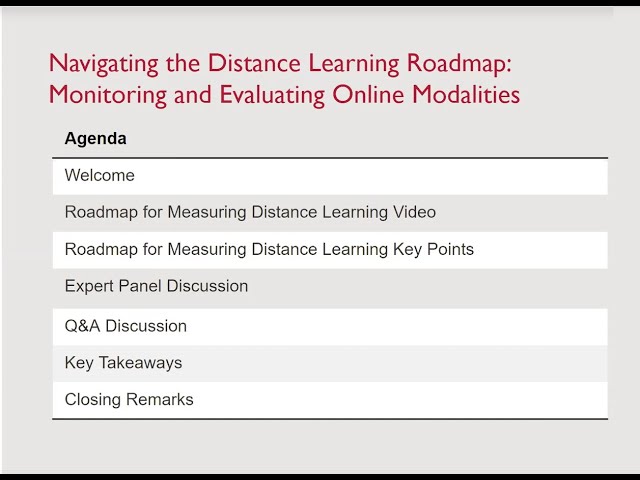

I see Rebecca Rhodes is putting some of the documents into the chat. The first one is the distance learning literature review, which was the first document that we shared out with colleagues for that intro into the world of distance learning. It's a very rich wonderful document. If you haven't had a chance to take a look, please take a look and share with other colleagues as well. The second one that Rebecca has put into the chat is the tool that we're going to be talking about today. Very quickly, I'd like to present our agenda.

And then I'll get us started here. So we are going to first take a look at the Roadmap for Measuring Distance Learning video, which essentially is a super short video. It walks us through some of the key findings from the roadmap review tool that was developed that we'll talk more about. After this video, we will hear from Rebecca Rhodes, who is our Distance Learning Lead at the Center for Education, as well as Emily Morris, who is one of the authors of the roadmap itself. And they'll talk us through some of the key points of this tool. After this, we have the pleasure of some fabulous experts in the field joining us today, and they'll be introduced shortly too, who will have some discussion around some of the key topics and questions that we know you all have in mind.

And we'll have some time for question and answer as well. Following key takeaways, we'll have some closing remarks. That's a little bit of the agenda that we have planned for you all today. We're hoping it's going to be a very engaging and useful webinar for you all to attend.

I would like to mention, first off, that this particular webinar and this particular tool are not designed solely for USAID programs but rather for anyone in the field who is grappling with some of these questions. So it is more broad than USAID programming. Just want to make sure to present that caveat to all right at the top here. What I would like to do now is to hand over to our producers of the webinar, who will quickly walk us through some of the features of the Zoom call today.

So over to Emma Vernettis, who is our Lead Producer on the webinar. Over to you, Emma.

>> Emma Vernettis: Thank you, Saima, and welcome, everyone.

I just want to quickly go over a few of the Zoom tools. So only our presenters today will be on audio, so I do want to bring your attention to the reactions button at the bottom toolbar. Please feel free to provide feedback using those icons throughout the session.

At the bottom of your screen, you will also see a live transcription button. If you would like to view closed captioning, click on that button and then select Show Subtitles. To send a message to the group, you can click the chat button on your toolbar.

And this will open the chat window. You can type a message into the chat box. And if you have any questions during the session, please put them in that chat.

And finally, if you'd like to send a message to a particular person, you can click on the drop-down next to the To entry point and select the person you'd like to chat with. We're happy to have you today. And back over to you, Saima.

>> Saima Malik: Thanks so much, Emma. And if I could just stress the fact, we know that we have so many questions.

And there's so much that we want to discuss on the call today. And we have limited time. So I really would love to encourage you all to use that chat feature. Please type your questions in the chat. You all did send in questions that we have shared with our speakers on the panel today. But please feel free to type in questions as they come up in the chat.

We'll make every effort to ensure that those questions are addressed on this call. And if not, we'd like to ensure that we are able to keep in touch with you all and keep the conversation going and reach out afterwards as well. So just want to really encourage you all to use the chat. I know it's tough not to have the mics on. But please use that chat. So what I would love to do at this point is to request that we take a look at this short video.

It's not long. It's very brief. We understand that many folks on the call may not have actually taken a look or had a chance to take a look at the roadmap for measuring distance learning yet, although we hope that you take some time to do that. But what we have done is put together key takeaways and key recommendations that come from that report into about a five-minute video. And so it would be really helpful for you all to have access and to take a look at that video first. So I would like to request Emma to go ahead and share the video.

Or Nancy, I think, is sharing. So thanks so much.

>> The roadmap for measuring distance learning provides strategies for capturing reach, engagement and outcomes.

This video shares three key strategies and provides an example of how to apply these strategies to one distance learning modality: video programming. As countries around the world closed learning institutions in response to the COVID-19 pandemic, there was a surge in distance learning initiatives. Distance learning is commonly used to reach learners who need flexible learning opportunities such as working youth or where schools and learning centers cannot be routinely and safely reached. When implemented intentionally, distance learning can expand learning opportunities. What is distance learning? Distance learning is broadly defined as teaching and learning where educators and learners are in different physical spaces, often used synonymously with distance education. Distance learning takes place through one of four modalities -- radio or audio, television or video, mobile phone and online learning platforms.

Printed texts frequently accompany these modalities and can also be a fifth modality in cases where technology is not used. Distance learning can serve as the main form of instruction or can complement or supplement in-person learning. As countries and education agencies take up distance learning, it is important to design and implement evidence-based and intentional strategies for monitoring and evaluation. This video outlines three interconnected strategies for measuring reach, engagement and outcomes in distance learning. These include integrated remote and in-person data collection, multimodal technology interfaces and mixed methods data collection.

Using a combination of these strategies will help ensure quality, equitable and inclusive data. Key Strategy Number 1, integrated remote and in-person data collection. Remote data collection provides timely data on reach and engagement and can be used when in-person data collection is not feasible.

In-person data collection is preferable for measuring outcomes including attitudes, beliefs and behaviors and where building rapport with learners is critical. Combining remote and in-person data collection enables more frequent, responsive and systematic data collection in emergency and nonemergency contexts. One example of integrated data collection is measuring learner engagement in video programming using both in-person and remote methods.

Key Strategy Number 2, multimodal technology interfaces. Technology interfaces include phone or video calls, interactive voice response, text messages, social media groups, paper, images, video and audio recordings, learning management systems and educational apps, programs or games. Measuring distance learning through multiple technology interfaces helps reach a wider group of participants, including those with limited access to technology and connectivity. Interfaces should be selected based on technology device access and accessibility needs, connectivity and demographics of the users. One example of using multimodal interfaces for measuring engagement in video programming is combining phone calls, social media groups, educational apps and paper-based protocols to collect data.

Key Strategy Number 3, mixed methods data collection. Quantitative data collection methods include surveys, tests and assessments, teaching and learning analytics and observations. Qualitative data collection methods include qualitative observations, focus group discussions, interviews and participatory and arts-based research. Combining quantitative and qualitative methods allows for deeper analysis and provides greater opportunity to measure intended and unintended reach, engagement and outcomes.

One example of using mixed methods for measuring engagement in video programming is combining surveys and learning analytics data with focus groups and voice commentaries. Using a combination of integrated remote and in-person data collection, multimodal technology interfaces and mixed methods data collection will help ensure quality, equitable and inclusive data. To bring our example of measuring engagement in video programming together, we integrate remote and in-person data collection, combine multimodal technology interfaces and mix methods by using in-person focus group discussions and paper-based surveys, remote phone surveys, recorded voice commentaries and social media group conversations and learning and engagement analytics captured through educational apps. For more examples and case studies, and to learn more about the steps in measuring reach, engagement and outcomes, visit the Roadmap for Measuring Distance Learning.

>> Saima Malik: Thank you for sharing that.

Hopefully, for our participants on the call, that was useful. We wanted to make sure to show you a distilled version of the three key strategies that are recommended and are discussed in much more detail in the roadmap. So again, I encourage you to take a look at that roadmap. We do have, next, our two speakers who are going to talk more about some of the main themes that are discussed in that roadmap document. I am very happy to hand over to Rebecca Rhodes, who is our Center for Education Lead on Distance Learning, as well as Emily Morris, who is one of the authors of the roadmap, to talk more about some of those main themes. Over to you, Rebecca and Emily.

>> Rebecca Rhodes: Thank you, Saima, and good morning, everybody. We're very glad to see you here. As we go on discussing the roadmap, please put your questions in the chat, anything you want to ask.

And we'll do our best to see what to do with your questions. So we're going to give a brief overview of the document now and try to kind of connect it to the questions you've already asked. We can go to the next slide, Emma. So this slide shows you some data about the questions you submitted when you registered.

And according to the questionnaire responses that came in from the registrations, a lot of you have questions about the outcomes portion of that trio of metrics that we've been discussing -- reach, engagement and outcomes. Some of you have some questions about monitoring reach and engagement. But most of the questions we received were about outcomes. So thank you for those. And we'll try to go into that in more detail and really try to get at this question you've often asked about how to measure the quality of distance learning programming. Next slide, please.

So this wonderful map is really one of my favorite slides in this deck. And the reason is it shows you the countries and contexts that Dr. Morris and Anna Ferrell and the team that prepared this roadmap really looked at and used data from and information from in creating the roadmap. So their approach was to do a sort of, I'm going to say, like, a sweep, kind of light -- kind of a sweep across the world like a lighthouse would do and try to see who in the post COVID context or in the COVID context was using innovative approaches to distance learning and then write those up as program experiences and case studies and include them in the annex to the report and then draw from them to create the roadmap and to create the recommendations and the best practices that the roadmap contains. So we're just really grateful for all of that work and just impressed with how what is in the roadmap is centered in the actual real-life experience across this very broad range of contexts.

Next slide. So here, you have the actual roadmap, which we will continue to discuss and ask questions about. There are eight steps outlined in the roadmap, as you can see on your slide. But what we'll talk about today and what the roadmap largely focuses on is the first four. And it provides tools and resources to carry out those first four. And then it builds from there into discussing how the final four could be conducted.

Next slide. So the steps in the roadmap, as I said, are clearly delineated. And this is the first one. So this is about determining your objectives and setting up your monitoring, evaluation and learning plan.

And there are really four key internal and external objectives that you'll need to consider and weigh and think about the tradeoffs between when you're building your own monitoring, evaluation and learning plan. So there are some internal objectives and external objectives, as you see on the slide, that are possibly where you will want to focus your efforts. Internally, you may be looking at how to use data to inform program management or how to use data to inform teaching and learning or how to guide adapting or even sustaining your programming. And then externally, of course, you may be looking at how what is happening in your program contributes to the general global knowledge base about distance learning, how it furthers that evidence base and then what kinds of accountability reporting you have to be involved in in order to justify the funding you receive or possibly scale or replicate your program in the long run. Next slide.

So what you'll find with the steps in the roadmap is that each is broken down into some guiding questions. And then it includes key recommendations. And so you'll probably be thinking about, when you're working with Step 1 of the roadmap, why is distance learning being measured here? And who will use the data? Who's the audience? And how will the data be used in the long run? So these are all background questions to Step 1. And from thinking about those, it's possible to go on and participate in Steps 2, 3, 4 and so forth. So the roadmap will help ensure that your MEL plan and implementation align with what you aim to measure and really kind of center you in your main purpose and objectives around your evaluation.

And that's the point of Step 1. Emily, over to you for Steps 2, 3 and 4.

>> Emily Morris: Perfect. Thanks, Rebecca.

And just to reiterate, for Step 2, we have categorized the metrics into reach, engagement and outcomes. And these will vary -- the metrics will vary depending on the context and the modalities that programs are using. But reach roughly is looking at technology devices, infrastructure, connectivity. Engagement is participation in and use of the programming. Outcomes is change in knowledge, skills, attitudes, behaviors in teaching and learning. And so the roadmap includes a number of illustrative metrics critical to planning.

But it's also worth keeping in mind that in order to measure quality, using a combination of reach, engagement and outcomes measures is important. So in the roadmap, in the next slide, we have presented some key questions: how are these going to be measured in the programs that you're implementing, what are some examples, how can teams build these measures systematically into monitoring, evaluation and learning plans and what kinds of equity analysis should be considered. We also provide the recommendation of using all of the measures as feasible and identifying who is being reached and engaged and who's not being reached and engaged from the onset.

So that's looking at equity from the onset. For the third step -- oh, and for those of you that are working with USAID programs and funded projects, a standard foreign assistance indicator is in draft. And that is really just looking at the reach and the percent of learners that are regularly participating in distance learning programming. And so again, that's going to be coming out soon and is just looking at the reach aspect of distance learning.

The third step is, as you heard in the video, thinking about which measures will be remote, which will be in-person and ideally using a combination of remote and in-person where feasible. Some key questions to think about are what is feasible and what is safe, but also thinking about the safety of teams, access to technology infrastructure, all of these considerations, thinking about equity and geographical reach -- all of the different equity considerations that need to be taken into account and which technologies will be used, what access there is to technology devices. And key recommendations, again, as you saw in the video, are integrating in-person and remote and ensuring marginalized individuals and groups are included in the data collection. Our fourth step that we go over in the roadmap is really the mixed methods and combining the different types of methods, quantitative and qualitative, in the data collection. The key questions that we help users think through are what methods can be used? What technologies should be used? And what kinds of equity analysis should be used? And again, mixing methods to collect data that aligns with the aims from Step 1 and the goals of monitoring, evaluation and learning and ensuring that data collection efforts are not further marginalizing participants.

So the final reiteration, the roadmap is in the document. And it goes through all these different steps and provides illustrative examples from the case studies that -- in the countries around the world that participated in this evidence gathering. I am now going to move to the section of -- the exciting section of this webinar where we hear from our experts.

So we will be hearing from Mary Burns and Dr. Barbara Means. Mary is an education technology expert based at Education Development Center and works at every level of the online learning landscape. She designs and teaches online courses, evaluates online learning programs for teachers, has developed strategies for education technology and distance learning for schools, for states, universities, Ministries of Education in a number of countries. She advises bilateral and multilateral donors on distance learning and has published popular and peer reviewed articles, book chapters and books on teaching with technology and distance education. So welcome, Mary.

And we have Dr. Barbara Means, who founded the Center for Technology and Learning Research Group and has served as a codirector for many years. Her research examines the effectiveness of innovative education approaches, supported by digital technology. Her recent work includes evaluating the implementation and impacts of newly developed, adaptive learning software.

She's also studying the long-term effects that attending an inclusive STEM-focused high school has for students from underrepresented minorities. A fellow of the American Educational Research Association, Dr. Means has served on many study committees of the National Academies of Sciences, Engineering and Medicine, including the one currently producing a companion volume to the classic 'How People Learn'. She's advised the US Department of Education on National Education Technology plans and authored or edited more than a half dozen books related to learning and technology. Barbara earned her degree, her PhD in educational psychology, from the University of California Berkeley. So welcome, Dr. Means and Mary Burns.

And I'm going to ask them to share for five minutes each some of their insights in response to the questions that you all submitted when you registered for the webinar. And then we will open it up to questions that you have in the chat and other questions that you have submitted. So handing to you, Mary, and welcome.

>> Mary Burns: Right. Thank you and good morning, good afternoon, everyone. It's nice to see some familiar faces. I just want to thank USAID and EnCompass for the opportunity to participate in these webinars, I have learned a lot, and for the roadmap, which I think is really a valuable service to our field.

So special kudos to Emily and to Yvette. And I also want to say just how excited I am to be on the same panel with Barbara Means. She is someone whose work I have consulted for years. And she really is just such an expert. And we are so lucky to have her with us today.

So I'm just going to use a little bit of my time not to talk so much about technicalities of monitoring and evaluation but really to talk about distance learning, particularly online learning and the roadmap writ large. So I think, you know, to begin, I guess I just want to say that I think we know this. Every distance technology has its own set of complexities. But I would say that probably no distance technology has as much complexity as online learning, which is really what makes it very powerful, but also makes it rather vexing and challenging for us to use, especially in some of the contexts in which we work.

So just briefly, I'll talk about online learning through that lens of reach and engagement and outcome. So in terms of reach, you obviously need internet access to access online learning. And that's really been a problem in our field for a long time. But the news is good.

It's getting better. In 2018, 42% of people in the world had access to the internet. In March of this year, that number had increased to 59%. And almost all of that growth is in the low- and middle-income contexts in which USAID works. So there's lots of other gaps in terms of usage gaps, connectivity gaps. But at least in terms of actual internet coverage, that figure is promising.

So in terms of engagement and outcomes, again, I think perhaps no other distance education modality is really as diverse or as highly differentiated as online learning. There's incredible divergence. Or to use research and evaluation language, there's incredible heterogeneity in terms of online learning. And it really differs in so many different ways. It differs in how it organizes instruction, whether it's 100% online or blended learning or hybrid learning or HyFlex learning or web-facilitated learning. It differs in how it's delivered, whether it's synchronous or asynchronous or now bisynchronous.

It differs in terms of its delivery systems, whether it's the learning management system or Zoom or a standalone software like Nearpod or Google Classroom or HyperDocs or Choice Boards. It differs in terms of scale from one student learning alone to a cohort-based course to a massive open online course where you have tens of thousands of online learners. It differs in formats, whether you have a class or a course or a meeting like this, which is an example of online learning. And it differs in terms of content. And that can be text, games, simulations, multimedia, video, audio etc. And of course, it differs in terms of the hardware that we use to access it, whether it's a smartphone or a tablet or a laptop or a gaming console.

So the great news about online learning is that it generates tons of front-end and back-end data that we can use to help with measurement. But I belabor this point on the heterogeneity because I think when we're measuring things like engagement and outcomes, it really affects in many subtle ways how we do those measurements. So for example, you know, are we comparing apples and apples, or are we comparing apples and oranges, if one group is learning synchronously but another group is learning asynchronously? Or, you know, are we measuring an intended competency or a hidden competency? So in other words, if we have a student in a self-paced online course that involves tons of reading, are we really measuring that student's ability to read and comprehend online text, or is it the actual topic itself, because sometimes, the former gets in the way of the latter? And finally, I think, you know, is the technology influencing the intended constructs we want to measure, because when we are evaluating something online, we're actually often evaluating, subtly, the technology which is being used, because learning online via phone is very different from learning online through a laptop. So I just raise these points for reflection and to really serve as a bridge to talk about the roadmap, because I think the power of the roadmap is Number 1, it addresses these modalities and these different types of online learning modalities. And I think Number 2, what I really appreciated about it is that Emily and Yvette have helped us make this gentle paradigm shift from just monitoring and evaluation face to face to doing it in a more distance-based way because as we scale these online offerings and distance offerings, we will have to do more distance-based measurements.

And then finally, I just want to express a hope, which is that as USAID really formalizes this monitoring and evaluation process around online learning and distance learning in general, that we begin to build a research base for distance learning in the contexts in which we work in the Global South, because right now we don't have a lot of great measurement methods. We don't have a lot of great data. We're still taking our cues and our information and our paradigms from the Global North. So I think I'm hopeful that as we do more of this M&E around distance education, as [inaudible] to this roadmap, that we can begin to build a research base that really serves our purposes. So thank you.

>> Emily Morris: Thank you, Mary, for giving us so many things to think about.

I'm going to ask Dr. Means now to speak a little bit.

>> Dr. Barbara Means: Well, thank you, very much. And I want to thank Mary for really giving that great context and perspective for this work and also echo her praise for the roadmap. I did take a look at it and found it very useful, logical and accessible. I do want to build on Mary's point about the heterogeneity of online learning. And that does introduce the need to be very clear about what and how we measure.

So I would say that the foundation for implementing the roadmap is having a well-articulated plan for the distance learning initiative itself. It's what evaluators often call a theory of action. And what this theory of action does is it specifies the intended outcomes, the intended beneficiaries and the intended nature of the beneficiaries' engagement with whatever the intervention, that is, the learning intervention, is.

So it's entirely compatible with the roadmap. But you really need to start there both for the design of your intervention, your program and then for its evaluation. And this is important, for example, in thinking about the many different kinds of online distance learning because those are going to influence the nature of the engagement, and hence what you measure. So we do find that there are important distinctions between engagement and outcomes typically found in fully online learning experiences and those that are some combination of online and in-person. So we sometimes talk about blended or hybrid or HyFlex.

So that's an important distinction. And there's also an important distinction, I think, between synchronous, that is where you're having interaction at the same time even though not the same place, and asynchronous online activities. So in past research, we found rather different outcomes, depending on which type you're talking about. So we know that online learning -- fully online learning can produce learning outcomes. And in many cases, they're equivalent to face to face instruction. But there's also data suggesting that weaker students, whether it's because they're younger, they have less preparation, they're more anxious, do less well with totally distant learning than they would have in a face to face class.

So generally, I'm a proponent of trying to do blended learning if you can. And I will talk somewhat about that blended learning advantage. It can involve the instructor meeting with students face to face on some days and having students work online either from home or in a computer lab on other days. That's one form of blended learning that's used in many postsecondary courses in the United States, especially math courses.

Or it might involve students having a subject matter teacher online and also a teacher or coach or teacher's aide who is with them in person. This latter model, for example, was used in Florida when the state put a cap on class size. And many secondary school students didn't have enough qualified teachers.

So they had their teacher of record be from the Florida Virtual School, which they did online. But they were gathered together with a teacher's aide or a coach in a technology lab within their high school. And that was a way to overcome that lack of teachers. Now when you have these very different experiences and you need to evaluate impact, the important thing is you're going to need a comparison or control group for which you'll have the same measures that you use for your online group. And that's where it gets tricky. This can be difficult.

If you're developing your own learning assessment, it has to be fair to students who are not in your particular online learning experience. And it may be difficult to induce a comparison group to actually take the extra assessment, whatever measure you're using. As an alternative, you can look to outcome measures that are automatically or that are already being gathered in your environment. It might be a mandated test for students of a certain age, or it might be whether or not they attend the next course in a course sequence. And when you do that, that gives you a lot of options in terms of being able to find a control group without having to go in and actually touch them in person, which makes things easier.

But there can be a tradeoff between measuring what you really care about in your theory of action -- that is, it might be conceptual learning -- and the kinds of measures that are automatically gathered for all students. So that's an important decision to engage in as you are planning your program and your evaluation. I would also say -- I don't want to go too far into the technicalities. But in terms of evaluation design, if you are not in a position to be able to randomly assign people to either take your treatment or be in the comparison group, then you're always going to have to rule out alternative explanations for any positive result you find.

And that's part of the reason why it's so important to get a measure of students' prior educational achievement or a pretest, because that is so much a predictor of what the outcome is. Other student characteristics, the nature of the experience that students have and their instructors online is very important. And also, as Mary mentioned, what technology tools they're using, the time they actually have exposure to and their actual learning experience.
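The point about pretests can be illustrated with a minimal sketch. It assumes each student has one pretest and one posttest score and applies a simple covariance-style adjustment: the raw difference in posttest means is corrected for the groups' different starting points, using the pooled within-group slope of posttest on pretest. The function name and the sample scores are hypothetical, and this is one common way to adjust for prior achievement, not a method described by the speakers.

```python
from statistics import mean


def pretest_adjusted_difference(online_group, comparison_group):
    """Each group is a list of (pretest, posttest) pairs.

    Returns (raw_difference, adjusted_difference) in posttest points.
    """
    online_pre = [pre for pre, _ in online_group]
    online_post = [post for _, post in online_group]
    comp_pre = [pre for pre, _ in comparison_group]
    comp_post = [post for _, post in comparison_group]

    # Raw difference ignores where each group started.
    raw = mean(online_post) - mean(comp_post)

    # Pooled within-group slope of posttest on pretest.
    sxy = 0.0
    sxx = 0.0
    for pres, posts in ((online_pre, online_post), (comp_pre, comp_post)):
        pre_mean, post_mean = mean(pres), mean(posts)
        sxy += sum((x - pre_mean) * (y - post_mean) for x, y in zip(pres, posts))
        sxx += sum((x - pre_mean) ** 2 for x in pres)
    slope = sxy / sxx

    # Remove the part of the raw difference explained by pretest differences.
    adjusted = raw - slope * (mean(online_pre) - mean(comp_pre))
    return raw, adjusted


# Hypothetical scores: the online group started 20 points higher on the pretest.
online = [(60, 65), (70, 75), (80, 85)]
comparison = [(40, 40), (50, 50), (60, 60)]
raw, adjusted = pretest_adjusted_difference(online, comparison)
print(raw, adjusted)
```

With these made-up numbers the raw gap is much larger than the adjusted gap, which is the alternative explanation (a stronger starting group) that a pretest lets you rule out.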

So that need for qualitative as well as quantitative data is really important, as the roadmap describes. So I want to say -- finally, I'll just conclude, whether you're doing formative work where you're measuring your own program over time to make it get better, or you're trying to do the more summative work where you say this is the impact of the program, it's important to take an equity lens. I just want to reinforce that part of the roadmap.

So to show the data for groups that are important, that traditionally have had less access to good learning experiences, you want to make sure they are getting exposure to it, that they are having the same intended experience and that they are having the same intended outcomes. And I'd also say look to involving those intended beneficiaries in the design of your measures of engagement and outcomes and actually collecting data. Students can run focus groups for other students. And they can, in fact, be wonderful data collectors for you. And it's a way to incorporate their perspectives. You learn from them.

They learn from the experience. And it's a very empowering thing. We're trying to do this at Digital Promise in a number of our projects. And it's been working very well.

So I'll close there. I do have a book with a lot more information. I'm going to put it in the chat if people are interested in finding it.

I don't get any money from this. But it's not free, I'm sorry to say. Okay. There you go.

>> Emily Morris: Thank you so much. And just to reiterate again, we are so grateful and lucky to have Mary Burns and Barbara Means here to speak and use all of this time to answer your questions that you are starting to enter in the chat. So please keep the questions coming. And just a quick note that speaking to the theory of change and theory of action and the planning out of distance learning, there is a comprehensive toolkit for planning comprehensive distance learning strategies that lays out some of how you think through theory of change, how you think through the monitoring and, at the same time, costing. That will be coming out before September. And it's a step by step look at how to plan distance learning programs, speaking to a lot of the points that Dr. Means discussed.

And so that will be shared further before September. So I'd like to start with some of your questions. And one of the questions that has come through is looking at age differentiation. So recognizing that online learning looks different for higher education and older youth, and depending on technology literacy, from early childhood learners who may be using technology with parents and caregivers. So could you speak a little bit about how those measurements differ for different age groups and any thoughts that you have on sort of the age differentiation and online learning and the different measurement considerations that that leads to? I'll ask -- Dr. Means, you can start. And Mary, as well, has a lot of thoughts.

>> Dr. Barbara Means: Well, I will start. I do think you can -- again, this is a time when it's important to work with the designers of the program. So there actually are measures you can embed, assessments of students' engagement and/or their learning, actually, in the design of, you know, whether it's an app or whether it's some kind of web-based learning experience, even for young children. They make choices when they click a mouse.

And they decide to move something here, move something there. So that has been done. And it can be done very successfully. I also think those qualitative measures with young children are really important. Some of my colleagues, Dr. [inaudible], in particular, have done a lot of work with the design of early learning in STEM areas in particular. And they go out. And they actually work with designers and take the early prototype programs. And they study how children and children with their caregivers interact with those particular apps. And oftentimes, they learn a lot of things from observation that you would not know just from looking at the data from the app.

And so they're able to make sure later that when they take data from the app, it's actually measuring what they think -- what the designers intended to measure. They've also involved working with caregivers of young children and giving them a voice in the design of these learning experiences. And that's proved to be really a value add to the design and quality.

>> Mary Burns: Hi folks. I'm going to keep my camera off. I've got workmen in the apartment right now.

So yeah, I'll just add two things. One is I think it might be really interesting to take a look at the research that came out of the Xerox PARC Institute in the 1970s and 80s because this is what researchers did. They studied the way young children interacted with technology. And so it led to things like the mouse, the graphical user interface, because as we know from Jean Piaget, you know, children go through these various stages of learning, one of which is this operational stage where you're kind of touching everything.

And the second is I'm just going to address the measuring children's behavior with a story from India. I typically work with adults. So I wouldn't really be able to answer this, but -- the question.

But in India, I worked on a professional development program. And we really wanted to assess whether it had any impact or not. You know, we went -- we did classroom observations. We talked to teachers. Of course, everything was wonderful. And, you know, response bias is real.

So what we actually ended up doing to really test whether this intervention was working or not was -- and we did this in Indonesia with some distance education courses -- is we actually brought a group of kids into a room. And we started a lesson. But we gave them very scant directions. And we watched their interactions and listened to them talk. And through that, just by observing them getting into groups, you know, sharing roles, the kind of talk that they used in their groups, the way that they approached the subject area, we were able to see that the teachers had actually used a lot of the content from our courses. So it was a really nice kind of low invasive, noninvasive and play-based way, really, to assess what -- you know, if children had learned through their teachers in these online courses.

>> Emily Morris: Great. Thank you so much. And maybe just building on this, we -- so that was a great question. Angelipose Sergio is also talking in terms of -- in terms of Honduras and trying to collect data and finding it difficult to measure with younger learners and asking about mixed methods. So I'm wondering if you had any follow up thoughts on mixed methods and how do we think using mixed methods to involve caregivers and others.

So if you're also working with early childhood or younger learners that you may be also involving others, teachers or caregivers that are involved.

>> Dr. Barbara Means: Again, I'm very high on the idea of involving them, particularly in collecting the qualitative data.

And that includes listening to their insights about what it is important to measure and if you can incorporate them into your M&E design with some kind of compensation for their involvement. So they're actually getting compensated for advising you on your measures, getting trained on how to administer those measures and then providing you the data. I think that respects their time and respects them and can create a very fruitful partnership.

>> Mary Burns: And just to add. You know, we've heard folks on previous webinars talk about, for example, I think Liana talking about Ubongo Kids where they actually have a camera in the room, and they're observing children as they interact with content.

You know, a great tool at our disposal, a terrific M&E tool, is the cellphone. And there's all kinds of research, whether it's from, you know, San Francisco Unified School District. They have a partnership with Stanford that's actually using text messaging and just simple messages three times a week to parents to help them get their kids to be ready for preschool. We've seen this in Botswana, a previous USAID webinar, where [inaudible] and Chris talked about the work of -- is it Young Love or One Love? Emily, you have to help me.

One Love is a Bob Marley song. Young Love is --

>> Emily Morris: Young Love.

>> Mary Burns: So, yeah, where they're actually using cellphones and interacting with parents. And through that, they were able to assess what children are learning. So I just think, you know, in Honduras, the rates of cellphone ownership, like many places, is very high. And that's a terrific M&E tool.

>> Emily Morris: Great. Thank you so much. I'm going to move on to a question from May.

And speaking about in context where there's not a robust learning management system or where teaching and learning communications is in different ways, so mobile phone apps, text messaging, etc., how can we do data collection that is low touch in a context where there are low incidences of learning management systems and limitations on analytics?

>> Dr. Barbara Means: I think the general strategy is try to use the technology that is available and that learners are using. So you can use text messages. You can ask for -- you know, you structure the response. So it's not going to be easy to gather as much data as you would with people who had access -- better internet access and learning management systems.

But it still can be done. And more and more of the work is actually looking at using mobile devices as data collecting -- collection instruments, as Mary indicated. So I think there are great strides in this area being made, but more to be made. And certainly, many of you are working in challenging contexts.

>> Mary Burns: Yeah. You know, to answer that question, I think I'd like to ask a lot of questions. So most online tools, whatever they are, have -- they generate some kind of data. You know, there's that famous saying, especially if they're free, that famous saying that if the technology is free, you are the product. So if you're using an online classroom like Google Classroom, if you're using, you know, a standalone program like Nearpod, they are generating data. Now, you know, will they share that data is the question.

But, you know, I guess there is something -- and I admit I don't know anything about it. I know very little about it. But increasingly, online programs are using something that's called an LRS, a learning record store. So I would actually ask you to look that up, because, as I understand it, it's a tool for generating data collection, although, does it have to be with the learning management system? I don't know.

One more thing, and this is coming down the road, and this won't be announced until later on. But ISTE, which is the International Society for Technology in Education, has been working for years on this idea of how do we start to collect common data across disparate systems. And so right now, even if something isn't technically online learning -- you know, most of what we do now is online because of software as a service. Microsoft is now no longer -- store -- Office isn't on our computer anymore.

It's on the cloud. So ISTE has created this. And they'll be rolling it out. So it's something to look for called a universe -- it's -- I just know the initials.

I'm sorry. It's called a UPC. And it is actually something that when organizations get one, they'll actually -- like a USAID -- they'll actually be able to generate data across a whole range of different types of software platforms, etc., etc. So just without specifically answering your question, because, again, I think I'd have to ask a lot more questions -- I think those are two things, that learning record store and then this whole idea of a UPC that will actually allow data collection across different platforms, although that's, you know, a few months away from being unveiled.

>> Dr. Barbara Means: Right. And I think it is farther from being actually operationalized in any particular place.

So I do think it's going to take a while before you see the benefits of that. It's, you know, like the internet access. It's getting better and better. Great strides have been made.

But that doesn't mean that everybody has what is needed to actually implement it and use it for either delivery of learning or monitoring and evaluation.

>> Mary Burns: So this is the -- it's universal product code. I just looked it up as you were talking. Yeah, thanks, Barbara, for that contextualization.

>> Emily Morris: Thank you for sharing those questions. And if you feel like your question hasn't been sufficiently answered, feel free to ask a follow up question in the chat. And we'll try to help answer those even further. So we have a number of questions, including ones from [inaudible], in the chat in really looking at what is -- what are the best ways to capture actual usage of programming and engagement with the available resources? And maybe to tag onto that, how do we measure time spent on the task -- learning tasks in online learning?

>> Dr. Barbara Means: You know, the most consistent and broadest data you're going to get, if you can, in fact, get it, is from whatever the app is or the learning management system itself. So I talk about backend data. So it's automatically collected. And certainly, the more sophisticated applications do this and can provide that data to you if you, in signing your agreement, or whoever has signed the agreement, has made that part of what will be available. So you need to make sure that that is in your contract because you don't necessarily automatically get it or get it in a form that you can use.

So we have done this quite a bit. You need to arrange your agreement. And we also find that the data that is usually generated may not be exactly what you need for your evaluation. So here, again, if you can have good relationships with your vendor, your supplier of technology, they often are willing to work with you and may give you different data. For example, one of the things they typically will do is they will give you the number of days in which a person logged on. Now that may or may not be something that you can attribute to a particular student or even a particular class, depending on how the login is done.

Also, it could be that the computer was just left on, and nobody was doing anything with it. What you would really like from an evaluation standpoint is you would like to know what particular resources -- you want to know what student it was and who the teacher is, what resources were used. And then, also, the sequence of moves that the student made. So one of the things we found is very predictive, for example, is if a student takes a quiz on how their understanding of a content area is going, what did they do afterwards if they didn't do well on the quiz? Some students will immediately just try it again, hoping by luck, they'll hit the mastery criterion. Other students will actually do what the system tries to nudge them to do usually, which is to go back and study the content again. And it turns out that those who have the go back and study the content again behavior tend to do much better in courses than those that just either skip it or just try again without any study.
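The "what did they do after a failed quiz" measure can be sketched as a small tally over backend event data. This is only an illustration: the event format (a time-ordered list of dictionaries with `student`, `action` and `passed` keys) and the action names `quiz` and `review` are assumptions, since every platform exports its logs differently.

```python
from collections import Counter, defaultdict


def next_move_after_failed_quiz(events):
    """Tally, per student, what followed each failed quiz attempt.

    `events` is a time-ordered list of dicts in a hypothetical export format:
    {"student": "s1", "action": "quiz", "passed": False} or
    {"student": "s1", "action": "review"}.
    """
    by_student = defaultdict(list)
    for event in events:
        by_student[event["student"]].append(event)

    tallies = {}
    for student, student_events in by_student.items():
        counts = Counter()
        for i, event in enumerate(student_events):
            if event["action"] == "quiz" and not event["passed"]:
                has_next = i + 1 < len(student_events)
                next_action = student_events[i + 1]["action"] if has_next else None
                if next_action == "review":
                    counts["reviewed"] += 1   # went back to study the content
                elif next_action == "quiz":
                    counts["retried"] += 1    # tried again without studying
                else:
                    counts["moved_on"] += 1   # skipped ahead or stopped
        tallies[student] = counts
    return tallies
```

A tally like this only works if the vendor's export identifies the individual student and preserves the order of events, which is the kind of detail that has to be negotiated into the data agreement.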

So this is something you often have to negotiate to get the meaningful measures out of the system. Again, people are getting much more sophisticated about this all the time. It's getting better and better.

>> Mary Burns: To respond to the time question, I guess I'd approach it from the design end. You know, I know -- I'm guessing a big tension, of course, in online learning is that it really is supposed to be more competency or outcome-based versus time-based.

But that's the tension we have as we shift from, you know, a brick and mortar system to a much more online system. So I think there's a few things that you can do to make sure that the time that you're ascribing to an online course actually is the real time. And so some of these things are easy.

So for example, if you create a video, the video has an automatic time, you know, four minutes and 34 seconds. Or if you create a multimedia application, same thing -- two minutes, etc. It gets a little bit trickier with things like readings because you don't know typically how long it takes someone to actually do a reading or, you know, answer a discussion question. So what I would say is I think this really, really speaks to the absolute importance of making sure, especially for USAID people, that when people are designing and implementing online courses in your programs, that there is piloting, because what you want is you want someone to go through -- when I design courses, I get user testers. And I actually have them write down how long every single thing takes.

And then I combine the hours and add 20%. And that's kind of the time. I feel like that's really the best I can do. But yeah, you can't get actual time on the backend, unless you actually know what the time is on the frontend. And that's a design issue.
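The piloting arithmetic described here (record how long each item takes, combine the times, add 20%) can be written out as a short sketch. Averaging across several user testers is an assumption added for illustration, as are the function name and the sample activities; the 20% buffer is the figure mentioned above.

```python
from statistics import mean


def estimated_course_hours(pilot_minutes, buffer=0.20):
    """Estimate course length from pilot timings.

    `pilot_minutes` maps each activity to the minutes recorded by each
    user tester (hypothetical format). Timings are averaged per activity,
    summed, converted to hours, and padded by `buffer`.
    """
    total_minutes = sum(mean(times) for times in pilot_minutes.values())
    return total_minutes / 60 * (1 + buffer)


# Hypothetical pilot data from two user testers.
pilot = {
    "video": [5, 5],          # fixed running time
    "reading": [50, 70],      # varies by reader
    "discussion": [40, 70],   # varies by participant
}
print(round(estimated_course_hours(pilot), 2))
```

Fixed-length items such as videos contribute little uncertainty; the spread between testers on readings and discussions is where the buffer matters.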

And again, I think if you are using a learning management system, that's really the beauty of it because it can track how long you spend on something. And if you're using time as a proxy for completion, then you can set -- your course designer can set up the LMS to make sure that the student has to watch the whole video in order to get credit. It's harder to do with a reading. Or they have to do this discussion in order to get credit.

>> Dr. Barbara Means: And, of course, you can also build in assessments within whatever the learning materials are, those interim assessments. And the sequence of scores on them are very useful outcome and engagement measures.

>> Emily Morris: Thank you so much. So I just want to reiterate: what I heard from both Barbara and Mary is thinking about the design and measuring at the design stage. And thinking about analytics takes negotiation with the different agreements that you may have with the different software and tech companies that you'll be working with. It is really negotiating those and thinking about the data from the outset. So I just wanted to reiterate those points.

We had another question from [inaudible] about how do we measure learning in the absence of standard assessment. So when -- during the transition in COVID and having to go online very rapidly, how do we test that when we don't necessarily have a treatment and control group? And I want to just say from our learnings from the panel on Tuesday, that also thinking about formative assessments versus summative assessments is really important. Formative assessments, which inform teaching and learning, are not necessarily for accountability purposes and don't need to have the same reliability and other measures, because those are for teachers and caregivers, versus the summative, which are the ones that we're talking about more and that need more thought in terms of reliability.

And I don't know if Barbara or Mary, you have anything to add on to that, how do we measure. And I guess my question back to [inaudible] would be are those -- do you need to measure for formative purposes for the teacher to know what's happening and for wellbeing of students or are you being asked by a ministry or Department of Education to be reporting on, because those are two different types of assessment [inaudible]. And so maybe, Barbara, you have something you want to contribute to that question.

>> Dr. Barbara Means: Well, I would just say you made a very important distinction.

I think both the formative assessments are very helpful in -- they're helpful in promoting the student's learning. And so it's a good thing to have included in your learning design. It's also very helpful for you in improving your program. As it's going on, you can look and see are there certain concepts or areas where students are struggling.

Or maybe it's a subset of students that you want to look at, whether you need to have additional experiences or a different approach for those students that need to return to the material a second time. In terms of the summative assessments, if I could wave a magic wand, it would be something that actually is taken from multiple points in time. And you add up the progress on the formative assessments. And then you create some kind of summative outcome measure. And we wouldn't have to have the external -- my colleague who calls it the drop in from the sky assessment.

But most of us live in a world where there's an education system that, for accountability purposes, wants those drop in from the sky assessments. So I do encourage you to look at those. The advantage is that probably, you can get a comparison group that has to take them as well.

It may be that there are just parts of them that are relevant to your particular learning program. So if your learning program, for example, is on maybe the very basic skills in mathematics, maybe that's the portion of the summative assessment you want to look at. Often, there are scales for subsets of the formative -- of the summative assessment. Or maybe you're focusing on the conceptual part of learning. And then there'll be a problem-solving scale on the summative assessment.

So the advantage of those is you can get a comparison group. They're widely regarded within political contexts as being important. Another thing I would say is think about possibly using life outcome measures as your criterion.

And those can be things like the student stays in school for the next year. So sometimes, those are relatively simple to gather. But they're absolutely critical for the welfare of individuals.

>> Emily Morris: Thank you so much. And maybe I'll tag on to that question with -- another question we had was on how do we also measure social emotional? So these life outcomes in social emotional learning in an online space. And then there's a question about -- from Angelie about target setting. When we're asked to set targets on reach and engagement outcomes, how do we know responsive targets to set for our different outcomes?

>> Dr. Barbara Means: Maybe I'll take the first and let Mary have the second. It sounds like I'm [inaudible].

But I will say that on those social emotional outcomes, there has been a lot of work in this area recently. And I put in a link to a website from the collaboration -- the Collaborative for Academic, Social and Emotional learning. They have a whole set of instruments and a guide to using them. These have been used in prior research. So many of them do have some reliability and validity data.

You can look to those. I would caution that this work was done predominantly in the United States. I think there are probably other resources from other countries. Some of these things are cultural in many ways. So that pilot, that pretesting that Mary talked about, I think, is really important if you're translating one of these instruments to pretest it in your language and culture you're working in.

>> Mary Burns: I was hoping Barbara would take the second question [inaudible] the first question. But no, just, I'll piggyback on that to say that yeah, this is -- there is a lot of work being done on this. And this is an area that people are struggling with.

But also, there's, you know, I think a lot of really interesting stuff happening, certainly at the international level. And, you know, one way in terms of looking at social emotional learning is to use game-based approaches. So that's not necessarily to create a game because some of those are very simple, as you know, like what's the capital of Burkina Faso.

But much more, you know, simulations, game-based principles where students have to, you know, solve a problem. You know, an example that people might know is this whole notion years ago of something like SimCity. So you have to solve a problem.

You're confronted with roadblocks. And you have to persist. And just the fact that you kind of get through and create something is kind of -- is a proxy measure for persistence. You can add to those measurements through facial recognition systems that, you know, look at your frustration, which is a form of engagement, your interaction, whether you give up, etc. And, you know, increasingly, people are using more and more things like artificial intelligence to kind of start to look at social emotional learning. Then you can use technology, I think, as part of kind of a larger problem-based or project-based activity. And maybe it's not the technology that you're measuring. And now I'm really talking about in-class uses of technology. But it's much more the whole instructional design itself.

You know, are students cooperating? Are they collaborating? Are they flexible? Are they persisting? Etc. Etc. And so in terms of the target setting, how do we know how to respond to a target? You know, that's another question that I'd want to answer with a lot of questions. I guess, you know, in our work, wherever we work, it's context, context, context, right? Location, location, location.

I mean, I would say that we try to design targets that are realistic in the context in which we work in terms of, you know, people's literacy levels, their numeracy levels, their years of schooling, their access to schooling, their access to technology. So I apologize if that's not a good answer. I'm happy to follow up. But I just answered it that way. Barbara, I don't know if you want to add anything to that --

>> Dr. Barbara Means: No --

>> Mary Burns: -- non-answer.

>> Dr. Barbara Means: Yeah. No, I think that's -- you know, it's often the case, I find, as an evaluator -- I go in and I -- and whoever the funder is who wants the summative data, sometimes, they have expectations that I think are unrealistic. I try to bring prior research and discuss with them, you know, what would you really expect by that time. But it is something you have to be careful of.

And you can't always win over, you know, win over the funder. I do try to make sure I'm building in some measures that maybe are somewhat less ambitious. But, you know, you measure a couple of different things and measure some outcomes that in retrospect, they'll be happy they had that other measure too because it will show something good even though they didn't get the miracle that they thought would occur in the first iteration of their program. So I guess that's all the advice I can give is, you know, where you have a choice, you try to negotiate some of those things, build in some fallback positions and redundancies in your data.

>> Emily Morris: Great. Thank you so much.

And I love the reiteration of setting targets based on evidence and learning. And so one thing that we have also learned from the different groups and we reiterate is going back to those measures and going back with data as the -- as you collect data as well and reforming and thinking about targets is an ongoing process as well. So thank you for those points. I have two more points from some of the questions that were submitted at the beginning about quality. How do we measure quality of online learning? And then I have a question related to cost benefit and knowing cost benefit tradeoffs for different types of online and any thoughts either of you have on those two issues.

So quality and then cost benefit.

>> Dr. Barbara Means: Well -- go ahead. Go ahead.

>> Mary Burns: No, no, you start. No, no. No, I mean, you know, I would just say in terms of quality, first, you know, just standards or criteria for quality. And you have to have those for, I would say, everything. You have to have them for the content, for the instruction, for the assessments.

You know, these can be international standards. They can be locally developed ones. And then I think you have to develop checklists and rubrics to assess your online program for these standards of quality, for rigor, for fitness. I think you have to make sure that the quality of outcomes of the educational experience is consistent between modes. I mean, frankly, I find there's a lot.

So now, you know, before COVID, online learning kind of meant one thing, which was a learning management system. After COVID, it means Zoom. But I'm talking LMS period BC, Before COVID. I mean, oftentimes, what we did was we really worked on the synchronous learning and left the asynchronous kind of out there, let them figure it out on their own. And we can't do that. So you have to make sure that you have consistent quality of outcomes.

And then you have to systematically collect and analyze this. I would say especially student feedback is a real core component of academic quality assurance mechanism. So yeah, paying attention to these products, to these processes, to production delivery systems. You know, universities will often have quality assurance systems for online learning.

But our donor programs often do not. And I think we need to. In terms of the second one, I'll just -- I know Elena is on this call because I -- and she gave what I think, with all due respect to every CIS presentation I've been to, possibly the best presentation I've ever been to where she talked about the difficulty of doing cost benefit analyses and return on investment stuff. So I'll leave that to Elena because that's her area of specialty. And she's at USAID.

So you can talk to her. But I think we really can't measure that whole idea of cost until we know the whole total cost of ownership of online learning.

2021-07-17