Is Visibility an Art of Possible or Impossible?

- [Lu] Hi, everyone, welcome to my session. It's 8:30, so I'll get started. My name is Lu. I never pronounce my last name in front of an audience because it's difficult to pronounce. I'm a principal system engineer at The Home Depot.

My domain area is SIEM, SOAR, and general enterprise logging and monitoring. I built my career on Splunk. I worked at a global financial institution for a couple of years and then became a Splunk PS consultant at a small consulting company in Texas, where my customers included a couple of local governments and higher-education institutions. At Home Depot, I architect and design our SIEM and SOAR solutions. Today, we are going to talk about visibility.

I'll begin by introducing the context, and talk about what visibility is all about and why it is important. Then I'm going to talk about one of the data pillars that makes visibility possible: logs. I'll go through a couple of case studies to walk you through the journey of building a secure logging program and what the maturity journey looks like. I'll talk about looking ahead and give you some takeaways from my session.

I have two kids. One is 10 years old and one is 8 years old. They are adorable, and they're lovely. They make my life every day, but they can be annoying sometimes. In the middle of this screen, my son is using his math book as a cover while watching YouTube videos without sound. The problem is that they are real people.

They run, they play, they do all kinds of activities. I can't keep my eyes on them 24 hours a day, every day. Shifting into our professional world, the same thought carries over.

What does visibility look like for a medium-sized company with 100 employees and 10,000 applications? What does visibility look like for a large company with tens of thousands of employees and hundreds of applications distributed across multiple geolocations and a hybrid environment? The scale can go on and on, and the complexity can go on and on as well. So what is visibility? What is visibility for IT specifically? Visibility is the level of data accessibility within our environment. It is achieved by enabling users like us, and systems, to access the right data to make the right decisions.

If you ask about visibility, it means different things to different people. The other day, I talked with a CISO sitting near me at the keynote. I asked, "What do you do every day as a CISO?" He said, "Every day I check: am I breached? What are my top priorities? What are my top vulnerabilities?" I asked, "Do you think you have visibility into it all?" He said, "No, there's shadow IT and unmanaged assets." So he talked about the visibility problem around asset management. If we talk with a network SME, they are going to talk about the traffic flows in the environment and how visibility into that flow data can help properly segment the network. If you talk with an IAM SME, they are going to talk with you about users, groups, federated identities, all that kind of stuff, right? This means that visibility needs to be defined with a top-down approach.

It is an organizational strategy and needs to be mapped into each specific area for implementation. We not only want to run our business with minimal outages but also to protect ourselves from all kinds of threats. Traditionally, monitoring has been used to help with this visibility.

It helps us detect what we want to know and what we want to be alerted on. Over the years, it has evolved toward another word, observability, which emphasizes a data-centric approach to help identify the unknowns. In simple words, monitoring is reactive while observability is proactive.

Seeing and observing are two different things. Both methodologies are widely used in companies, right? There are two aspects of IT monitoring. On one hand, we have operational monitoring.

On the other hand, we have our security monitoring. Both share a lot of commonalities. Both start with defining what to monitor and what to know. On the operational side, we use service level objectives and key performance indicators to tell us whether a service is up and running and whether it's meeting its SLOs. On the security side, we typically use common frameworks like MITRE and other components to help us decide what to monitor. Both areas also use some common analytics methods.

We can use single-signal alerting to detect specific scenarios, or correlate all the data together to reconstruct a full story before firing an alert. Machine learning and AI technologies help us detect anomalies, but none of those analytics methods can work without data. The data pillars for operational monitoring are metrics, traces, and logs.

Logs are also used in the security monitoring world, namely audit logs, activity logs, and so on. From a response perspective, both areas are always seeking ways to shift from manual operations to automation to achieve operational efficiency. Another commonality is retrospection to help close monitoring gaps: conduct blameless post-mortems or security incident reviews, and apply the lessons from what went wrong going forward.

Dig into the historical data and patterns to identify anomalous behaviors, and apply those to the data going forward as well. Feed the status of alerts, such as whether they are benign, back into the model to improve its accuracy. On the security side, there are additional processes for this proactive closing of monitoring gaps: purple teaming, threat hunting, and digital forensics. Visibility is hard. It's data management.

Without data, without proper data management, everyone is blind. No cybersecurity technology can function. So these are the three pillars of overall observability, where we can see that the intersection of metrics, traces, and logs is extremely important for pinning down the problem and responding most efficiently. The other aspect is that logs are used in both areas.

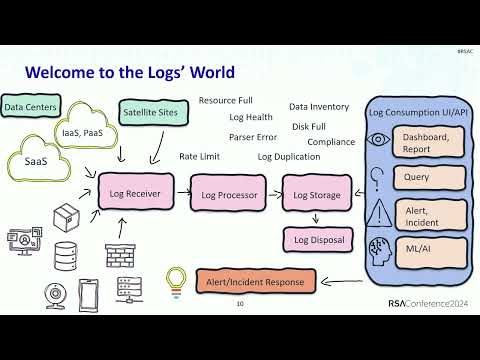

It is a common data pillar. Given the high volume and diversity of logs, it is a whole area of its own. Here we go. Welcome to the lovely world of logs.

This is a simplified log life cycle, but you can think of it as applying to any kind of data, any kind of pipeline. On the left side are what we call the Log Generators. Logs start locally, and they can be anywhere. They can live in our data centers, satellite sites, or IaaS, PaaS, and SaaS cloud providers. They also come in different log types: logs from traditional compute or containerized environments, network appliances, database logging, web applications, IoT, mobile, all those different kinds of log types.

Keep this in mind. In order for logs to be generated properly locally, there is certain configuration you need to do. Not all logs are enabled by default. You need to work with each log type. Take network appliances, for example, right? There are different levels of logging configuration, and you need to make sure they are configured properly in order to get the right data into the logging pipeline. Once logging is configured and the logs are forwarded, the first component in a logging pipeline is called the Log Receiver. A pipeline is nothing but moving data from A to B.

The Log Receiver receives the logs and passes them on to the Log Processor. The Log Processor is going to parse, filter, and organize your data before indexing it into Log Storage. You can do enrichment at that level as well.
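The receiver, processor, and storage stages described above can be pictured with a minimal sketch. This is an illustration only, not the pipeline from the talk: the JSON log format, the `ASSET_OWNERS` lookup, and the rule of dropping `DEBUG` events are all assumptions made for the example.

```python
import json

# Hypothetical enrichment table; a real pipeline might query a CMDB instead.
ASSET_OWNERS = {"web-01": "ecommerce", "db-01": "payments"}

def receive(raw_lines):
    """Log Receiver: accept raw lines and drop empty ones."""
    return [line.strip() for line in raw_lines if line.strip()]

def process(lines):
    """Log Processor: parse, filter, and enrich each event."""
    events = []
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # unparseable line; a real pipeline would keep it for review
        if not isinstance(event, dict):
            continue
        if event.get("level") == "DEBUG":
            continue  # filter: assumed noise for this example
        event["owner"] = ASSET_OWNERS.get(event.get("host"), "unknown")  # enrichment
        events.append(event)
    return events

def store(events, storage):
    """Log Storage: index events by host."""
    for event in events:
        storage.setdefault(event.get("host", "unknown"), []).append(event)
    return storage

raw = [
    '{"host": "web-01", "level": "ERROR", "msg": "login failed"}',
    "",
    "not json",
    '{"host": "db-01", "level": "DEBUG", "msg": "noise"}',
]
storage = store(process(receive(raw)), {})
print(storage)
```

Each stage is a plain function, so a stage can be swapped out without touching the log generators upstream.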

Log Storage can be tiered in order to save money. A lower-cost tier can be used to archive the data. You might have heard of a data lake, right, which supports historical analysis or threat-hunting activities. The high-cost tier supports the use cases where you need data retrieved faster, such as real-time monitoring. Well, after logs are available, we need to make use of them. That's the whole purpose of doing log collection.

The Log Consumption side lists the different ways we can analyze the data. We can build dashboards, we can generate reports, we can launch queries. We can also generate alerts, which is the purpose of monitoring those logs. And we can use machine learning to generate alerts as well. All of that output is a trigger for our incident and alert response, which I'm going to talk about more later.

So this is log usage. After logs have been retained for a period of time, we need to dispose of them. Keep in mind that logs are also data. They can contain all kinds of information. Keeping logs longer than necessary is also a risk exposure. Given the vast number of logs and the EPS (events per second) volume, multiple issues can occur in this pipeline.

I've listed some commonly known log issues. If you run a logging infrastructure on-prem, how much time is spent dealing with those problems? Even with a SaaS solution, those problems still happen. I'm going to talk about a few case studies listed here. I hope they resonate with you: a maturity journey from building a program to reaching a mature state. When building a secure logging and monitoring program, there are three different parts with distinct responsible parties and tasks.

Governance is the control and command. It's essential. It helps answer the questions of why we are doing this, how we are doing this, and what it's all about. It should consist of a list of policies, logging standards, and logging procedures and controls to help with program awareness and planning within the organization. It should include any compliance requirements that your organization is subject to, such as PCI or SOX. It needs a process for managing the risks when there is a logging gap.

Depending on the sector, different adversaries use different TTPs to target us. In order to catch them, we need to take that into consideration so we get the right logging elements to detect those activities. We also want to list the success criteria up front in order to measure maturity as we develop this program.

We also need a budget plan, not only for the dollar amount but also for our personnel, to make sure that they are trained and that we have enough resources. Architecture and engineering is the enablement of this program. It's like our bodies. We start by selecting the technology that supports the mission and requirements of this program. As we saw with so many vendors at this event, every vendor has advantages and disadvantages. We need to know what we want, with our organization-specific requirements listed up front and their severities clearly stated, and go through a proper evaluation process.

Grill the vendor. Have them tell us exactly what they can deliver. A lot of the time, what they say is, "I'm going to provide you a unified data collection platform that enables consumption for different use cases."

Well, ask them: what kind of data are they talking about? How does it map to detection coverage? Does that meet your needs? What does their observability stack look like? Even though they are SaaS, log collection issues still happen, and we have to go through their support cases to deal with all those problems. It is time-consuming. In the selection process, we need to ask really hard questions in order to pick the right one for our own organization. We need to design the pipeline and choose the logging agent so that the agent does not consume too many resources on the endpoint and the pipeline can scale and digest all that volume. We need to observe our own stack.

We are also a service provider for our internal teams, right? When there is a log ingestion problem, we need to be able to tell exactly what is going on. Data normalization: with different log types, we need to normalize the data so that users can run one query and get all the data together to reconstruct the story of what is going on. Log data is very important. We need to enforce the need-to-know principle and least privilege.

Think of your tiered SOC analysts, or some logs you might want to share with your IT teams, right? They say, "I want to monitor the traffic flows for my application." So you do not want to share the rest, only the data that they need. Log data inventory.

This is visibility into the program itself. Know what you own, know what you have, and know what you don't have, so that you know where your collection gaps are and can make them as small as possible. Alerts, incidents, and response are the execution, the value realization of all the effort done before. Own your detection library. And take input from MITRE, and from the gaps found in your threat hunting and attack simulations, to continuously improve the coverage of your detection library.

Tuning: tune the alerts to remove the burden on your analysts. Consider how to help the team respond to alerts more efficiently, and any automation that can help them.

Most of the time, it is not automation. It is really about putting the right runbook in place and having the proper education. After you have run the program for a while, you need to support digital transformation. According to a survey, 72% of organizations are defaulting to cloud technologies. Let's look at public cloud logging and monitoring. AWS, Azure, GCP: those are familiar names in the market.

They hold two-thirds of the public cloud market share as of today. As businesses shift sensitive data and important workloads to the cloud, that also brings public cloud threats, with the top ones listed here: data leakage, financial theft, and denial of service. What are the main reasons that make those threats possible? The number one reason is cloud misconfiguration, such as excessive IAM permissions, unrestricted inbound and outbound ports, and so on. So how do we prevent that? There are two effective preventive countermeasures. The first one is infrastructure as code.

It codifies the infrastructure in a repeatable and standardized way. It can also incorporate security policy to make sure that cloud resources are provisioned securely before they get deployed. Secondly, scans.

Scan your cloud assets for vulnerabilities. There are many tools on the market to help you do the report, the nightly scan, to know where your vulnerabilities are. The second biggest reason is the lack of real-time cloud threat detection and response. On the misconfiguration side, one countermeasure is preventive, and the other is maybe batch scanning, right? It does not really tell you exactly what is going on in the environment in real time. That is where cloud threat detection and response comes into play.

If we think about SIEM and use the concept of a distributed SIEM, this would be what we call a cloud SIEM. It covers our cloud exposure. When we decide which product to use, we need to make sure that it is cloud-agnostic and supports multiple clouds, and that it can integrate its analytics into our full SIEM and SOAR to reuse the existing response and correlation flows.

It does not really matter which product you use. Fundamentally, they all use the logs generated in the public cloud environment to enable those analytics.

So let's take a look at it. Cloud resources are organized in a hierarchical structure. The audit logs or activity logs are generated at different levels. Vertically speaking, that can be at the org level, the cloud-space level, or the individual resource level. Horizontally speaking, when you build an application in the cloud, it uses multiple cloud components. You have a load balancer, you have a database, you have a Kubernetes environment, and you may want to consume data from another project or subscription.

So in order to get full visibility into an application, inside and out, it requires a combination of different levels. From a log type perspective, there are two high-level categories. One is write, meaning any change happening in your cloud environment. The other is read, pretty straightforward. Here, I list the mapping of the different log types across the three cloud providers.

The first column, finally, it works. The first column contains the write logs. Keep in mind, as I mentioned, that not all logs are enabled by default. Most of them are not. If you want to gain visibility into different logs, you'll need to go and read the documentation of your specific cloud provider, and then do the proper configuration for log generation. In this presentation, I'm using CloudTrail Events from AWS as an example.

CloudTrail Events include the management-plane activities happening in your AWS environment. They track the activities performed by users through the API or SDK. This is enabled by default, so you do not need to worry about it. It is roughly the same concept in the other public clouds as well.

The admin activity logs are enabled by default. CloudTrail can also capture data-plane operations if that is enabled explicitly. What can we detect by collecting those? I've listed a few detection use cases here, but there are more. The top part is related to the data: data exfiltration, or denial of service due to being unable to decrypt the data. The middle part relates to identity-based detections: suspicious logins, suspicious operations from different countries, and so on.

The last piece is really what we are talking about today. As I mentioned, without logs, the tools are not going to function. So adversaries are going to seek ways to disable logging in order to blind our visibility. There are multiple ways to export this data, whether to your cloud SIEM or your central SIEM. The most common one is that the logs are routed to an S3 bucket, and you set up SQS to trigger the connector to pull from the S3 bucket and then get the data ingested into your pipeline.
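A rough sketch of the connector side of that S3 and SQS flow, combined with the logging-tamper use case, might look like the following. It assumes the SQS message body is a standard S3 event notification and that the CloudTrail log file has already been downloaded and decompressed; the actual AWS calls are left out. The choice of which event names to flag is an assumption for illustration, and some of them (such as `UpdateTrail`) can be legitimate changes that still deserve review.

```python
import json
from urllib.parse import unquote_plus

# CloudTrail management events that stop or reduce logging (illustrative list).
TAMPER_EVENTS = {"StopLogging", "DeleteTrail", "UpdateTrail", "PutEventSelectors"}

def objects_from_sqs_body(body):
    """Return (bucket, key) pairs from an S3 event notification received via SQS."""
    notification = json.loads(body)
    pairs = []
    for record in notification.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        key = s3.get("object", {}).get("key")
        if bucket and key:
            pairs.append((bucket, unquote_plus(key)))  # keys arrive URL-encoded
    return pairs

def find_logging_tamper(cloudtrail_text):
    """Flag events in a CloudTrail log file that change or stop trail logging."""
    findings = []
    for event in json.loads(cloudtrail_text).get("Records", []):
        if (event.get("eventSource") == "cloudtrail.amazonaws.com"
                and event.get("eventName") in TAMPER_EVENTS):
            findings.append({
                "event": event["eventName"],
                "actor": event.get("userIdentity", {}).get("arn", "unknown"),
                "source_ip": event.get("sourceIPAddress", "unknown"),
            })
    return findings
```

In a real connector, each `(bucket, key)` pair would be fetched from S3 before being handed to the detection step.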

This mechanism can work with several other log types from the cloud provider as well. Okay. After we have run this program even longer, we need to do technology rationalization: look at what is on the market for log management and SIEM products, and keep up to date with what our business needs. In simple words, we always want to spend the least money but generate the most value. Here, bigger and more apples.

How do we do that? The ideal state, and I'm going back to the overview slide, is that we only need to work through the technology selection process to choose a new technology. The fundamentals, the processes that we built at the beginning of the program, remain. What are the ideas that enable this? First of all, have a universal, centralized logging pipeline so that you do not need to touch each individual log generator. Rather, you use the centrally controlled pipeline to switch from the legacy system to the modern system. For example, use a Kafka service, where you can queue the data within your environment and have it consumed by different destinations.
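The queue-and-fan-out idea can be sketched with an in-memory stand-in. This is not the Kafka API; the `Topic` class and the consumer names are hypothetical and only illustrate why a central queue lets a legacy and a modern destination read the same events independently during a migration.

```python
class Topic:
    """In-memory stand-in for a queue topic (illustrative, not the Kafka API)."""

    def __init__(self):
        self._log = []       # append-only list of events
        self._offsets = {}   # consumer name -> index of next event to read

    def publish(self, event):
        self._log.append(event)

    def subscribe(self, consumer):
        # Each consumer tracks its own position, starting from the beginning.
        self._offsets.setdefault(consumer, 0)

    def poll(self, consumer):
        """Return all events this consumer has not read yet."""
        start = self._offsets[consumer]
        batch = self._log[start:]
        self._offsets[consumer] = len(self._log)
        return batch


topic = Topic()
topic.subscribe("legacy_siem")
topic.publish({"id": 1})
topic.subscribe("modern_siem")   # added later, during the migration
topic.publish({"id": 2})

print(topic.poll("legacy_siem"))
print(topic.poll("modern_siem"))
```

Because each consumer keeps its own offset, adding the new destination does not require any change on the log generators.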

Secondly, adopt the everything-as-code principle. As I mentioned, we are also an internal service provider. We also follow DevOps principles and adopt secure by design, all those kinds of principles. Infrastructure as code, as I mentioned, really helps us reuse infrastructure and easily spin up what is needed for the new technology. Service as code: for example, you have a set of dashboards you are building for your metrics team.

Those kinds of elements can be codified so that they can easily be translated into new queries in your modern technology. Configuration as code, same concept. Try to modularize your changes and make sure that you can parameterize your environment to carry what you need over to your new technology.

Detection as code is very interesting. You may have heard of detection engineering, automated detection engineering processes, integrating with robust tools, and so on and so forth. The main difference between the legacy and the modern technology is really that the query language changes.
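To illustrate why a vendor-neutral rule definition helps when the query language changes, here is a small sketch. The rule structure is only loosely inspired by formats such as Sigma, and both output dialects are made up for the example; they are not the syntax of any real product.

```python
# A detection kept as data, independent of any query language (hypothetical schema).
RULE = {
    "title": "CloudTrail logging stopped",
    "mitre": ["T1562.008"],  # Impair Defenses: Disable or Modify Cloud Logs
    "match": {
        "eventSource": "cloudtrail.amazonaws.com",
        "eventName": "StopLogging",
    },
}

def to_legacy_query(rule):
    """Render the rule in a made-up 'legacy' dialect: field="value" pairs."""
    return " ".join(f'{k}="{v}"' for k, v in sorted(rule["match"].items()))

def to_modern_query(rule):
    """Render the same rule in a made-up 'modern' dialect joined with AND."""
    return " AND ".join(f"{k} == '{v}'" for k, v in sorted(rule["match"].items()))

print(to_legacy_query(RULE))
print(to_modern_query(RULE))
```

The detection logic and its framework mapping live in one place, and only the small rendering function has to be rewritten for a new platform.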

Detection as code can use Sigma rules, for example. You can build your detection library and have all the mappings to MITRE or to the NIST framework in place already, so that you have a code base that can guide you in translating easily into the languages the modern technology requires, right? Does that make sense so far? Okay. However, when it comes to data migration, it becomes trickier. I'm not talking about migrating a piece of data via an API. We are talking about legacy systems where, let's imagine, we have been indexing 100 terabytes per day for one year.

How can we migrate that data to the new technology? It is very difficult. We have a few options. Either we have to crack our heads and spend all the effort working with both vendors, or with our own engineering team, to migrate data from one to the other. Or the second option. What do we call the second option? Federated search. Is it possible to search from the new technology into the existing data stores living in the legacy environment? Think about it, right? So we have one search layer, but we can search multiple data stores.
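The "one search layer, multiple data stores" idea can be sketched as follows. Both stores here are in-memory lists and the backends do a simple substring match; a real federated search would also have to translate queries per backend and handle latency and cost, which this example does not attempt. All names are hypothetical.

```python
import heapq

LEGACY_STORE = [
    {"ts": 1, "msg": "login failed user=alice"},
    {"ts": 4, "msg": "login ok user=alice"},
]
MODERN_STORE = [
    {"ts": 2, "msg": "password reset user=alice"},
    {"ts": 3, "msg": "login ok user=bob"},
]

def make_backend(store, limit):
    """Wrap a data store as a search function with a per-backend read limit."""
    def search(term):
        hits = [e for e in store if term in e["msg"]]
        return sorted(hits, key=lambda e: e["ts"])[:limit]
    return search

def federated_search(term, backends):
    """Query every backend and merge the results into one timeline."""
    per_backend = [
        [dict(event, source=name) for event in search(term)]
        for name, search in backends.items()
    ]
    # Each backend returns results sorted by timestamp, so a merge is enough.
    return list(heapq.merge(*per_backend, key=lambda e: e["ts"]))

backends = {
    "legacy": make_backend(LEGACY_STORE, limit=100),
    "modern": make_backend(MODERN_STORE, limit=100),
}
for hit in federated_search("alice", backends):
    print(hit)
```

The analyst sees one ordered timeline, with a `source` field showing which store each event came from.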

This concept is not new, but I have not seen much maturity on the vendor side in supporting customers to do this federated search. There are many, many considerations here. For example, how to convert the query, how to manage read limits, how to manage egress and ingress charges, how to manage latency, and so on and so forth. But some vendors are looking into this space, so we will see how they can help. In most cases, what I see is really: keep legacy.

Keep the data in the legacy systems until the data is aged out. Okay. As we heard in the keynote on Monday, one of the themes of this conference is burnout, which in the SOC area translates to alert fatigue.

According to a study from Trend Micro, 70% of SOC teams are emotionally overwhelmed by security alert volume. And this negatively impacts both their professional and personal lives. The consequence for the company is that true signals are missed and the required actions cannot be taken in time to stop breaches. How many of you are seeing the same problem? So how can we help? Let's use the three Ps: people, process, and product.

Start with people and training. With all the technologies that we have, the new security sensors, EDRs, XDRs, MDRs, all those kinds of new technology emerging in this space, and now we are talking about AI. So we need to help both our analyst teams and our engineering teams be equipped with the knowledge to respond to those alerts more efficiently. One thing I see is that we always think of SOC analysts as operational or tiered.

They have the security background, which is great, but what I've seen lacking is the business logic that lets them know, for the alert they are responding to, what the impact to the business is. Architecturally, what is the data flow, and how does the activity traverse the different components of the business application's architecture? So for people training in this space, it is not only the security aspect but also the business context, right, that we want to equip our people to understand. Secondly, the tuning approach: layered tuning. I talked about the logging pipeline, but we can reuse the concept again and again. Here, we can talk about an alerting pipeline, right? We have these cyber sensors generating security events. Those get ingested into the central SIEM and get correlated, they get stitched together, and they bubble up as a final alert or final incident for an analyst to respond to, right? So throughout this alerting pipeline, there are multiple places where tuning can be done at different components, to help tune out the vulnerability scanners, the IP ranges that are not relevant, and so on. The third is really about severity: not all alerts come with the same severity.

And people can only respond to so many high and critical alerts in a given day, right? How do we help them quantify the problem with a severity formula so that they can prioritize their time? We talk about enrichment. We have been talking about it for years, but how does it work in reality, right? The asset criticality, the user risk score, the threat intel information: each gives us a certain piece of information. How do we use all of it to help justify the severity properly? We need a formula.
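As a purely illustrative sketch of what such a severity formula could look like, the following combines the enrichment inputs mentioned above into one score. The weights, thresholds, and function names are assumptions invented for this example, not the formula used by the speaker's team.

```python
# Hypothetical weights (they sum to 100) for each enrichment input.
WEIGHTS = {"base": 40, "asset": 30, "user": 20, "intel": 10}

def severity_score(base_severity, asset_criticality, user_risk, intel_match):
    """Combine inputs normalized to the range 0..1 into a 0..100 score."""
    inputs = {
        "base": base_severity,       # severity reported by the sensor
        "asset": asset_criticality,  # how critical the affected asset is
        "user": user_risk,           # risk score of the user involved
        "intel": intel_match,        # strength of any threat intel match
    }
    for name, value in inputs.items():
        if not 0 <= value <= 1:
            raise ValueError(f"{name} must be between 0 and 1")
    return round(sum(WEIGHTS[name] * value for name, value in inputs.items()), 1)

def severity_label(score):
    """Map a score to a label; thresholds are arbitrary for this example."""
    if score >= 80:
        return "critical"
    if score >= 60:
        return "high"
    if score >= 30:
        return "medium"
    return "low"

score = severity_score(0.5, 1.0, 0.5, 0.0)
print(score, severity_label(score))
```

A medium-severity sensor alert on a highly critical asset ends up ranked above the same alert on a low-value asset, which is the kind of prioritization the formula is meant to support.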

We've worked out a truly quantitative formula that looks at all those aspects and gives our analysts a meaningful way to focus on the high-fidelity, high-severity alerts. Automation: automate as much as possible. Automation is easy to say, but it is not easy to implement.

From a use case perspective and a response action perspective, look at the data. Again, it still goes back to the data. Give the automation a period of time to run to make sure it catches all the scenarios before it is put into production. Measure and monitor false positive ratios so you know that you are heading in the right direction.
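Measuring the false positive ratio can be as simple as the sketch below. The disposition labels (`true_positive`, `false_positive`) and the choice to count only closed alerts are assumptions for the example.

```python
from collections import Counter

def false_positive_ratio(dispositions):
    """Share of closed alerts that were false positives (0.0 if none are closed)."""
    counts = Counter(dispositions)
    closed = counts["true_positive"] + counts["false_positive"]
    return counts["false_positive"] / closed if closed else 0.0

week_1 = ["false_positive", "true_positive", "true_positive", "false_positive"]
week_2 = ["true_positive", "true_positive", "true_positive", "false_positive"]

# A ratio that falls from one period to the next suggests tuning is working.
print(false_positive_ratio(week_1), false_positive_ratio(week_2))
```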

Also, you know, provide feedback to supervised machine learning models. A lot of cyber sensors, for example EDR, NDR, all those kinds of technologies, all those vendors are telling us, "Hey, we use machine learning," and so on and so forth, but what we get from them also has a lot of false positives, right? How do we establish a feedback loop to tell them, "Hey, there are certain things that are not detected correctly," right? Helping tune the model for us will be essential. The feedback is not about the reasoning for why something is benign; it is just the status and field information, so that we do not give away too much. We do not give our context information to the vendors.

What is next? So thank you for being with me so far. Data volume and visibility goals need to go hand in hand. Without data, visibility goals can't be achieved, but given the high volume of data, we need to balance ourselves in order to focus on high-risk assets and on the objectives we want to achieve. Also, interoperate and integrate all those data pillars for enhanced visibility.

Combine monitoring and observability. Observability is not a term used in security monitoring, but a lot of practices in this area, as I mentioned, can also be seen as observability: really proactively knowing what's going on in our environment. And automate, to prevent human errors and gain operational efficiency. That said, probably not everything can be automated. Okay, so here's the summary slide on how to apply this.

So next week, understand your org-specific visibility goals and take a top-down approach to map them to each individual area for implementation. Assess the current visibility status of your areas. In the next three months, put a roadmap in place for how to achieve it, from a technology perspective as well as a process and implementation perspective. And then within six months, try to automate what you can automate and reduce noise.

Do the tuning diligently so that it helps the SOC team and also reveals true visibility for your environment. (attendee coughing) I think I'm actually through. Thank you for your time. I'm open to answering any questions. (audience applauding) Thank you.

2024-06-14 20:06