Information Access in the Era of Large Pretrained Neural Models

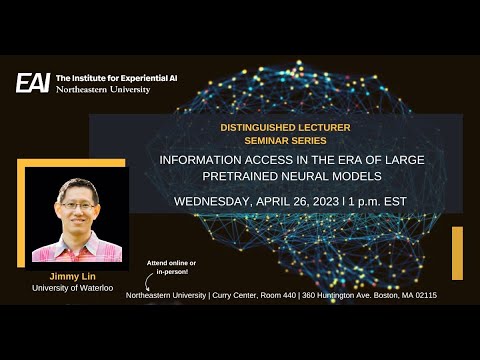

The Institute for Experiential AI is proud to invite you to our spring seminar series, featuring leading AI experts and researchers sharing cutting-edge ideas. You can expect AI leaders from a broad range of industries sharing perspectives on defining and applying ethical AI in our Distinguished Lecturer series, and EAI faculty and Northeastern University researchers discussing their AI research in our Expeditions in Experiential AI series. Up next, join us with Jimmy Lin, professor of computer science at the University of Waterloo, for his seminar "Information Access in the Era of Large Pretrained Neural Models" on Wednesday, April 26, 2023, at 1 pm Eastern, online or in person at the Curry Center, room 440, at Northeastern University. Registration is required and the seminars are free to attend. We hope you'll join us for an invigorating spring semester.

Hey, so my name is Ken Church, and I'm here to welcome Jimmy Lin. It's my great pleasure to have Jimmy Lin here. Too many things going on. Jimmy and I go way back. I remember when I was at Hopkins and you were at Maryland, you asked me to give a guest talk in one of your classes. Do you remember the first question that was asked? I'll never forget this question.

I actually don't.

I'm sure you wouldn't. So the student wanted to know whether anything I was going to say was going to be on the final. And what did I say?

I think you said it wasn't.

Oh, and then I lost the audience.

Yeah, they just went to sleep afterwards.

Anyway, so I think none of this is going to be on the final, so please take it away.

Okay, so that's permission for you all to zone out, I guess. All right, so let me start at the top. What is the problem that we're trying to solve? And by "we" I mean myself, my research group, and the research community that I'm part of. It's rather simple: we want to figure out how to connect users with relevant information. Now, as it turns out, this is a relatively modest endeavor in the
sense that I really don't care that much about building AGIs, artificial general intelligences, whatever those are. I'm not particularly interested in unraveling the mysteries of consciousness or how babies learn from limited information. I want to tackle pretty simple tasks: you've got users, you've got information they need, how do we connect them?

In terms of academic silos, this is generally referred to as the information retrieval community, dealing with search, but it also encompasses things like question answering, summarization, recommendation, and a lot of other capabilities. So broadly, I like to use the term information access to refer to this bundle of capabilities. Ideally we want to do it over the myriad types of information that are available (text, images, video), and ideally we'd like to do it for everybody, ranging from everyday users to domain experts such as medical doctors, lawyers, and the like. However, for the purposes of this talk, I'm going to be mostly focused on text and everyday searchers.

All right, so let me deal with the elephant in the room. The elephant in the room, of course, is ChatGPT, large language models, and all the noise that we hear coming from The Atlantic, The New York Times, and the we're-all-gonna-die crowd. Over the past several months I've had to play therapist to many of my graduate students experiencing this sort of existential angst about what's going to happen, and I'm going to share some of my thoughts on those topics with you right here also.

The TL;DR is: none of this is fundamentally new. However, we have more powerful tools to deal with all the problems that we've wanted to solve before, and it's a great and exciting time for research. So it's not a time to panic; it's a time to look forward to a bright future. The bottom line is we should keep calm and carry on. All right, so that really is a
message I've delivered. And since this is not on the final, feel free to file out of the room and I will see you all for a coffee later or something like that. But since you're here, I'll continue with the talk. Let me try to offer a little bit more context and to justify and back up this top-level message.

I'll start with the question of where we are and how we got here. Here's where we are: this is the new Bing search engine, codenamed Sydney, that was revealed a couple of months ago, and it is search as chat. This is the example that Microsoft gave in its blog to launch the whole enterprise. Here's somebody asking about a trip for an anniversary in September: what are some places the search engine can recommend that are within a three-hour flight from Heathrow? And the search engine gives quite a remarkable result. It says, here's a summary: if you like beaches and sunshine, consider this; if you like mountains and lakes, consider this; and if you like art and history, consider that. It's able to pull a lot of results from the web and synthesize everything together, complete with citations. Wow, amazing, this is the future of search, right? Okay, I'll get into that, but let's start with the history lesson. This is where we are now; let's talk a little bit about how we got here.

Before Bing, the dominant mode of search was this. Well, it was Google. This is actually from google.stanford.edu, circa 1998 or so. Prior to search as chat à la Bing, we had search as ten blue links: you type in a few keywords, you get back a list of results, and you had to sort through it to figure out what was going on.

Unwinding the clock a little bit before that: the time of Google was the time when there was digitized information on the web that you could access. Before that was a time when there were digital materials available but
they were not available from the web. Most of you are probably too young to realize this, but in the 90s there was a period in which we got CD-ROMs, put them into our machines, and booted up something like the Encarta encyclopedia, because Wikipedia didn't exist, and that's how we got information.

Going back even further, before information was widely digitized, there were books, and we were at that stage for several hundred years. Libraries date back several more centuries before this. This is an image from Trinity College Dublin, circa the 18th century. It may look familiar because, if you're fans of Star Wars, this is where they got the inspiration for the Jedi Archives.

If you unwind the clock back even more, you arrive at what I claim to be the first information retrieval problem. This is a cuneiform tablet from the Babylonian period, circa 700 BCE, and it essentially is a record, paraphrasing of course, of "Jack owes Matt two sheep." There were accounting records of debts, and they put them in the storehouse somewhere, in the granary somewhere, and then sometime later, when they had to settle the debts, they had to go and pull out these records: the first information retrieval problem, the first search problem. So we've been at this for a long time now.

The next thing I'm going to do, to at least bring some credibility to the things I'm going to tell you about, is tell you where I fit in this grand scheme of things. I've been working on this problem for a while, and a lot of the things I'm about to share I've experienced firsthand. So where do I enter the picture? Somewhere between here and here: slightly before this and slightly after this. My own journey began in 1997, when I began studies at MIT across the river. This is an image of 545 Tech Square. This building doesn't exist anymore. It used to be home of the MIT AI Lab.
This is the building right there. Tech Square does not look like that anymore. My former advisor's claim to fame was developing the first question answering system for the web, and I remember I was absolutely floored by this. Remember, this is the era of Encarta and the beginning of Google. There was a system where you could ask a question in natural language, like "Who wrote the music for Next Stop Wonderland?", and get back the answer. Or, staying with the entertainment theme, you could ask "Who directed Gone with the Wind?" and you would get back the answer, without getting a bunch of links that you then had to sort through. This was amazing and it blew my mind, and so I've been working on these types of systems and capabilities since around that time.

One of my proudest early accomplishments was the following. IJCAI is an annual international conference on artificial intelligence. In 2001, over 20 years ago, it happened to be in Seattle, where Bill Gates, a very much younger Bill Gates, was a keynote speaker, and one of the things he talked about was AskMSR, an automated question answering system using information on the World Wide Web. That was my internship project. I thought that was the coolest thing. So this explains my place in this long narrative of information retrieval capabilities.

I guess this is all a slightly roundabout way of saying the technologies have changed but the major problem that we're trying to tackle hasn't: it's still the problem of connecting users with relevant information.

Okay, so let's get into a little bit more detail. We being computer scientists, we like to think in abstractions, and so that's the perspective from which I'll walk you through how these capabilities developed and came to be. At the end of the day, what we want to build is a black box that looks something like this: in comes a bunch of
documents, and here I'm leaving aside what's generally known as the content acquisition phase. You've got to get the content from somewhere: crawl the web, do data cleaning, and a whole bunch of data preparation steps. Let's set that aside for now. So you get a bunch of documents, you shove them into this black box, and sometime later, on the other end, you put in a bunch of queries and you get out some results. Lo and behold, they're amazing, a light bulb goes off, and you're happy.

So how do you build this system? As it turns out, we've known how to build systems like this for many, many years, for decades in fact. This is the vector space model for doing search, which some of you are already familiar with. It was proposed in 1975, although some of the ideas date back even further. If you zoom in on some of the figures in that paper, they will look familiar. Here's an example: the vector space with query and document vectors. If you pull up the text, what does it say? It says you're going to represent documents by t-dimensional vectors, where each component represents the weight of the j-th term. And then what are you going to do? You're going to use the inner product to compute query-document similarities, and you're going to do search as a result. And that was in 1975.
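
As a minimal sketch of the 1975 recipe described above (documents as t-dimensional term-weight vectors, inner product as query-document similarity), the following Python toy may help. The raw term-frequency weights, the whitespace tokenizer, the function names, and the three toy documents are all assumptions made for illustration; none of them come from the talk or the paper.

```python
from collections import Counter


def build_vocab(docs):
    """Assign each distinct term a dimension index j (hypothetical helper)."""
    terms = sorted({term for doc in docs for term in doc.lower().split()})
    return {term: j for j, term in enumerate(terms)}


def to_vector(text, vocab):
    """Return a t-dimensional vector whose j-th component is the weight of term j.

    Assumption: the weight is the raw term frequency; real systems use
    more refined term weighting.
    """
    counts = Counter(text.lower().split())
    vec = [0.0] * len(vocab)
    for term, count in counts.items():
        if term in vocab:  # query terms outside the vocabulary are ignored
            vec[vocab[term]] = float(count)
    return vec


def inner_product(u, v):
    """Query-document similarity as a plain inner product."""
    return sum(a * b for a, b in zip(u, v))


def search(query, docs, k=2):
    """Score every document against the query and return the top k as (doc_id, score)."""
    vocab = build_vocab(docs)
    doc_vecs = [to_vector(doc, vocab) for doc in docs]
    query_vec = to_vector(query, vocab)
    scored = [(inner_product(query_vec, dv), i) for i, dv in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by id
    return [(i, score) for score, i in scored[:k]]


# Made-up toy collection, for illustration only.
docs = [
    "the manhattan project built the atomic bomb",
    "libraries hold books",
    "atomic clocks keep time",
]
print(search("atomic bomb", docs))  # [(0, 2.0), (2, 1.0)]
```

This brute-force version scores every document; a production system would use an inverted index so that only documents sharing a term with the query are touched.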
So we've had some idea how to do this reasonably well for quite a while. A first cut at this black box would be something like a vector space model. Let me go into a little bit more detail on what that actually entails.

You have the documents that come in, you run them through some type of term weighting scheme, and you get back out a bunch of vectors. Here's an example passage. This passage is about the Manhattan Project and the efforts to build the atomic bomb during World War II, and from it you would get a bag-of-words representation that looks something like this; I've just written it in JSON format. You get all the terms, and then you get weights assigned to them via the term weighting function. You take all these document vectors, you shove them into the inverted index, and then you're happy for a while until the queries come in. The queries get represented as multi-hot vectors, also in this vector space, you perform top-k retrieval, you get out the top k results, and then you're happy.

So the TL;DR is, and of course I'm being incredibly unfair, that research in information retrieval from the 70s to, let's say, the 90s was mostly about how to assign term weights, and at the end of the day people just said, fine, BM25 is what the entire community converged on. Here's the formula for BM25. It's not important, except to recognize that the term weights are a function of things like term frequencies, document frequencies, and document lengths: statistical characteristics of the terms and the documents they occur in. So that's a high-level overview of what's going on in this black box.

Okay, let's hop in our time machine and skip ahead a few years. I realized after doing my slides that many of you are probably now too young to get what this
means. This is the DeLorean from Back to the Future, the time machine of a classic movie, but these days when I show this I just get blank stares. So anyway, imagine a time machine. We're hopping from the 70s to not quite the modern day, because this is February 2023, but to just before that, the previous major innovation. And what was that? That was BERT. BERT popped onto the scene circa 2018.

So what's BERT? Well, BERT is Google's magic pretrained Transformer model. When it came out, the authors said it could do a variety of things: it does classification, it does regression, it does named entity recognition, it does question answering, it does your homework, and of course it walks the dog also. But what does it actually do? Google wrote a blog post, which came out in October of 2019, that tries to sketch out, at least at a high level, how they applied BERT to search. Of course, not to be outdone, Bing came back the next month and said, oh yeah, we're using some of the same models too, and it led to all sorts of increases in how effective Bing was. So yay BERT, yay Transformer models.

But what's actually going on? Let me share some thoughts with you on that. Instead of having a single black box, the architecture we as a community basically settled on is a two-stage architecture, where in the first stage you select some candidate texts and in the next stage you try to understand the texts, and I put "understand" in quotes here. What that really means is that in the first stage you use BM25, maybe not literally, but something like BM25, and in the second stage you take the results from keyword search and do some type of reranking on them.

What does that mean? Let me talk you through it. This is the use of BERT in a technique known as a cross-encoder. Here's the complicated architectural diagram of
the Transformer layers in BERT, but at a very, very high level, what BERT does is compute a function of two input sequences. If you read the original paper, it'll be sentence one and sentence two, and the output will be whether these two sentences are paraphrases of each other. Applied to information retrieval, you can put in a query and a candidate text instead of the sentences, and you can ask BERT to give you a probability of relevance, so you're essentially using it as a relevance classifier.

Reranking works something like this. You get the candidate texts from the first keyword retrieval stage, and here's BERT peeking in from the side. You iterate over each candidate document and say: hey, how relevant is candidate one? How relevant is candidate two? How relevant is candidate three? And so on and so forth. Based on the answers given to you by the BERT model, you do reranking and you get a better set of results.

The roots of this idea in fact go back many, many years. This is a journal article from 1989, and just homing in on the abstract, you see it's by an author from a country that no longer exists as an independent entity, just to show you how old it is. If you read the abstract, it talks about estimating the probability of relevance, P(R), given some feature representation of the documents. That's what I just told you about, except it was done with BERT. Today we'd call this pointwise learning to rank. Back then they were using relatively primitive features; today we use BERT to do the same task, and it's much more sophisticated, of course, but the underlying principles are the same.

BERT, of course, is a neural network, but neural networks have been applied to reranking in information retrieval for a long time also. This is one of the earlier papers; it probably dates back even further, but already in 1993 people were talking about using three-layer feed-forward neural
networks for estimating query-document relevance. So we've been doing this for a while, or at least we've had the aspiration to solve the problem in this specific way for a while now.

Okay, so BERT was the previous major innovation. How does it relate to this Bing thing? Well, the connection is quite clear: they both draw their intellectual ancestry from Transformers. This is the typical Transformer architecture diagram. You have the encoder, which reads an input and generates some latent semantic representations from it, which you then feed to a decoder that generates output. BERT and all these models I'm going to briefly talk about trace their intellectual lineage all the way back to this paper, "Attention Is All You Need," from 2017. The last time I checked, this paper had something like 70,000 citations.

So the Transformer architecture was proposed in 2017, and the way they trained the model was from randomly initialized weights. The next innovation after that was, of course, GPT. Now, this is the original GPT. Now we have GPT-4, before that we had GPT-3.5, before that we had GPT-3; this is the original GPT. They basically said: let's take the Transformer architecture and lop off the encoder part, so it only has the decoder, and then let's add pretraining using autoregressive language modeling on top. Right around the same year came BERT, and BERT was essentially the opposite. They said: encoder-decoder, too much; let's lop off the decoder part, work only on the encoder part, and pretrain it using masked language modeling as the objective. And then finally, in 2019, came the T5 model, which was the pretrained version of the entire encoder-decoder network. So there is a common lineage for all these models that we're hearing about today, dating back to the original Transformer architecture.

All right, so back to this two-step process of doing search, of doing information access. So you
select the candidate texts and then you rerank them somehow by trying to understand them, where I put "understand" in quotes, to get a better result. These days that's mostly done with Transformer-based architectures: encoder-only, decoder-only, or encoder-decoder.

Now, what about innovations up here, in selecting candidate texts? As I've already told you, this was done using something like BM25, a bag-of-words term weighting scheme. As a reminder, this is what it looks like: you feed it a document and you get back a feature vector that looks kind of like this. As it turns out, what you can do is simply rip out the BM25 weighting function and replace it with encoders that are based on some type of Transformer architecture. This works by feeding the Transformer a large number of query-document pairs and essentially asking it to do representation learning. You're telling it: when you see a query and a relevant document, I want their representations to be close together, and when you see a query and a non-relevant document, I want you to push them apart. You do that with neural networks, and you learn good representations for queries and documents.

But it's very much the same high-level design. Instead of a bag-of-words representation, you feed the model a piece of text and you get out a dense vector representation, typically something like 768 dimensions of real values, to pick a number, where putatively the dimensions of this vector represent some type of latent semantic meaning, but don't read too much into that. So if you hear the discussion about vector search, that's what people are talking about: it's nearest neighbor search over document vectors using a query vector. You have a bunch of document vectors that are encoded by a Transformer-based encoder, you have a query vector that comes in, and you're still doing top-k retrieval.
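
The two ideas just described, pulling a query toward its relevant document and away from non-relevant ones, and then doing nearest neighbor search over document vectors, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the hand-written 3-dimensional vectors stand in for the roughly 768-dimensional outputs of a trained Transformer encoder, the softmax cross-entropy form of the loss is one common choice that the talk does not specify, and the function names are hypothetical.

```python
import math


def dot(u, v):
    """Inner product, used as the similarity between two dense vectors."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(query, positive, negatives):
    """Negative log-probability of the relevant document under a softmax over scores.

    Assumed loss form: minimizing it raises the query's similarity to the
    relevant document and lowers its similarity to the non-relevant ones.
    """
    scores = [dot(query, positive)] + [dot(query, neg) for neg in negatives]
    largest = max(scores)  # subtract the max for numerical stability
    log_z = largest + math.log(sum(math.exp(s - largest) for s in scores))
    return log_z - scores[0]


def top_k(query, doc_vecs, k):
    """Brute-force nearest neighbor search: ids of the k highest-scoring documents."""
    ranked = sorted(range(len(doc_vecs)), key=lambda i: -dot(query, doc_vecs[i]))
    return ranked[:k]


# Made-up vectors standing in for encoder outputs.
query_vec = [1.0, 0.0, 0.5]
doc_vecs = [
    [0.9, 0.1, 0.4],  # close to the query
    [0.0, 1.0, 0.0],  # unrelated to the query
    [0.2, 0.1, 0.9],  # partially related
]

print(top_k(query_vec, doc_vecs, 2))  # [0, 2]
# The loss is lower when the truly close document is labeled as the relevant one.
print(contrastive_loss(query_vec, doc_vecs[0], [doc_vecs[1], doc_vecs[2]]))
print(contrastive_loss(query_vec, doc_vecs[1], [doc_vecs[0], doc_vecs[2]]))
```

In practice the exhaustive scan in `top_k` is replaced by an approximate nearest neighbor index, but the retrieval contract is the same: a query vector in, the top k document ids out.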
Transformers have been used in search since 2019. So even though you just heard about ChatGPT a few months ago, the same type of technology has been deployed at Google and at Bing for several years now.

The next question I want to address is: what really is the big advance of this over what came before, the mode of searching we know by the phrase "ten blue links"? I like to talk about abstractions, so let's go back to boxes and filling in boxes. At a high level, what is ChatGPT? It's a large language model. Fed into it are a pretraining corpus and some instructions: step one is autoregressive language modeling, and step two is instruction fine-tuning using reinforcement learning with human feedback. So you build the large language model, into it goes some type of prompt, and out comes some type of amazing response; a completion is what they call it.

So how does Bing work? Well, the new Bing search is using a large language model; we now know that it's using GPT-4 behind the scenes. In comes a query and out come amazing search results, like the example I showed you a few slides ago. But how does it actually work? It works by talking to a retrieval model. The query comes in, the language model does something, it sends a query to the retrieval model, the retrieval model does some searching and returns the results, and the language model post-processes them. I'll give you an example of exactly how that's done in a bit, but I want to open up this black box and ask the question: what's going on here? Well, in fact, I just spent the last 20 minutes telling you what's going on in that box. All the things I discussed, Transformers being used in search since 2019, all of that is now squeezed into this box. This is generally the approach known as retrieval augmentation. The large language model is not doing everything by itself;
it's calling out to a retrieval model that supplies it with the candidate texts, which it then post-processes in some way. Retrieval, in other words, forms the foundation of information access using large language models. You don't have to believe me; this is exactly how Bing works. If you go read their blog post (oh, the URL got chopped off), Bing's blog post describes exactly this type of architecture. They have an orchestrator on top, but it's essentially GPT talking to a retrieval model; in their case the retrieval model is the Bing search engine.

Bing calls this grounding, and I'll illustrate the effects of grounding using this example. Without grounding, without retrieval augmentation, you could just ask the large language model something like "Tell me how hydrogen and helium are different," and it'll give you something reasonable. If you want something better, what you could hand to the large language model is a prompt that looks something like this: "Given the following facts, tell me how hydrogen and helium are different," and you put in a bunch of facts about them. It will construct an answer that is much more to the point, because you've told the large language model: I want the answer, but I want it grounded on this. This is what retrieval augmentation provides.

Rolling it back now, and not talking about Bing and ChatGPT in particular, I'm going to spend the next few minutes talking about this interaction a little bit more: what are the roles of large language models and retrieval? To explain that, I have to make a confession, and the confession is that I lied. I lied mostly by omission. Yes, I hallucinated also. What is the problem that we're trying to solve? In the first slide I stated that I want to solve the problem of how to connect users with relevant information, but what I forgot to add is why we are doing it. Why? That's the critical
omission. Some of our colleagues have expounded on this at length. Nick Belkin, a senior information retrieval expert, would say something like: we want to connect users with relevant information to support people in the achievement of the goal or task that led them to want that information in the first place. Some people would say we want to connect users with relevant information in order to address an information need. I think that's accurate, but it's kind of circular: you want information because you have an information need. It fits, but it's not ideal. Some people would say we want to connect users with relevant information to support the completion of a task. That's actually very practical and accurate, although it's not particularly aspirational: we want to connect users with information to do a task. What I really like is what my colleague Justin Zobel says: we want to connect users with relevant information to aid in cognition. Now that's something aspirational that we can all latch onto.

Now you say, cognition, seriously? Isn't that too grandiose? Absolutely not. If you look up the dictionary definition of cognition, here's what it says (this is from Merriam-Webster, I believe): it's the mental action or process of acquiring knowledge and understanding through thought, experience, and the senses. That exactly explains what we're trying to do.

So, looking at the broad picture, I focused on this black box, but in fact I've drawn it too big. This is the cognition process, and the box should actually be cut down to size, because the process by which you get results exists within a broader set of activities of which it is just a small piece. Let me walk you through some of them. For example, the query has to come from somewhere. The query comes from somebody with an information need, so the information need has to go from some abstract information itch that I want to
scratch into something that I actually type on a computer keyboard or speak into my device. We haven't actually invented mind-reading computers yet, not yet, so we still have to go through that query reformulation process. And how often is it that you get the results and you're like, oh, that's it, I'm done, I got exactly what I needed? No, that doesn't happen. So there's this interactive retrieval process where you read the results and you're like, ah, that's not quite right, I don't want that; oh, here's an interesting keyword, let me put it back in the search. There's this interaction loop that goes on.

When you're doing this, you're doing it in the context of some task you're trying to accomplish. This once again got cut off in the slides, but this is a task tree diagram from a recent paper by Chirag Shah et al., and it's trying to show that the things we're trying to accomplish can be hierarchically decomposed. For example, you're trying to plan a vacation. What are the subtasks of that? Well, you've got to figure out where to go first, you've got to research each destination, then you've got to book the flights and the hotels and the activities, and so on and so forth. It can be decomposed into a tree-like structure, and each one of these subtasks might be on a different device. I may start on the browser, then come up with a brilliant idea and ask some questions on my phone, and then move back to the computer for some other types of tasks. And this may be temporally distributed over days, if not weeks.

And of course there's synthesis. You have multiple queries; you've got to look at the results, pull in the relevant portions, and synthesize them together. All of this needs to go round and round in an iterative manner before you finally get to task completion and have that light bulb go off in your head. Previously, we've been focused mostly on this. Why?
Because it's the part of this whole broader process that we can make headway on as computer scientists. But the cool thing is that now, with large language models, we have the tools to tackle the other parts around the core retrieval black box. And in fact this is where the Bing interface is trying to go.

Let me talk about some specifics. Before we had large language models, in the Google search engine ten-blue-links view of search, you had to come up with query terms yourself. You had to look at the results and say, hmm, that's a good query term, I'll plug that in; oh, that's a bad term, I'll throw that out; and I'll iterate with another query. With large language models you get much more natural interactions through natural language. So that's one key way in which LLMs may have improved the process.

Here's another one. Before, you had multiple queries and multiple results, and, you all know this one, each one appears in a browser tab, and you have a browser window that has way too many tabs, and you're going through the tabs trying to synthesize the results. Now you get some level of automated synthesis that's aided by the large language model.

Let's talk about this one. Before, you had to manually keep track of subtasks. To plan a vacation, you had to do some preliminary research, dive into each one of the options, and finally make the reservations. With LLMs, they'll try to guide you through the process in a helpful way, and that alleviates a lot of the burden of essentially navigating this task tree. So this is where Bing wants to go.

But of course none of this is fundamentally new. Let me just give you some examples. Here's a paper from the journal Computational Linguistics in 1998, 25 years ago. It's about generating natural language summaries from multiple online sources, by Drago Radev and Kathy McKeown. Here's another work. I
don't need to tell you the date because it's already up there, and this is from over 15 years ago. It's the task overview from one of these community-wide benchmark evaluations, and if you look at one of the paragraphs, I've blown it up. What is the task? The task is answering a complex question: synthesizing a well-organized, fluent answer from a set of 25 to 50 documents. It has to be more than just stating a name, date, or quantity, and summaries are evaluated for both content and readability. Fifteen years ago we were already trying to do this.

And even the idea, or the metaphor, of information retrieval as chat goes back even further; it goes back decades. This was a time when you couldn't use the search system yourself. You had to go to a library and talk to a librarian, who did these mediated interactions with you. They conducted with you what's known as a reference interview: they asked you questions about the stuff you were trying to find, and they were the ones who accessed the online databases behind the desk. So Bing is getting its metaphor from something that is decades old.

So, coming back to this: none of this is fundamentally new, but the key point is that these large language models allow us to do everything better. Before, these goals and capabilities were more aspirational. Today, with large language models as a tool, we actually have the capabilities to execute on this vision, and that's why I think it's really, really exciting.

Let me give you an example. Back in 1998, when Radev and McKeown were working on multi-document summarization, at a high level it worked because they did a lot of manual knowledge engineering. The system had a lot of templates, essentially ontologies, that were manually engineered. This system was designed to summarize a specific series of events related to terrorist acts and the like, and it was only with the
aid of these knowledge templates that we were able to make headway on the problem right so as soon as you fed in personal interest stories or something like that and wanted the systems to summarize those it basically fell apart okay so today we don't need this knowledge engineering right LLMs allow us to execute on the idea but do it in a much better way okay so the message I'm trying to convey is that before we were focused primarily on this box because that was the part of this broader information ecosystem that we could most easily make progress on but with LLMs as a tool we can now tackle the entire problem okay let me start to wrap up by giving you a few examples okay so here is an example from Bing search not doing so well all right so this was on a tweet thread that I pulled off Twitter a few months ago all right so the question that it's trying to answer is recommend me some phones with a good camera and battery life under this particular budget and you see in the answer it makes a lot of things up it just doesn't get it right so it doesn't get the price right doesn't get the price right here it thinks the camera has a wrong megapixel count the wrong battery size so on and so forth right we know this today as the hallucination problem all right it's gotten better but it's still not solved but I think we have the tools to solve it retrieval augmentation that I described to you is the most promising solution to this challenge in my opinion right so to recap instead of asking how hydrogen and helium are different this is the working example we tell the large language model to tell me how hydrogen and helium are different given all these facts and if you hand it a bunch of facts it's far less prone to hallucination right well what's that well that's this box and so this is what I say to my students we've been working on this for a while today it is not only important but it's critical right 
because we all know the garbage in garbage out phenomenon right so if the grounding the facts that you're trying to feed to the large language model are garbage you're going to get garbage output which makes the retrieval component as I said not only important but essential but this also means that the large language model once it's gotten the facts shouldn't screw it up right that's another complementary line of research that we need to explore further okay so none of this is fundamentally new LLMs allow us to do it better and there's still plenty left to do now let me circle back to this problem that we're trying to solve right how to connect users with relevant information to aid in cognition so let me step up to an even higher level okay so at the end of the day we're starting with some artifacts digital or maybe physical and we're going through some process and on the other end comes some type of cognition some type of greater understanding that we've gotten and in the beginning this was all mostly via human effort manual effort right with technology what we've noticed is with each new generation of technology we've gotten more and more system assistance so the interesting question is now that our tools have gotten better what happens right do we all become fat and lazy like in WALL-E or is there an alternative future well so one simple answer is that we now become far more efficient all right but I think the better answer is that these tools free us to do more to tackle tasks with greater complexity right so I don't think it's about AI artificial intelligence it's really more about IA intelligence augmentation so all of this I've been hearing about AIs replacing humans that is not the right discussion to be having it's not about replacing it's about assisting and augmenting human capabilities all right so coming back full circle right with so much noise from ChatGPT 
LLMs and the we're all gonna die crowd where are we well you already know the answer so the TLDR is none of this is fundamentally new people have needed access to information for literally thousands of years transformers have been applied to search since 2019 multi-document summarization is at least 20 years old and this whole idea of search as an interactive dialogue dates back even further right technology has augmented human cognition for centuries right but the key difference now is we have more powerful tools to make us more productive and to expand our capabilities and there's still plenty left to work on okay so the message is Keep Calm and Carry On but the more optimistic version of that is actually it's an exciting time to do research and that's all I have I'll be happy to take any questions okay so do we have any questions right so here the rules are you got to speak into the shiny part okay all right hello you mentioned something about query reformulation right in the beginning we used to type in something into Google find something else and not be satisfied yeah yep with large language models we have a very similar type of procedure with prompt engineering right you're trying to engineer the prompt and get some results you're not being happy with what you get and then re-engineering the prompt sure so how does it exactly solve the problem of query formulation ah so the question is you're saying that before you just put in keywords and change the keywords and now today well you just try a prompt and the prompt didn't work and you got to try another prompt so how have things gotten better I think things have gotten better because the models are better able to understand your intent and so the amount of low-level fidgeting with the queries has decreased and so I think what you're trying to do with a prompt is try to alternatively formulate your information you know what 
you're trying to do at a higher level right so it's not choosing the right keywords it's about well that tone is not quite right so I want to change the tone so in that way I think it's an improvement so that's sort of point number one another way to address it is that look prompt engineering didn't exist three months ago right so we are in the beginning of this revolution right so learning how to do better prompt engineering is like learning how to better search Google in 2003 which was a valuable thing people didn't know how to search Google and so in some ways things have changed and in some ways they haven't does that answer your question absolutely okay here excuse me oh gee I could really mess things up there yeah right can you pass this back thanks so much very interesting and I like how you sort of laid it all out I think in the beginning you sort of said there was this process of document collection and I'm wondering it seems like that plays an even more important role when you start to get to these use case specifics so if you're talking about you know a travel company might have to collect their documents in particular fine-tune the model in a particular way and so I wonder if you have any thoughts about that process and the role that that process plays in sort of the development of these so the answer is you're absolutely right all right and this all goes back to garbage in garbage out right and so people have expressed concern about the data that's being fed into these large language models number one we actually don't know exactly what GPT-3.5 and GPT-4 are trained on we have some idea but we're pretty sure it's ingested a large portion of the web including all the toxic material that's found on the web and so that is a point of concern but I think as we move forward and these technologies become more and more commonplace or commoditized I think it will become more and more practical to 
essentially train your own models so there are not quite as capable but fairly capable open source models that you can download the so-called jailbroken LLaMA models and some other open source alternatives like Dolly is one that people have been playing with that you can download and you can further pre-train on your own internal data you can change the alignment by doing your own instruction fine-tuning and so I'm optimistic because there are a lot of options to solve all these problems yeah okay so all right hi Jim thank you for the really interesting talk so you were presenting or discussing the idea of using retrieval to improve or you know manage a little bit the hallucinations of large language models so what went wrong with Bing because Bing has this very architecture that you were mentioning but it still had all these factual errors so okay so there's a separate question of whether or not Bing and all these technologies were deployed prematurely all right so I think that's one of the concerns here is that these large companies may have perhaps rushed the models out to market before they were quite ready right but you'll notice in the slides that I have up that there were actually two steps right the first is you gotta give it good grounding right so if you retrieve misinformation the language model is going to spew out more misinformation right if you actually gave it articles from the New England Journal of Medicine and asked it about vaccine effectiveness for example it's going to do a reasonable job however I was very careful to point out that we gotta make sure the language model doesn't screw up the facts that you feed it via the retrieval augmentation and things are getting better but we're not quite there yet but I think this is a technical problem we can make progress on does that answer your question no oh so I'm going to queue up a question from the 
web the last one we saw but before I get there I want to sort of set it up with the hallucination problem is clearly a big problem correct and I think you were outlining a solution which is that we want to identify each of the facts in the statements and then we want to attribute all the facts to something that's credible and so that's sort of an attribution problem or retrieval problem now could you read the last question we have come in with enough precise context included LLMs might have a better factual response as there are numerous KG databases and other forms of knowledge do you feel we can make LLMs respond with facts efficiently and what's your suggestion for dealing with this oh absolutely I have to thank the asker of the question in giving these slides there's always things that you have to cull that end up sitting on the cutting room floor but the more general form of a large language model depending on a retrieval model is a large language model depending on external knowledge sources and external APIs of which a search engine or a QA engine is one right so there's nothing to prevent the large language model from querying a SQL database from issuing a SPARQL query to a knowledge graph from issuing a query that gives you a real-time feed of the latest scores from last night in fact that's exactly how Bing is able to answer questions about the game last night okay so that's a very promising area of future work wonderful thank you for the very nice talk I wonder and this is more on the retrieval augmentation stuff so we have these two components we've got these giant LLMs we've got retrieval models that do a pretty good job but they're totally decoupled and so I wonder you know I completely agree that you know retrieval augmentation is likely a path forward to addressing some of the hallucination issues but nothing in the standard LLM objectives you know encourage or you know 
necessitate that the model actually pay attention to the context with which you are prompting it yeah right and so I wonder if you have thoughts on perhaps you know better pre-training objectives for the LLM component that enforce that as a constraint by construction or if you have other thoughts on how do you actually realize that kind of criteria yeah that's a really good question and it's something I've thought about so I at least can restate the problem in a slightly different way so whenever you have these decoupled components you lose differentiability pretty much right and as soon as you lose differentiability you lose a lot of what makes these techniques work being able to train end to end and so various people have been trying to have their cake and eat it too right so attention mechanisms that allow you to access the external knowledge sources directly there have been various attempts along those lines they're all promising and I think you're answering part of my same question that's another example of why this is such an exciting time to do research right now does it help yes I think I know what the problem is but I don't have a solution and if I did that would be my next NeurIPS paper or something like that right thank you so much it was you know really nice to know your views I would also like to ask you without losing differentiability is there a way to get the biases out of these models that generally creep in and I'm sure you were expecting that question yeah so yes I think what gets lost in this discussion about biases and more generally the question of alignment is that we often lose sight of the fact that I think at the end of the day we're gonna need models that are aligned differently for different tasks for different audiences based on different cultural backgrounds different expectations different domains etc so I think it's less 
helpful to talk about you know how do we get bias out of the models in the general case and focus more on how do we get bias out of the models for medical diagnoses for job recommendations for particular downstream tasks I think if we start to look at the problem from that perspective it becomes a little bit more tractable does it help yeah all right so I think we're sort of out of time but I did want to sort of end with one note I have some students who are feeling a little discouraged with all the hype they're hearing and they're kind of wondering if it's sort of pointless to be studying this stuff anymore because it's all been solved but I kind of suspect that your program the IA program is probably going to take about as long as the other programs you describe so do you think it's going to happen before you retire yes no I don't think you're on the record yeah so what's gonna happen I mean in the sense that our tools will become more capable and will be able to do more so from that perspective this process will be never-ending yes in the sense that the problems that we think are problems today I think they'll be solved by the time my career comes to the end so for example I think the hallucination problem I'll say this on the record it's not going to take as long to solve as we think it is because the way I've formulated it as a retrieval augmentation problem where the language model's job is just not to screw up what the retrieval module gave it I think that's a much more technical problem I think with respect to bias if we start thinking about properly aligning the models for particular downstream tasks we're going to see these problems solved in a much more practical manner you'll have to repeat any of that ah so what about AGI I anticipated this question and I addressed it at the very very beginning right so for the purposes of my own research program what I'm interested in I just want to connect people 
with the relevant information that they want to be honest I really don't even know what an AGI is I have to date not heard a precise definition of what an AGI is and so I say yeah you know people can do other things like unravel the mysteries of consciousness and build AGIs I'm going to focus on something that I think is much more practical but still very impactful so let me end by I'll go on the record and say I think you're wrong I remember there are the recordings of people in the 50s saying that all the problems in machine translation will be solved in five years and it's more than 50 years later and we're still working on it okay I have a feeling that this is a good time to be studying this stuff because there's no problem about you know employment you're going to be busy yep and I think you'd be lucky to get to the progress you have in mind before you retire okay all right but anyway we'll see if you're right or see if I'm wrong when you retire at the retirement party okay we'll have this discussion okay great let's thank the speaker [Applause] [Music] thank you
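
The retrieval augmentation idea from the talk (retrieve some facts first, then ask the language model to answer given those facts) can be sketched roughly as follows. This is a minimal illustration and not the speaker's system: the term-overlap scoring, the function names `retrieve` and `build_grounded_prompt`, the prompt wording, and the three toy documents are all assumptions made up for this example, and the actual call to a language model is deliberately left out.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, k=2):
    """Toy retriever (an assumption, not a real ranking model):
    score each document by how often the query terms occur in it."""
    query_terms = set(tokenize(query))
    scored = []
    for doc in documents:
        counts = Counter(tokenize(doc))
        scored.append((sum(counts[t] for t in query_terms), doc))
    # sorted() is stable, so ties keep their original order
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:k] if score > 0]


def build_grounded_prompt(question, facts):
    """Put the retrieved facts in front of the question (hypothetical wording)."""
    lines = ["Answer the question using only the facts below.", "", "Facts:"]
    lines += [f"- {fact}" for fact in facts]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


# Made-up documents, loosely following the hydrogen and helium example
DOCS = [
    "Hydrogen has atomic number 1 and is highly flammable.",
    "Helium has atomic number 2 and is chemically inert.",
    "The Eiffel Tower is located in Paris.",
]

question = "How are hydrogen and helium different?"
facts = retrieve(question, DOCS, k=2)
prompt = build_grounded_prompt(question, facts)
print(prompt)  # this string would then be sent to a language model
```

The garbage in garbage out point shows up directly here: whatever `retrieve` returns is what the model is told to rely on, so the quality of the retrieval step bounds the quality of the grounded answer.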

2023-05-05 04:27