Robert Johansson - From Machine Learning to Machine Psychology

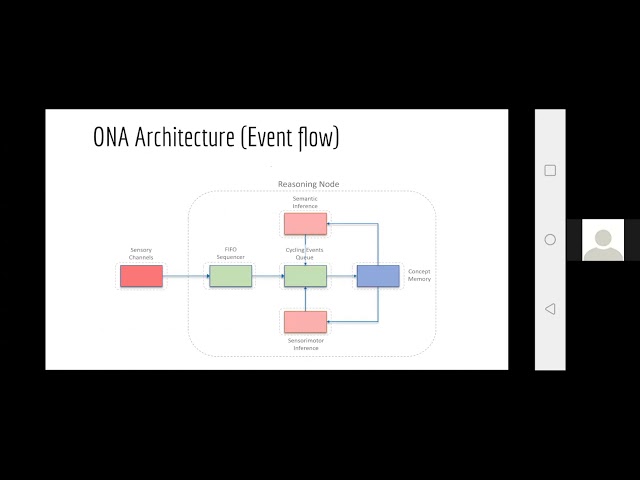

The talk is only very briefly about machine learning, and we hope to also speak about machine psychology. The title of the talk is also "Artificial general intelligence from the perspective of non-axiomatic logic". So we're here to do Digital Futures, and Digital Futures, as many people know, is about solving problems within these domains using technologies that we develop collaboratively between KTH, Stockholm University and RISE. They can be very hard problems to solve, actually. For example: how can autonomous systems in a smart society communicate with each other and learn from each other or from human beings? How do we need to adapt education to be effective? How can we create something like empathic artificial agents? How can we understand depression, and can we model it in a machine and resolve the depression in the machine with human dialogue? I think those are problems typical of something you could attack with the technologies within Digital Futures. But the question we want to ask today is: what type of technology can we expect to need to solve these problems? That's the question that we put in the back of our heads.

Patrick and I will speak from the perspective of AGI, artificial general intelligence, which can typically be said to be the field that aims to build general-purpose intelligent systems: systems that could solve many different problems across many domains, typically taking a very holistic approach. It is sometimes associated with things like human-level AI or strong AI, but very importantly it is distinguished from AI where highly efficient solutions are found to very domain-specific problems, like image recognition. So we will introduce AGI from the perspective of non-axiomatic logic and NARS, the non-axiomatic reasoning system. Patrick will speak about that, and I will provide a perspective from behavioral psychology, including research methods and how we view scientific progress, and we will
argue for a research path that can integrate AGI and behavioral psychology, which we hope could benefit both fields. So, over to you, Patrick.

Okay, I'm glad to be able to talk in this meeting. What I will introduce to you is a reasoning system called OpenNARS for Applications, and I think it can be one of the relevant future technologies. This is to first show you my main goal, which is to show the benefits of a general-purpose reasoning system that can learn, and to contrast it with special-purpose solutions, which are very well suited for specific tasks. Nature had similar differences between selecting for specificity and generality, which is illustrated in this picture here. For me, the main objective was, or is, to create an effective NARS implementation designed to enhance the autonomy of intelligent agents, because this is where I think general-purpose agents can make a difference over agents which can only do one task better.

But first, what is this non-axiomatic reasoning system? It's a general-purpose reasoning system proposed by my advisor, Dr. Pei Wang, which follows the idea that intelligence is the ability of a system to adapt to its environment while working with insufficient knowledge and resources. Such a system should work in real time and accept new information at every moment, so new information can constantly stream into the system, and it should work with finite processing demands and storage space, which means that even if you feed it additional information it should not get slower. The way it's realized is that there is a control mechanism which is attention-driven, which means it's doing selective processing of the information, and a memory structure which exploits the compositionality of patterns. The logic of this reasoning system is actually a term logic and not predicate logic, and it contains
multiple forms of statements: inheritance statements, like "cat is an animal", the first one; sequences and implications, like "A and B happened in this sequence", or temporal relationships like "lightning leads to thunder"; and sets, like "Sam and Garfield are cats" and "cats are meowing and furry". Relationships can also be expressed, like that an animal needs food.

What distinguishes this logic from predicate logic is that there is no binary truth. A statement is not either false or true; instead there is a positive and a negative evidence measure associated with each statement. Based on this you can define the frequency value, which is the positive evidence over the total evidence, and the confidence measure, which is the total evidence mapped to a value between zero and one. Why do we need those two measures? It essentially allows the system to keep track of the size of the sample space it has seen so far. For instance, if you ask yourself "is a coin fair?" and you do 10 coin flips and get 5 heads, this should give less confidence than 100 coin flips and 50 heads. In both cases the frequency value is 0.5, but the number of samples collected is different. This is why there is a two-valued truth value associated with each statement: the frequency and the confidence, or equivalently the positive and the negative evidence.

With this you can do interesting things. For instance, you can build a system which is able to learn temporal relationships. If you have an event sequence A, B, C, then you can make this event sequence attribute positive evidence to the relationship "A leads to B", positive evidence that A and B together lead to C, and positive evidence that A leads to C. But if A does happen and B does not happen, this is when negative evidence is collected for this implication statement. So in this case we can say that the frequency is actually the success of the temporal implication in predicting the outcome, while the confidence
encodes the number of samples the system has seen about this relationship.

A large part of my PhD thesis is about the design and implementation of such a system. What I tried to do is to combine only stable aspects of the NARS theory and to make the system focused on agency, that is, to have systems that make decisions to reach desired outcomes or goals. So the system I've developed is a special case of a non-axiomatic reasoning system. The capabilities of this system are to learn from event streams in real time without interruption, and to extract sensorimotor contingencies. What is this? The idea is that certain events can correspond to motor actions, to actuators, and essentially what you want the system to do is to learn which actions lead to which outcomes under which circumstances. Or: given specific circumstances, what will be the consequence of the action, what other events will happen if a certain actuator is used? This the system can then use to plan ahead to reach goals, and this can enhance the autonomy of intelligent agents, including robotic solutions, because it allows the system to learn new procedural knowledge at runtime.

The architecture proposed looks like this; we will go through it briefly, step by step. It receives events from different modalities, which is point one: this can be a camera, a microphone, a touch sensor. It combines those events into temporal sequences, and from there they go into the attention buffer, where all this information from the different sensors competes for attention. From there, essentially, a reasoning loop happens. There is a memory structure which we call concept memory, and the idea is always to take an element out of this attention buffer, favoring the highest-priority one, then select a second premise which is already in memory, and then send both premises to the inference blocks to derive new events, which will then go
into the attention buffer again. There are two types of inference here, which is why there are two inference blocks. One is semantic inference, which always depends on a common term: you have "S is M" and "M is P", so you can conclude that "S is P". You see there is a common term M here. So if a cat is an animal and an animal is a being, we can conclude that a cat is a being; here it's "animal" which is the shared term. So the semantic inference block is about inference where both premises share a common term. Then there is sensorimotor inference, which is about dealing with temporal relationships: if you have event A and event B, you want to build this "A implies B" relationship, that is, to give positive evidence to this relationship. And if you already have such a relationship you can use it for prediction, meaning that if you get an event A and you already have the relationship that A leads to B, you can predict B.

A special case of this sensorimotor inference is subgoaling. That is, the system wants to reach some goal G, and there are multiple candidate hypotheses for how to get G given the current circumstances, and it should select an operation which will most likely lead to G happening. But sometimes this cannot happen in a single step, so it needs to generate the context as a subgoal, and this is exactly what it does. If it can do it in a single step, it will simply use the best operation, the one which most likely leads to G; if it cannot, it will derive the subgoals.

The input to NARS is always events, which can be beliefs, goals and questions. Beliefs are essentially the current state of affairs, or current sensory measurements. Goals are essentially the events the system wants to achieve, and questions are statements the system tries to answer. The output of the system is always operations to execute and answers to questions. So this is the input/output interface to the system. And
here I will now briefly show a use case scenario in the smart city domain, which is about anomaly detection. The idea here is that you want the system to detect different traffic anomalies: it can be speeding, it can be jaywalking, it can be a potentially dangerous situation. What the system does here is this: there is a camera, and there is a deep neural network which is able to detect objects, and then there is an object tracker which uses those detections to track objects over multiple camera frames in real time. The system then automatically builds categories: okay, this is a zone where there are mostly pedestrians, so it's a sidewalk, and this is a zone where there are mostly cars, so it's a street. It automatically also keeps track of the evidence for the direction entities are going, and it can use this to make predictions about the future location of entities, and also to detect anomalies with some background knowledge about what jaywalking is, for instance, as we will see briefly.

So the idea here is for the operator to define some background knowledge about anomalies of interest. The system then builds spatio-temporal relationships between entity detections and builds region categories, and it is supposed to be able to predict future locations of objects and to use this knowledge to detect anomalies. So there is a camera, then there is also the object detector and tracker, which gives the reasoner the information about what the current scene is, and then there is some background knowledge about the anomalies, which the reasoner uses to detect them.

We won't have time to go into the details of the encoding here, but to show an example: here you see pink arrows, which indicate the system predicting the future location of this car entity. Interestingly, the learning speed is quite
quick. If we show it such a scene, it's only a matter of seconds for the system to learn how objects move, how the cars move. So it's really quick in building those temporal relationships. For the anomalies, this is more similar to expert systems, because in this case it should simply detect what the user is interested in. So it's possible to give this reasoning system background knowledge which it can then use, for instance, to make it detect jaywalking, like here: if there is a pedestrian and the pedestrian is on the street, the system should generate a jaywalking message. So this is the less interesting part; the more interesting part is that it learns those temporal relationships and can use them for the detection of the anomalies by integrating them with the background knowledge. Examples of the types of anomalies it can detect are dangerous or potentially dangerous situations, like here, or jaywalking, where a pedestrian is walking over a street. This is something we did together with Cisco Systems. The good thing about this approach is that it's not really dependent on a specific camera: if you put it in a different scene it will equally learn where the street is, where the sidewalks are, what is used as a bike lane and so on, and it will automatically adapt to the different situations and be able to identify those kinds of anomalies.

So this is a case of real-time reasoning for anomaly detection, which also shows this ability to learn those temporal relationships. But I always wanted to go more into robotics, because I'm more interested in the sensorimotor side and in action than in simply a passive observation of a scene. What I then realized is that NARS is actually more like a belief-desire-intention model, but with the ability to learn new procedural knowledge on the fly, which I think is very
important for robotics. There are also some other observations, like that in autonomous robotics you usually have multiple objectives to reach, so it's not a single state-action mapping, like in reinforcement learning, that you can use. Also, the learning speed is a very important metric here, because of the body integrity of the robot and time constraints, so large-scale trial and error is not an option. Yes, and the other part is maybe not that important for now.

With this system I really tried to build a reliable real-time learner which can learn procedural knowledge on the fly, and I have experimented with some robotics use cases. For example, it should learn to avoid obstacles simply based on the goal to not have low ultrasonic readings, while it also wants to keep moving, essentially. What we see in this experiment is this ability to learn a behavior which fulfills both goals: not to stay in one place, but also to avoid low ultrasonic values. The behavior which it automatically learns here is to avoid obstacles, but by moving around them, because this allows it to keep moving while avoiding low ultrasonic readings.

A more interesting example builds on this, which is to find a bottle which is in the room and to return it to the other bottles. Here it uses the knowledge it has learned in the previous experiment, like in curriculum learning, where it uses the knowledge from the previous task in order to be able to complete this task. In this task there is also some additional background knowledge I give it, which is what it should essentially do, namely it should pick up the bottle and it should return it to the others, and this it manages to do. We can speed this up a bit; it's almost done. So it's a combination of background knowledge and learned knowledge which is the key here. And we see it simply tries to
return it. Mechanically this was not very well executed yet, because there are some mechanical issues with the gripper; we have a newer version, but that I cannot show currently. But the really good thing about this is that most of the behavior which you have just seen is actually learned, like to move forward if nothing is seen, or to move left when an obstacle is in front, to avoid it. There are also other things it learns from objects: if there is an object on the left and it moves left, then the object will be centered, and if it's on the right side and it moves to the right, it will also be in the center. It is automatically able to apply this knowledge, which it has learned from other objects, to the bottle, even though it has not found a bottle before, because it has learned this abstract knowledge: okay, when something is on the left and you turn to the left, then you will see it in the middle. And the mission-specific background knowledge is very minimal: it's only that it should pick up the bottle if it's in front, and drop it at the other bottles if it is at the other group of bottles. So this is quite powerful, because it means you don't have to give it all the information beforehand. Information can even be wrong or outdated, and the system is supposed to notice this: it's supposed to collect negative evidence for knowledge which doesn't work, maybe because the circumstances changed, maybe for some other reason.

So, Patrick, I think we should start to finish your part of the presentation quite soon.

I'm almost done. Maybe the last thing: I also experimented with question answering, but maybe this we can skip. Yes, and maybe we can jump to my research goals, which are really to allow the agent to explore its environment via exploration and play and to pick up knowledge when doing so, and to
allow it to physically manipulate and utilize objects to reach different outcomes, like with the bottle we have seen, which was a simple case. In all those cases, a really central aspect of autonomy is this ability to learn from observations and interactions at runtime. We also want it to effectively remember places and objects, to essentially build a model of the world. Of course, the key to autonomy is also this ability to operate independently of humans if necessary. And it would also be good, as a bonus, to be able to extract useful information out of human speech, to answer questions, and to use language in other ways. That's it.

Thanks a lot, Patrick.

You're welcome.

So, as I said, Patrick has introduced AGI and the possibilities with AGI from the perspective of NARS, and as you saw there, he really stressed the integration of semantic and sensorimotor knowledge. I will continue now by presenting the behavioral psychology perspective and research method, and what a potential research path could be. Some of this is described in a longer paper that I published this summer at the NARS workshop at the AGI conference. I will be speaking from the theoretical perspective of relational frame theory, which is a behavioral psychology approach to language and cognition that has been going on since the 80s, basically, with its most updated version in 2016.

But why even talk about these things at the same time? To start with, there are some conceptual similarities. Patrick's supervisor, Pei Wang, really stresses, as Patrick said, intelligence as adaptation with insufficient knowledge and resources, and learning, from the perspective of relational frame theory, is really about learning during the lifetime, as ontogenetic adaptation. So these definitions are for sure very similar. NARS also stresses experience-grounded semantics, that every concept is fluid, based
on experience, which is very similar to how we talk about meaning in RFT. When I say RFT, it's relational frame theory.

As for methods, we typically conduct something called match-to-sample experiments, for example studying something called conditional discriminations using match-to-sample. A very simple example: here is a study from '95 where pigeons were trained to discriminate between Monet and Picasso paintings. They started pecking, for example, when they saw the Monet painting but not when they saw the Picasso, and they could generalize this to new Monet and Picasso paintings. And maybe this is how it could look on the Furhat robot, for example.

[Video plays: "Oh, it said that is a person." "Okay." "Multiple objects from one person."]

So systems can typically do this with the sensors that Patrick talked about, and be able to learn how to discriminate using sensorimotor knowledge. In a typical experimental setup, if you learn a relationship between A1 and B1, you basically say that the system picks B1 in the context of A1, and picks C1 in the context of A1, and you can train the system in these relations. This is how it could look, for example, in NARS, using Patrick's system: if the system babbles between the left and right operations, it can over time learn this derived knowledge that, in the context of A1, if B1 is on the left, then doing the left operation will lead to some kind of success. If you learn this, we can talk about the success, or G, as functioning as a reinforcer in that context, and the sample A1 functions as a conditional discriminator.

So, generalized identity matching: I will step up to harder and harder experiments. This is in the match-to-sample task: a person, or a sea lion in this case, learned that "if I'm shown A1, I press the same symbol". So here the sea lion is shown the palm tree and presses the palm tree, but then, in a
new situation, being shown a new symbol, it presses that symbol rather than something that it was reinforced on before. So it acts on the abstract knowledge of identity. This is also something you could talk about in NARS, but it would typically need layer 6, knowledge relations with variables.

But what really sparked the interest in relational frame theory was the problems raised by stimulus equivalence. If we take this to human beings, we typically learn relations between A1 and B1, and A1 and C1, but we typically derive a lot of other relations. For example, in this experimental setup again: if you have learned A1-B1 and A1-C1, and A2-B2 and A2-C2, let's say that the sample and choices are switched like this. We're presented with C1 and we're supposed to pick B1 or B2 based on the training history, and most people with repeated experience pick B1. This is the stimulus equivalence effect. This is a study from 1986 where they compared normally developed children with children with disabilities but with language, and children without language, and only the children with language could show this phenomenon. So it's an argument that stimulus equivalence seems to require language. It seems to develop around two years of age, it hasn't been reliably demonstrated in any non-human animal, and it seems to be a case where sensorimotor knowledge and semantic knowledge need to be integrated.

A harder problem would be transfer of stimulus function. This is a study from 1987: people were taught a set of equivalence relations, and then the subjects were trained to either wave or clap in the presence of B1 or B2 respectively, so that B1 acted as a discriminator. The question then was: would the discriminative functions transfer to C1 and C2? Which they did. And in the same study, three other subjects were trained to sort a set of cards using the feedback "correct" and "no", but shown
cards B1 or B2 at the same time. Would the reinforcing function then transfer to those untrained symbols, so that just showing a card from the same equivalence class takes on the function of the reinforcer?

It becomes even harder when you introduce relations other than sameness, like opposition, because if something is opposite to something, and that is opposite to something else, then A and C are the same. This is a classic study from 1991 where this was tested. You were basically shown a "same" symbol and were trained to select B1 in the context of A1, and in the context of being shown the "opposite" symbol and A1 you were reinforced for selecting B2. So the trained network was like this, and they tested for derived relations. This is something you could do with semantic knowledge in NARS, but it's a real fundamental thesis of relational frame theory that the relating act is an operant, meaning it's an action: the integrated action of picking and thinking at the same time, basically. So this is really a case where sensorimotor knowledge and semantic knowledge need to be integrated, which means that making a robot do this is hard.

And then there is transformation of stimulus function, not only transfer. For example, if we learn about coins that we have never heard of, that A is worth more than B and B is worth more than C, we derive a lot of information on value even though it's totally contextual: you can't base the value on how the coins look, or what size they are, and so on. So these patterns are really contextual in nature. Very briefly, this is how you typically study this in these experiments: you train a network of more and less relations and study whether something is derived to be even more reinforcing. And this is another very cool study, where people were trained on a more-than/less-than relational network and had an electric shock paired with one of the symbols, and they measured skin conductance, and people actually had
higher intensity of skin conductance to a symbol that they had never seen before, because they derived that it would get them an even larger shock, so to speak. So that's a real case of transformation of stimulus function.

This is Tony Lofthouse, who is on the call here. He has studied the transformation of stimulus function using this example: coffee and juice are opposites, coffee is bad and juice is good, but is beer even better? So Tony has, so to say, shown how this can be done using the semantic inference part of NARS. Importantly, this is like what was being studied in RFT 20, 15 years ago, so a lot has happened in that field since. This is just one example of a paper from 2017 that I think really takes RFT to the next level. So I'm just arguing that this is not the end of RFT; it's a large roadmap, so to speak.

Okay, but we were here to talk about machine psychology. What is that? RFT is a theory that is based in contextual behavioral science, which is something emerging from behavioral science that emphasizes context and the centrality of situated action, and emphasizes predicting and influencing psychological events with precision, scope and depth. So if we are going to talk about something like machine psychology, the question is: can behavioral psychology provide some kind of hints as to what that would be? Maybe it's based on this premise of learning as adaptation, compared to, for example, learning as pure information processing. Maybe this can be predicting and influencing machine events in context with precision, scope and depth, and it highly likely emphasizes the centrality of language processes. I think this opens up many exciting research programs in something I would call the Digital Futures area. For example, I think you only need to open an RFT-based book, for example on how to train people with autism and developmental disabilities, and then you have lots of research programs: for example, teaching a
system reading and spelling, teaching syntax, functional communication at the bottom, training analogies, training perspective taking, establishing empathy, mathematical reasoning, self-directed rules, and flexible, intelligent and creative behavior. So the self and perspective taking are really something that is emphasized in these models, and of course also connecting it to clinical psychology. I think it's nice that NARS also emphasizes this: it has a self model and an emotion model. This is a paper that Patrick wrote with his supervisor.

RFT has accounts of empathy, for example, and my own research field, in clinical psychology, is very much about understanding depression, which has grown to become a very large problem. So, for example, let's say that we wanted to have a robot that was depressed, that we could treat with human dialogue. This is a demo we did with Furhat as a dialogue system acting as a depressed patient. But can we extend a dialogue system like that with something like, I would say, a three-layer approach of non-axiomatic logic and RFT that provides a foundation for how you can look at depression in machines, based on RFT models of depression, where typically the self is emphasized as being in a relation with "I'm a horrible person", which can be strengthened from experience by statements like "I'm divorced", "fired", "no kids", "I drink too much". So I think you understand where I'm going with this. It seems that it is really central to emphasize both these aspects of reasoning if these massive problems are going to be solved.

So, in summary, with this talk we hope to illuminate some problems that might be hard to solve using standard machine learning and deep learning. I think OpenNARS for Applications has a very interesting model for solving this integration of sensorimotor and semantic knowledge, and behavioral psychology seems to provide an interesting path for how to make scientific progress in AGI,
for example by studying it in match-to-sample experiments. So I think RFT, relational frame theory, opens up a theoretical account of many of these hard problems that we could then study in intelligent systems. So thank you very much.
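
As a note on the truth values described in Patrick's part of the talk: frequency is the positive evidence over the total evidence, and confidence maps the total evidence to a value between zero and one. The sketch below is only an illustration of that idea, not the OpenNARS for Applications code. The mapping `w / (w + k)` with `k = 1` is an assumption (the talk only says the total evidence is mapped into that range), and the names `TruthValue`, `from_evidence`, `observe_implication` and `EVIDENTIAL_HORIZON` are hypothetical.

```python
from dataclasses import dataclass

# Assumed constant: the talk does not specify how total evidence
# is mapped into the range between zero and one.
EVIDENTIAL_HORIZON = 1.0


@dataclass(frozen=True)
class TruthValue:
    frequency: float   # positive evidence / total evidence
    confidence: float  # total evidence mapped into [0, 1)


def from_evidence(positive: float, negative: float,
                  k: float = EVIDENTIAL_HORIZON) -> TruthValue:
    """Build a (frequency, confidence) pair from evidence counts."""
    total = positive + negative
    if total <= 0:
        # No evidence yet: the frequency is arbitrary, the confidence is zero.
        return TruthValue(frequency=0.5, confidence=0.0)
    return TruthValue(frequency=positive / total,
                      confidence=total / (total + k))


def observe_implication(positive: int, negative: int,
                        a_happened: bool, b_followed: bool) -> tuple[int, int]:
    """Update evidence counts for "A leads to B" after one observation.

    Positive evidence when A is followed by B, negative evidence when A
    happens but B does not; nothing changes when A did not happen.
    """
    if not a_happened:
        return positive, negative
    if b_followed:
        return positive + 1, negative
    return positive, negative + 1


# The coin example from the talk: same frequency, different confidence.
few = from_evidence(positive=5, negative=5)     # 10 flips, 5 heads
many = from_evidence(positive=50, negative=50)  # 100 flips, 50 heads
print(few)
print(many)
```

Under the assumed mapping, both coins get a frequency of 0.5, while the confidence is 10/11 for ten flips and 100/101 for a hundred flips, which matches the point that more samples should give more confidence.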

2021-03-22