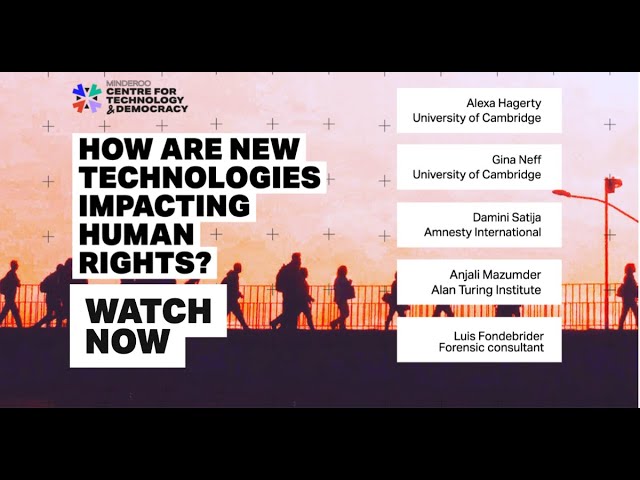

How are new technologies impacting human rights?

Thank you, everyone, and welcome. Our seminar will begin in just a few minutes.

Welcome, everyone, and thank you for joining us today. I am Gina Neff, the executive director of the Minderoo Centre for Technology and Democracy at the University of Cambridge. Before I introduce the seminar's guests, I want to let you know that this event is being professionally live human-captioned. If you would like captioning, you can select it using the toolbar on Zoom at the bottom of your screen. Additionally, StreamText captioning is also available for this event. This is a fully adjustable live transcription of the event in your browser, and if you want to open this, we are sharing the link to the StreamText now. A transcript will also be made available online after the event.

Now, before we begin, some points of housekeeping. The event will be recorded by Zoom and streamed to an audience on the platform. By attending the event, you're giving your consent to be included in the recording. The recording will be available on the Minderoo Centre for Technology and Democracy website shortly after the event. Tonight's guests will speak to us for about 40 minutes, and then we will open up the discussion and take questions from the audience. Those questions can be asked through the Q&A function on Zoom; that's another tab at the bottom of your screen, and we will share those questions during the discussion. Please do not place questions in the chat, as we won't be monitoring it. We would very much appreciate it if you could complete a short feedback questionnaire after the session. The link to the survey will be sent via Eventbrite, which you used to sign up for this event. Please follow us and tag us on Twitter and other social media platforms, that's @MCTDCambridge, for example if you are live-tweeting this event.

So, turning to our event tonight: digital transformations and new technologies have had profound and contradictory implications for human rights. How are new digital technologies a threat to human rights
and how can we see new resources for action with new kinds of technologies? The conversation we'll be having brings together an AI and human rights expert, a forensic consultant, and an anthropologist of genocide and digital technologies to ask: what might a rights-promoting technology look like? Can we untangle how new technologies undermine rights, support humanitarian action, and offer potential frameworks for regulation?

I'm delighted to introduce this panel of experts. First, Alexa Hagerty. Alexa is an affiliate at the Minderoo Centre for Technology and Democracy. Alexa is an anthropologist researching human rights and technology. She is particularly interested in activism and how communities resist, refuse, and reimagine technologies. Her work examines the use of forensics and biometric technologies in humanitarian interventions, such as identifying the dead in the aftermath of political violence in Latin America and the current war in Ukraine. She's the author of the recent book Still Life with Bones: Genocide, Forensics, and What Remains, and we'll be sharing a link for a discount code for that book in the webinar chat.

Next is Damini Satija. Damini is head of the Algorithmic Accountability Lab and deputy director of Amnesty Tech at Amnesty International. Damini's team research, investigate, and campaign on the increasing use of algorithmic systems in welfare provision and social security, and on their harms to marginalized communities around the world. Previously, Damini was senior policy advisor at the Centre for Data Ethics and Innovation, an independent expert committee in the UK working on ethical challenges in data and AI policy. Damini was also the UK expert at the Council of Europe's Policy Development Group on artificial intelligence and human rights.

Next we have Anjali Mazumder. Anjali Mazumder is the theme lead on AI and justice and human rights at the Alan Turing Institute. Her work focuses on empowering government and non-profit organizations by co-designing and
developing responsible and inclusive AI, and methods, tools, and frameworks for safeguarding people from harm.

And finally, we have Luis Fondebrider. Luis is co-founder of the EAAF, the Argentine Forensic Anthropology Team, a forensic consultant, and the former head of the forensic unit of the ICRC, the International Committee of the Red Cross. Luis is a forensic anthropologist who has worked as an expert witness and a forensic advisor across the globe. He is part of the international forensic team leading on the manual on genetics and human rights under the government of Argentina and the ICRC. Luis is currently a member of the international Forensic Advisory Board of the International Committee of the Red Cross.

And now I will hand over to Alexa. Alexa, you might be muted.

Oops, after three years. Okay, here we go. So, I can remember the moment that I began to think seriously about new technologies and human rights. I was doing my PhD fieldwork with forensic teams exhuming mass graves after political violence in Latin America, and I was in Guatemala, where 200,000 people were killed in state-sponsored genocide. I arrived one afternoon at the Historical Archives of the National Police in Guatemala City. The word archive might elicit visions of pristine alphabetized libraries and temperature-controlled storage, but this archive was something quite different. Here we can see these documents piled up; some of them were in stacks 10 feet high. An estimated 80 million pages of papers documenting surveillance, torture, and disappearance had been dumped in a half-constructed building in a police compound. They had been left there to fester, and they had been discovered quite by accident about a decade before my visit. This would turn out to be the largest secret cache of state documents in the history of Latin America. A small, dedicated team was trying to save and organize this immense record of human rights abuses, and they showed me some of the documents, which included fichas, which were
index cards typed with the names and details of people being tracked, annotated lists, and photos like you see here, with names and captions like "subject to investigation" and "agitator". Some of these were marked with codes like 300 to indicate assassination. The archivists estimated that at the height of the violence in the 1980s, the police possessed information about more than one million Guatemalan citizens, about 15% of the population at that time.

So encountering this archive and its materiality, in what I wrote in my field notes as its "decaying immensity, like a rotting corpse of a beached whale", powerfully affected me, because I glimpsed there this connection between mass surveillance and mass atrocity. I saw that a ficha, an index card, can be as deadly as a bullet, and that a list of names can fill a mass grave. To carry out political violence at scale requires organization; it requires a system and infrastructure.

Archives of surveillance continue to be amassed, of course, maybe now more than ever. They have changed: paper gives way to computer code and algorithms, and inked fingerprints to biometric scans. So surveillance expands at the same time that it becomes increasingly invisible. The United Nations High Commissioner for Human Rights has warned that emerging technologies are enabling, quote, unprecedented levels of surveillance across the globe by state and private actors, and that many of the current uses of these technologies are incompatible with human rights. So organizations like Amnesty International, and also the Minderoo Centre for Technology and Democracy hosting this event, are carrying out important work exploring this theme of the dangers of these technologies for human rights. At Amnesty Tech and the Amnesty Algorithmic Accountability Lab

Alexa, sorry, I'm just going to... You've got your slide sharing on presenter view. Can you make them full screen? Use slideshow instead, maybe, if you go up to the top. Thank you.

Oh, I'm so sorry. Maybe this is
that.

Oh, much better, thank you.

Okay, thank you. So, where were we? Amnesty Tech and the Algorithmic Accountability Lab are doing really important work on issues of spyware technology, mass surveillance, the algorithmic spread of misinformation, disinformation, and hate speech, welfare automation, and many other uses of emerging technologies. The Minderoo Centre has carried out a really important sociotechnical audit of the police use of facial recognition in England and Wales, and other Minderoo researchers are working on issues like how automated decision-making systems pose risks of bias and harm for disabled people, work led by Dr Louise Hickman, the use of tracking technologies in immigration contexts, and many other topics with profound implications for human rights, which we'll have a chance to discuss this evening.

Yet technology is also a potential resource for human rights, and this is also something that I learned in my fieldwork. In Argentina's dictatorship of 1976 to 1983, the families of the disappeared, who are the people who were kidnapped, tortured, and killed in secret prisons, courageously organized to search for their loved ones. These families reached out to scientists because they wanted to know how forensic science and genetic research could help them find and identify the missing and the dead. Through the efforts of these families, the American Association for the Advancement of Science, the AAAS, arrived in Argentina in June of 1984 for a 10-day visit. Quite incredibly, this 10-day visit really marked a turning point in the history of science, technology, and human rights, because it launched early, crucial scientific interventions into human rights, and it would eventually revolutionize the fields of both forensics and genetics. Here we have a picture of the geneticist Mary-Claire King with the Abuelas. What's happening here is that geneticists from the United States, but also exiled Argentine geneticists, worked
closely with families of the disappeared to create new forms of genetic testing for grandpaternity that allowed the identification of babies who had been appropriated by the dictatorship, for example after pregnant detainees gave birth inside secret prisons.

On the forensic side, families of the missing worked with the legendary American investigator Clyde Snow and a handful of local students, among them Luis Fondebrider, who's with us today, to pioneer the application of forensic methods to identifying the remains of people disappeared by the dictatorship. This launched the field of forensic exhumation for human rights. Then this new team, or what would soon become a team, documented forensic evidence that was crucial in early landmark human rights trials. If you've seen the film Argentina, 1985, these are the trials, sometimes called Argentina's Nuremberg, that cleared the way for what some scholars have called the justice cascade of similar trials in Latin America and around the world.

But this powerful meeting of science, technology, and human rights was not inevitable. It happened because people dared to do it. Mothers and grandmothers stood up to dictators, and so did students who had grown up under military rule and state terror. For the first grave they exhumed, the emerging forensic team were working with borrowed garden spades and spoons. It was dangerous work, and there was no foregone conclusion that it would work or that the democracy would last. These students went on to establish the Argentine Forensic Anthropology Team, the world's first professional war crimes exhumation team, and to found a new field. They rapidly adopted technologies like DNA analysis, geographic information systems, ground-penetrating radar, photoanalysis from drones, and remote sensing, but they did this always working very closely with families, and they've now worked in more than 60 countries around the world.

So I wanted to share some of this history because it offers an
alternative vision of the relationship between technologies and human rights: how technologies can be a resource and can be developed not from the top down, in the Silicon Valley model that we are living with now, but also from the ground up, in collaborations between scientists, technologists, and affected communities. I'll end with this photo, which I took in the field, of a drone launch, which I think encapsulates this kind of community-centered use of technology. Unfortunately, I'm not a good enough photographer to have actually captured the drone. But I think this spirit of community-centered technology also informs the way that people in this discussion are attempting to develop and use technologies for human rights: Luis Fondebrider, of course, and also Anjali Mazumder's work at Turing exploring how data-driven approaches can shed light on the complexities of labor issues, including modern slavery. Also at Minderoo, researchers have joined the international AI4TRUST team to tackle online misinformation by bringing together algorithms and expert human fact-checkers. So there's really deep experience and wisdom on this panel, and among many of you joining us this evening. We're really looking forward to exploring this question of what rights-promoting technology looks like, and I'm eager to get to the conversation.

Thank you, Alexa. That's great, and really very interesting. I'm going to invite all of our panelists to come on screen now, please. Yes, great. So we have this question before us: there is a real complexity to ensuring that new technologies and new kinds of data work to support human rights and don't work to threaten human rights. Each of you brings different kinds of experiences; Alexa called many of your projects out specifically in their presentation. I wonder who wants to start in just reflecting on that complexity, perhaps from your own work or from
something that Alexa said. Anjali, would you like to launch us?

Sure. Thank you for inviting me to be on this panel, and thank you, Alexa, for introducing both your material, your book, and the intersection with forensics, which I think is often not appreciated or acknowledged as much in terms of its real essence and presence within the human rights space, particularly when we're in a space of digital technologies. From my own experience, I've worked in areas involving forensics as well as the broader use of data-driven technologies, as Alexa mentioned, in terms of labor justice issues such as modern slavery. I'd like to address the complexity in a couple of ways. One is that we are bringing technical interventions into what is really a complex social environment, and that is to remind us that these are actually socio-technical interventions and approaches that need to be considered. That means that the data is about people. Even when we're talking about satellite imagery data or drones, and it's looking at Amazon rainforests or something, that's indirectly about people, even if you're not capturing the people themselves. It's also an opportunity to actually address where the labor rights or human rights abuses are occurring. What we constantly deal with is this dynamic of the opportunity that technologies provide to shed light on the human rights abuse, to potentially detect and try to prevent it, or to understand the dynamics. But there are also the risks that we actually create of potentially infringing on human rights, with surveillance and discrimination as some of the fundamental ones, but also association, the connection with other people. All of these rights start to play a role. So in my own work with the team, we've worked on really trying to build up a
multi-disciplinary team that really tries to think about it from a socio-technical perspective, thinking about the safeguards we can put in place, but also the approaches, which are really embedded in a participatory approach and a multi-disciplinary approach, and also where the law plays a role, recognizing where we may have gaps. What are those? What's our role to play? What can we do, particularly from where we sit, from a research perspective? So we really do try to work towards a participatory, co-creation, co-design perspective. They all bring challenges. It also means recognizing that we are often bringing together data, which has its own challenges, with the data rights and the data holders: who's got the data, whether it's coming from private or public sources or a mixture of those, whether we're developing a tool or another organization is developing the tool, and then who's deploying it. They all bring this really complex system into play. And again, our role comes into it: what can we do from the design, development, and deployment perspective, in terms of guidelines and safeguards?

That's great, thank you. Luis, I wondered if you might want to bring some of your experience to bear in this conversation, because you've seen a lot of things change and a lot of things stay the same.

Yes, thank you. Thank you, and thanks to the Minderoo Centre for the invitation. As a forensic scientist, I have been using technology since the mid-80s, when an American organization called WITNESS gave video cameras to people around the world to document human rights violations. From that time, almost 40 years ago, it has been a long way. I don't like the term new technologies; in fact, they are technologies for new applications. That's the first contradiction. Most of the forensic people, and I'm talking about forensic scientists, prosecutors, investigators, we use technologies that have been created for other things, because normally private companies don't
produce for forensics, because the big money is not there. So we try to apply a technology to a new field, and that transition is not easy. That's the first point. The second point: science goes faster than the law and the legislation, so very often there is a contradiction, and there are problems and obstacles for the courts of law, but also for legislation, to accept these technologies and their application today. The third point I would like to mention is that there is a momentum for everybody to talk about artificial intelligence and open sources, but still we have to think about the human beings behind that. The way we investigate is always the same: we use open sources, but we also use interviews, which are all part of the general investigation. That's one side. The other side is the need to democratize the use of technologies. People who have money, such as a private company, can use these technologies; it is difficult for a local human rights investigation, apart from cell phones, cameras, and some kinds of software, to have access to this technology, which is expensive. So it's not easy to use many technologies.

So there is a good side of technology: we can manage huge amounts of data today. But still we need a human being behind it, and still we need regulations, because today the states have total control of our lives. With all the cameras, all our daily life is controlled. So I think we need to discuss where we are going. It's good to stop and to think, and I congratulate Minderoo for this meeting, because it's a good opportunity to think about this. We are all people who use technology in our daily life and also in our work, but sometimes it is too fast, and we need to think before we keep developing these technologies.

Thank you, Luis, thank you. That's a great segue into the work that Damini is doing at Amnesty Tech. Damini, do you want to take a few minutes to reflect on this?

Yeah, absolutely. Thank you, Gina, and thank you, Alexa, for the presentation. I think I'm very much going to build on so many of the themes you
raised, and this tension you articulated for human rights actors, where there is this difficulty in thinking about how tech can be used for our own actions, but also, in doing so, how we can be careful that we don't legitimize or enable harmful uses of the tech that we fight against. So in my reflections I very much want to address this question of whether these technologies are, from a human rights standpoint, a threat or a resource for our own action, and speak to more of a framing point that I think comes up repeatedly in trying to answer that question.

I think the clear answer to this question, hearing from everyone today, is yes: the new technologies do come with human rights threats associated with them, and yes, they can also be used as our own resources to further our own actions. There are many examples of this. At Amnesty too, we have teams that use forensic tools to look at the spread of spyware technologies, and we have teams that use open source intelligence methods. But for me, from a framing point of view, what is really key is that we don't always get caught up in taking a forced position of balance in weighing up new technologies and their threats and benefits, because I think that is what sometimes gets us to a place where it's hard to draw the red lines that we need around the human rights harms of technologies that we're also seeing. I think a lot of that prevailing push for a balanced view is what has gotten us to where we are today, where certain harms associated with technologies have been able to persist, or have even been enabled, because we've presented the risks as things that can be managed or mitigated. Therefore we've been unable to be proactive with regulation and action, action that could have prevented a whole gamut of tech harms. Alexa mentioned some of the issues that we work on at Amnesty Tech,
from data privacy and surveillance issues, which harm some of the most marginalized communities around the world in every walk of life, whether that's the use of facial recognition technologies in public spaces or apps on our phones. An issue I work very closely on is government use of tech and automation, and how that can deny people access to basic services like housing, benefits, and social security programs, which shows the really rights-violating implications of some of these technologies.

So I think the starting point is: yes, we know that these technologies can be used by human rights actors for the impact we want to have, but we also know that there are many harms that we need to fight. What we really need to do is take that as a starting point and then go further than that, and interrogate who is articulating, and has the loudest voice in talking about, the benefits of new technologies, which we all know tends to be a homogeneous set of predominantly Global North, white, male voices from a more techno-solutionist school of thought. And remember also that often the harms we're talking about with various new technologies are to marginalized groups of people. That's why taking this sort of false balanced approach of benefits and risks doesn't always work: because these harms are to smaller or minoritized groups of people, but that doesn't mean that we don't need to take action on those harms. I think that's the kind of interrogation we need to be doing if we want technological futures which are more rights-promoting and rights-protecting, and which enable us, as the human rights, civil society, academic, and more generally tech-critical community, to think about how tech can be used to mobilize action and power in communities who typically don't get to exercise power through technology, or don't typically get to hold powerful forces to account with tech, or are excluded from society's mainstream
use of technology, exactly as Alexa's presentation raised. I think there's a lot that comes into how we make that a reality, the practice of that: through regulation, through developing an environment where we can dismantle the power over who can fund and develop technology, and through promoting the positive tech futures we can get behind as a human rights community. But I will stop there, and hopefully we can get into the specifics of what putting all of this into practice looks like throughout this discussion.

Thank you, Damini. That is a great segue back to Alexa, and possibly Luis as well, because both of you mentioned what it takes to bring communities into these conversations and discussions. I wonder if there's something in the practice of the work that you've done, and the work that you've studied, that helps highlight how important it is to work with communities to draw, as Damini said, those red lines.

Well, I'll say just a quick thing and then I want to pass to Luis, because I feel like Luis really has such expertise here. I think that this is the real question: who gets to decide what technologies are made, and who gets to frame the problems that we are then going to decide whether or not we want to apply technologies to? As Luis said, the technologies that forensic teams or other human rights actors are using are not generally developed for those applications, because that's not where the money is. They haven't been specifically designed to solve these problems, because those are not the problems that interest the hegemonic tech environment. So I think that this is the real possibility: widening the circle, bringing people in to frame these questions and to decide how to use technology. But Luis, you
developed this field that has included families from the very beginning. Are there lessons that we could learn from that for, well, I know you don't like "new technologies", but for things like AI?

Yeah, thanks. I would like to mention that still the most important technologies we use are cell phones, the internet, and social networks. Around the world, not only human rights organizations use those technologies. The cell phone was created 30 years ago; now everybody has a cell phone, and the possibility to take pictures, to record, et cetera, is amazing. So although we like to talk about the latest technology, still this is what we have. I would like to give a positive example. 40 years ago I was working in East Timor, in the Pacific, investigating victims of the Indonesian invasion. We identified a body, and the family came to the mortuary. Not just the family: half of the village entered the room, over 100 people, and everybody pulled out iPads and phones, and they started a conference in front of the skeleton, calling families living in Australia and in Indonesia, and everybody was communicating. In that moment we said, we never saw this; we never saw technology coming to something so culturally important, that moment of sensitivity. So we said in that moment, 40 years ago, something is changing the world. We got used to this kind of change, and I think that impact is positive.

The other positive impact is that now we use more disciplines, all these other sciences, to investigate. We don't use just medicine, anthropology, or odontology; we use architects, with SITU or Forensic Architecture, and we use engineers and physicists. So it's a wide angle. That is the positive side. The negative side is still the lack of control. Everybody can do whatever they want, and there is a risk in that, and we have to be very aware of that, because we don't think from here to 20 years ahead. People have been talking for half a hundred years about the concept of artificial intelligence, but for what are we going to use it, and how the human being is
going to be used in that? That is what scares me: the supplanting of the human being by some technology that we think is faster, more intelligent, and more useful than us. I think that is the debate we should be having at every level, from the most basic human rights institution to very powerful states.

That's a great way to segue into our first question, and I will tell our audience that the Q&A tab on Zoom is the way to ask questions. Edward Halsell asks: based on your experience and research, do you have any expectations of issues arising from the recent explosion in AI development? For example, there has been evidence of OpenAI using Kenyan workers earning less than two dollars an hour. Who would like to take that? Maybe Anjali?

So, thank you for the question. I think it's a really important one. We often talk, if we're talking about AI technologies, whether that's large language models or any other AI or data-driven technology, about the downstream consequences, but actually this is partly about the upstream consequences. A lot of the large language models, computer vision, all of these areas have been developed on the work of workers in a way that has been a labor inequity, a labor justice issue. It's often marginalized, minority communities that have been doing the labeling, and that's how we built these technologies. So it's about recognizing that upstream issue. It has also potentially put them at risk of not only economic harm but additional harm. They're viewing images, and these might be traumas that they've actually experienced themselves. So while they bring that experience to that process, it's also potentially re-traumatizing. It is something that we do need to take into account; we need to recognize and acknowledge it. We're still developing these tools and technologies, and we're still requiring and using that human support. So while we focus on the
downstream, we need to recognize the upstream.

Anybody else want to jump in on upstream and downstream? Yeah, Damini.

Thanks, Gina. Yeah, I think this is a really pertinent question. I'm sure others on this panel saw last week some unionizing action amongst, I think it was, the African Content Moderators Association; I might have got the exact name incorrect. But it speaks to this exact issue of exploitative labor practices which are being used to then build AI systems. Just as Anjali says, I think it's been really good to see in the AI policy, AI ethics, and AI and human rights world a shift from just talking about the life cycle of the AI system, which is what dominated for a while, to also talking about the inputs, the raw materials, and the human labor that go into developing AI tools, because that really is what will give certain companies and actors more power in being able to develop AI systems, and therefore to build AI systems for the purposes that speak to them and, as Alexa said earlier, not for the sort of problem set that some of us in this group might be thinking about. With AI, it is that human labor and the exploitative practices there, but it's also who has access to computing power and who has access to vast data sets. I see this only worsening the monopoly power situation we have been thinking about in a lot of tech policy problems. Again, I think that speaks to your point as well, Alexa, on the hegemonic structures we live with: if we want to see more rights-promoting use and development of technology, a key question for us is how we dismantle that hegemony, that power in the design, production, and proliferation of these technologies. That power is really asserted at each stage, from who has the capital to fund, quote unquote, new technology, and I agree that we can unpack that
term, but who has the power to fund those, and whose voices are heard in articulating the problem set that tech is then designed to solve.

A good question: this is from Anthony Sterk on our question tab. How do you address the question that individuals and governments do not have a built-in drive to protect someone's human rights? How do we think about encouraging rights promotion?

In general, I think something we see in the whole field of human rights is the mobilization of civil society and the role of human rights organizations. I come from a country where there has been a strong mobilization, and that's why we achieved what we achieved in terms of truth, justice, memory and reparation. But in many societies around the world that doesn't happen. There is a state that controls the country, and these debates are not happening around the world. They are just beginning to start in the US and Western Europe, in Australia, in Canada, but still we are not thinking about these kinds of things, and sometimes it will be a little late. What concerns me is someone thinking for me. That's one of the problems of technology: someone superior, or supposedly superior, is taking decisions for me, is thinking about my life, and that we cannot allow to happen. We need to change that.

Can I add a little bit? Yeah, I think Luis is spot on there about the mobilization of civil society. I think this question is at the core of a lot of our work, and it is a constant challenge. But I also think the amount of impact that civil society groups are able to have when they are mobilized and coordinating amongst each other shouldn't be underestimated, especially in driving forward and landing important human rights messages. On my team at Amnesty we're really engaged right now in advocacy on the EU's Artificial Intelligence Act, and we saw a vote last week from the EU Parliament with a lot of really important wins for civil
society, including bans on predictive policing and certain types of biometric tools, and I think that came from really coordinated civil society action. That said, I think one issue we do run into a lot, as civil society and as a broader set of human rights actors, is that sometimes it takes a really serious scandal to see actual rollbacks or action on technology. For instance, a few years ago we saw some companies imposing moratoriums on facial recognition after George Floyd's murder and the ensuing protests. In the UK there was an example with the algorithm that was used to give students exam grades, and after a lot of public outrage that system was rolled back. This is not an answer to the question but rather posing another problem, but the one thing that does really bother me is that sometimes we're really stuck in that sort of scandal-reliant trap. I think as human rights actors there's always the challenge of how we do not rely only on that to see the action we want taken. That's not to underplay, again, the immense amount of impact that coordinated civil society action does have, but I think that's... oh, Anjali, please.

I mean, I was just going to add, agreeing with the panelists ahead of me, that I think we also need to recognize what our role is as we work in these different organizations and sectors, and that we all have a role to play to help to do better. So whether you're sitting in civil society, whether you're in research, whether you're within the public sector or in the private sector, we as individuals, and collectively through our organizations, have a role to play. I appreciate that that's not the easiest, and that also comes down to not only who's deciding which technologies to deploy and in what interventions, but who's making the decisions within an organization, and the opportunities to actually speak up and to
say this is the role that we can actively play. So again, it's not necessarily answering the question fully, but it is, I think, trying to say that we all have a role to play. The accountability issue is actually multifold, right, and doesn't sit in just one space, and as we're all working in it we have the opportunity to do better.

Right. That ties us very well into a question that Genevieve Smith asks. It would be great to hear an example of how communities have been effectively brought in, especially with how certain AI tech is designed and developed, so earlier in that process than the later scandals, as Damini said. What strategies were or could be used, and how was it ensured that processes were done in an empowering way? How do we grapple with tech and digital literacy differences in these processes? And what about ownership?

I'll start us off. I'll say that I don't think we have lots and lots of great examples of this yet, unfortunately, but I do think that we can look to other places, for example in forensics, where there are blueprints for doing this better. And we do see some emerging initiatives: Data & Society has a new algorithmic impact lab that's going to center communities. That's really exciting, and they're going to figure out the methodologies to do that. And we do see small projects that do this, but I think that we also see the danger of what some people have called participation washing, that sort of putting together a quick focus group and checking it off the list or something. That's very different from, as Genevieve raised the point, really empowering communities. In terms of digital literacy, I think that's a good point, but I think that we don't need to get stuck there, because I think that that's not as big of a hurdle as sometimes we
think it is. I think that communities really can speak from their experience without having to know all of the ins and outs of a particular technology, so I don't think we should use that as kind of an excuse that somehow it can't be done. But maybe I'll pass. I don't know, Luis, what do you think, from the ways that families have been involved in forensics?

I think one of the problems is that, because of the impunity and inaction of the states, we tend to put the family in to substitute for the state, and that is a problem. The state has the function to investigate; it is a role of the state. If we start, in my field, taking DNA samples and digging ourselves, doing functions of the state, we have a problem, because that really should be a responsibility of the prosecutors, of the state. So the families were forced to do that because they are desperate; they have no other way to move and to advance. In many cases communities and villages are empowered, but there is a limit to that, at least in terms of investigation, which is my thing. Maybe in other fields of application of human rights there are different examples.

Just to piggyback quickly on that, one thing that I see is the way in which journalists, investigative journalists, have taken on this accountability role, for example through ProPublica's work on COMPAS and things like that. I mean, bravo to the journalists who have done that, but on the other hand that shouldn't be our only mechanism of accountability.

That's a great way to launch into our next question. This is from Nathan Roland. Could the panel discuss what a framework on new technologies needs to accomplish that isn't met today? What do we need to ask of a new framework for understanding and regulating them? What would a framework on new technologies need to do?

I think basically it has to be to discuss new legislation: how we can apply technologies, how not to invade people's lives or, you know, control the population. And there is a total lack of
legislation in many countries around the world about those issues, and the state advances and advances. That's why we need parliamentarians, who are the people who take the decisions, to discuss these kinds of things and to take decisions. Of course it is not going to be easy. There is a very powerful lobby from private companies producing technology. We are now on the way to changing how the field is, but again, that's why it's one of the roles of the human rights organizations, national and international, to be there on the front line promoting discussions, asking our members of parliament how they are going to deal with the lack of any regulation on the application of these technologies in our lives.

I might come in here, Gina. All right, I think maybe bringing together what Luis just shared about the law and the importance of law and regulation, but also where we're talking about the participatory element, right: we have both the opportunity and, again, the challenge here that we're bringing in technologies where there may not be a regulation in place, but there are laws that we can actually rely on, and we should recognize that, even if we're relying on multiple areas of the law to help support us in the development and deployment of these tools. There's also the part from the participatory approach where, as Alexa said, given the risk of participation washing, how do we do that well? I think there's a real opportunity there, in terms of frameworks, to recognize that it's about helping to define what we want the technology to actually do, and, not just from a process but from an outcome perspective, what good looks like. There is the opportunity to bring in people who are most impacted by this to help actually define what that looks like. Again, I'm not saying that that's easy. There's all sorts of
ways to look at it, about when and how you involve people all throughout that process. And even once a tool is designed and deployed, it's recognizing that some of these types of tools, particularly if we're talking about machine learning tools, need to be checked, because you're usually updating with new data and there'll be drift. So what are those mechanisms, but also the checks in place on whether that is still where we think our values are, and whether there are shifts in the context? So I think it's also recognizing that a lot of this is context dependent. While we can work towards, I think, better practices in the interim, there needs to be a sort of sustainability framework and monitoring.

Just to build off of Anjali's point, I think you said something about a lot of the frameworks or principles already existing. I think what is a major limitation for us right now in an effective regulatory framework on new technologies is the fact that it sees these technologies as brand new, and that a lot of the regulatory attempts are also caught in the hype cycles of new technology. We've seen this, again to refer to the EU's AI Act, which we've been most engaged in recently, in EU policymakers' response to all the recent developments in generative AI, ChatGPT, etc., thinking suddenly, "Oh, we've got this draft regulation framework, but now we need to somehow absorb this new technology which we didn't prepare for." A robust regulatory framework wouldn't face that challenge in the face of a new AI development. It would be developed in such a way that it can absorb those developments. And I think the fact that our attempts at regulation are maybe too focused right now on exact applications or uses is not always correct, and really what we need to remember is that we know what outcomes it is
from a human rights perspective that we need to achieve, and these are already embedded in the human rights framework, whether that is a right to privacy or the right to equality and non-discrimination, and that a good, robust regulatory framework will move us towards those outcomes no matter what technological developments come up. So I think maybe I'm not pointing to an exact solution, but at least to a more outcomes-based framing, rather than regulatory frameworks that are a little too tied right now, in my opinion, to whatever is the technology of the hour or the day.

That's a great way to segue into the literal last minutes of our time together tonight, or today, depending on where you are. Damini, I really like this kind of call to action, right, on what we need in terms of frameworks that would get us to more sensible regulation for rights-promoting tech. I want to give each of our other panelists less than 30 seconds. What do we need? Alexa?

Well, one thing I realized is that everyone on this call works in very radically interdisciplinary ways and works in some way with participatory methods, so I think that's a clue that that's something we need.

That's great. Luis, what do we need?

I think it is a collective process. I would like to see the United Nations, I would like to see the academy, I would like to see other key institutions expressing ideas and thoughts about that, because it's impossible for just one sector, it's impossible for just one human rights organization. We need a collective process across sectors of society.

Anjali?

Sorry, was that... yes. So I'm just going to echo everyone and say again that, from my perspective, it is about interdisciplinary, cross-sector and even cross-border work. No one individual or organization or discipline holds, I think, the direction forward, and it is really about coming together on this.

At the risk of being self-promoting, I think we need more conversations like the ones
we've had today. I want to thank this incredible panel. You have been fantastic. I want to thank everyone for attending. We've had great participation, and I'm sorry we couldn't get to all the questions. It was a great interaction. Details of what we will be doing in the future, our seminars and our events, can be found on the Minderoo Centre for Technology and Democracy website, and that's www.mctd.ac.uk. Please follow us on Twitter and other social media platforms at @MCTDCambridge. I want to thank you all again, and enjoy your day wherever you happen to be.

2023-05-25