Deploying VMware vSphere with Tanzu on Dell PowerStore X Model

Hello and welcome. In this video, I will demonstrate the deployment of VMware vSphere with Tanzu on Dell PowerStore X model AppsOn storage. VMware vSphere with Tanzu can be deployed on both the PowerStore T model and the PowerStore X model. However, before enabling Workload Management, there are three configurations to address that are specific to the PowerStore X model.

These configurations are needed to meet VMware vSphere with Tanzu installation requirements. I’ll highlight each of these in detail. The first configuration is to create a PowerStore X model cluster consisting of two or more appliances. VMware vSphere with Tanzu requires a vSphere cluster with a minimum of three ESXi hosts for the Supervisor Control Plane VMs. A single PowerStore X model appliance contains two nodes which deploy as two ESXi hosts. By leveraging the native PowerStore clustering feature, we can combine two or more PowerStore X model appliances in a cluster to meet that minimum requirement.
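The host-count arithmetic behind that requirement can be sketched in a few lines of Python. This is only an illustration of the numbers quoted above (two nodes per appliance, a minimum of three ESXi hosts); the constant and function names are made up for this example and are not part of any Dell or VMware tool.

```python
import math

# Figures taken from the narration; the names are illustrative assumptions.
NODES_PER_APPLIANCE = 2    # one PowerStore X model appliance deploys as two ESXi hosts
MIN_SUPERVISOR_HOSTS = 3   # minimum ESXi hosts for the Supervisor Control Plane VMs


def esxi_hosts(appliances: int) -> int:
    """Number of ESXi hosts provided by a cluster of `appliances` appliances."""
    return appliances * NODES_PER_APPLIANCE


def min_appliances(required_hosts: int = MIN_SUPERVISOR_HOSTS) -> int:
    """Smallest appliance count whose nodes cover `required_hosts` hosts."""
    return math.ceil(required_hosts / NODES_PER_APPLIANCE)


if __name__ == "__main__":
    print("appliances needed:", min_appliances())
    print("hosts from two appliances:", esxi_hosts(2))
```

A single appliance yields two hosts, which falls short of three, so two appliances is the smallest cluster that qualifies.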

In this demonstration, I have two PowerStore X model appliances clustered to yield a total of four nodes or four ESXi hosts. Looking at the vSphere Client, we can also see these four ESXi hosts in a vSphere cluster which was created at the time of the PowerStore X model deployment. The next configuration is to increase the PowerStore X model Cluster MTU or Maximum Transmission Unit. The Geneve Overlay tunnel used in VMware vSphere with Tanzu requires a minimum MTU value of 1,600 Bytes and VMware strongly recommends 1,700 Bytes or greater in their architecture and design documentation. The PowerStore X model Cluster MTU defaults to 1,500 Bytes at installation and this value carries over to the vNetwork Distributed Switch used by VMware vSphere with Tanzu.
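As a rough sketch of the MTU thresholds just described, the following Python function classifies a candidate Cluster MTU against the 1,600 Byte minimum and the 1,700 Byte recommendation. The function and constant names are assumptions made for illustration; the thresholds are the ones quoted in the narration.

```python
# Thresholds as quoted in the narration; names are illustrative assumptions.
POWERSTORE_DEFAULT_MTU = 1500   # Cluster MTU at installation
GENEVE_MIN_MTU = 1600           # minimum for the Geneve overlay tunnel
GENEVE_RECOMMENDED_MTU = 1700   # value VMware recommends or greater


def classify_mtu(mtu: int) -> str:
    """Return how an MTU value compares with the Geneve overlay thresholds."""
    if mtu < GENEVE_MIN_MTU:
        return "too small"
    if mtu < GENEVE_RECOMMENDED_MTU:
        return "meets minimum"
    return "recommended"


if __name__ == "__main__":
    for value in (POWERSTORE_DEFAULT_MTU, 1600, 9000):
        print(value, classify_mtu(value))
```

The default of 1,500 Bytes is classified as too small, which is why the Cluster MTU has to be raised before NSX-T is configured.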

Before we can deploy and configure NSX-T, we need to increase this MTU value in PowerStore Manager. In this demonstration I’m going to use a maximum value of 9,000 Bytes, which is a commonly used jumbo frames value in vSphere. When configuring this value, make sure the physical switch ports support jumbo frames. Looking at the vSphere Client, we can see that the vNetwork Distributed Switch MTU value has automatically been increased to 9,000 Bytes, which more than satisfies the VMware vSphere with Tanzu requirement. The third configuration we have to make is to set the DRS Automation Level to Fully Automated.

By default, PowerStore X model sets the vSphere cluster DRS Automation Level to Partially Automated. To enable Workload Management, the DRS Automation Level must be set to Fully Automated. That wraps up the PowerStore X model-specific configuration.

Now I will continue with the steps to deploy Workload Management within the vSphere Client. In the vSphere Client, I need to create a VM Storage Policy that can be used to enable Workload Management. I’m going to name this Storage Policy vvol-silver. This VM Storage Policy will leverage the VASA Provider tied into PowerStore X model.

I’ll select a QoS Priority of Medium. I can see that my PS-21 storage container is compatible with this VM Storage Policy. I’ve already created the Content Library that will be used by vSphere with Tanzu and I’ve also gone ahead and deployed NSX-T. So now we should be ready to enable Workload Management. To enable Workload Management, I’ll begin by clicking Get Started. I can see that my vCenter Server, tanzuvc1.techsol.local, is already populated, and we’re going to be using NSX-T for this deployment. I’ll click Next. The compatible cluster we are going to use is PS-21. You can see that we meet the minimum requirement of three ESXi hosts.

Here we have four ESXi hosts. This is where we are going to leverage the VM Storage Policy that I created earlier. I’ll use vvol-silver for the Control Plane Storage Policy as well as for the Ephemeral Disks Storage Policy and the Image Cache Storage Policy. Click Next. Now I’ll configure the management network. Once that management network information is entered, I’ll click Next and we’ll move on to the workload network configuration.

Our vNetwork Distributed Switch in this case is DVS-PS-21. Our Edge Cluster configured in NSX-T is the vk8s-edgecluster. The Tier-0 Gateway configured in NSX-T is the vk8s-tier0. Our DNS servers will be populated.

And now for the Ingress CIDRs and the Egress CIDRs. Click Next. This is where we need to supply our previously configured content library. That’s the tkg-content-library. Click Next and we’re just about done.
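One sanity check that can be run on the Ingress and Egress CIDRs before typing them into the wizard is to confirm the ranges do not overlap. The sketch below uses Python's standard `ipaddress` module. The CIDR values are hypothetical documentation ranges, not the ones used in this demonstration, and `find_overlaps` is a helper name invented for this example.

```python
import ipaddress
from itertools import combinations


def find_overlaps(named_cidrs: dict) -> list:
    """Return pairs of names whose CIDR ranges overlap.

    `named_cidrs` maps a label (for example "ingress") to a CIDR string.
    """
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in named_cidrs.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    planned = {"ingress": "192.0.2.0/26", "egress": "192.0.2.64/26"}
    print(find_overlaps(planned))
```

An empty list means the planned ranges are disjoint; any returned pair names two ranges that collide.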

I’m going to leave the Control Plane size as Small and I’m not going to provide an API Server DNS Name at this time. We’ll click Finish. I can now monitor the creation of the Supervisor Cluster. This process will take several minutes, and once it has completed we’ll pick back up.

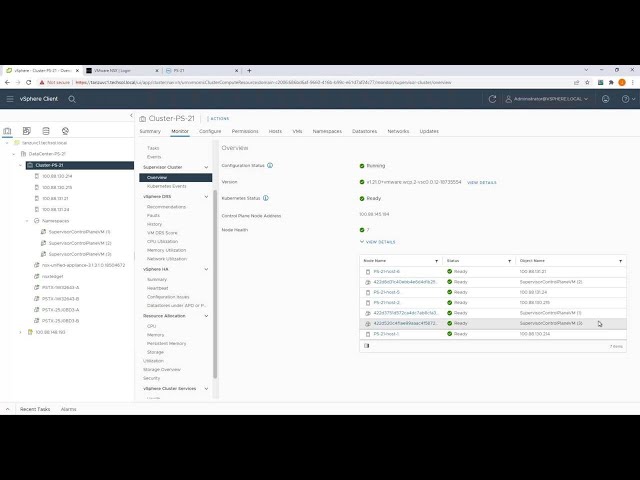

I can see that the Namespaces resource pool has already been created. The Supervisor Control Plane VMs have also been created. There are three of them and they will be powered on and configured shortly. OK, we’re back. That Workload Management deployment took about 15, maybe 20, minutes, and it looks like our Workload Management cluster is up and running and healthy.

Let’s take a look around. I’ll go to the Hosts and Clusters view and, under Monitor, open the Supervisor Cluster overview. We can see our Control Plane Node Address. This is going to be helpful when we log in shortly and deploy some containerized applications. We can also view the details of the node health.

So you can see that we have our three Supervisor Control Plane VMs distributed across three of the ESXi hosts. We can also see the version of Kubernetes that’s running: 1.21.

If we look at the configure tab, there’s more Supervisor Cluster information in there about the Control Plane VMs, Control Plane Size, Namespace Service, and the Tanzu Kubernetes Grid Service. The networking page also has some good information. This is where we can validate all of the network information we provided for the management network as well as the workload network.

Some of these values we can change. Some we can’t without redeploying Workload Management. So if you’re having any kind of network problems, such as ingress or egress problems, this is a good place to look to verify the network configuration that was provided for each. Ingress and egress settings are going to be under the workload network.

We can also see the storage policy that was assigned. This was the VM Storage Policy we provided for the control plane nodes, the ephemeral disks, and the image cache. That was the vvol-silver VM Storage Policy and that ties back through the VASA Provider to the Storage Container and individual vVols on our PowerStore X model. Let’s go ahead and create our first namespace. To do that, I’ll go to Workload Management, Namespaces, and we’ll create a namespace. We’re going to create this on the only cluster that we have and we’ll call this demo.

So here is the demo namespace that was created. We should also be able to see that the namespace was created under our cluster and our resource pool. There it is: the demo namespace. In my demo namespace, I’m going to go ahead and assign some storage. I’m going to add the vvol-silver storage, which ties to the vvol-silver VM Storage Policy.

Now let’s go ahead and log in and deploy our first demo application. We’ll open up a command prompt and issue the kubectl vsphere login command. Before I deploy my demo application, I want to switch to the demo namespace, or context in this case, and now I’ll deploy my demo application. This demo application is called Hello Kubernetes. It has been created. We can now see back in the vSphere Client that the application has been deployed.
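The command-line steps in this part of the demo (log in, switch context, apply a manifest) can be sketched as a small Python script that only assembles and prints the commands, without running them. The server address, user name, and manifest file name below are placeholders assumed for illustration, since the narration does not state them; the helper function names are also invented for this example.

```python
import shlex


def login_cmd(server: str, username: str) -> list:
    """Build the kubectl vsphere login command for the Supervisor Cluster."""
    return ["kubectl", "vsphere", "login", f"--server={server}",
            "--vsphere-username", username]


def use_context_cmd(namespace: str) -> list:
    """Build the command that switches kubectl to the namespace context."""
    return ["kubectl", "config", "use-context", namespace]


def manifest_cmd(verb: str, manifest: str) -> list:
    """Build a kubectl apply or delete command for a manifest file."""
    if verb not in ("apply", "delete"):
        raise ValueError("verb must be 'apply' or 'delete'")
    return ["kubectl", verb, "-f", manifest]


if __name__ == "__main__":
    # Placeholder values: replace with the Control Plane Node Address,
    # a vCenter SSO user, and the actual manifest file.
    steps = [
        login_cmd("192.0.2.10", "user@vsphere.local"),
        use_context_cmd("demo"),
        manifest_cmd("apply", "hello-kubernetes.yaml"),
    ]
    for argv in steps:
        print(shlex.join(argv))
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere; passing each list to `subprocess.run` would be one way to execute them on a machine that has the vSphere plugin for kubectl installed.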

This particular application is configured with a replica set of three I believe. Let’s take a look. Yes, three replicas. Alright so that application has been deployed. I’ll go ahead and deploy another one.

How about BusyBox? OK, the pod for BusyBox has been created. Alright, so those applications are deployed. We can go ahead and tear them down by issuing a similar command.

Instead of apply, it’s going to be delete. There goes BusyBox. I’ll also go ahead and delete the Hello Kubernetes application. OK, now that those applications have been removed, let’s go ahead and create a persistent volume claim using a new VM Storage Policy that I’ll assign to the namespace. So to get started, I’m going to create a new VM Storage Policy and I’ll call this vvol-gold. Again, this is going to leverage the VASA Provider tied to the PowerStore X model storage and the Storage Container on it.

The gold policy is going to leverage the High QoS Priority. We can see that our Storage Container PowerStore PS-21 is compatible. Review and Finish. Now let’s take a quick look at the .yaml file for the persistent volume claim.

It’s called my-gold-pvc.yaml. You’ll be able to see why I created that new VM Storage Policy because the .yaml for our persistent volume claim is going to be leveraging a storage class called vvol-gold which also ties to the VM Storage Policy that I just created.

The size of this persistent volume claim and the resulting persistent volume should be around 286GB. Before I can use that new VM Storage Policy, I need to add it to my namespace called demo. So we’ll edit storage and we’ll add vvol-gold.
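For readers who cannot see the screen, a manifest along the lines of my-gold-pvc.yaml can be sketched by rendering it from Python. The claim name and the vvol-gold storage class come from the narration. The exact size string (`286Gi`) and the `ReadWriteOnce` access mode are assumptions, since the video only says the volume is around 286GB, and `render_pvc` is a helper name invented for this example.

```python
def render_pvc(name: str, storage_class: str, size: str,
               access_mode: str = "ReadWriteOnce") -> str:
    """Render a minimal PersistentVolumeClaim manifest as YAML text."""
    return (
        "apiVersion: v1\n"
        "kind: PersistentVolumeClaim\n"
        "metadata:\n"
        f"  name: {name}\n"
        "spec:\n"
        "  accessModes:\n"
        f"    - {access_mode}\n"
        f"  storageClassName: {storage_class}\n"
        "  resources:\n"
        "    requests:\n"
        f"      storage: {size}\n"
    )


if __name__ == "__main__":
    # Size and access mode are assumed values, not read from the video.
    print(render_pvc("my-gold-pvc", "vvol-gold", "286Gi"))
```

The `storageClassName` field is what links the claim to the vvol-gold VM Storage Policy, which is why that policy must be added to the namespace before the claim is applied.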

Now I’ll go back to the command prompt and issue the command to instantiate the gold persistent volume claim or PVC. The my-gold-pvc was created. If we look under the cluster container volumes, we can now see that 286GB persistent volume claim.

In the command prompt we should be able to see this as well. There it is. Lastly, in PowerStore Manager, I’ll navigate to Storage, Storage Containers, drill down into the PowerStore PS-21 storage container, and look at Virtual Volumes. I’ll sort by the Provisioned column, and there it is: our 286GB persistent volume. If we add the column called IO Priority, we’ll be able to see that our 286GB persistent volume is leveraging the High QoS Priority.

What this means is that if there’s I/O contention on the array, our persistent volume with High QoS Priority will receive a higher share of I/O and lower latency compared to volumes with a Medium or Low priority. Now I’m going to go ahead and delete this persistent volume claim. It has been deleted. If we refresh our vSphere Client, we can see that the persistent volume has disappeared from Container Volumes. We no longer have persistent volumes or persistent volume claims in the demo namespace.

In PowerStore Manager, we can see that the 286GB first class disk has also been removed. That concludes this video. Please visit the Dell Technologies Info Hub for more videos and white papers.

Also check out Dell.com/PowerStoreDocs for user manuals and product documentation. Thank you very much for watching.

2022-04-24