Critical AI Issues in Europe and Beyond

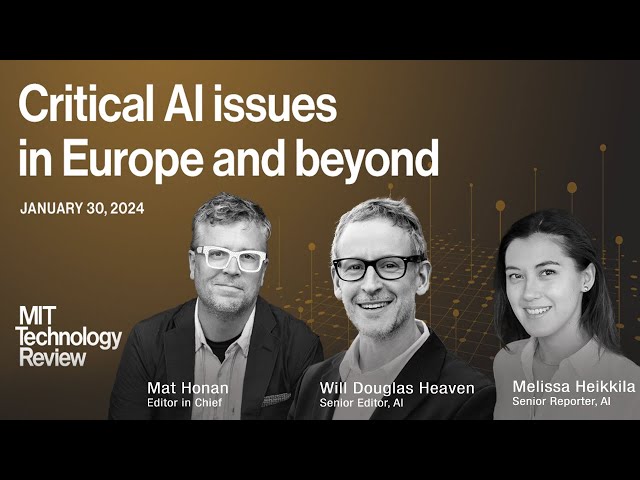

Hello, and welcome, everybody, to a special LinkedIn Live. We are going to be talking about critical issues in Europe and beyond today. My name is Mat Honan. I'm the editor-in-chief at MIT Technology Review. And I'm here with my colleagues, Melissa Heikkilä, who's the senior reporter for AI, and Will Douglas Heaven, our senior AI editor.

Today, we're going to talk a little bit about global AI innovation. We're going to talk a little bit about the regulatory environment in Europe, in the US, and other places. And we're also going to talk about some of the rising concerns and rising awareness, I think is fair, around responsible AI.

So we're going to try and cover a lot of ground. If you have questions, please do drop them in the chat. We're going to try and get to them. And also, I want to make sure that you know that we've got EmTech Digital in London coming up on April 16 and 17. If you enjoy today's event, you are really going to get a lot out of that. So I'd encourage you to attend.

Melissa and Will, hello. Thank you for being here. Hi. Hey. Hi. Well, let's just go ahead and get right into it.

I think we live in a global economy. The tech industry is global. So what's different, what's unique about Europe's AI industry compared to the rest of the world? What is it that might be different from something that you might find happening in the United States, China, or wherever?

So, I mean, let me jump in. Obviously there are differences, and I think we'll get to those, but I think it's worth remembering that the similarities are probably more than the differences, right? It's not as if Europe is reacting to AI that's being built elsewhere. I mean, many of the biggest household names in the AI industry are based in Europe, of course, DeepMind, Stability AI, and much of the research as well is coming out of universities.

So the research that went into diffusion models behind image makers like DALL-E and Stability came from a German university. But of course, the big, big difference is regulation, and there's a much greater appetite for regulation in Europe, and different ideas about who should do the regulating. Well, we're going to get into that in depth.

But before we do, Melissa, I wonder if you have thoughts on the same. What is it that's different about Europe? Yeah, I think Europeans tend to be very anxious that Europe doesn't have the sort of big unicorns or big tech companies like Google. But I think they've realized that that's not going to happen and they have to take another approach. And like Will mentioned, Europe has some really groundbreaking regulation like the GDPR, which is data protection law, and now the AI Act. And so European startups, they're thinking about these things from the get go. They're thinking about how to protect our personal data.

They're thinking about how, with the AI Act, to comply with copyright law. And even though Europe doesn't have the sort of investment that the US has, it still has the talent. And we're seeing a really exciting new crop of generative AI startups like Mistral in France and Aleph Alpha in Germany.

And they're really making an impact all over the world.

I'm curious, so when you talk to people who are starting companies there in Europe, in the EU, are they talking about GDPR at the outset? Are they talking about the AI Act? Are they thinking compliance just as they're getting off the ground? Melissa, maybe you could take that.

Yeah, absolutely. I mean, a European startup can't exist without thinking about these. And increasingly, any business, any AI company, anywhere in the world that wants to do business in the EU, which is the world's second largest economy, will have to start thinking about these things and start complying with these things. You know, the AI Act will start kicking in; it will enter into force in a year.

Well, let's talk about that a little bit. I'll stick with you on that. But I do think it's interesting because I've covered Silicon Valley for 20-something years. And the mindset here is often like, let's run really fast and then let the regulation catch up, hopefully when we can lobby to control it.

But so tell me a little bit about the AI Act. Not everybody is going to be familiar with this. So maybe you could start by describing basically what the basis of it is and what kinds of effects it will have and just help us understand it. Thanks, Melissa. Yeah, my pleasure. So the AI Act is a big deal.

It enters into force later this year. And basically, the idea is to tackle the riskiest AI uses. So AI that has the most potential to harm humans. So if you're an AI company, or a service provider who wants to use AI to, you know, affect education or healthcare or employment, then you're going to have to comply with this law. And it basically requires more transparency from AI providers: you have to share how you're training your models, what data went into them, and how you comply with copyright law.

And the most powerful AI models such as Gemini or GPT-4 will have extra requirements. Yeah, and so, sorry Mat.

Can you tell me a little bit what those extra requirements are? And also, I'm curious because I think people know there's an executive order around AI here in the United States. What sets the two apart?

So those extra requirements, you know, they have to share how secure their models are, how energy efficient, those kind of things. What sets this apart from the executive order in the US is that this is binding.

The executive order is a pinky promise. And this will require tech companies to actually show authorities and regulators, this is how we're doing it. This is how we're collecting our data and using it.

Whereas in the US, you know, the White House wants tech companies to think about or develop watermarking. The AI Act requires people who create deepfakes to have watermarks and disclose that people are interacting with AI-generated content.

So there's a question from the audience that I would like to get to. I think this is good. Who's in charge of that compliance? Are there governmental agencies? Is it just up to the companies? Who enforces the compliance?

So tech companies, it's up to them to share this data. But the EU is setting up an AI office which will regulate and enforce this law.

Gotcha. Okay, and then maybe to both of you. So we've talked a little about the AI Act that is coming online later this year. What else do you think we're going to see in terms of regulation in 2024?

I think, I mean, we're already seeing a little bit of it, but it's also worth remembering that EU countries are not afraid to enforce these regulations, and particularly against US companies. I mean, the symbolism there is obviously not going to be lost on anyone. The AI Act is going to be extremely powerful, but with existing regulations too, we've mentioned that GDPR also has measures to basically bash US companies if you wish. So Italy, a year ago, some people watching may remember, threatened to ban ChatGPT because Italy's data protection agency decided it violated the GDPR, and that hasn't gone away. We sort of hadn't heard much news about that, but Italy has been running an assessment in the last year, and has come back and given its findings, I think, this week to OpenAI and given them 30 days to basically come up with their defense, again threatening to ban OpenAI's flagship product. So there's a little bit of saber rattling there for sure, and a little bit of standing off across either side of the Atlantic. But I mean, if I were OpenAI, I'd be seriously scared about that, and it's interesting to see which direction that will go in.

And maybe we'll see more of that, especially now that the AI Act comes into play.

Please.

Another initiative, I think, worth mentioning, which has kind of been overshadowed by the AI Act: the EU is also working on an AI Liability Directive, which is basically, you know, if the AI Act is there to try to prevent AI harms, the AI Liability Directive kicks in when the harms have already happened, and you could see really big fines. And I remember a couple of years ago when this was first proposed, this was the bill that tech companies were actually scared about. The fines, I mean, I forget what they are for the AI Act, but for GDPR, they're up to like 4% of a company's earnings. I mean, this is like seriously, seriously scary if those fines were enacted.

And so one big question, I don't know, Melissa, if you have an answer to this, but it might just be like a question to throw out there. But I mean, behind all the regulations that we have in Europe is this political will. I mean, people by and large in Europe favor greater regulation. But if the upshot is that maybe US companies don't release their products in Europe or release a sort of watered-down version of their product and people feel like they're losing out on this wave of cool new tech, will that political will go? And will that have an impact? Maybe not this year, it's too soon. But further down the line, will regulation in Europe go more the way of the US because we want the shiny toys? I don't know.

I wonder about that.

Well, I'd say if you look at the GDPR, when that came out, people were freaking out. They were not happy with it at all. Now, it's sort of the gold standard of data protection laws around the world. Even states in the US, California, lots of other countries like India, China have adopted GDPR-esque rules.

And so the hope is that the AI Act will become a sort of gold standard, and lots of other countries will align with it. And if you look at the document, basically, companies have a lot of wiggle room. So I don't think that'll be a big ask.

So we have a question from the audience that I'd like to get to.

Both of you are based in London. What will the AI Act mean for people in the UK?

Well, ironically, the UK left the EU to get more control. But then the EU remains one of its biggest trading partners. And if the UK wants its superb AI companies like DeepMind to do business in the EU, they'll have to comply with the AI Act. That's the short of it. We're sort of somewhere in the middle.

And I think that's not lost on people. The CEOs of US tech companies have been paying visits and sitting down behind closed doors with our political leaders, lobbying so that if there are any regulations, they should be favorable. I think we'll end up somewhere between the EU and the US. And also the UK is interesting because it's trying to position itself, and this happened just in the last few months or last year, when the UK hosted a big AI summit, as a leader in, you know, AI safety: we don't want to build AI that's going to be dangerous or just detrimental in big scary ways for society.

I suspect largely because some big influential tech leaders have sort of, you know, have the biggest voice in the room, and politicians have sort of listened to maybe some of those scary stories. But I mean, we'll wait and see how that actually plays out. A lot of it is just talk for now.

I'm going to get to something that's been one of the biggest things in the news in the past week, which is that we saw in the last few days Taylor Swift, there were non-consensual deepfake nudes of her created on one platform. They were then posted on another platform, where they went viral and stayed up for several hours. Melissa, you had this great open letter that you wrote.

I'm curious if you could break it down for us. To start off, if anybody doesn't know, maybe catch us up on the news. But then, could regulation have prevented something like that from happening? Or do you think that this is going to be the kind of thing that spurs regulation? Tell us a little bit about what went down and what's to come because of it.

Yeah. So last week, the sexually explicit deepfake videos of Taylor Swift went viral on Twitter. I think over a million people saw it.

It was widely shared. And Twitter, oh, X, the platform formerly known as Twitter, their content moderation policies and systems clearly failed. But that has really sparked a conversation in the US and around the world really about deepfakes. I think people are really understanding, you know, deepfakes are old technology. They've been around for the better part of a decade. But I think this is the perfect storm where everyone is aware of AI.

And this wave of generative AI has really made deepfakes easy. It's very, very easy to create a very convincing deepfake just based on one screenshot or one photo from social media. And so this conversation has really invigorated lawmakers, I'd say. In the US, the White House made a statement, politicians on both sides of the political spectrum are now talking about this, and there's a bill that's been reintroduced. So there's a lot of momentum to regulate this technology. As for whether regulation would have prevented this: potentially. Some places, some states such as New York, prevent the sharing of these deepfakes; other places prevent the creation and sharing. Of course, it's really hard to enforce these laws if you don't have a federal bill.

And I think that is definitely what is needed. Other places regulate deepfakes through disclosure or data protection. And yeah, the harder we can make the creation and sharing of these models, the better.

Thanks, Melissa. If folks haven't had a chance, please do go read her open letter. It was really fantastic. I'm going to change gears again a little bit here.

Will, another story that we published recently, in our 10 Breakthrough Technologies issue, was your look ahead at 2024 and the big questions you saw out there for AI. Can you walk us through what you think some of those big questions are that we're going to have to address this year?

Sure. Yeah, I mean, it's all the big questions. They're not necessarily new. They're the big questions that people have been talking about in AI for a decade.

And, you know, now they're just more urgent than ever. Now they're sort of, you know, I kind of make the case that, you know, this used to be maybe the problem of the people building this tech or some AI researchers. But, you know, now it's everybody's problem because this tech is out there in the world.

And just to link this to what Melissa was saying as well, I mean, it's interesting that we don't really know at what point we should regulate this tech. Should it be Microsoft's problem? Because allegedly most of the deepfakes of Taylor Swift were made by, you know, a free-to-use Microsoft tool. So should Microsoft have, you know, they've now closed the loophole.

You can't do it. But should they have anticipated this misuse and stopped it before it happened? And that's really, really hard to do because one of the amazing things with this technology is that it has capabilities that you don't really figure out until loads and loads of people use them, by which time a lot of the damage is done. And there are also free, open source versions of this kind of tool.

So if Microsoft closes the loophole, it doesn't mean people will stop using it. So that's just to say that with these big questions, there aren't easy answers to them. We still don't have answers to how we handle bias in these large models. I mean, bias is just a factor of the world and you train these models on that world.

They incorporate bias. You can put band-aids on there to try and mitigate the worst of it. But that's not going away. Copyright is a massive problem that we're just seeing now in the headlines more because the New York Times, one of the biggest media brands, is going head to head with OpenAI, one of the big tech companies in this race. And we don't know how that's going to play out.

And at the heart of it are really deep questions about how this tech actually works. And I think we're really, really going to get into the weeds, hopefully in interesting ways, if this were to go to court to try and figure out whether or not a large language model does copy IP when it's trained, or whether it's doing something other than copying. I mean, at the very least, I think our notion of copyright and what it is to share content online is going to change.

But on that specifically, I mean, the tech companies are all making the argument that this is fair use. This is no different than if I were to go into a library and read every book and then walk outside and you ask me a question and I just tell you stuff based on what I've just read. Is that argument seen differently, getting back to the topic of our conversation here, in Europe, in the UK, than it is in the US?

Possibly. We certainly, you know, have stricter ideas about what's fair use, maybe not necessarily on copyright, but what's fair use in terms of using people's data. I mean, if it's personal data, then this is what Italy's complaint against OpenAI comes down to.

They think that it sort of broke some rules in training ChatGPT on personal data. And I imagine some of the copyright questions will be part of that, but I'm not sure. Melissa, do you know specifically around copyright?

Um, I'm not entirely sure.

I think it's an open question everywhere, honestly.

I think so too. Well, moving on a little bit, I want to get to one of the questions here from the audience. Someone is asking, to what extent could the EU regulations limit or bias AI innovation? I think if I'm interpreting that correctly, they're not asking about bias in AI, they're asking, you know, how could regulations perhaps hinder the industry there in the EU?

Well, this is one of the main anxieties EU lawmakers have. You know, they want to regulate, but they also want to have the innovation. They want a piece of the pie. And so it's worth noting that the AI Act only kicks in for maybe five to 10 percent of AI applications with the highest risk.

So if you're doing facial recognition in public places, you'll probably have to comply. But if you're doing some sort of, you know, B2B email app, I think you'll be fine. Also worth noting that open source AI is exempt. So that should really help. And if you look at Mistral, you know, a lot of the AI startups in Europe are open source.

Do you think that regulation and responsibility are compatible? I think that's sort of the deeper question there: can we have responsible AI that doesn't hinder innovation?

Yeah, absolutely. Absolutely. And I think with all the harms we've seen, it's clear that we need some sort of baseline, we need to have a conversation, we need to have agreed rules, or else we'll just see, you know, the tech harms of the past decade get exponentially worse with this new AI age.

I mean, you hear that a lot more. I mean, obviously, we've said already, Melissa and I are based in London. But I hear that a lot more talking to people in the US, that responsibility and innovation is presented as an either-or.

And I don't think that's how it's seen in Europe. Certainly, none of the people putting these regulations in place are trying to sort of hamper innovation. Fostering innovation is a big part of the motivation for doing this.

And I think the right thing to do with technology that moves this fast is to ask questions and then act, rather than act and then ask questions. And we may find that we end up in a much better place and get there more quickly, get to the place that we all want to be more quickly if we have some regulations in place. And just one small thing worth bearing in mind, the companies in Europe that have sort of come up in this regulatory framework under GDPR, for example, it's just a matter of course, that you would have people on your staff that handle that to make sure that you are complying. And it's just part of doing business. It's not a massive hurdle that you have to overcome.

It's just how things are done. And I think it's when US companies possibly sort of bang their heads against that, that it's seen as hampering innovation. But it's a little bit of work and maybe it won't be looked at that way. I think it's a popular lobbying tool to say that it's going to stifle innovation. And it's interesting. You typically see the big US companies saying, OK, look, you can't regulate us because it's going to stifle innovation.

Or, like, we want to define the type of regulation that we're going to have. We have to have regulation. Please regulate us in these very specific ways that we want to be regulated. But I do think that the world learned some lessons from social media and the proliferation of the internet and things that happened that we weren't really ready for.

Melissa, I'm going to come back to you with a question from the audience specifically wanting to know more about the exemptions that apply to open source and why those exist. Is the reasoning there just that the code can be inspected, or is there something else?

Yeah, I think it's simply that they already comply by default because they're transparent.

Yeah. I see. We've talked a little bit about competitiveness, and this is a question I wanted to try and get back to here while we have a few more minutes. Actually, this is kind of a fun thing to get to.

Let me just ask because it just came up. Can we teach AI to regulate itself? Anybody want to take that one?

Probably. I wouldn't be surprised if people were already doing that.

Yeah. Who watches the watchmen, right? That's what you always come back to. I'm going to go back to the competitiveness question.

At the top of the show, you mentioned a lot of these big companies that have come out of Europe. It's also true that you mentioned DeepMind, which is now a part of Google. And I think there's a question of how can European companies, how can more European companies compete against some of these big US tech incumbents, if either of you have a view on that.

Yeah. I think, well, they need to keep growing. I think the problem is all these big tech companies are full of European talent, are full of excellent, excellent researchers coming from Europe. And Europe needs to find a way to bring them back to Europe or not lose their talent, not have this brain drain from Europe. And I don't know what the solution to that is.

Is it attracting more investment so that salaries can be more competitive? Is it, you know, doing more marketing to show the European way of life? I don't know. But anyway, Europe needs to attract the kind of talent and have, you know, more startups like these startups that we have. We should boost them, definitely. And yeah, just encourage more of that to happen.

Yeah. Let's go ahead, Will. I was just going to agree. The brain drain is a problem. I just think there's more money being thrown around in the US, but that might change.

I mean, I don't think it's regulation necessarily in the long run that's going to hold Europe back.

Well, to that point, do you think that there needs to be different business models? Do you think that there needs to be more government investment, more venture capital focused in Europe? How do you think you solve that brain drain, which sometimes comes down to money?

I was at this conference a couple of weeks ago, EmTech Europe, which was in Athens, and I spoke with an Italian AI founder, and he was saying that Europe has all of these EU funds, like Horizon, and how great they are. If you know how to write a good pitch, that's a great source of money. So initiatives like that are great.

Well, thanks so much to both of you.

But while we've still got you, we have a couple more minutes for questions here if someone in the audience wants to try to get one last one in. But before we do, I want to bring up that you're both going to be at EmTech Digital in London on April, let me make sure I get the date right here, 16th and 17th. I'm glad I checked.

Are there any speakers or sessions that you are really looking forward to that you want people to know about? Will, you can take this one to start with.

Well, yeah, we're going to have, you know, people from some of these massive European companies that we've been talking about. I mean, obviously, DeepMind, and you can put questions to them yourselves.

I hesitate, but there's possibly a super secret guest, hopefully, fingers crossed, who I'm not sure I'm actually allowed to mention yet. But I mean, all this stuff we've been talking about today is a teaser of what's coming at the event.

So if you want to go straight to the source and ask questions of the people who are making these decisions and will be impacted by those decisions, then do sign up.

Melissa, how about you?

I'm looking forward to my session on AI regulation with Dragos Tudorache, who was one of the lead politicians pushing forward the AI Act. And also a really great session with Angie Ma from Faculty, which is a great AI startup in the UK. And she's going to talk about how to upskill in the AI age. Personally, I want to know what she wants to say.

So looking forward to that session.

And now we're almost out of time, so I'm going to try and get to this last audience question. And this comes back to so much interest in regulation. And I think this is a good question. Because one of the dangers of the regulation is we might have one regime in Europe, one in the United States, another in China or the rest of the world, where there may be a patchwork of those things.

So the question is, do we need to worry about countries that are not subject to the same level of AI regulation gaining competitive advantage over European or other countries? And I think there's another question there which the person didn't ask, but I'm interested to know: how do you compete if there is this patchwork of regulation? How do you comply with all of it? And maybe take that first part first. Melissa, would you want to do that?

Yeah. So, well, if you think of China, China has a lot of AI regulations. They're regulating deepfakes, recommendation algorithms, you know, they also have that. And to your competitiveness question, it is a big problem if there's a patchwork.

And so I think what we really need is some sort of global convergence. And I think that's the European vision, that they're offering this solution that will be that. There's also a lot of excitement to do something globally on a global stage at the UN level or the OECD. So I expect that will be a big thing we'll be talking about this year. Amazing. Okay, I think yeah, I think there is a push to make more of a global regulatory framework because, I mean, it's just a headache if you have to deal with these different regions.

Yeah. OK, well, guys, Will, Melissa, thank you so much for joining us today. Thanks to everybody out there on LinkedIn who tuned in. As a reminder, I'm going to say one more time, we've got EmTech Digital London coming up in April, on April 16th and 17th.

You can see Will and Melissa there on stage, talking to really smart people, asking really smart questions better than the questions I ask, I promise. And that's it. That's all we have time for today. Hope to see you on April 16th.

Thanks for watching!

2024-04-13