Color on the Web and Broadcast - Chris Lilley (W3C), ICC DevCon 2020

Hello, I'm going to talk to you today about color on the Web and Broadcast, and we'll see the ways that these interact. I'm Chris Lilley. I'm a technical director at W3C, the World Wide Web Consortium. So, topics for today.

Firstly, the prehistoric Web, how it all started. Then, what happened when color management arrived? How the Web is different from everything else that we've been hearing about today? How do Web and Broadcast interact? CSS Color 4, which brings us wide color gamut, and how we can build on that to mix and manipulate colors. High Dynamic Range, and lastly, some future challenges. So back in the beginning, 1989, the Web was started. People weren't using it for a few years, but that's when the initial thing happened. And I'm going to go immediately back to broadcast because in 1990, there was an interesting specification: ITU Recommendation 709.

Previously, all television standards had been country specific. There's one set for the US, one set for Europe. This was the first one to bring High Definition television, which was worldwide.

So, this specification defines some chromaticities, a D65 white, and an opto-electrical transfer function with a linear segment to avoid noise. It was scene referred and the overall gamma was 1.2, because the assumption was you look at television in the evening in a dim or dark room. And there's the chromaticity diagram. As you can see, the gamut is nothing special.

It's not bad, but it's not amazing. This was typical of cathode-ray tube displays at the time. And speaking of cathode-ray tubes, this is what they look like. If you were using the web in the early nineties, it would be a big, heavy monitor, 14 or 15 inches and probably 256 colors, indexed color. Lucky people like myself had "Truecolor", but most people did not. And gamma was a big issue in those days.

On the Mac, there was a 1.45 correction, which with an assumed 2.6 gamma gave the often quoted 1.8 Mac gamma. Silicon Graphics had an even bigger correction at 1.7, giving an overall 1.4, and PCs and Unix used whatever the cathode-ray tube did; they basically didn't do any correction whatsoever. So it depended really on your brightness and contrast settings.

Almost as soon as I arrived at W3C in 1996, there was a workshop on printing from the web. And most of that was indeed about printing. It was about embedded fonts and this sort of thing, high quality print, but one proposal was called A Standard Color Space for the Internet. And it proposed the same chromaticities and D65 white as BT 709, but this was display referred. There was an "Inverse OETF", again with a linear segment.
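To make the "linear segment" idea concrete, here is a minimal Python sketch of the widely published sRGB transfer function pair. The function names are my own, and the constants are the commonly quoted sRGB ones rather than anything shown in the talk.

```python
def srgb_to_linear(v: float) -> float:
    """Decode a gamma-encoded sRGB component (0..1) to linear light."""
    if v <= 0.04045:
        return v / 12.92                      # linear segment near black
    return ((v + 0.055) / 1.055) ** 2.4       # power-law segment


def linear_to_srgb(lin: float) -> float:
    """Encode a linear-light component (0..1) as an sRGB value."""
    if lin <= 0.0031308:
        return 12.92 * lin                    # linear segment near black
    return 1.055 * lin ** (1 / 2.4) - 0.055   # power-law segment


# Mid-grey in encoded sRGB is much darker than 50% in linear light.
print(round(srgb_to_linear(0.5), 4))
```

The linear segment avoids the infinite slope a pure power law would have at zero, which is the same motivation given for the BT 709 curve.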

Overall system gamma is now 1, because the assumption is normal office lighting. And because of that, and because of the screens of the time, a 5% viewing flare was incorporated. And as you can tell from the chromaticity diagram, it's the same because the transfer function doesn't show up in this as any different. Six months later, we had the first recommendation for Cascading Style Sheets and, pretty much at my insistence, it used sRGB.

Most people were like: RGB, we know what RGB is, it's what you give to the screen. And it makes colors. And I said, no, it has to be actually defined what it means, colorimetrically, because later on that will be important.

Everyone's like, yeah, sure. Okay. And you can see from this wording that people had very low expectations. Basically, "please try to have a consistent gamma" is what the spec says. And beyond that, it's "this is what color it should be, but it probably won't be". Then color management arrived. Firstly, on the Mac with ColorSync, then Windows NT.

I remember using the Kodak CMS. You had to buy that afterwards. It didn't come with the operating system.

But in Windows 98 and 2000, there was ICM and shortly afterwards, ICM version two, which I think came from Canon Information Research Systems in Australia. And then much, much later, Linux with GNOME and KDE got their own color management. So finally we said, aha, all different platforms have color management. Amazing. All right. And then of course mobile happened.

Mobile phones went from something that you use to call or send a text message, to something you could browse the web on. And they were small, underpowered, low resolution devices, and they certainly didn't have color management. So we were back to square one. Now I want to cover how the Web is different from all the other color solutions you've been hearing about. In industry, if you're doing commercial printing, if you're making some colored objects like plastics or fabric or print, if you're making paints or dyes, there is a paying customer.

Therefore there is some money in the system to pay for the production. Design is finished before production. Production is centralized. There is a factory or a print shop where all of the products are made, which means that you can do QA on them because they're right there. And because there is money in the system, buying reliable, calibrated instruments for consistent color QA is not that much of a problem. You can afford to do it.

And in particular, you can't afford not to do it, because the cost of getting a batch wrong is high. And thus we have this sort of situation, commercial printing press working day in, day out, giving consistent color. Contrast this with color management on the Web, even assuming you have a color-managed screen. Firstly, the content and the browsers are free. So the only money in the system is from advertising and it goes to the advertiser. The design is customized in various ways to the end-user's display: the resolution, the colors available, various other factors.

The production is distributed. It doesn't happen in a central location but on each user's machine, on their laptop, their tablet, their phone. And end-user calibration is rare.

The device may arrive with some sort of factory calibration, but the user will not have even a colorimeter, let alone a spectrophotometer. The result: very colorful, but rather messy. And this brings us to fairly recent times. CSS Color 3 was therefore sRGB only, with 8 bits per component. As you can see, the integers are a zero to 255 scale we use to specify color. Some browsers were color managed, either just for images or also for the CSS part.

Firefox, unfortunately, just threw the data at the screen. So if you had a wide gamut monitor and you had what was supposed to be an sRGB color, it would look too saturated. So, broadcast. What was happening in this space?

Well, one of the big changes was the move to digital. Content was captured digitally and it was displayed digitally. The Digital Cinema Initiative, moving on from projecting in cinemas using film stock to using digital projection, defined a color space which these projectors would use. They defined the chromaticities; they had a rather weird white, which was a greenish white.

They had, notably, a monochromatic red at 615 nanometers. So they were starting to move to a wide gamut. The luminance was only 48 candelas per square meter, but that was fine because you were sitting in a pitch black cinema. And here's the chromaticity. I've also shown sRGB/709 for comparison.

You can see that the red is definitely more saturated, it's right on the spectral locus. And it's further off towards the infrared end of the tongue. The green is almost on the spectral locus.

It couldn't get much further over and still be parallel to the straight line at the top of the chromaticity diagram. So this means we've got good greens, yellows, oranges, reds. Blue is the same as 709 or sRGB, but we can see that there's some impact on cyans and magentas as well. So why would we care? We're not in a cinema! Why would we mind what they do? Well, that standard got picked up elsewhere. Consumer devices that were HD started to have branding requirements.

The UltraHD Premium requires you to display at least 90% of the DCI P3 gamut. (That's 90% in area, not volume, unfortunately). And now we have VESA with the DisplayHDR set of specifications.

Now we're going to talk about HDR later. But I should note here that they have several tiers, 400, 500 etc., based on the peak luminance. And for basically all but the lowest one, they require conforming devices to display at least 90% of DCI P3. So this was coming into the commercial consumer market.

Then Apple came out with something called Display P3. Now, actually, this is almost the same as sRGB in terms of the transfer curve, the viewing conditions, the D65 white, the only thing that's different is the primaries, which came from P3. Also, it was specified as being display-referred. But most significantly, they started shipping phones, tablets, laptops, even watches, which had very very good coverage of the P3 color space. And they were factory-calibrated. And they have a color management system.

So when you got a device new from the shop, they actually had a profile for your screen that would bring it into conformance with P3. Here it is again, just to see that the only difference from the previous slide is that the strange white point has disappeared and we have D65 now. Lastly, moving on, there was another ITU recommendation, 2020, which came out in 2012 because they expected that by 2020, they would be able to display this huge wide gamut. Well, we all know how 2020 turned out, but getting on to the gamut: it is ultra wide, and all three primaries are monochromatic.

10 or 12 bits per component, because with a wide gamut like that, obviously you need more bits to avoid banding. The opto-electrical transfer function was defined and a non-matching gamma 2.4 EOTF, D65 white again, a dim surround because again, the assumption was people only watch television in the evenings. Display-referred, which is good. And this was used for UltraHD, so 4K and 8K broadcast and streaming. Now looking at this chromaticity diagram, we can see this has a substantially larger gamut. Between the red and the green, we're going right along the spectral locus there.

So we're as saturated as we can be. Again, the green can’t be moved further over towards the more cyan light greens, because otherwise it would start to deviate from the straight portion. And blue has also had a nice upgrade; it's right on the spectral locus. So the magentas and cyans are definitely better, and some of the blues are better too. So in practice, how was this used? The content is still mostly mastered in DCI-P3.

There aren't really screens which can display the entirety of Rec.2020 gamut though some of them can display up to 90%. Also, HDMI only supports 2020. It doesn't support P3. So therefore, what happens is it's mastered in P3 and then it's converted into the 2020 color space, what they refer to as being in a 2020 container.

And then for HDR, which we will talk about later, there is some metadata to say what the mastering gamut volume is. Volume, yes, not just area. So CSS Color 4: what happened? Why could we suddenly produce a wide gamut improvement for cascading style sheets? Well, firstly, one of the things that hadn't changed really was SLR photography. Sure, people were taking photographs with an SLR. They were processing it in something like ProPhoto, a very wide gamut color space, and they were displaying it on a fancy high-end monitor. But most people weren't doing that.

Professionals were doing that, the general public was not. So this wasn't really a driver. Apple rolling out Display P3 devices on the other hand was definitely democratization.

The general public could have these devices and it wasn't just Apple. Dell, HP, Microsoft Surface all had wide gamut laptop screens. Samsung, Pixel and others started coming out with wide gamut phones. Most of them had DCI P3 in that case. And as I mentioned, there were wide gamut HDR TV/movies/streaming TV services. And lastly, the mobile devices got more powerful and they started catching up and having a color management system as well.

And, as I mentioned, once Apple brought out these devices, Safari very rapidly supported CSS Color 4, at least for the Display P3 space. This is the syntax that we have. I use the color function to declare what color I want. Display P3 is one of the options, obviously, ProPhoto RGB for the photography crowd. Rec2020, because we will need that. And all of those have the same color.

I've given it in LCH there. Since I mention LCH, quick reminder: this is the polar form of CIE Lab, and you can use it directly in CSS Color 4 as well. There, I've given the background color. You notice this says 50%.

That's because, for CSS compatibility, the lightness, which is on the zero to 100 range, is expressed as a percentage. Apart from that, just very standard. The second example here has 50% opacity. And in the third example, after the comma, there is a fallback.

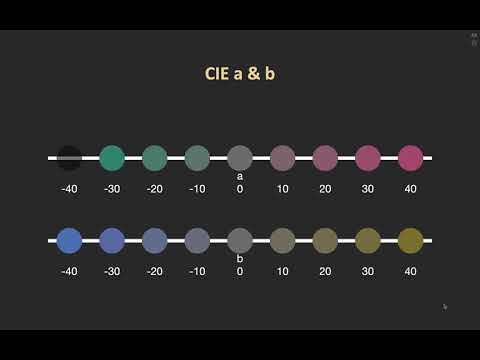

So if this LCH is outside of the gamut of your screen, then the sRGB fallback color will be used. And lastly, when converting from Lab to LCH, there's often a problem with the hue angle because very close to neutral values, tiny changes from noise in the a & b channels can give you wildly different hues. So sometimes we return Not A Number for neutral hues. And you can use a & b as well. Here is a representation of the axes. You can see that trying to manipulate a color directly in a & b is about as usable as trying to manipulate it in RGB: do I move this slider to have a bit more pinkish color or a bit more skyblue, a bit more mustard yellow...

It's not a good way to select colors, but it is of course what most commercial instruments provide. And so we do have it. This is the same example as previously, but in Lab. I should also note that Lab and LCH are chromatically adapted to a D50 white point, partly for ICC compatibility and partly for compatibility with most measuring instruments. But that's not all. We can also use ICC color profiles.
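The Lab to LCH conversion and the Not A Number hue for near-neutral colors can be sketched in a few lines of Python. This is only an illustration: the function names and the `eps` threshold are my assumptions, not values taken from the specification.

```python
import math


def lab_to_lch(L, a, b, eps=0.02):
    """Convert CIE Lab to its polar form (L, C, H in degrees).

    eps is an assumed threshold: below this chroma the hue is
    dominated by noise, so it is reported as NaN.
    """
    chroma = math.hypot(a, b)
    if chroma < eps:
        return (L, chroma, math.nan)
    hue = math.degrees(math.atan2(b, a)) % 360.0
    return (L, chroma, hue)


def lch_to_lab(L, C, H):
    """Convert LCH back to Lab; a NaN hue is treated as exactly neutral."""
    if math.isnan(H):
        return (L, 0.0, 0.0)
    h = math.radians(H)
    return (L, C * math.cos(h), C * math.sin(h))


print(lab_to_lch(50.0, 0.001, -0.001))   # near neutral: hue is NaN
print(lab_to_lch(50.0, 0.0, 10.0))       # a pure +b color
```

Carrying NaN through, rather than an arbitrary angle, lets later steps such as hue interpolation notice that the hue carries no information.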

You point to them like this, in the same way you would point to a Web font. There's an ICC color profile. You declare a name by which you'll refer to it. And then you can just go ahead and use that with as many parameters as it needs. So for CMYK we need 4; this is a FOGRA39 example. I mean, we’re not restricted to just 4 parameters either.

Here is a CMYK Orange Green and Violet example, using FOGRA55. So we have this capability. The next specification was CSS Color 5, which puts this to work. You can mix and manipulate colors. Fairly recent specification. Here's an example.

I want to mix teal and olive together, and my mixture will be 65% teal and thus 35% olive. And by default, the mixing is done in LCH color space. So here's a diagram showing this, you can see the teal and the olive. The dotted line represents the mixture curve. And there we have our mixture. You can also mix just the individual components.

Here, I've described two colors, which I've given names tomato and sky. And then I want to mix from tomato, which is 75% of its hue, but the lightness and chroma will remain the same. And there we see again. This time we have a circular dotted line because the same chroma will be used regardless of the hue. So the chroma of the sky blue doesn't matter, and you can see that we're taking the shortest hue arc.

There's also the option to specify the longest arc, if that's what you want. So to summarize, you can mix colors with CSS in different color spaces. Like one can be ProPhoto RGB, and the other one can be CMYK. Because both of those have a colorimetric definition, they can be converted to XYZ, then Lab, then LCH for mixing.
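As a rough sketch of what mixing in LCH involves, the Python below interpolates lightness and chroma linearly and takes the hue along either the shorter or the longer arc. The function names, the `arc` parameter, and the example LCH triples are all hypothetical, and it does not handle a Not A Number hue.

```python
def mix_hue(h1, h2, t, arc="shorter"):
    """Interpolate from hue h1 to hue h2 (degrees); t=0 gives h1, t=1 gives h2."""
    delta = (h2 - h1) % 360.0              # anticlockwise distance, in [0, 360)
    if arc == "shorter" and delta > 180.0:
        delta -= 360.0                     # the other direction is shorter
    elif arc == "longer" and 0.0 < delta < 180.0:
        delta -= 360.0                     # deliberately go the long way round
    return (h1 + t * delta) % 360.0


def mix_lch(c1, c2, weight1, arc="shorter"):
    """Mix two (L, C, H) colors; weight1 is the share of c1, from 0 to 1."""
    t = 1.0 - weight1                      # how far to travel from c1 towards c2
    lightness = c1[0] + t * (c2[0] - c1[0])
    chroma = c1[1] + t * (c2[1] - c1[1])
    hue = mix_hue(c1[2], c2[2], t, arc)
    return (lightness, chroma, hue)


# Illustrative LCH triples only, not the values of any named CSS color.
color_a = (50.0, 40.0, 350.0)
color_b = (70.0, 20.0, 10.0)
print(mix_lch(color_a, color_b, 0.65))                 # 65% of color_a
print(mix_lch(color_a, color_b, 0.65, arc="longer"))
```

Colors given in other spaces would first be converted to LCH, as described above, before a function like this is applied.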

You can specify the long or short arc. Now, note when you swing the hue around, it's very easy to go out of gamut because the gamut is an irregular shape. So something that was in gamut, you add 90 degrees to it, and it may no longer be in gamut.

And therefore we are also specifying gamut mapping. This is an ongoing task, but at the moment, experimentation shows us that reducing the LCH chroma until the color is in the gamut, seems to give a good enough gamut mapping. Remember all of the colors in CSS are individual. It's not like we're trying to take an image, a photographic image and bring all of that within gamut. We're bringing individual colors within gamut. So effectively, this is like a relative colorimetric rendering intent.
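A minimal sketch of the chroma-reduction idea, in Python, might look like the following. It targets sRGB only, and several things are my assumptions rather than anything from the specification: the function names, the use of a bisection search, the tolerance `eps`, and the Bradford-adapted D50 matrix, which is a commonly published one.

```python
import math

D50_WHITE = (0.96422, 1.0, 0.82521)   # white point assumed for Lab and LCH

# Commonly published Bradford-adapted matrix: XYZ (D50) to linear-light sRGB.
XYZ_D50_TO_LINEAR_SRGB = (
    (3.1338561, -1.6168667, -0.4906146),
    (-0.9787684, 1.9161415, 0.0334540),
    (0.0719453, -0.2289914, 1.4052427),
)


def lch_to_linear_srgb(L, C, H):
    """LCH (D50) to linear-light sRGB; results may fall outside 0..1."""
    a = C * math.cos(math.radians(H))
    b = C * math.sin(math.radians(H))
    fy = (L + 16) / 116
    fx = fy + a / 500
    fz = fy - b / 200

    def f_inv(t):
        d = 6 / 29
        return t ** 3 if t > d else 3 * d * d * (t - 4 / 29)

    xyz = [w * f_inv(f) for w, f in zip(D50_WHITE, (fx, fy, fz))]
    return tuple(sum(m * v for m, v in zip(row, xyz))
                 for row in XYZ_D50_TO_LINEAR_SRGB)


def in_srgb_gamut(L, C, H, eps=1e-4):
    """True if every linear sRGB component lies in 0..1, within eps."""
    return all(-eps <= c <= 1 + eps for c in lch_to_linear_srgb(L, C, H))


def reduce_chroma_to_gamut(L, C, H, steps=30):
    """Keep L and H fixed and bisect on chroma until the color fits in sRGB."""
    if in_srgb_gamut(L, C, H):
        return (L, C, H)
    lo, hi = 0.0, C            # assumes the neutral color at this L is in gamut
    for _ in range(steps):
        mid = (lo + hi) / 2
        if in_srgb_gamut(L, mid, H):
            lo = mid
        else:
            hi = mid
    return (L, lo, H)


print(reduce_chroma_to_gamut(50.0, 200.0, 140.0))
```

Because lightness and hue are left alone and only chroma shrinks, each color is handled on its own, which matches the point that CSS colors are individual rather than part of an image.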

Another thing that people want to do with colors is to find the most contrasting one. This is a common accessibility need. To date, what happens is that people design the website, then they put it in a testing tool and it will tell them if the contrast is too low on some particular combination, but this is a manual process. And it is much harder when there's a lot of customization going on, when people can choose certain color schemes or they might be using dark mode or light mode.

So we've added a function which calculates the contrast for you. This is the Web Content Accessibility Guidelines contrast formula. It's simply the luminance of the lighter color divided by the luminance of the darker color.

And there's a 0.05 addition there for viewing flare, which also conveniently means we never divide by zero. Here's an example.

I've got color contrast of wheat versus a comma separated list of other colors, tan, sienna, etc. And here's the calculation. Firstly, we work out the luminance of the background color, wheat, and then the luminance of the others and the contrast ratio.
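The calculation just described can be sketched in Python as follows. The helper names are mine; the hex values are the standard CSS named colors wheat, tan and sienna, and only those two candidates from the list are included here.

```python
def relative_luminance(hex_color: str) -> float:
    """Relative luminance of an sRGB color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    channels = [int(h[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB transfer function to get linear-light values.
    r, g, b = (c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
               for c in channels)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color1: str, color2: str) -> float:
    """Lighter luminance over darker luminance, each with 0.05 added for flare."""
    l1, l2 = relative_luminance(color1), relative_luminance(color2)
    return (max(l1, l2) + 0.05) / (min(l1, l2) + 0.05)


def most_contrasting(background: str, candidates):
    """Return the candidate with the highest contrast against the background."""
    return max(candidates, key=lambda c: contrast_ratio(background, c))


WHEAT, TAN, SIENNA = "#f5deb3", "#d2b48c", "#a0522d"
for name, color in (("tan", TAN), ("sienna", SIENNA)):
    print(name, round(contrast_ratio(WHEAT, color), 3))
print(most_contrasting(WHEAT, [TAN, SIENNA]))
```

Black against white gives the maximum possible ratio of 21, since (1 + 0.05) / (0 + 0.05) = 21.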

From here, you can see that the red color is going to give us the best contrast, a contrast of 5. There are various regulatory requirements for minimum contrast for different sizes of text. This allows that to happen automatically, which is a big boon to website designs. Okay. So high dynamic range: what's happening there? I should point out that CSS Color 4 is specifically wide gamut, but it's standard dynamic range.

Why do we need high dynamic range? Well, it's used everywhere. It's used on your television for films and sports and so on. Gaming consoles are using HDR now. TVs are using it.

Laptops are starting to have HDR at the high end, and it's not just for video. There are also still image formats coming. And we want CSS to be able to match colors in that, so you can have the whole experience. Here's another broadcast standard. This is Recommendation 2100.

I hope that isn't a prediction of when it will actually be implemented. It uses the same 2020 gamut and the same 10 or 12 bits per component, same white point, same viewing conditions. But what it introduces is 2 new transfer curves.

The first one is Hybrid Log Gamma. This basically is a standard SDR gamma function up until media white. And then it has a logarithmic function after that. It's scene-referred, and it uses relative luminance. So that means it places the diffuse white at 0.75, so three quarters of the code space is used for the standard dynamic range, and the other quarter for high dynamic range, giving you 2.5 stops of highlights or 12 times diffuse white. This means it can be used in a range of viewing environments from dim to bright. If you're wanting to watch a film at night, you can do that in the darkness of your room and then at noon the next day, you can watch the football and you turn the brightness up of course, so you can still see the screen. So to summarize Hybrid Log Gamma, it's brighter displays for brighter environments. In contrast, Perceptual Quantizer is different in various ways.

It's reference display referred rather than scene referred. It uses an absolute luminance scale from zero to 10,000 candelas per square meter. Therefore, the diffuse white varies depending on the program content, but approximately it's going to give you 5 stops of highlights. And again, it's assuming a dim viewing environment, dim to dark. If you want a different viewing environment, you'll actually need to do color re-rendering.
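To show what an absolute luminance scale means in practice, here is a small Python sketch of the Perceptual Quantizer curve using the constants published for it (SMPTE ST 2084, also used in Rec. 2100). The function names are my own.

```python
# Published PQ constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Map a non-linear PQ signal (0..1) to display luminance in cd/m^2."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(luminance: float) -> float:
    """Map display luminance in cd/m^2 (0..10000) to a PQ signal (0..1)."""
    y = (luminance / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


for nits in (100, 1000, 10000):
    print(nits, round(pq_inverse_eotf(nits), 3))
```

Each signal value corresponds to one fixed luminance regardless of the display, which is why a different viewing environment calls for re-rendering rather than simply turning the brightness up.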

So the point of the Perceptual Quantizer is brighter displays for more highlights, more piercing highlights. Basically, one of them tends to be used for live action stuff or things which haven't been color graded, like content from phones and that sort of thing. And the other one is used for carefully graded things like films, movies. If we want to use HDR, can we still use CIE Lab? And the answer is no, not really.

Firstly, even for wide color gamut, there is a well-known problem of non-linearity in the blue area. If you increase chroma, then the hue will be seen to shift, although the hue angle is remaining the same. Lab is primarily used in the printing industry for reflective, low luminance, print gamut stuff.

It is for reflective color samples, where you're looking in a viewing booth and you don't have a very high luminance level. It's hard to extend the L function for specular whites. It has been done up to 400, but it doesn't necessarily work that well. And it has been under-tested, under-evaluated, for wide gamut colors, where it's known to overestimate the Delta E; even with Delta E 2000, it's overestimated for highly saturated colors.

This next spec I'm going to show you is an unofficial draft. I mentioned that Color 4 is restricted to standard dynamic range. I wanted to make sure that the same approach could be used for HDR. We weren't painting ourselves into a corner.

So this is my proposal, which has gone to the CSS Working Group once, but it hasn't yet been adopted. And what does it add? Well, it adds BT 2100 obviously, with both transfer curves. And then it adds two new things, 2 new color spaces, which in some ways are an HDR upgrade to Lab. One is the slightly badly pronounceable JzAzBz, and also the polar form of it. This works on cone fundamentals, the LMS space, and then applies a PQ transfer function on that, or PQ-like. And then ICtCp, which is increasingly used in broadcast nowadays. It's defined by Rec 2100, actually.

And that again uses PQ on an LMS color space. And lastly, this specification defines how you would composite SDR and HDR content. You might, for example, have an HDR film, but then you have a web-based pop-up, which is giving you actor bios and this sort of thing, or upcoming movies or whatever it is, that sort of web content add-on. And that might well be SDR. So we need to define how to composite it together. Luckily, there's an existing ITU report, which explains how to do this.

So it's fairly simple. So future challenges. In general, the Web platform is moving towards wide color gamut, and starting to get HDR. But there are areas that have older assumptions. Compositing, for example, when you have alpha transparency and compositing foreground and background objects.

It uses sRGB and worse, it uses gamma-encoded sRGB. Yes, we know that's wrong. It was done that way because that's the way the Web worked when it first came out. And it was continued that way because of Photoshop compatibility; with your various blending modes, these are all done in gamma-encoded RGB. The ideal, though, would be to composite in linear light. So it's just XYZ.

That specification needs to be upgraded. Gradients. Again, they use alpha premultiplication, and again, they work in gamma-encoded sRGB space, which means you tend to get a darker color than you wanted midway between two color stops. The ideal would be keeping alpha premultiplied, but using a perceptually linear space, and preferably one which is chroma preserving.
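The difference between compositing on gamma-encoded values and compositing in linear light is easy to see numerically. The Python sketch below blends two opaque sRGB colors both ways; the function names are hypothetical, and it uses linear-light sRGB rather than XYZ, which gives the same result for colors inside the sRGB gamut.

```python
def srgb_to_linear(v: float) -> float:
    """Decode a gamma-encoded sRGB component (0..1) to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(lin: float) -> float:
    """Encode a linear-light component (0..1) as an sRGB value."""
    return 12.92 * lin if lin <= 0.0031308 else 1.055 * lin ** (1 / 2.4) - 0.055


def blend_gamma_encoded(fg, bg, alpha):
    """Blend directly on the encoded values (the legacy behavior described)."""
    return tuple(alpha * f + (1 - alpha) * b for f, b in zip(fg, bg))


def blend_linear_light(fg, bg, alpha):
    """Decode to linear light, blend there, then re-encode as sRGB."""
    mixed = (alpha * srgb_to_linear(f) + (1 - alpha) * srgb_to_linear(b)
             for f, b in zip(fg, bg))
    return tuple(linear_to_srgb(m) for m in mixed)


red, green = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
print(blend_gamma_encoded(red, green, 0.5))
print(tuple(round(c, 3) for c in blend_linear_light(red, green, 0.5)))
```

For a half-and-half blend of red and green, the gamma-encoded result has components of 0.5 while the linear-light result re-encodes to roughly 0.735, so the legacy blend comes out noticeably darker.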

So LCH or JzCzHz, rather than say, Lab. You should still have the Lab option. But that means if you have a wide hue difference between two color stops, you'll start going close to the neutral axis.

And canvas, which is the immediate mode graphics rendering for HTML. Again, gamma-encoded sRGB, built in assumption of 8 bits per component. The canvas people are starting to add other color spaces, copying the ones from CSS Color 4, which is a good move.

They're also adding 16 bit or half float sRGB. Why would they do that? Well, the zero to one range gives you the sRGB gamut and then negative numbers or numbers greater than one could be used both for wider color gamut and possibly for HDR. This is still being experimented with.

And lastly, the CSS Object Model, this is how JavaScript will interact with the web page. Again, it assumes colors are 8-bit sRGB. Although it's called an object model, it actually works by serialization. You pass around strings and then reinterpret them. And there is a huge legacy code base. Basically every script on the web that manipulates color now knows that color is 8-bit sRGB, and will have to be upgraded.

So in Color 4, we define the serialization for that more closely than has been done before. We went for extreme backwards compatibility for all of the existing syntactic forms, but we also added serialization for the new color(), Lab and LCH forms. Moving beyond that, there's work on a Typed Object Model. Instead of getting a string back, which represents a color, you would get a color object and you can then call methods on that, to do things like color space conversion, or whatever. There was also a need for expert review. Color wasn't a strong point of W3C necessarily.

So when there were discussions, not enough people were taking part. We now have a Color on the Web Community Group, which has been running for a couple of years, and it is a great way to give feedback, and also W3C joined the ICC. And lastly, I want to bring to your attention an upcoming workshop. This will be a virtual workshop on Wide Color Gamut and High Dynamic Range for the Web. The actual event will happen in several weeks across April, 2021. Speaker submissions should be by end of January of 2021.

And we're putting up information on that now, but meanwhile, if you're interested in speaking, contact me and I can help you out. Thanks very much.

2021-01-20 12:22