Cloud Agnostic or Devout? How Cloud Native Security Varies in EKS/AKS/GKE

- [Brandon] Good afternoon. I hope you've had a great conference so far. And I know that there's been a lot of information you've had to absorb over these past couple of days, and you might be, honestly, a little overwhelmed with all of the technical knowledge that you've taken in. So I thought one way that we could bring it down a notch as we end this event is to do a little guided meditation. And I know what you're thinking.

The answer is, yes, I'm serious. So everybody close your eyes. (gentle music) I'd like you to clear your mind from all distractions.

Breathe in and breathe out. I want you to visualize a bright, fluffy cloud. That cloud has three familiar letters on it: A, W, and S. Now, your organization has a lot of data in that cloud, and that might scare you at first, but don't worry, assuage your concerns because you all have a great team back at your organization who understands the security of AWS and knows how to deal with it.

Or at least I hope you do. So, everything is just fine. (gentle music stops) Now, suddenly, out of nowhere, there is another set of clouds that show up, that show Azure, GCP, Oracle, Alibaba, oh my. And it's not like these clouds are any more or less secure than AWS. It's not like there's a unique problem with these clouds, but your team doesn't necessarily know how to secure those different cloud providers. And all those clouds get together into this awful multi-cloud dark thunderous blob, and you feel like you're gonna scream.

Now, how are we gonna deal with this? You might be saying, "Oh, Kubernetes! I've heard about this technology several times at this conference. Isn't that a multi-cloud, cloud agnostic solution that would allow me to go multi-cloud and consistently apply security controls across each of the cloud providers?" Can Kubernetes help you weather this storm? Well, unfortunately for you all, this talk will be my argument that it cannot help you weather that storm, at least not automatically. You all can open your eyes, and welcome to this talk: Cloud Agnostic or Devout? How Cloud Native Security Varies in EKS, AKS and GKE. I am Brandon Evans, and I am an independent security consultant, as well as a certified instructor for the SANS Institute and a course author of SEC510: Public Cloud Security: AWS, Azure, and GCP, SANS's multi-cloud security course.

Here's the regular disclaimer you've seen before. These are my opinions, not RSA's. Hopefully, we're gonna have a lot of fun with it. So why did I make this talk? Well, a big reason why I made this talk is because of the popularity of Kubernetes. Everybody's heard of it at this point in this conference, right? Everyone? Well, it has been around for quite some time at this point.

A 2021 survey from the Cloud Native Computing Foundation found that 3.9 million Kubernetes developers were out there. Think about that. 3.9 million Kubernetes developers! Think about how many applications they developed there. And that is a 67% increase year-over-year according to the CNCF.

Now, this is not just a technical matter, but also one that leadership has recognized. Red Hat has found that 85% of IT leaders think that Kubernetes is at least very important. Now, I am someone who has gone through several different hype cycles in technology. We've seen virtualization, containerization, serverless. We've had some technologies that have stuck around. And now we have AI.

We also had blockchain. That hasn't really stuck around very well now, has it? So we have to evaluate whether or not this hype is justified, whether or not Kubernetes is a solution that is really going to last and whether or not it's going to be useful for our organizations. And it's really hard to answer that question if you haven't played around with it much at all yourself.

Now, I am a person who has not played around with Kubernetes that much. I know a lot about multi-cloud, but prior to this talk, I had not actually launched a Kubernetes application in the cloud to validate some of the assumptions that I've been given, such as that Kubernetes is cloud agnostic and that it could help us solve the multi-cloud problem. So part of the reason I made this talk is to force myself to learn a little bit more about Kubernetes. What was my conclusion? Largely what I expected, that Kubernetes is really complicated for basically no reason, and I don't really like it that much, but that's neither here nor there.

The main conclusion I made from a security perspective is that Kubernetes is not the one-size-fits-all solution for the issue of multi-cloud and cloud agnosticism. Now, in my course, I talk about how multi-cloud, even though I talk about it extensively and I do a lot of research in multi-cloud, a lot of consulting in multi-cloud, multi-cloud is not necessarily an ideal thing to opt into. You may end up multi-cloud by merging or acquiring an organization, but you may not want to actively pursue the route of multi-cloud because more platforms means more footprint, means we need more folks who are able to secure those different platforms, people that have that kind of subject matter expertise. So my goal for this talk was to show that Kubernetes cannot necessarily solve that problem and in some ways might actually make it worse.

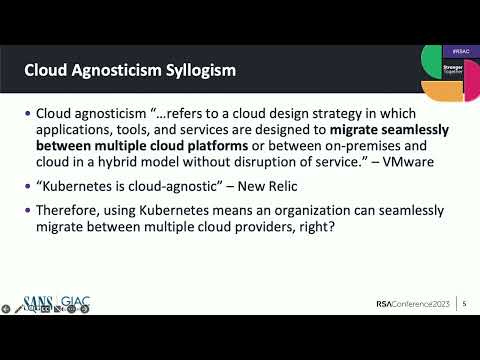

So let's define cloud agnosticism and understand where the concept of cloud agnosticism falls apart. I'm using definitions from third parties to be as objective as possible. VMware has stated that cloud agnosticism refers to a cloud design strategy in which applications, tools and services are designed to migrate seamlessly between multiple cloud providers or between on-premises and cloud in a hybrid model without disruption of service. In short, a cloud agnostic technology allows you to move a workload from point A to point B. And even if those are different clouds, even if one's on-prem and one is in the cloud, there's no functional difference. The workload doesn't even need to know where it is running in order to function properly.

That is what cloud agnosticism should mean, according to VMware. Now, another thing that a lot of people say is that Kubernetes is cloud agnostic. I think most people with familiarity with the term know that Kubernetes is one of the top technologies that people discuss when they talk about cloud native, cloud agnosticism, and New Relic is one organization that agrees with that definition. So here we have two statements and that should lead to the logical conclusion that therefore, using Kubernetes means that an organization can seamlessly migrate between multiple cloud providers, right? Doesn't that logically make sense that if the application doesn't even know about what cloud provider they're running on, therefore, you should be able to pick it up and drop it off elsewhere? Unfortunately, I will argue that that is not the case.

Here are some of the reasons why that is not the case. The main one is that cloud agnosticism is not inherited. Just because Kubernetes is a cloud agnostic technology does not mean that the applications that you run on Kubernetes are necessarily cloud agnostic. And there are not a whole lot of things you can do about this because the vast majority of your applications in the cloud are going to take advantage of other cloud services. Who runs containers in the cloud without integrating with other cloud services? Does anybody just run a VM or a container and not have it talk to S3 or Azure Storage or DynamoDB? I didn't think so. Maybe one person, but not a whole lot of people go that route.

A lot of developers at least wanna take advantage of those cloud baked-in services and they don't wanna just run a VM in the cloud that is isolated from everything else. They wanna integrate with these great services. Amazon has over 175 fully fledged services.

Why are we using Amazon if we don't take advantage of any of them, many developers would argue. Now, if you are integrating with those different services that are not just compute, but are databases or storage solutions or AI/ML solutions, the way you integrate with those services is not consistent across the different cloud providers. Can I have an application that talks to DynamoDB and then move it into Azure seamlessly? Definitely not. There is no service in Azure that works exactly the same way that DynamoDB does.

The APIs that are used for these services are highly specific and highly proprietary. So if you utilize these technologies, you cannot simply move from one cloud to another. So, to make your application cloud agnostic, you can't just use Kubernetes. You also have to choose one of these two options. Either, one, you get rid of all of those cloud-specific service integrations, which some people do but it is rare, and simply run your containers in the cloud without any integrations, or you rewrite your application three times to support integrations with AWS versus Azure versus GCP. Do either of those solutions sound nice? I would argue no, both from a security perspective and from a development perspective.
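To make the "rewrite it three times" point concrete, here is a minimal sketch of what reading one object looks like behind a common interface. The `ObjectStore` interface, the class names, and `load_report` are hypothetical and only for illustration; the SDK calls inside each adapter are the standard object-read calls in boto3, azure-storage-blob, and google-cloud-storage, imported lazily so the sketch loads without those packages installed. Authentication and permissions, which also differ per provider, are left out entirely.

```python
from typing import Dict, Protocol, Tuple


class ObjectStore(Protocol):
    """Hypothetical interface the application codes against."""

    def read(self, container: str, key: str) -> bytes: ...


class S3Store:
    def read(self, container: str, key: str) -> bytes:
        import boto3  # AWS SDK, imported lazily

        response = boto3.client("s3").get_object(Bucket=container, Key=key)
        return response["Body"].read()


class AzureBlobStore:
    def __init__(self, account_url: str, credential: object) -> None:
        self.account_url = account_url
        self.credential = credential

    def read(self, container: str, key: str) -> bytes:
        from azure.storage.blob import BlobServiceClient  # Azure SDK, imported lazily

        service = BlobServiceClient(self.account_url, credential=self.credential)
        blob = service.get_blob_client(container=container, blob=key)
        return blob.download_blob().readall()


class GcsStore:
    def read(self, container: str, key: str) -> bytes:
        from google.cloud import storage  # Google Cloud SDK, imported lazily

        return storage.Client().bucket(container).blob(key).download_as_bytes()


class InMemoryStore:
    """Stand-in backend so the sketch can be exercised without any cloud account."""

    def __init__(self) -> None:
        self._objects: Dict[Tuple[str, str], bytes] = {}

    def write(self, container: str, key: str, data: bytes) -> None:
        self._objects[(container, key)] = data

    def read(self, container: str, key: str) -> bytes:
        return self._objects[(container, key)]


def load_report(store: ObjectStore, container: str, key: str) -> str:
    # Application code only sees the interface, never the provider SDK.
    return store.read(container, key).decode("utf-8")


demo_store = InMemoryStore()
demo_store.write("reports", "q1.txt", b"quarterly numbers")
print(load_report(demo_store, "reports", "q1.txt"))
```

Even in this toy form, three adapters have to be written, tested, and secured separately, which is the cost being described here.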

Creating cloud agnostic applications, in my opinion, is a bad idea. Why? Well, you could run a container in the cloud and you can set up your own database that isn't powered by AWS, Azure, or GCP, but you'd have to deal with patching that database, configuring that database, the life cycle of the virtual machines and containers that scale up that database, access control to it. And your organization may not be in the business of focusing on creating those services. It's likely that your organization uses services like this to get something else done. So why, if you're already trusting the cloud, would you want to reinvent the wheel when the cloud providers are doing this work for you in the first place? So I would not opt to avoid those services.

And the second option, the choice to rewrite the application multiple times for interoperability, that's arguably even worse because there's going to be a lot of error that comes about when you make those rewrites. And further, the services have vastly different security configurations that are very hard to bring across one provider to another. It's very complicated. So I would argue that you should not go cloud agnostic in general. But now from a leadership perspective, if you are hearing that your organization has a cloud agnostic application, you would be completely correct or completely logical to think that if you have a cloud agnostic application running in AWS, Azure or GCP, those three versions of the application should be consistent in their security.

It might be super secure, it might be the least secure thing ever, but it should be consistent because it's agnostic. But that assumption falls apart when we talk about this topic because not only are those cloud services that you integrate with highly specific, but even the Kubernetes engines themselves have differences with security implications. So even if you're truly just running containers in Kubernetes, you'll have different security implications across the big three cloud providers. So it's important that we understand these differences and that's exactly what we're gonna tackle today. Before we get into this, I'm pretty sure this will be a little redundant 'cause we've had several Kubernetes talks at this conference. But really quickly to make sure everybody's on the same page, what is Kubernetes? Kubernetes is a container orchestration platform that was developed by Google and released in 2014.

It allows you to spin up nodes in order to support your application with a variety of different services. It auto-scales, it does health checking, it downscales when there are unhealthy instances, and it has a lot of other bells and whistles like a secrets manager and shared file system and much, much more. Since it was released in 2014, it's been taken over by the Cloud Native Computing Foundation, which I referenced earlier, the CNCF.

And each of the big three cloud providers has its own Kubernetes engine. Amazon, I believe, was the latest to the party. They have the Elastic Kubernetes Service, which came out I think around six years ago.

Azure has the Azure Kubernetes Service and Google, reasonably, has the Google Kubernetes Engine. Google invented Kubernetes in the first place. I sure would hope that they have a Kubernetes engine. But these engines are not consistent or agnostic. And we're gonna talk about why that matters from a security perspective. To have this conversation, we're gonna reference the Center for Internet Security's benchmarks, the CIS Benchmarks.

And these provide step-by-step instructions about how you can harden various platforms. For example, Kubernetes. It's a project that is founded or maintained by the Center for Internet Security, but it also has community collaboration.

You can submit changes to the CIS if you want. And I like the CIS benchmarks. They're not perfect, but they're something that we can use to harden our systems in a relatively consistent way.

Now, they have four benchmarks that we're gonna reference: the Kubernetes benchmark, the EKS benchmark, the AKS benchmark, and the GKE benchmark. Now, if Kubernetes was truly cloud agnostic, wouldn't we just have one benchmark, just the Kubernetes benchmark? Doesn't the fact that these different benchmarks exist prove that there is some inconsistency? And the benchmarks bear that out. So let's get into that first inconsistency. And this relates to cloud identity and access management and how it relates to the Google Kubernetes Engine. And if you take nothing else away from this talk other than the thesis about cloud agnosticism, this inconsistency is my favorite and the scariest one, in my opinion.

So first, let's do a quick review about Cloud IAM and what it does. Cloud IAM allows for us to have our applications and our users access other services within the cloud provider. So if you want your Kubernetes application to integrate with DynamoDB, you need to give that application the corresponding IAM permission. And it will get some related credentials and then they'll be able to log in and talk to DynamoDB. Now, these identities can be assigned using cloud IAM to EKS and AKS nodes.

And the benchmarks say that you should utilize these dedicated service accounts and give them very specific permissions. But the key thing here is that, by default, no permissions are given to EKS and AKS. You have to explicitly grant your Kubernetes application in those two platforms permissions. You can already probably guess where this is going. That statement is not true for Google at all.

So before we get into the specifics, we need to understand a design decision that Google has come up with, which is a different set of roles, primitive roles versus predefined roles versus custom roles. So back when Google Cloud first came out, they only had primitive roles. These were the most basic roles that you could have. It allowed you to own a project, view a project, edit a project.

Then they came out with predefined roles that were service-specific. So allowing you to view storage or view a database or delete a BigQuery table, et cetera. And then there's also custom roles, which are something you can define yourself, kind of out of scope for this conversation, but those are the three options you have here.

Now, let's dive a little bit deeper into those primitive roles. We have the owner role that can do anything in your project: delete everything, update everything, create everything, and give people access to your project that didn't have it earlier. So the owner role is really dangerous.

But almost as dangerous is the editor role. The editor role allows you to update everything, delete everything, create everything, read everything. You just can't give other people those permissions.

You can do anything other than give permissions to someone else. Still really bad, right? And then the viewer role is like the editor role, but read only. You can read everything. Now, any of these roles will be problematic, but the editor role is somewhere in between and still very, very problematic.

Why am I bringing this up? It's because Google has this unbelievable habit of automatically granting service accounts in their platform the editor role. One example of a service account that gets the editor role by default is the Google Compute Engine service account. And that service account by default is used by your Kubernetes engine. So if you launch a brand new Kubernetes cluster like I did in the demonstration I'm gonna show you and you change nothing about its configuration, that Kubernetes application will have the ability to update, create, delete, and read your entire project.

Whose application needs to do that? Maybe Jenkins at most. Seems very problematic. Now, let's really dive into this.

Let's actually look at this editor role. The primitive editor role is the combination of every other service-specific editor role. So you may have storage editor, you might have BigQuery editor. All of those are combined together for the primitive editor role, which has the hilarious side effect that the number of permissions it provides grows every single year.

So back when I first looked at the editor role about four years ago, it had 2000 permissions on it. Now, because they've launched so many new great services, that set of permissions is now up to 6,438. So every year this problem's going to get worse unless they eliminate the editor role altogether, which is gonna be very difficult for them to do, honestly. So here are some of the lowlights of the things that you can do with this editor role.

You can read all of the contents of your BigQuery tables. That seems bad. You can also delete storage buckets, which is useful for ransomware. But in the middle and possibly the most terrifying is that your Kubernetes application could actually launch other Kubernetes applications and instances.

You could launch brand new VMs in your Google Cloud account using this set of permissions. That's a dream for an attacker, right? They can launch crypto mining VMs, they could do password cracking there. They could use those VMs for distributed denial-of-service attacks. Why is this a default permission? It boggles the mind.
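One way to check whether a project is exposed in the way just described is to look for service accounts bound to primitive roles. The following is a minimal sketch, assuming the policy has been exported as JSON (for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`); the function name and the sample project number and members are hypothetical.

```python
from typing import Dict, List

# The three primitive roles described in the talk.
PRIMITIVE_ROLES = {"roles/owner", "roles/editor", "roles/viewer"}


def find_primitive_role_service_accounts(policy: dict) -> Dict[str, List[str]]:
    """Map each service account member to the primitive roles bound to it."""
    findings: Dict[str, List[str]] = {}
    for binding in policy.get("bindings", []):
        role = binding.get("role")
        if role not in PRIMITIVE_ROLES:
            continue
        for member in binding.get("members", []):
            if member.startswith("serviceAccount:"):
                findings.setdefault(member, []).append(role)
    return findings


# Hypothetical policy shaped like the JSON that gcloud returns.
sample_policy = {
    "bindings": [
        {
            "role": "roles/editor",
            "members": [
                "serviceAccount:123456789012-compute@developer.gserviceaccount.com",
                "user:alice@example.com",
            ],
        },
        {
            "role": "roles/storage.objectViewer",
            "members": ["serviceAccount:reader@example-project.iam.gserviceaccount.com"],
        },
    ]
}

for member, roles in find_primitive_role_service_accounts(sample_policy).items():
    print(member, roles)
```

Any service account this reports with `roles/editor` is a candidate for the kind of takeover shown in the demo later in the talk.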

So how do we solve this problem? The problem of using the editor role by default in our GKE infrastructure. First, we can follow benchmark 5.2.1, which literally says what I just mentioned, which is that you should ensure that your GKE clusters are not running using the compute engine default service account.

So that is the default behavior. You can change it. Additionally, the main reason why that default service account is so problematic is because it has that editor role. Is there something we can do to prevent it from getting that editor role? Well, we can either remove that permission or we can set up this org policy, which is right here on this bullet point: Automatic IAM grants for default service accounts.

If you set that setting, it's gonna make it so that if a default service account is created when a new service is enabled, that new service account will have no permissions by default. This is something that every organization should have so that when new projects get spun up, you're not gonna see the editor role everywhere. But this policy is not retroactive.

So if you have default service accounts that were created earlier, they're gonna have the editor role, you're gonna need to remove those permissions explicitly. And that's what the third bullet point talks about here. You should use non-primitive built-in policies or custom policies and not only limit the actions as much as possible, but also limit the resources on which those actions can be done as much as possible. Too often we get that second part wrong where we give a very specific set of permissions like the ability to read storage, but we don't specify what buckets can be read from.

And now any random piece of information that's in any of the buckets in your project is readable. So you gotta get both of those things right, both the actions and the resources. Another mitigation is that if you're truly never going to have your Kubernetes workloads in GKE talk to other Google Cloud services, you can set this org policy, which is gonna disable this capability called workload identity, which is one of the ways that you can authenticate to the cloud IAM APIs. But if there's nothing else you take away from this, even though I've given you some negatives for using these built-in service accounts and these built-in role definitions, please do not create custom service accounts with long-lived credentials like a service account key. Because as you'll see shortly, in the attack that I'm describing, where a Kubernetes application is taken over, at least the credentials that are stolen are temporary. If you made them permanent, it would be even worse.
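The "Automatic IAM grants for default service accounts" org policy mentioned above can be expressed as a small policy file. This is a sketch under assumptions: the constraint ID `iam.automaticIamGrantsForDefaultServiceAccounts` and the `spec.rules.enforce` layout follow the Organization Policy v2 format as I understand it, and the project ID and helper name are hypothetical. Verify against the current Google Cloud documentation before applying it.

```python
import json

# Constraint ID for disabling automatic role grants to default service accounts.
CONSTRAINT = "iam.automaticIamGrantsForDefaultServiceAccounts"


def build_enforced_policy(project_id: str, constraint: str = CONSTRAINT) -> dict:
    """Build an org policy document that enforces a boolean constraint on a project."""
    return {
        "name": f"projects/{project_id}/policies/{constraint}",
        "spec": {"rules": [{"enforce": True}]},
    }


# "example-project" is a placeholder project ID.
policy_document = build_enforced_policy("example-project")
print(json.dumps(policy_document, indent=2))
```

The printed JSON could be saved to a file and applied with something like `gcloud org-policies set-policy policy.json`. As noted in the talk, this only affects default service accounts created afterwards, so existing editor grants still need to be removed by hand.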

And there's a benchmark related to that as well. Okay, enough about GKE. Let's talk a little bit about EKS and AKS. So this is where it gets a little bit confusing 'cause there's actually two different service accounts that we're talking about here. We have the cloud service accounts. So your IAM users and IAM roles, your managed identities in Azure, your Google Cloud service accounts.

And these all can access cloud APIs based on the permissions that are granted. But you also have the same thing at the Kubernetes level. So you have a separate set of service accounts in Kubernetes that allow you to perform various actions in the Kubernetes control plane. Now, these two types of service accounts can be mapped to each other, but they have distinct usages.

One talks to cloud APIs, the other talks to Kubernetes APIs. So, they can be mapped together. And this is something that the CIS benchmarks do recommend: that you use a centralized cloud identity to get access to your Kubernetes control plane, that you don't have two separate sets of service accounts. But the way that this mapping is done can result in inconsistencies that have security implications. Before we can get into the specifics, let's very quickly define the system:masters group.

Any user in the system:masters group has unrestricted access to the Kubernetes API, which means they can do anything at the Kubernetes level to administrate Kubernetes. And their credentials are irrevocable per the CIS benchmarks. So they recommend in both the vanilla Kubernetes CIS benchmark and the EKS benchmark that you should avoid using this group whenever possible. Well, unfortunately, if you use the EKS service, they're gonna basically force you to use this group. Now, why is that? Well, when you launch a brand new cluster in AWS, and this is direct from their documentation, the IAM principal that creates the cluster is automatically mapped to a Kubernetes service account that has system:masters permissions.

In other words, whoever created the cluster can administrate the cluster. And the more problematic thing about this is there's no way to determine what user did this. You can't go into the UI and say, "Oh, this cluster was created by this IAM identity, which means they're the user that has this superpower that we should monitor for." No, you can't do that.

In order to determine who created the cluster, and therefore who has system:masters access, you have two choices. You either look through your CloudTrail logs and hopefully find the event in which the cluster was created and see who that was associated with. Or you contact AWS support.
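The CloudTrail route can be sketched as a filter over exported log records. This assumes records in the usual CloudTrail JSON shape (`eventSource`, `eventName`, `userIdentity`, `requestParameters`), and it assumes the cluster name appears under `requestParameters.name` for `CreateCluster`. The function name, account ID, and ARNs are hypothetical.

```python
from typing import Dict, Iterable, List, Optional


def find_cluster_creators(records: Iterable[dict]) -> List[Dict[str, Optional[str]]]:
    """Return who issued each EKS CreateCluster call found in the records."""
    creators = []
    for record in records:
        if record.get("eventSource") != "eks.amazonaws.com":
            continue
        if record.get("eventName") != "CreateCluster":
            continue
        creators.append(
            {
                "cluster": (record.get("requestParameters") or {}).get("name"),
                "creator_arn": (record.get("userIdentity") or {}).get("arn"),
                "event_time": record.get("eventTime"),
            }
        )
    return creators


# Hypothetical records, as they might appear in the "Records" array of a log file.
sample_records = [
    {
        "eventTime": "2023-04-01T12:00:00Z",
        "eventSource": "eks.amazonaws.com",
        "eventName": "CreateCluster",
        "userIdentity": {
            "type": "AssumedRole",
            "arn": "arn:aws:sts::111122223333:assumed-role/ExampleDeployRole/alice",
        },
        "requestParameters": {"name": "example-cluster"},
    },
    {
        "eventTime": "2023-04-02T08:30:00Z",
        "eventSource": "eks.amazonaws.com",
        "eventName": "DescribeCluster",
        "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/bob"},
        "requestParameters": {"name": "example-cluster"},
    },
]

for creator in find_cluster_creators(sample_records):
    print(creator)
```

This only works if the logs from the time of creation were retained. For an older cluster whose creation event has aged out of whatever trail you keep, the "hopefully find the event" caveat applies.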

That doesn't sound like a fun solution. Definitely not how I would wanna spend my afternoon. And worse yet, this access cannot be removed.

So if you are going to create an EKS cluster, it's very important to understand what IAM principal is doing this, and it is important to determine how people access that IAM principal, when they access it, and under what circumstances, or else they would have that administrative access. Now, AKS has a similar issue related to system:masters, and that is there are multiple ways to authenticate with a service account in Azure Kubernetes Service. You can either log in with OAuth. So you can log in with your Azure AD principal using OAuth and get a corresponding service account in Kubernetes.

Or you can use the "--admin" flag, which is going to generate a client certificate that you can use to authenticate to the Kubernetes control plane. This second option has some problems. Firstly, the certificate that is generated cannot be revoked.

It also is going to be valid for two years. There is no attribution to this client certificate. It has a generic username, so you have no way of attributing actions that are done with this client certificate to any specific individual. And most relevant to what we're talking about is that this user is in the system:masters group, which means that it will have that same administrative level access. So what is the solution to these two problems? Largely monitoring for them before they come about.
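As one small piece of that monitoring, a kubeconfig can be checked for users that authenticate with an embedded client certificate rather than an identity provider login. This is a sketch, assuming the kubeconfig has already been parsed into a dictionary (for example from `kubectl config view --raw -o json`). The function name is hypothetical, and the user names in the sample follow the naming AKS appears to use but are illustrative only.

```python
from typing import List

# Kubeconfig user fields that indicate certificate-based authentication.
CERTIFICATE_FIELDS = ("client-certificate-data", "client-certificate")


def users_with_client_certificates(kubeconfig: dict) -> List[str]:
    """List kubeconfig user entries that carry a client certificate."""
    flagged = []
    for entry in kubeconfig.get("users", []):
        user = entry.get("user") or {}
        if any(field in user for field in CERTIFICATE_FIELDS):
            flagged.append(entry.get("name", "<unnamed>"))
    return flagged


# Hypothetical kubeconfig with one certificate-based and one exec-based user.
sample_kubeconfig = {
    "users": [
        {
            "name": "clusterAdmin_example-rg_example-cluster",
            "user": {
                "client-certificate-data": "REDACTED",
                "client-key-data": "REDACTED",
            },
        },
        {
            "name": "clusterUser_example-rg_example-cluster",
            "user": {"exec": {"command": "kubelogin", "args": ["get-token"]}},
        },
    ]
}

print(users_with_client_certificates(sample_kubeconfig))
```

A flagged entry does not prove the certificate maps to system:masters. It only identifies credentials worth a closer look, since actions taken with them cannot be attributed to an individual.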

And that's about as much as I can share with you on this inconsistency because it seems like this is a critical design flaw, especially in the case of EKS. It should be really easy to identify who created your cluster and the implications of who created your cluster. Final inconsistency before I get to my demo and our Q&A, which is that there are multiple different deployment modes in EKS. And this is not a bad thing, but it's something that you have to understand. So we have our, quote-unquote, "classic infrastructure," which is a multi-tenant virtual machine that runs multiple containers on it. You can SSH to that underlying virtual machine and administrate it just like any container service on a local machine, for example.

And this can allow for insecure configurations, but also have flexibility, like you can manually patch the server, you can handle the lifecycle. You have flexibility, but it also has security implications. But EKS also has this mode called Fargate. And Fargate allows you to run your containers in an isolated environment with very, very minimal permissions and no access to the underlying virtual machine host.

So if there was a breakout vulnerability in Fargate, it would be less problematic than in the case of the classic infrastructure. And this capability, Fargate, is oftentimes described as a serverless solution because you don't care what server's running your containers, you just run them. The problem with this is that there is nothing analogous in the other two cloud providers, as far as I can tell.

The EKS benchmark says explicitly that you should consider running Fargate because it is going to minimize the security impact of container breakout vulnerabilities, for example. So how do you fulfill this CIS benchmark in AKS and GKE? You don't. You don't have that option. So you can't fulfill that same benchmark, you can't get that same level of security, arguably.
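To see which parts of an EKS cluster are actually on Fargate, the node list can be split by compute type. This is a sketch under an assumption: that Fargate-backed nodes carry the label `eks.amazonaws.com/compute-type` with the value `fargate`, which should be confirmed against your own cluster. The input is the JSON from `kubectl get nodes -o json`; the function and node names are hypothetical.

```python
from typing import Dict, List

# Assumed label that EKS places on Fargate-backed nodes.
COMPUTE_TYPE_LABEL = "eks.amazonaws.com/compute-type"


def split_nodes_by_compute_type(node_list: dict) -> Dict[str, List[str]]:
    """Group node names into Fargate-backed nodes and everything else."""
    groups: Dict[str, List[str]] = {"fargate": [], "other": []}
    for item in node_list.get("items", []):
        metadata = item.get("metadata") or {}
        labels = metadata.get("labels") or {}
        key = "fargate" if labels.get(COMPUTE_TYPE_LABEL) == "fargate" else "other"
        groups[key].append(metadata.get("name", "<unnamed>"))
    return groups


# Hypothetical node list with one Fargate node and one EC2 node.
sample_nodes = {
    "items": [
        {
            "metadata": {
                "name": "fargate-ip-10-0-1-23.ec2.internal",
                "labels": {COMPUTE_TYPE_LABEL: "fargate"},
            }
        },
        {
            "metadata": {
                "name": "ip-10-0-2-45.ec2.internal",
                "labels": {"kubernetes.io/os": "linux"},
            }
        },
    ]
}

print(split_nodes_by_compute_type(sample_nodes))
```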

So those are the three differences that I wanted to highlight for you, and I wanted to take an opportunity to demonstrate the first one really quickly and hopefully you'll get a kick out of this demo. So here I have a virtual machine, and in this virtual machine, I created a Kubernetes cluster. And I apologize, I know this is pretty zoomed in. I created this cluster named cluster one.

Why is it called cluster one? 'Cause I did literally as little as possible to create this cluster. I clicked create cluster and I clicked go, go, go, go, go, I want a Kubernetes cluster, please. And this is what it created. So what was the impact of this cluster being created in this way? Well, it has that default Google Compute Engine service account. What am I running on this Kubernetes cluster? Well, I have the DVWA, the Darn Vulnerable Web Application, which is a known cyber range kind of environment that you can poke around with and break.

It's a vulnerable application on purpose. This DVWA, I deployed using this container definition, this Dockerfile. Let me zoom out one more really quickly.

This is the same definition as we have from the official Docker Hub repository for the DVWA. I just had to install curl onto it for reasons that will be apparent shortly. So here we have that application and this application has a command injection vulnerability. Very classic vulnerability where it says, "Hey, you wanna ping an IP address? Put in this IP address."

And you type 0.0.0.0 or whatever. You click submit and then it's going to execute that command and it's gonna show you the results. So it pinged that three times or four times. Here's what the result is. But this has a command injection vulnerability.
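The flaw in a ping form like this one usually comes from pasting user input into a shell command string. Here is a minimal sketch of that pattern and a safer alternative. It is not the DVWA source: `echo` stands in for `ping` so the example is harmless and runs offline, the function names are hypothetical, and it assumes a POSIX shell is available.

```python
import subprocess


def vulnerable_ping(target: str) -> str:
    # User input is concatenated into a shell command, so a semicolon
    # in the input ends the first command and starts a second one.
    command = "echo pinging " + target
    result = subprocess.run(command, shell=True, capture_output=True, text=True)
    return result.stdout


def safer_ping(target: str) -> str:
    # Arguments are passed as a list with no shell involved, so the
    # input is treated as a single literal argument.
    result = subprocess.run(["echo", "pinging", target], capture_output=True, text=True)
    return result.stdout


payload = "0.0.0.0; echo INJECTED"
print(vulnerable_ping(payload))  # the injected echo runs as its own command
print(safer_ping(payload))       # the payload is printed back as plain text
```

In a real fix you would also validate that the input is an IP address, since avoiding the shell does not stop someone from passing unexpected arguments to the program itself.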

And what that means is that using a semicolon, I can terminate the first command and insert another command that is executed. So for those who've looked at command injection before, the classic way of testing for command injection is doing semicolon, cat /etc/passwd. And if this prints out the list of all the Linux users on the machine, you know that there's that vulnerability here. Now, why is that relevant to Google and the cloud? Well, on your GKE application instance, on your pod, there is this thing called the instance metadata service.

You may have heard of it before. I know that the previous presentation talked about it a little bit. But that instance metadata service has the credentials on it that you can use to authenticate to the Google Cloud APIs. To demonstrate this, I am going to inject this script right here, which is gonna talk to metadata.google.internal,

which is an alias for 169.254.169.254. And I am going to fetch the service account that is being used by this Kubernetes application. So over here, I'm gonna do semicolon and then I'm gonna paste that. We'll see that the result is the project number, then "-compute@developer.gserviceaccount.com."

This is that default service account that has that editor role. So now, using a second command, I am able to get a token associated with that service account. So this is gonna fetch the actual set of credentials that are used by this application. I paste that here, semicolon, paste that, and we're gonna see this access token. It looks a little bit like a JSON web token, it's not. It's some weird Google proprietary thing.
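The exact commands pasted in the demo are not in the transcript, but the two metadata lookups it describes can be sketched as follows. The paths and the `Metadata-Flavor: Google` header are the documented way to query the GCE metadata server; the helper names are hypothetical. The requests are only built here, not sent, because the metadata host resolves only from inside Google Cloud.

```python
import urllib.request

METADATA_BASE = "http://metadata.google.internal/computeMetadata/v1"


def metadata_request(path: str) -> urllib.request.Request:
    """Build a metadata server request; the header is required by the server."""
    return urllib.request.Request(
        f"{METADATA_BASE}/{path}",
        headers={"Metadata-Flavor": "Google"},
    )


def fetch(request: urllib.request.Request, timeout: float = 2.0) -> str:
    """Send a request; only meaningful from a workload running in Google Cloud."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Step 1: which service account is this workload running as?
email_request = metadata_request("instance/service-accounts/default/email")

# Step 2: fetch a short-lived access token for that service account.
token_request = metadata_request("instance/service-accounts/default/token")

print(email_request.full_url)
print(token_request.full_url)
```

From inside a pod, `fetch(token_request)` would return a JSON document whose `access_token` field is the temporary credential copied in the demo.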

But if I copy this and I paste it over here, so it's a little bit easier to manage, I will have this token here and I'm going to copy all of the token. I am then going to take this token and put it in my terminal. Here I have this attacker Linux user. This attacker Linux user has no access to anything in Google Cloud, but I'm going to export GCP token and I'm gonna set it to this. Then finally, I have this last script here, which is going to take that GCP token and attempt to talk to the Google Cloud API to look at the storage service and list all of the buckets within the storage service.

So now if I have that editor role, I should be able to access all of those buckets and read them. And when I hit enter, it turns out that, yes, that is the case. I can enumerate all of the buckets within this Google Cloud project. And because the service account has the editor role, I could do so much more than that. I could create buckets, delete buckets, and even launch additional applications. So this permission being there by default has a security implication that we simply don't have in the other two providers.
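The final step, listing buckets with the stolen token, can be sketched like this. It assumes the token was exported in an environment variable named `GCP_TOKEN` (the name is a guess based on what is said in the demo) and uses the Cloud Storage JSON API bucket list endpoint. The project ID and helper names are hypothetical, and the request is only built, not sent.

```python
import os
import urllib.parse
import urllib.request
from typing import List


def list_buckets_request(project_id: str, token: str) -> urllib.request.Request:
    """Build a Cloud Storage JSON API request that lists a project's buckets."""
    query = urllib.parse.urlencode({"project": project_id})
    return urllib.request.Request(
        f"https://storage.googleapis.com/storage/v1/b?{query}",
        headers={"Authorization": f"Bearer {token}"},
    )


def bucket_names(list_response: dict) -> List[str]:
    """Pull bucket names out of a parsed bucket list response."""
    return [item["name"] for item in list_response.get("items", [])]


# GCP_TOKEN is an assumed variable name; a placeholder keeps the sketch runnable.
token = os.environ.get("GCP_TOKEN", "placeholder-token")
request = list_buckets_request("example-project", token)
print(request.full_url)

# Hypothetical response body, shaped like the API's bucket list result.
print(bucket_names({"items": [{"name": "example-bucket-a"}, {"name": "example-bucket-b"}]}))
```

Nothing in this request identifies the caller as the Kubernetes workload. Whoever holds the token gets the service account's permissions until the token expires.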

So with that, let's wrap this up. Here's how you can apply what you've learned today in the next week, the next couple months, the next year. I think the first thing you need to do is you need to get an inventory of where you're using Kubernetes in your organization. Are you using it on-prem? Are you using it in hybrid cloud? Are you using it in EKS, AKS or GKE? Just get an idea of the different places in which you're using this, quote-unquote, "cloud agnostic technology."

And then you should, if you're using EKS, determine which clusters are using Fargate because, as we mentioned, that is a fundamentally different type of deployment than the other Kubernetes engines. Then in the next three months, I would say the most important thing that you can do is use that Google Cloud org policy to restrict the permissions of those default service accounts, as well as go back to the service accounts that already exist and remove those editor roles. Very, very important that you do that, or else an attacker will be able to perform the attack that I just demonstrated. Then in EKS and AKS, let's take a look at who's created those clusters, who's created those client certificates, and see if we can use that information to find potential privilege escalation opportunities. And then finally, within six months, I want you to revisit the conversation we started off with in the beginning of this session.
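For the Google Cloud step, going back to existing service accounts can start with an audit. This is a rough Python sketch, not a tool from the talk: it assumes you have a project IAM policy as a dictionary in the shape returned by `gcloud projects get-iam-policy PROJECT_ID --format=json`, and it flags Compute Engine default service accounts (named `PROJECT_NUMBER-compute@developer.gserviceaccount.com`) that hold `roles/editor`. The function name is illustrative.

```python
import re
from typing import Dict, List

# Compute Engine default service accounts follow this naming pattern.
DEFAULT_COMPUTE_SA = re.compile(
    r"^serviceAccount:\d+-compute@developer\.gserviceaccount\.com$"
)


def default_sas_with_editor(policy: Dict) -> List[str]:
    """Return default compute service accounts bound to roles/editor."""
    flagged = []
    for binding in policy.get("bindings", []):
        if binding.get("role") != "roles/editor":
            continue
        for member in binding.get("members", []):
            if DEFAULT_COMPUTE_SA.match(member):
                flagged.append(member)
    return flagged


# Made-up sample policy for illustration only.
sample_policy = {
    "bindings": [
        {
            "role": "roles/editor",
            "members": [
                "serviceAccount:123456789012-compute@developer.gserviceaccount.com",
                "user:alice@example.com",
            ],
        },
        {
            "role": "roles/viewer",
            "members": [
                "serviceAccount:123456789012-compute@developer.gserviceaccount.com"
            ],
        },
    ]
}

print(default_sas_with_editor(sample_policy))
```

Anything this flags is a candidate for having the editor binding removed and replaced with a narrower role, after checking what the workload actually needs.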

Does Kubernetes solve the multi-cloud problem? I would argue that it certainly does not solve all of the problems associated with multi-cloud. And if, like me, you understand multi-cloud and for that reason want to minimize the instances of multi-cloud where possible, I think it would be valuable for you to look at possibly consolidating your Kubernetes infrastructure to one cloud or on-prem, into something consistent. Because if it's not consistent, it's going to be hard to secure. You're gonna have to, at minimum, have a lot of these cloud-specific bits of knowledge in order to secure all of the different engines. So in summary, Kubernetes has security quirks across multiple different cloud providers. This is not something that is surprising.

All of the cloud providers have many different services with many different security differences and associated implications. And honestly, this presentation has just scratched the surface, people. I am not a Kubernetes subject matter expert. I did this talk to learn a little bit about Kubernetes.

Can you imagine how many other differences a motivated, Kubernetes-knowledgeable attacker could find? Probably many, many more, probably many that are much worse than the ones that I presented to you today. So I hope that you'll continue to stay curious in that domain and find other inconsistencies that are potentially even worse. And it's not even just about the explicit differences. There are also a lot of differences that none of us can possibly know because there are many implementation-level details that exist in these different engines that you may never see a document associated with. And those behind-the-scenes differences can also impact you from a security perspective. So I hope that you will always challenge cloud agnosticism as a result of this talk.

If you liked this talk, please feel free to follow me on LinkedIn or connect with me. I also have a Twitter, I barely ever use it. I prefer if you use LinkedIn, but definitely make sure to follow SANS Cloud Security on Twitter and on LinkedIn, as well as the main SANS Institute account. Here are all my references. This slide deck will be available on the session details on the RSA website.

So, please check my work. I'm still learning here too. And I wanna give an acknowledgement to Kat Traxler, our Google SME at the SANS Institute, who helped a lot with the Google content.

Jon Zeolla, who is one of the most knowledgeable people I know in the space of Kubernetes, gave this a look-over and gave me some of the ideas here that helped make this what it was today. And Frank Kim, who's the head of the cloud security curriculum, who gave me the idea for this talk in the first place. And with that, I'd love to answer any of your questions. (audience applauding) All right, well, if you have any questions, please feel free to see me at the front. And I hope you have a wonderful rest of your conference.

Take care.

2023-06-09 20:06