Ask the Expert: Building real-time enterprise analytics solutions with Azure | ATE-DB111

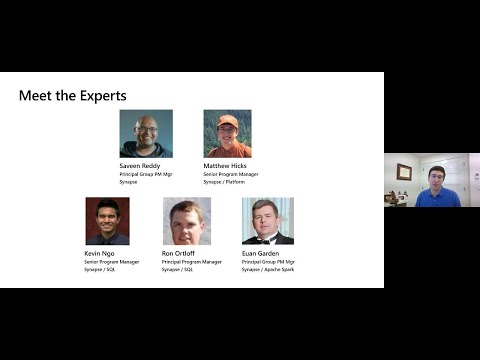

Welcome, everyone, to the Ask the Expert session for building real-time enterprise analytics solutions with Azure Synapse Analytics. We're so excited to have you join us here for a great question-and-answer period. Some of you may have just come from Saveen Reddy's session with the same title, so this is a great opportunity for you to ask questions and for us to get you some expert answers.

You should see a chat on the right, so use that chat to ask questions. As you ask questions, folks who are standing by will be able to answer them really quickly, and the questions will appear to all attendees once they are approved by the moderator. You can upvote your favorite questions with a thumbs up, which lets other folks agree and helps popular questions rise to the top. Note that we'll also have experts standing by to answer questions verbally: about five people on our team will answer your questions live, as selected by one of the moderators we have on the team here. We'll also provide some resources at the very end so that you can learn more and take some next steps.

Just note that the session may be recorded. Please help out with our moderation: don't spam or post inappropriate comments in the chat. We also have a code of conduct, pretty standard stuff, but please keep it in mind. Here's the code of conduct, just so everyone is aware, and there's some information at the bottom there if you have any questions.

All right, so with no further ado, we have some excellent chat experts from our big data community helping out here. Thank you to everyone that you see on the screen. You'll see their names pop up in the chat as folks respond to your questions, so a big thank you to all these experts attending today and helping out in the chat. We're so grateful to have you.

Let me now introduce our experts who will be answering some of the questions live. My name is Matthew Hicks; I'm a program manager on the Synapse platform team here at Microsoft. We have Saveen Reddy, principal program manager on the Synapse team, and Kevin, Ron, and Euan, program managers on the Synapse team as well, are here to help out and answer questions live. We're so excited.

Let's dive into it. It seems like we have some great questions flowing in. The first one we see is from Tony K.: do Synapse Analytics workspaces use new features like the Photon engine? Can you elaborate on how you would use it in conjunction with other services? Euan, would you be able to take that one?

Yep, happy to. First of all, the Photon engine is Databricks proprietary technology, so it's not something that's available in the open source version of Spark. The version of Spark that we use inside of Synapse is derived from the Apache trunk version, and then we layer a whole bunch of Microsoft technologies and capabilities on top of that. So in terms of compare and contrast, that's one difference.

I'm also going to attack a couple of other questions which are sitting out there. One of the big differences between Databricks and what we have with Synapse is that with Synapse we bring together a bunch of what we believe are best-of-breed technologies to solve what is an analytics problem. What's the best tool we have in Azure for integrating data? Well, it's Data Factory, and so we use the core of Data Factory within Synapse as part of orchestration and integration. What's the right way to answer SQL queries? Well, it may not be Spark; it may be an on-demand, or serverless, SQL engine. And what's the right way to access modeled SQL data? Well, that's going to be through a dedicated SQL pool. So from our perspective, we're providing multiple different technologies to solve the end-to-end analytics problem, rather than a single technology.

Thank you, Euan. All right, we have another great question. It's a little bit of a long one, so I'll read it out: is it, or will it be, possible to connect to an Analysis Services source, more specifically the XMLA endpoint for Power BI Premium, to ingest data into Synapse? Let's see. Saveen, who do you think would be the right person to answer that question? Oh, I think you're muted.

I think for that one we're going to have to follow up a bit offline. We'll get one of our other experts, Josh, to talk about that.

Okay, all right, thanks. We have another question, from Saren: using Synapse Analytics for an end-user data mart, is that a possibility without running into query concurrency issues? Ron, would you be able to take that one?

I think Andrew touched on it a bit, but yeah, there are other things like workload management that you can use to set importance, divide up resources, and manage the loading and transformations that go into data marts as well as end-user queries. So the tools are there for you to be able to do that. I'd also recommend looking into things like materialized views. With result set caching, a cached result is not a resource-consuming query, so where result set caching can help out, that can also help with your overall throughput for data mart access.

All right, thank you so much, Ron. We have a great question: how does cost work with Synapse? Kevin, would you like to take that one?

Yeah, sure. For cost with Synapse, this is a purely opt-in experience, where you're charged for the resources and components that you consume. There's a public-facing doc on our pricing page that goes into how we charge for Spark and how the SQL resources that you provision are charged as well. So it's a completely opt-in experience.

Excellent, thank you, Kevin. I have a good question, I think, for Euan: when should folks think about using Synapse versus HDInsight versus other services, for example?

Yeah, that's a great question. I think each of the three primary Spark experiences that we provide in Azure has unique and differentiated capabilities. If I take a look at something like Spark running in HDI, that's really all about control and integration with other open source platforms, because HDI is not just about Spark. It's about Kafka, it's about Hive, it's about a bunch of different technologies, most of which we don't have inside of Synapse. Also, if you want to be able to SSH into the head nodes in your Spark cluster and control configuration and things like that, then HDI is going to be your answer.

I think the nice thing about Synapse, as I said in my earlier answer, is that in Synapse we have these different technologies, but they're all designed to work together. We have a single user experience, a single auth model, and a single monitoring model across all of those. And realistically, any big, complex analytical app is going to involve some Spark stuff, some data integration stuff, and some SQL in terms of serving the models up to some sort of technology like Power BI. So I think they're just different ways of solving the problem, and you get to choose. The nice answer is that in Azure we have all the options available.

Awesome, thank you so much. A good question for Saveen: are notebooks in Synapse Studio accessible or downloadable, so we can use them outside of Synapse Studio?

Yeah. Our notebooks are importable and exportable as .ipynb files, so there's nothing special about them, especially when you're just writing normal Spark code, PySpark or Scala or whatever. The thing to keep in mind is that, as I mentioned or showed on my slide, there's an MSSparkUtils library, and that is of course unique to Synapse. That library won't be available anywhere except Synapse; that's the one catch to that statement. Euan, is there anything else you want to mention with regard to libraries and notebooks that might affect the ability of people to use them on export?

I think you hit the main point there. Different back ends and different implementations of the Jupyter engine support different libraries. There are some technologies we don't support, like ipywidgets, if you're a Jupyter person, and I think MSSparkUtils is one of the other differences. Magic support is another place where there are differences between different implementations of Jupyter. But I would argue that 90 to 95 percent of your code should just easily migrate along with the notebook, and then there are some tweaks which are environment specific.

Excellent, thank you. All right, we have another question, and I think this one is for you as well: when will DevOps or GitHub integration be available? Can you share some more details on that?

Yeah, so this is probably our number one question. The straight truth is this: we are working on it actively at this moment, and it will be part of our GA. We don't have a GA date yet, but we are working on it, and you won't be waiting too much longer. It will be part of that GA release.

Excellent, thank you. All right, let's go into some of our other questions. We have one regarding when SQL serverless over SQL pool data will be available. Saveen, who do you think would be the right person to answer that question?

I think Ron is probably the best one for this one.

Ron, could you take that one? When will serverless over SQL pool data be available? Oh, and you're muted.

There we go, sorry. That's something that we're certainly working towards. It's a future sort of thing where we definitely see user scenarios for being able to have serverless, pay per terabyte scanned, and provisioned, pay per DWU, accessing the same data sets seamlessly. So for sure it's something that we're looking into.

Excellent, thank you so much. One of the common questions that we definitely get, and one that's good to cover: I know, Saveen, you covered it in your session, but if you haven't seen the session yet and you're attending a different instance of it, there's a question about the Synapse Analytics preview features. When do we expect those capabilities to become available, and can people try those features out today?

Just to make sure I understand the question, can you say it again? The preview features, when they can...?

So we have Azure Synapse Analytics preview features, including the workspaces. Can users try those out today, and when do you think those will be generally available?

Okay, absolutely. I had a large slide that I mentioned at my breakout session. Everything I showed you will be available: it is either available now or will be available shortly, by the end of this week. I think most of them are actually available this very second, so you can try everything out now in preview. Just in general, you can try Azure Synapse workspaces; they've been available in public preview for a while. When the switch turns to generally available, which is again not too much longer from now, though we don't have an exact date at this moment, every feature I mentioned in that list will automatically become a generally available feature that we support in production. I hope that answers the question.

I think so, thank you. We have some other questions coming in that are related to the topic of migration. For folks who are using ADF today, or SQL DW (there might be a separate person to answer the SQL DW-related questions), the newly branded Synapse Analytics GA functionality: is there any sort of migration planned, or how do those users take advantage of these preview workspace features?

Right, so let's start with the word migration. Migration has an implication of maybe changing things or moving things. When we make Synapse workspaces generally available and you're already using what you used to call a DW, there is no migration per se. What will happen is, if you have an existing, what we now call a dedicated SQL pool, formerly a DW, we will give you the ability to use the new workspace feature set. You can essentially add a workspace on top of your SQL pool, your formerly DW. You don't even have to do that; you can continue working after our GA date completely as normal. We'd encourage you to add the workspace feature to it, because it just enables so many more scenarios. So there's no migration; it's just that you can now use a new feature, and it'll be available for you. And that feature is everything in the workspaces: Spark, serverless SQL, pipelines, Synapse Link, a bunch of things.

The second question is about, I believe, ADF.
Some of you already have an existing ADF instance, and you have pipelines that are calling SQL pools, either to do data movement or orchestration. The short answer is that we're not upgrading ADF factories into Synapse. Our strategy there, and this will be after our generally available date, is that if you've already developed pipelines in a data factory and you want to simplify everything by moving everything into a Synapse workspace, we're working on a plan to allow you to import those pipelines from an existing data factory into a Synapse workspace. So that's our plan for addressing the ability to put everything back together into a single workspace.

Excellent, thank you so much. We have a great next question for Euan: will the library management in Synapse change at all, and will I have the ability to use Maven Central, for example, to manage my libraries with Apache Spark in Synapse?

Yep, another great question. We're actually rolling out some changes this week. In terms of library management, you'll now be able to use JAR files, in addition to the PyPI files you were previously able to use. The ability to just specify Maven coordinates is on the list of things to do. Library management is going to be an evolving capability for quite a while. It's quite a big and complex space, so we're going to keep adding and adding, based on user requests, over time.

Excellent, thank you. All right, we also have a question for Saveen. In your session you talked about Power Query. Could you talk about some of the cases where you would use data flow versus Power Query?

Absolutely. Just to make sure everyone's on the same page, where these are exposed in Synapse is in our pipelines, and there are two kinds of activities: data flow activities and Power Query activities. Power Query activities were formerly called data wrangling flows, or something with the words data wrangling in them. There are different approaches to answering this question; how I would say it is this. By and large, they can be used to do the same thing: they take some input data and can transform it in all sorts of ways. There are some differences in the feature set, et cetera, but primarily, if you want to think about your transformation of data in a graph-based, visually oriented way, then the data flow is a great way of doing it. On the other hand, if you're used to thinking about transforming your data as a set of transformations on rows that you visually see in a tabular manner, then the Power Query experience is much more optimized for you; you'll understand it better that way. So that's one way I would say you mentally pick between the two. Again, there are other differences, but that to me is the primary way you think about it.

Awesome, thank you, Saveen. All right, we have another question, I think this one will be for Kevin, sort of a SQL question: when should you choose creating an internal table versus an external table, and what are some best practices to follow?

Thanks, Matthew. So the question was, when do you choose between internal, or I guess managed, tables versus external tables. I think a very common scenario for external tables is before you actually import your data into a SQL database as a managed table. There's a very common data lake exploration scenario where you want to immediately issue ad hoc queries over the lake, and a very common interface that users use today to do that is external tables. Once you discover and understand your data, and you understand that you want to transform it or join it with high-value data within your SQL database, you then do a load, using our interfaces like the COPY statement, into a SQL database as a managed table. Then you can do further transformations or start driving insights on top of that managed table.

Thank you so much. We have two questions from Gary; I'll split them out to two different people. One is for Euan, regarding Spark libraries: are there any storage limits for Spark libraries that we should be aware of, in terms of how many you can have, or I guess in terms of the size of the libraries that you can use with Apache Spark in Synapse?

No, but do bear in mind that libraries are installed at runtime. We provide a couple hundred libraries in the base image that we use for Spark, and then the libraries you specify with a requirements.txt are installed as part of cluster boot time. So if you, for example, have a dependency on MKL, it takes about 20 to 30 minutes to download and install. That means the fastest that your cluster will start will be 20 to 30 minutes, every single time. We do add more libraries to the base image, but there are some that we can't, for a variety of reasons. There is a limit on the maximum size of requirements.txt file you can have, in terms of the number of entries, and it's around 500 rows, if I remember correctly.

Okay, all right, thank you. We actually have another interesting question from someone, related to cost. If folks are concerned about the cost of Synapse Analytics, how should they understand whether it's really meant for huge amounts of data, or whether it can be used with small amounts of data? Saveen, I think this might be another question you talk about within your session, but if any folks on this call haven't been to the session yet, I think it's an interesting question: what sizes of data should people be familiar with when they use Synapse, and how does that impact cost?

Yeah, it's a great question, and all performance and scale questions are answered with "it depends." But in terms of what Synapse can address, when we talk about the product we do use the word limitless, and we talk about that at both ends of the same dimension. Number one, there are customers who have not really that much data, in the low terabytes or even less, actually. Certainly Synapse scales to those smaller cases, absolutely.
Synapse is also scaling to the world of petabytes. Some of you may have seen a petabyte-scale demo with serverless SQL, I think last year at Ignite in 2019. On top of that, of course, by integrating Apache Spark we're gaining tremendous scale and can do larger workloads. I would say anything from the smallest data sets up into the petabyte scale is where Synapse's sweet spot is.

Excellent, thank you so much. All right, I think I have a question for either Kevin or Ron; I'll let either of you take this. Are you planning to include an execute SQL task in Synapse pipelines? This might be related to the stored procedure support that we might already have, but maybe you can expand upon that. Why don't we go with Ron or Kevin, whichever of you wants to take that one.

I guess I would assume that's more related to Azure Data Factory. I don't know, Kevin, do you have any thoughts on that?

Yeah, so Synapse pipelines have very similar capabilities to Azure Data Factory, where you can create multiple activities within a particular pipeline. In this case, specifically for SQL, there is the stored procedure activity: you can define and create a stored procedure within your SQL database which has your transformation logic, and that can be executed from these pipelines. Or you can create another activity, called the copy activity, within the pipelines, which internally uses SQL statements like the COPY statement, or external tables, to load data. So these are the integrations between Synapse pipelines and the SQL pools within Synapse workspaces.

Excellent, thank you so much, Kevin. All right, another question, for Saveen: is it possible to just query data that's already in my lake, rather than storing it into a data warehouse first?

Yeah, absolutely. That's one of the key things we're enabling with Synapse, and probably the way you most easily experience that is serverless SQL. Our very first sample with the product is doing a SELECT * with OPENROWSET from a file in blob storage, and there's no schema specified. That file could be a CSV file; it could be a Parquet file. And of course I mentioned blob storage there, but it also works in Data Lake Storage, Gen2 I'm talking about here. So yes, it is absolutely built to do that: without creating views or using PolyBase, just directly query the data.

All right, awesome. I think we might have time for one more question before we dive into the next steps and the action items.
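
As a rough illustration of the serverless SQL pattern described in the answer above (a SELECT over OPENROWSET against a file in storage, with no schema declared), the following Python sketch only builds the T-SQL text. The function name `build_openrowset_query`, the example storage URL, and the `TOP 100` limit are assumptions made for illustration and do not come from the session. Running the query would require a connection to a serverless SQL endpoint, which is not shown here.

```python
def build_openrowset_query(file_url, file_format="PARQUET", top=100):
    """Return T-SQL text that reads a lake file directly via OPENROWSET.

    Hypothetical helper: it only composes a string and never connects
    to a database.
    """
    fmt = file_format.upper()
    if fmt not in ("PARQUET", "CSV"):
        raise ValueError(f"unsupported format: {file_format}")
    if top < 1:
        raise ValueError("top must be a positive integer")

    # Double any single quotes so the URL stays a valid T-SQL string literal.
    safe_url = file_url.replace("'", "''")

    options = [f"BULK '{safe_url}'", f"FORMAT = '{fmt}'"]
    if fmt == "CSV":
        # Serverless SQL offers a faster CSV parser as version 2.0.
        options.append("PARSER_VERSION = '2.0'")

    joined = ",\n    ".join(options)
    return f"SELECT TOP {top} *\nFROM OPENROWSET(\n    {joined}\n) AS [result];"


if __name__ == "__main__":
    # Placeholder account, container, and path; substitute your own.
    print(build_openrowset_query(
        "https://examplelake.dfs.core.windows.net/data/sales/*.parquet"
    ))
```

No table, view, or external table is created in this pattern; the file format is the only thing the query has to state, which matches the "no schema specified" point in the answer.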

So, I think there's a question for Euan around Databricks and Synapse and how the technologies relate. Let's just clarify how these two pieces of what's in Azure relate to each other: do they use each other, et cetera?

Yeah, so I talked a little bit earlier about how the different engines provide different capabilities, and actually, you should search through the answers that Andrew Brust has provided. He gave a very pointed answer on what's different between the different techs, between HDI, Synapse, and Databricks. But let's be very clear: Synapse does not use Databricks today. It is not part of the Synapse offering. But because of common file formats, for example Delta Lake, which is supported by both, Parquet, CSV, these sorts of technologies, you can use the two in conjunction with each other. You just won't get the integrated experience that Synapse provides with Databricks. So if you're already on Databricks today, it's probably best to continue using Databricks, but you can also bring Synapse into the equation as well. If you're making a choice about which version of Spark you want to use today, I would look at other factors: do you need a SQL serverless answer, do you need a SQL dedicated answer, as part of your offering? If the answer to those is yes, then Synapse certainly comes into play at that point. From that perspective, as I said earlier, they solve the same domain in terms of cloud-scale unified analytics, but we've taken two different ways to do it. And the nice thing about Azure is, if you want to use Azure Databricks, it's right there, and you use the same ADLS Gen2 lake that Synapse can also use.

Awesome, all right, thank you so much, Euan.
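
To illustrate the point above about Azure Databricks and Synapse sharing one ADLS Gen2 lake, here is a minimal Python sketch of building the `abfss://` URI that a Spark session in either service could be pointed at. The helper name `abfss_uri` and the account, container, and path values are hypothetical. The commented-out read assumes an existing `spark` session that already has access to the storage account.

```python
def abfss_uri(account, container, path=""):
    """Build an ADLS Gen2 URI of the form
    abfss://<container>@<account>.dfs.core.windows.net/<path>.

    Hypothetical helper for illustration; it does not check that the
    account or container actually exists.
    """
    if not account or not container:
        raise ValueError("account and container are required")
    clean_path = path.lstrip("/")
    return f"abfss://{container}@{account}.dfs.core.windows.net/{clean_path}"


if __name__ == "__main__":
    # Placeholder names; substitute your own storage account and container.
    uri = abfss_uri("examplelake", "curated", "/sales/2020/")
    print(uri)
    # In a notebook on either service, with a live `spark` session:
    # df = spark.read.parquet(uri)
```

Because the URI and the file format are the shared contract, data written by one engine in an open format such as Parquet can be read by the other without copying it.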
Looking at the clock, we might have time for one more quick question before we wrap up with some of the action items and next steps. Euan, going back to you for one last question: could you tell us a little bit about the Apache Spark offering that's available within Synapse, and what kind of capabilities come with Apache Spark in Synapse?

That's great, thanks, Matthew. We take the core Apache Spark trunk, so the completely open source version, and we add a bunch of work to it, some of which Saveen actually showed on the ML side during his session: the Hummingbird technology, which is also open source. We also add in things like Hyperspace indexes, which is an open source project, and we add in .NET for Spark, which is also open source. So we add in a bunch of these technologies, including some Microsoft proprietary IP, particularly in the perf space, where we work with Microsoft Research. From that perspective, then, we add features. A lot of those features are to do with being part of Synapse, so we think of the most important feature in Spark, in terms of Synapse, as integration with Synapse. That means that things like single sign-on must work, the security model must work, VNets must work, and the monitoring experience must work. So that's our most important feature, and we'll continue over time to build on what we get from the Apache foundation, and, in the case of Delta Lake, what actually comes from the Linux Foundation. So there are multiple open source projects out there that we're able to integrate into the stack.

All right, thank you so much, Euan. Just a big thank you to all our experts for joining today, and also, most importantly, thank you to everyone in the audience for asking some great questions. It was a great opportunity for folks to ask questions and have us answer them live. What I'd like to do is show you some resources and calls to action. I believe a recording of this will be available for everyone, as well as these links in the slides, so take a look. There are some great next steps for trying out the latest Azure Synapse features with a free trial account from Azure. You can also try out our Analytics in a Day hands-on workshop.

There's also a step-by-step tutorial that we offer for Synapse Analytics, and you can also download a getting started toolkit. All these resources are available for you now, so we encourage everyone to try them out. Thank you so much, everyone, for attending and participating. If you haven't seen Saveen's session yet, I encourage you to; there are two more time slots. Saveen, is there anything you'd like to say to close it out?

Yeah, I would say, first of all, thank you for your time and patience. Synapse is a big step forward for us, and you can't imagine the years we've spent getting to this point. I can assure you that where we're going is just an amazing place, an absolutely amazing place. I'm glad you're on this journey with us, so thank you, everybody.

Thanks.

2020-10-01 14:58