Artificial Intelligence in Creative Industries and Practice

Hello, and welcome to Artificial Intelligence in Creative Industries and Practice, or, as some of my colleagues have been calling it, AI Curious. Today we are having a panel discussion with journalists, researchers, lawyers, and people who cross one or more of those disciplines to talk about developments in artificial intelligence. My name is Sophie Penkethman-Young and I am the manager of Digital Culture Initiatives at the Australia Council for the Arts. I would like to start off by acknowledging the traditional custodians of the land, the Gadigal people of the Eora Nation, and pay my respects to elders past and present, and extend that respect to the lands that you are coming in from today, and to any First Nations colleagues I have with me in the room.

We're just gonna start off this session with a quick rundown of what the Australia Council is doing in the digital space, and then we will cross to James Patel, who is a technology journalist at the ABC and who will be our facilitator today, and he will introduce you to the rest of the panelists. As I mentioned before, I am the manager of Digital Culture Initiatives, which means that I oversee the programs that sit under the Digital Culture Strategy, a strategy that was developed by the Australia Council for the Arts and released in 2021. I do like to say that it was in development pre-COVID, but it was, you know, really impacted by the developments that the whole sector made during that period of time. We have a series of programs that are part of the opportunities offered by this, and I'm just gonna throw to Julie, who is the producer, to just give you a quick rundown of all of those programs.

Hello, everyone. So under the Digital Culture Strategy, we have a few programs, including Digital Skills. The Digital Skills program is a series of workshops, seminars and intensives that focus on using digital and emerging technologies to develop creative practice, including this seminar right here. There's also the academy symposium next week, which is also a part of this program.

We also have the Digital Strategist-in-Residence program, which is a program that provides arts organizations with access to a specialist who will help the organization to develop and enable a digital strategy. That program just kicked off last week. It's really exciting.

And we also have Digital Transformations for Creative Industries, which is a partnership with UTS, a 6 week online short course which provides insight into digital strategy and new technologies for small to medium organizations. Another program is the Digital Fellowship program, which is in partnership with Creative New Zealand and brings together artists from Australia and New Zealand to explore and develop their digital practices.

Thank you so much for that, Joelle. Now that is enough from us, because I know that everyone has come here today to hear our panelists talk about all these very exciting developments that are happening in AI.

So I'm gonna pass over to James now. He's gonna just give you a quick overview and then talk us through our panelists and what we're gonna talk about.

Thank you, Sophie, and welcome everyone to the panel discussion. My name is James. I'm the tech reporter for the ABC specialist science team. To start off, before we get to the panel discussion, I'd like to give a quick introduction to the topic. We'll only go for 5 minutes. So 2022 was the breakout year for AI, and it's the reason we're here today with record turnout and lots of questions. I'll come back to 2022 in a minute. But first let's look at how we actually got here.

Personally, I grew up seeing AI in books and movies, and I had the vague idea that one day it would be invented. But as I got older, it sort of receded into the future. It was always 20 years away. We were told that AI would change the way we live, but it was really too abstract to care about. Then, about 6 years ago, in 2017, a team of engineers at Google invented a new AI model called the Transformer, and I only mention that name because it's the T in GPT, as in ChatGPT. A transformer is like a supercharged predictive text, you know, on your phone. It's an algorithm that predicts what comes next in a sentence, and it's trained on huge amounts of data: all the journals and books and articles and Reddit conversations, everything that we've digitized.

And the Transformer could write. In 2018, GPT-1 was released, in 2019, GPT-2, and in 2020, GPT-3, and that's when I sort of tried it for the first time. When I saw whole sentences of machine-authored prose scrolling across the page, I felt a sense of wonder.

Yeah, this is a machine doing something that I considered to be exclusively human: writing. You know, most of the time technology is annoying and frustrating and clumsy, but sometimes it can feel miraculous. That was 2020, and these models were bubbling around in the background. People in the industry were getting excited, but it hadn't really broken through. For most people it was still something in the future. 2022 was different, and probably what changed was that text-to-image generators became much better, much more available, much more commonly used. We had Midjourney, Stable Diffusion and DALL-E 2 released.

And, you know, we saw people posting these images on social media. It became kind of this fundamental thing, and then, at the end of the year, the kind of icing on the cake: ChatGPT was released. Basically it was GPT-3, which was 2 years old by that point, with a slight upgrade and a chat interface. The technology wasn't that much better, but it passed some kind of invisible popular threshold, and it suddenly became accepted that this real thing was finally here, after years of waiting. And there were emotional reactions to this. There was fear, disdain, contempt, also excitement and awe. We saw Nick Cave calling the AI's Nick Cave-style lyrics a grotesque mockery of being human.

But also I spoke with others, like architects and authors and visual artists, who talked about how they were using these AIs as useful ways to spark ideas and explore their imaginations. So I'm coming at this from a very open-minded perspective. You could say I'm AI curious. I'm kind of open-minded to the point of sitting here. I'm very invested. I work in journalism and I love to write, and AI's ability actually makes me quite uncomfortable. Sometimes I have this nagging sense that it devalues writing, and sometimes I marvel at what it can create.

And other times I'm reminded that all those very impressive it's also very dumb and that's a dangerous combination. It just makes up facts, makes it up. It sounds convincing, doesn't actually understand anything. It has no common sense. What appears to be a conversation. It's just prediction. It's just remixing human orit sentences and on top of that it's owned by enormous corporations. And it's trained on publicly available data that was arguably sourced.

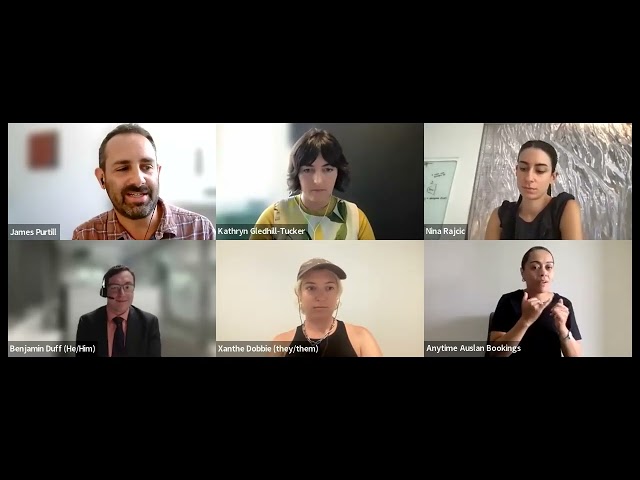

Unitsically, it's ripping off others. So I've come here to learn from our excellent panel assembled by the Australia Council we'll have 1 h of panel chat, and then 30 min of questions from the audience. So to introduce the panel. I'll go one by one, is an Australian artist, and filmmakers working across on annual flying modes and making their practice aims to capture the experience of contemporaryity as reflected through queer and feminist ideologies katherine is a noma technologist writer, digital rights activist currently living on what she explores.

The intersection of activism, science, section and text in a imagining radical futures. Dr. Nina, Rajitz. Nina is an interdisciplinary artist researcher and developer, who, exploring new possibilities of human machine relationships. Her recent work draws inspiration from the link between language and the self exploring the role of narrative in the sympathizing of meaning and the construction of identity and Benjamin Duff been as a commercial and intellectual property.

Lawyer at Maddox with an avid interest in AI. And how it is changing the intellectual property, landscape. Ben is involved in the provision of legal advice on a range of commercial issues, including copyright trademarks. It information and communication technology contracting to a range of Australian government and private clients. I'm sure people will have a lot of questions about copyrights. But then, so just to get the ball rolling, is that the kind of interesting the way that you approach AI, and how you might be already using AI in your work? Yeah, sure. First of all, thank you all for having me.

I'm tuning in today from unceded country down here in Naarm. AI is such an interesting one, James, and I think that I, too, would classify myself in the AI-curious sexuality group of this phenomenon. In my practice I use a bunch of different technologies, and I tend to just jump on whatever is happening at the time to get a bit of a lay of the land. So historically, that's been everything from virtual reality to augmented reality to just random generators on the Internet, and figuring out how to smash them all together. And, as you said, 2022 was very much the year for AI to become this sort of prolific mainstream thing. So naturally I had to have a go at that, somewhat more successfully than the last time I tried to jump on a trend, which was NFTs.

So in my work I collaborate with AI, I suppose. I think that the art that AI makes, specifically the image art, is incredibly banal, but it can be a really interesting starting point for creative practice, and I think that the writing stuff, like ChatGPT, is phenomenal. While you were speaking, I just wrote down a little list of programs that I've used in the last year across various works. I most recently attempted to train ChatGPT to write a chapter of my PhD thesis. It was not successful.

But why not? But I've also used Copy.ai, Writer, various text-to-image generators, DALL-E and DALL-E 2. I got really obsessed with Vocodes, which is now FakeYou.

I created a series of works across 2021 and 2022 that are all narrated by AI generators. There's one work, The Long Now, that's still up at ACMI online, I think in Gallery 5, which is narrated by a resurrected AI version of Alan Rickman, and the work that I did for the Sydney Opera House Shortwave program in '21 was narrated by David Attenborough. So, you know, you can really cut budget costs with this stuff.

It sounds like you're very open to using it, and I'd love to hear in a little bit how your students have been using it as well. Yeah. But Nina, you know I mentioned before Nick Cave and his sweeping criticism of the AI song lyrics.

I'm interested, you know, in your take on that. Your own work looks at AI in maybe a more nuanced way. It's sort of exploring the ability to connect with AI. So I wonder what you made of Nick Cave's disdain?

Yeah, I mean, I am a fan of Nick Cave, so I'm definitely biased. I really do value what people like. I think it's quite a nice takedown of AI, and GPT, I guess, especially. But yeah, I think that he kind of brought up that idea that anything generated by an AI system kind of falls onto this idea of, like, burlesque. That was really interesting, basically that it's just kind of replicating or caricaturing human art, and I really do agree with that. I think it's kind of hard to imagine this kind of GPT or Stable Diffusion algorithm coming up with anything truly new.

It really just spits out, like, an amalgamation of what it's seen in the dataset, like what it's been trained on. And of course that kind of creates a number of legislative and ethical concerns, which I'm sure others have more to say on. But yeah, I think, maybe reflecting on that article, what's maybe problematic about that conception of AI is that we're kind of trying to imagine these algorithms as artists or creators in themselves, basically like an independent system that's supposed to have some intention, and when you view it like that, I think obviously the output is going to be meaningless.

It's gonna be a meaningless caricature, because there's nothing really behind what it's outputting. Just like Nick Cave said, there's no kind of story of suffering, there's no overcoming or triumph, there's no kind of struggle of creation. But yeah, the way I've been using it in my work really is more of seeing the technology as an extension of humans and human creativity, and basically just another tool that we can use in our creative practice. But yeah, maybe we can talk about this a bit further. I think as long as they don't have self-awareness or intention or sentience, or general AI, whatever, I don't really think that the output will ever compete with human creativity.

Hmm, yeah, maybe we can get to that later, kind of looking at the way that these AIs might change ideas of creativity, kind of machine-augmented creativity. But you mentioned some of the ethical concerns there.

Catherine, I'm interested in what you'd say about some of the ethical concerns raised by both developing these AIs and using them. And just a quick anecdote: I was chatting to a school the other day, and they said one of their main concerns with ChatGPT wasn't students cheating but data privacy, which I was surprised by. They said, you know, what happens to the prompts that students enter into the bot? So, yeah, can you walk us through some of the ethical issues here?

Sure. Yeah. And we've brought up a couple of concerns already.

I love, again, what you've said about this kind of burlesque experience of artificial intelligence. It made me think of, like, robots cosplaying as humans or larping as humans, which is a framing that I'd never thought of before, so thank you, Nina. Yeah. So, data privacy. I think, James, you've already touched on a couple of concerns. So I guess, like, large language models require lots of data. They're pretty voracious beings.

More data means a greater training set, and platforms like ChatGPT are using publicly available data across the Internet. But publicly available, as you say, doesn't always mean ethically sourced, and arguably none of it is ethically sourced, because no one has consented to feeding their data or information into these machines. So data can be proprietary or copyrighted as well.

And we've got an IP specialist on the panel, so I won't go into too much about that, but it can also contain culturally sensitive information. And this is where we come into questions about Indigenous sovereignty and cultural protocols around knowledge sharing that a machine is not programmed to understand unless we teach it these protocols. And certainly the companies building these platforms are not interested in baking that into the foundations of these artificial intelligence machines. So I would always encourage people to read terms and conditions when we're using platforms like this.

But even then, that's very rarely an accessible, reasonable expectation to ask of people. How many times have we read through the terms and conditions of anything that we use? There are, you know, dozens of tools and applications that we are using in our day-to-day life. It's just not feasible. But I would presume that even when you do retain the ownership over the output of whatever prompt you provide to ChatGPT, which is what has been suggested in the privacy policy of this particular platform, the company can still use your inputs to further train the model. So you're essentially contributing new information to the database. So I would just presume that you're relinquishing power and relinquishing control over what information you are giving to these platforms, unless you're very careful or very cautious, or very confident that you're using, you know, an offline or open source model, or something that isn't connected to the cloud, which is unlikely. So yeah, don't put anything into it that could be commercially sensitive or confidential, or that you wouldn't be comfortable handing over to a stranger. And that is particularly concerning when we're thinking about children or people who haven't consented to giving up their information, or maybe don't understand the consequences of relinquishing this kind of data and this kind of privacy, or are too young to even technically, legally consent to that kind of data sharing.

Yeah, on that topic of unethical sourcing of data, I'd be interested to hear your thoughts later on, you know, what a visual artist can do to prevent their art being used to train future AI models. But just to go to Ben for a moment: I know there's already questions about copyright, and it's a huge and emergent and complicated topic. You know, just to sketch it out.

If I ask a generative AI to produce an image in the style of a certain artist, you know, is it the artist who made the images that the AI was trained upon who has the copyright? Is it the maker of the AI program, or is it me, who wrote the prompts? Or is it some strange combination of all of those? Kind of, what's the state of the law there right now, and how are legal systems around the world approaching this issue?

Yeah, thanks, James. So as you kind of already alluded to, it's really going to depend. I think the area where we can most kind of work for an answer is probably putting aside the question of what it's been trained on, or whether or not the person who created the AI tool can actually claim some sort of ownership of the output, and more looking at what happens when you enter in your prompt and something gets generated. And usually what we do when we do this kind of stuff is, there's a test we run through where we ask questions like, first of all, what is the type of work? Is it something that's covered by the Copyright Act? And given we're talking about maybe an image generator or GPT, we're normally going to lean to them being literary or artistic works that do attract copyright. There's a bit of a question of whether or not, because there's no human involvement at that point, it even goes that far. But this is just to say it's still a bit unclear there.

Once we know we're talking about an actual work, we then look at whether or not it's original, and this is where a lot of this is gonna come down to. I think, Xanthe, you said that AI generation is fairly banal, and courts have actually recognized that there needs to be a level of independent intellectual effort that occurs when you are working with electronic tools. So putting something in and just getting whatever is spat out, I mean, claiming ownership over it might not reach that threshold, that intellectual effort. So it will really turn on kind of the nature of the work and how you're going about producing it. Once we kind of have that, we then move to whether or not there's a human author behind it.

That's the next kind of step, and then we've also got case law that tells us that normally this requires some sort of manual input by the human, and it can't just be, like, dragging files or something like that. It needs to be some sort of shaping or directing of the material by humans. And once we have those 2 things, if you can demonstrate those 2 things, you can kind of say, hey, look, this is probably something that has copyright in it. But then we come to that final step, which is whatever the terms say in the actual tool that you're using. It is open for people to contractually agree to various different things, including that they've given the IP of something that they've created away. And I think that's always gonna be that last step that you need to check.

And it can also sometimes help in working out what other people think. So NightCafe, for example, doesn't actually say you're gonna own, or that there's any, intellectual property in whatever it produces. It says, hey, this is a really complex area of law. If there is intellectual property, you can have it once it's generated. But you don't own anything that goes into making it, or anything else like that. But you do get that final output. So that's kind of a rough idea of where we go to get that kind of answer.

But then that's all factored in with what it was trained on. If you tell it to, you know, rip off Nick Cave, for example, then maybe there's other questions there. And I mean, there's an open question as to whether or not the person who created the AI tool actually owns everything that comes out of it, because it's all just an extension of that tool.

And that's the actual work, for lack of a better word there. So the AI model itself is the artwork? Hmm, yeah, and everything that it created, if it is just an extension. But that's probably unlikely to flow fully. But it is another way to look at it as well.

So it's a really complex area. So there's no clear answer.

Well, I'd love to sort of hear your thoughts later on, you know, when we might get some clarity here, and what sort of cases are up already.

Yeah, it's interesting you say that, 'cause I know the Australian Government is currently open to submissions on the Copyright Act and whether or not it's working as intended, and I think until March it's open for people to put in their thoughts and ideas. So we might see a bit of movement in this tech space, because Australia has to date kind of been a bit reluctant in accepting some of the evolution of technology that other places have accepted.

Hmm. So I find that quite a confronting idea, that the AI model itself could be the artwork, instead of just kind of tentacles of the octopus. Xanthe, I'm curious about, you know, how your students are using AI, because I imagine that might be a bit of an insight into how it might be used in the future.

Yes, I've just been taking down so many notes as we go through this conversation. But yes, I taught a class last semester at RMIT, where I'm doing my PhD and where I teach. It was a practice-based subject loosely about the trajectory of cyberfeminism and cyborg theory, all the way up to Legacy Russell's Glitch Feminism, which came out in 2020. Each week I was setting different tasks just about looking at different technologies and how we can use them and embrace them as different mediums, and of course I had to cover AI, 'cause it was so hot at the time. But I found that, as Ben was just saying, the students were not able to create art with it. They got so wrapped up in the idea of being able to just put a text prompt into a form and get an image back, and it was like they weren't able to push past that step. And, as I was saying in my haphazard introduction to my practice, I find those images really banal, and I think that there is a version of art where you can really embrace these tools and create something that, as Ben was pointing to, has a human artistic voice. I'm not really opposed to being a tentacle of the AI octopus. I think that that's kind of amazing, like that AI is just this meta performance artist that will eventually overthrow us.

That's hilarious and devastating. But, you know, we've come to a very existential place these last few years. But the students, anyway, hopefully I can push them a little bit further this year across various schools that I am going to be inflicting myself upon. I do think that an example of a practice that is really successful in this area is somebody like Holly Herndon, who takes AI images and then creates these sort of incredible psychedelic transformative animations from them. In my own practice, where I suppose I think I've been successful, I have predominantly used text-based stuff. But I mean, I've used a bit of everything, but I think that I've enjoyed the collaborative aspects of using ChatGPT, or, I actually like the clunky ones.

I love Copy.ai because it's so weird. Like, the stuff that it throws back at you as marketing copy is insane. I recently wrote a collaborative eulogy to the edition of Runway Journal that I am currently guest editing, and I was trying to create a base script for my video performance work thing that is the editor's letter, that is actually a eulogy to the edition, which is themed Ghost. And I probably generated about 10,000 words in that process.

I had to go through 2 free trials to get that many words out of it, and then from those 10,000 words I condensed it down into, like, a 400-word script. So it became more of a collage process than writing, and writing something unique would have been much, much faster. But I really wanted to have the voice of the machine. I love a little cyber moment.

Nina, I wonder if you have anything to add there. I mean, Xanthe used the word collage, which kind of asks the question, or, you know, people in the chat have been saying it's similar to using a camera. Is there anything analogous here, you know, historical analogies to what we're going through right now?

Yeah, definitely. I mean, I'm sure everyone's kind of picked up on that, like, this is happening again with the new technology and everyone freaking out. But that happened when cameras were invented, and people thought it would replace painting. I think that kind of argument has kind of come through, and people maybe are realizing, I hope, anyway, this is the way I feel about it, that it is just another technology. There are obviously more complicated things going on, like ethically, and also how it's affecting kind of, you know, physical artistic practices, let's just say, but I think that analogy does work quite well.

And I think when you see it through that metaphor, and you're talking about kind of creating art through it and creative practice, the way I kind of like to see it, or the most hopeful kind of vision I have, is treating the AI, let's just say Stable Diffusion or any of the image generators, as a medium in itself. And I think if you view it as a medium, then it's not so much that if you just create one thing with this medium, then that constitutes it to be art. Like, if you take one photo, it's not necessarily a piece of art, but you can create art with that medium. I mean, I guess this is more of an art theory, art history conversation, but, you know, things like pushing that medium to its limits, or subverting the medium, I think those are all really interesting ways you can, I don't know, I guess, go forward and use it creatively, which is kind of what I've tried to do. And I think when Xanthe was saying you like to use the worst version of GPT, like the Copy.ai version of GPT, yeah, I really related to that. It's kind of similar with the diffusion models, where it's almost more interesting to me when they're a bit wrong, like when the output's a bit off, like the fact that you can't generate correct-looking hands, they always have the wrong number of fingers on them. Or my personal favorite is when you try to generate any kind of text. It basically looks kind of visually like it's text.

But it is just meaningless. And so, for some reason, the model was never actually able to learn a language when it was being trained on those billions, or however many, images. So I think kind of going in that direction, to your point, you're basically finding the errors of the medium and then exploiting them and basically finding something interesting in them. I think that's probably the way forward in terms of AIs, for me anyway.

Yeah, so you don't see any, maybe, inherent distinction between art that's made with contribution from AI and art that has nothing to do with AI? Are you saying, is there a difference? Yeah. Do you see any difference there?

I think there is definitely a difference. It's just, I think that's also a super complex problem that I'm also trying to figure out at the moment. Like, there must be. Yeah, there might be. I think there is. We are entering into kind of a new era. There is something more there. I think the AI has more agency, if we wanna think of it like that, than maybe just a camera. I think there's a lot of complex issues there. So I think it is different, but in a way it's a useful metaphor when you're talking about creating or creative practice.

It reminds me of that, and Ben, you would know about this better than I do, that copyright case a few years ago, when the monkey took the photo and there was a question over it.

Oh, yeah, that's right. Yeah, it's interesting. Not necessarily a monkey taking a photo, but I think someone mentioned photographs before, and it's useful to recognize that the Copyright Act explicitly calls out these things like photographs and cinematograph films and gives them specific provisions. And maybe that's the step where we need to go next with AI-generated material, to recognize it as a separate form of work, and that gives a bit more flexibility to recognize maybe the more ephemeral nature of the work itself, 'cause they're pretty fast and punchy. I don't see someone wanting to keep their AI art, maybe I'm wrong, for 70-odd years or something like that. Maybe someone else has a different view on that. But I think maybe that's the way we're probably heading, and I can already see it. Well, we've seen other countries grapple with the same issue, and most are not at a point where they're willing to recognize AI-produced art itself. So the US Copyright Office has denied it. Italy is starting to make moves towards it, but it's still very early stages. So I think that's where we're at, and I think it's just gonna be a legislative change.

Yeah, I was gonna ask, is this something Australia could legislate on to give clarity, even though the AI models are mostly based overseas? Could we legislate and just say, look, this is where copyright falls?

Yeah, we could for Australia. It would then be a question of whether or not that's recognized anywhere else. So I guess a broader picture to recognize is that, internationally, we have several large agreements that deal with intellectual property and how each country handles intellectual property, kind of as a standard, because obviously each country can make up their own laws and set their own laws in relation to IP and other bits and pieces, and so we need to have that standard. So, for example, despite our Copyright Act saying an author of copyright needs to be an Australian citizen, Australia also recognizes that citizens of other countries can generate copyright, and they'll be treated like Australian citizens for the purposes of that act. And so, even if we start in Australia, it might not have the global impact that we want, but it's something that will need to be dealt with at a global level, really, given how we're all connected now. In any event.

Catherine, can you see any role here for legislation as well?

Yeah, and it's tricky, because I think it's a common thing to say technology moves faster than legislative reform. I don't think that's a controversial thing to say. So I do feel like we're constantly playing catch-up, both in terms of copyright law and privacy reform too. So I don't know what a world looks like where we can have legislation that is more technology-agnostic and we can kind of keep up regardless, because as soon as we legislate for AI tools and platforms like ChatGPT, a new thing will come up, and then we'll have to figure out how to legislate for that as well.

So, yeah, it is. It is a to ricky thing to balance, I suppose. Like well, local local legislation and regulation and global regulation. When we're working with global platforms and and local artists and local users. But yes, I ben you mentioned the copyright actress under review, and people are able to make submissions.

I just wanted to touch on that a little bit, because it can be a little bit daunting to write submissions, but I encourage everyone to. You don't need to be an expert, and a submission can be really short, and you can just speak to what's important to you about copyright or about art. So if that is something that people are interested in doing, I highly recommend it. It can be a page long. It can be, "I'm an artist, my name is, and this is how copyright affects my life, and this is how I'd like it to be different." Sorry. Yes.

And, you know, before us, I was wondering if people can do anything to prevent their own images being used to train AI. I want to put you on the spot a bit there, but is there anything apart from, you know, not uploading them to the Internet?

Yeah, and it is a bit of a tricky thing to encourage, I suppose, individual action and responsibility for things that are very systemic. And there are powers that are much bigger than an individual artist or an individual user. And so maybe I'll separate them a little bit. Yes, you can take all of your images down, and not put them on the Internet, and not make them scrapable or shareable.

I know, and maybe the other artists on the panel can speak to this a little bit better, but I know that there was, you know, a big conversation around DeviantArt and artists being able to opt out of their images on that platform being used to train models. But a lot of it is about movement pressure, too, and the kind of activism that comes with putting pressure on not just platforms, but also legislation, to determine and have a bit of a say around what is acceptable and what feels ethical and what kind of changes we want to demand for both government legislation and also commercial platforms.

Just to jump in there as well. I think it's really interesting, because so far we've not seen kind of a uniform approach. So there's this issue going on of work being taken from the Internet and being used to train these AIs, and you've got a wildly different set of things.

ArtStation hasn't really chosen to do much, but then you've got people like, I think it's Getty Images, which has now set up a separate fund which it's using to pay people who have uploaded to it, to kind of acknowledge that that's been going on. And you're getting a whole gamut of different reactions. And I think it's a bit of a difficult issue as well, because how else is an artist supposed to showcase their work without the Internet? And maybe it's a case of just finding the ones that appeal to you, and making sure you don't go to, say, the ArtStations if you don't want them stealing or taking things.

Yeah. And right now, we're in this sort of interim period where the AI models are actually free to use, because they're all on trial, and so on. So, you know, you kind of get this flow of, you know, AIs being trained on artists' images, but also the artists can use the output for free. I imagine that's gonna change pretty quickly as this technology matures. I mean, how would that affect your work if you have to start paying, you know, potentially kind of corporate rates for the use of these models?

I'm not paying for anything. I don't pay for anything. In my work, like, AI is one thing, but every aspect of my work is stolen. Like, what I do is direct elements together; I sort of work within an expanded collage space.

So I'm always coming up against copyright stuff, and the Australian legal system is really all over the place. I seem to be kind of fine when I'm in a museum or an art gallery context, and then when I work in theatre spaces, they freak out. If I'm in film, then we have to pay for shit. It's like nobody really has a go-to on how any of this works. And AI will be no different for me. I will just infinitely create email addresses and free trials into the ether. I already have, like, 20 of them. I only just started paying for iCloud storage. Not iCloud, Google Drive. And it took a lot to sacrifice that $2 a month, but I just started forgetting my passwords, so it had to happen.

I mean, so it sounds like what you're saying is, artists will just kind of get around it.

Artists will get around it. I think that what I keep thinking of within this conversation, and I've been watching this chat as it develops as well, and looked at some of the things, I think about artists like Soda Jerk, who just, you know, prolifically use other people's work in their work. And that's the point, like, the whole point is that, you know, it's a reference, as it is with AI. I think that the reason that I find these AI-generated images banal is because you're just like, okay, well, that's a robot image, who cares? Putting it within context is the thing that gives it a meaning, which is true for any source material, or any collage practice, or any remix, or anything that's going to be produced by AI ongoing. And artists, the pirates, will find a way.

Yeah, and Nina, I mean, for me, I wonder if, when you have to pay a hefty monthly subscription for the use of an AI model, I wonder if that would, even though artists are pirates, whether that would change attitudes towards this technology, 'cause it seems to me to be quite unfair that an AI trained for free, or not for free, but on freely sourced images, is now outputting images at a cost.

Yeah, I mean, maybe I'll eat my words on this, but up until now the kind of programming, computer science, GitHub community has always come through. Like, I guess you would say that most money is in kind of that private industry, and yeah, they're probably leading the advancement. So they might have, like, the biggest models; they have the most money for training, or whatever it might be.

But there is always somebody working on it and trying to make it open source. And that's what it's been like up until now. I know OpenAI was great for a while, like when they first released GPT-1 and 2, it was just like, you can download the model. And, I mean, I guess the only thing that needs to be democratized is the ability to actually code and use these models without necessarily having a kind of interface that is more user-friendly, so I think that's the accessibility part. And also, you know, having access to a GPU is a big step still for a lot of artists and just people who wanna experiment.

But I think, you know, if you're in that space, the more kind of programming, GitHub space, it should, I hope, always be accessible. I don't think they could really kind of block that from us, but, I mean, I don't know. Maybe I'm envisioning too much of a utopia. This is how it's been going, anyway.

Yeah. On that topic of utopias, we've still got about 15 minutes left in the panel discussion, and I thought it might be cool to kind of go around the panel and sketch out scenarios for what could come next. You know, for example, why copyright is important, and what happens if you don't resolve these copyright issues, or you do, but you resolve it the wrong way. Or, yeah, some of the ethical issues, rights and so on.

Maybe Ben to start off with. I suppose the question really is, why is copyright important? Like, are there other cases where copyright is left murky for too long, and it creates lots of confusion, or it's decided the wrong way?

I think it always depends on where you're coming from, and I think what's really interesting, especially in the tech space, and the AI space in particular, in those areas, and GitHubs and stuff, is there is a weird fusion of private corporate interests, a lot of big proponents of open source or copyleft licensing, and artist communities as well, and they've all got very different interests in how they'd like to see an intellectual property regime kind of land. I mean, you've got anything from "everybody should be free to use whatever creative outputs and share and remix and do whatever you want," all the way to "they should all be locked down as tight as possible, for as long as possible, so I can continue to make a return on my investment."

And I think that's kind of why you need it: you need some sort of copyright act that sets the bounds of this, kind of saying, this is what we've decided is appropriate. Right now we've kind of recognized these private interests up to a point, be it, for artistic works, life of the author plus 70 years, give or take, depending on which part of the Copyright Act you fall under, but also recognizing things like, there are times where it's fair to use something, or it's a fair dealing to use it, or recognizing the interests of librarians and museums and other bits and pieces, and making sure it's still captured under the same umbrella, to make sure everybody understands that we're all operating on the same kind of framework. But what that framework looks like, I can't really begin to understand. But I think that's probably why.

So everybody has a shared kind of response pattern is probably the best reason for it.

And just quickly, it sounds like we're still a while from getting any clarity here. 5 years?

I don't know. It would really depend on, I think the first step is whatever comes out of this review of the Copyright Act, and that might give an indication as to where sentiment is, and to where the Government thinks they could head to next, or what they suggest next. I'm sure there's other larger organizations, international organizations, that are also making similar submissions or calls, but I'm not aware of anything at the moment.

Just a reminder to get your submissions in there. Catherine, I'm interested in your kind of dystopian and utopian vision here for how it might work out. Let's start off with the bad vision.

I think we have plenty of disturbing visions, and it's fascinating, because I think a lot of tech companies and, you know, tech founders and CEOs look at dystopic fiction as blueprints rather than warning signs. And so I think we have plenty of dystopian visions. We have Snow Crash, we have William Gibson, and Zuckerberg's kind of looked at all of it and been like, "Yes, that's what I want," rather than, like, "This is this horrific future that could happen if we're not careful."

In terms of utopia, I do often, yeah, think wistfully about the Berners-Lee vision of this free and open web. But I think that does constitute part of my utopia: free and open access to the hardware and software and network for whoever wants to have access to it. I do extend that to models, even models like Stable Diffusion, as tools that are interesting and might be able to inform our practice. I think that exists alongside rules and protocols that determine how we want our data and our art to be shared. I think it's up to the individual to be able to enforce that kind of agency and autonomy over, "Here's something I've made, and here's exactly how I want it to be shared," rather than those rules and protocols being dictated by multinational corporations. That's my utopia.

And your attitude seems to be one of kind of gleeful experimentation. Is that how you imagine the next, you know, the future rolling out?

I mean, the future is a difficult thing to imagine at this time. I think that I, like Catherine, sort of enjoy riding this utopia-dystopia dichotomy, and, you know, all sci-fi ever, as Catherine has said, has been leading up to this point. You know, we've arrived. The future is now. We don't really know what the next thing is; we don't have any mythologies around that yet, which is as exciting as it is terrifying. You know, we're at a real "what is the meaning of life?" moment, where we have fully arrived at this, like, cyborg interlocking of experience. And, you know, technology's technology; we're the people that are gonna screw it up, and we'll see what happens. Like, we're in a really strange, pendulous time. So sociopolitically, we have this sort of neoliberal hellscape emerging where Meta essentially owns the world, you know, Jeff Bezos is as rich as, like, 4 countries combined. To think that we have any power at this point is just absurd. We'll just see what happens with all of this data.

And, you know, it's a bit exciting. I don't know if it's like a millennial or Gen Z thing to be like, well, if the world's gonna end, I'd prefer to see it.

Yeah, I think there is that mood to it all. And on that topic of sci-fi, you know, it sort of feels like science fiction without AI at this point feels as dated as science fiction without mobile phones. It's like a kind of fork in the road; we've gone down that way. Nina, what do you have to say about the future?

I think I do have this really, like, one hopeful vision that maybe could happen, which is basically, I guess, seeing the kind of Stable Diffusion, DALL-E, like, I think the reason there's so much funding behind it is kind of purely not so much for, like, artists, creative people or whatever, but mainly for, like, content creation and social media. It's a really easy way to just get, like, low cost; you don't have to hire someone. So basically, if we kind of carry on in this direction, we'll see, you know, like 100% of what we scroll through in our feed being AI-generated, right? And then maybe that will eventually become so meaningless and just detached from reality, or something, that it might just fully take down social media in some way, and we'll all just feel like, this is completely pointless, let's kind of return to physical practice, like working with materials again, the real world. This is probably too hopeful.

That's kinda how I feel already.

Yeah, I can see kind of AI already nibbling at the edges of my industry. Like, I use it every day to transcribe interviews, and in the kind of content system that we have for posting on the Internet, there's already talk about automating some of the metadata, and things like that. In some other newsrooms, sports and business journalists have been replaced by AI. So, yeah, I'm excited if it means that I'd have to do less drudge work, but, you know, worried if I won't have a job at all at the end of it.

It might be time to go to some questions from the audience, and we have, thank you, Sophie, for sending a couple through. One of them is: "I was wondering about the privilege to access all this technology for creating art. Additionally, the digital literacy that people will require for appreciating this sort of art."

That might be a question for San Diego. Do you have any thoughts on, you know, it requires a certain kind of skill set to be able to both use these AI tools, but also to appreciate the art that's created by them?

Well, I mean, I think that's a broader question of privilege and access across all arts and education, and pretty much every other aspect of life in colonial, so-called Australia. But yeah, absolutely, like, we sort of have arrived at a point that will become alienating intergenerationally in the other direction. Though certainly, in my own practice, and in the way that I teach my students, and wherever else, as I said, I don't have any interest in the paid versions of these tools. There is a lot of open access software out there; most of the stuff that I use is apps on my phone. So, yes, there is a threshold there, which is, you have to have a level of access to technology.

Technically, I suppose everybody kind of does, with public libraries and facilities and all those sorts of things. You know, tech literacy is available to you at your local Apple Store, if you wanna go. But yeah, privilege is an issue everywhere, is my answer to that question, and this is no exception.

On that topic of sort of intergenerational alienation, I want to, you know, I think that probably is a little bit of what's happening here. Have you observed that with work that involves AI, or, you know, people wanting to create AI work, or kind of understanding what the AI work is trying to say?

Yeah, definitely seen a lot of mixed kind of levels of understanding, I guess, of the technology behind it. I think, I mean, also maybe 'cause it's so new, really it's just the last 4 years or something, it wasn't as generational. But, you know, the majority of people, like when I was kind of exhibiting my works in the past, don't really have a good kind of base knowledge of how powerful this actually is.

So how much does it actually know? What is it actually aware of? There's a little bit of this kind of, maybe, mysticism around that term. And I'm sure, 'cause it is so new to all of us, as we kind of grow older, I mean, I already feel it myself, so many things, like TikTok, or whatever.

But there will be some kind of gap where it just becomes really hard to grasp exactly what this is. And hopefully, I mean, the newer generations will be able to handle it.

This one might be for Catherine. It's a question around, you know, how the databases that the AI is training upon are kind of skewed. The question is, you know, based on the contents that are already out there, which are predominantly hetero, white, male, first world: "I'm wondering why you would want to source from this data unless you were producing satire."

That's such a good question, and, yeah, that's exactly right. Like, I think there's a tendency to think of AI, because they're machines, because they're technology, as unbiased, kind of like rational actors. But they, especially natural language processing and large language models, they're going to be as biased as the data that we put into them. And we need to be very mindful of that when we're assessing any of its output. So you're exactly right. It's going to be as white, as colonial, as capitalist, as the underlying dataset that is being fed into it, and that's going to be replicated in its output. It's not going to be this, yeah, unbiased output. And I know we're talking about creative practices in AI, and we're talking about art, and not to say that that biased output is less unethical, but these models are often trained for the purpose of surveillance and policing, and I think we need to be very responsible for the way these models are built, the way these platforms are developed and deployed, because of the kind of worst-case scenarios of what happens to that biased output, which is perpetuating a system where people are over-policed and incarcerated.

Yeah, so your point is that computer vision models, methods of facial recognition, of mapping images, you know, one application of that is surveillance.

Yeah, facial recognition, and also, like, predictive policing has been coming up again in conversation, and should immediately be thrown in the bin. But it's all kind of one ecosystem, I suppose, of large language models and artificial intelligence. They're being trained for various applications, but they don't exist in discrete markets. We don't have, like, our art and creative practices over here, and surveillance and incarceration over here. They're all kind of feeding into one another.

There's a question here. It's really a question around jobs and AI, particularly graphic design, and a bit of anecdote.

"I don't think AI can ever replace humans in making what we call art, but I do have very real concerns, as a creative industry student and soon-to-be graduate, about companies using AI tools to create digital content instead of paying humans to do that. How does the industry keep digital creatives and creative industries workers from being skipped over by AI tools to create simple content? Tools like ChatGPT can already do web design, and it's getting better at writing articles and other content." I can confirm that's the case. "I'm concerned that creative industry workers will not be able to find paid gigs with the rise of AI." That might be one for... Do either of you have any thoughts on, yeah, what this means for young graduate artists who are graduating right now?

Yeah, I definitely think that it's a daunting moment for many different professions. But I think that this same conversation is very easy to translate over onto things like gig economy and Uber, and it's a sort of crisis that we've been having with, you know, apps running the world already. Eugenia Lim has a really good work about this. Actually, I can't remember what it's called, but it's all about the gig economy and sort of giving ourselves and our lives to the robots and the apps, essentially. Yeah, I don't know what's going to happen, but learn to code. I feel like coding is gonna be one of the safe gigs.

ChatGPT can now write code.

Yes. Yeah. So maybe that's over. Get in-person skills, like baking bread. I have no skills for the apocalypse, like, pretty much just leadership at this point.

Okay. Just to jump in as well. Oh, sorry, Nina, you go ahead.

Alright, just a quick thing is that we've done a little bit of research at our lab, a few workshops, getting artists to basically work with this kind of technology. We were doing some specifically with music, AI-generated music and musicians working with that, and kind of just a workshop discussing whether they would want something like this. And something that came out of that which I thought was interesting was a lot of artists didn't really mind the idea that you could use this technology to kind of replace some of the grunt work, like some of the work in the paid jobs, where you're not necessarily, like, I guess it's a problem when companies themselves are outsourcing. But maybe, I don't know how it would be structured, but the fact that you wouldn't necessarily have to do that work in paid jobs, and you could actually focus more so on the actual creative pursuit that you're working on, so maybe that's a way to capture content from, I think, is the way I've been thinking about it.

Yeah, that's interesting. And sorry, Ben, I'll go to you in a moment. But just quickly, yeah, the architects I was speaking with were saying, yeah, we're using AI to spark images and so on, and that means that our architects have more time for design and less for the sort of drudgery work of, you know, planning an office with 100 desks, and putting in the order to the builder, and so on.

So that's kind of the optimistic vision for how it'll affect work. Yeah. Ben, what were you gonna say?

Oh, yeah, I'll just quickly add in as well, when you talk about architects, lawyers are seeing a similar problem. We've now got AIs that claim that they can beat parking tickets and other such things, and I'm not in that space, but I'm sure that's funny. And we've also got a Gold Coast lawyer who recently was in the news, saying that he uses ChatGPT to do his initial cuts of his advice, and then edits them down. So it's not just the art space, I think it's everywhere. But what I did see when I was playing around with some of these tools is, there's now also tools that allow you to even edit or change the AI output after you get it, so you can, you know, make it fit what you need. So you use it as a first generation, and then you just kind of play with it to make it fit the infrastructure.

Hmm! So maybe we're gonna see more tools around the initial output, and artists are gonna be working with those tools, and it just changes how you work, as opposed to necessarily anything else.

Hmm! If I can jump in as well. I think you touch on some really interesting points around gig economy, and the kinds of works that are being outsourced to AI platforms feel like the kinds of works that have been historically outsourced to underpaid workers in the global south. I think it's a really good reflection on the kind of work that is valued in our society. I noted particularly, I think it's ChatGPT had been outsourcing a lot of moderation work to workers in Kenya. And so this kind of gig economy and globalization of work is all embedded in all of these tools as well. I think there's a really interesting ethical question and a reflection of those practices here, too.

Just to circle back to my point earlier, Eugenia Lim's work is called On Demand. It's from 2019. And the other work that I would recommend people look up is Kate Crawford's Anatomy of an AI, which deals so well, deep diving down into these, like, really specific ethical dilemmas. So that is an amazing piece of text that kind of deep dives into Amazon's Echo, right down to, you know, where all of the parts of the technology are sourced and the mineral lakes that are getting destroyed from it, and, you know, how much Jeff Bezos is earning in comparison. Like, an Amazon miner, to make one of these Echoes, would have to work for something like 237 years to earn a day. Of. Ye

2023-02-20