AMD’s 128 Core MONSTER CPU – Holy $H!T

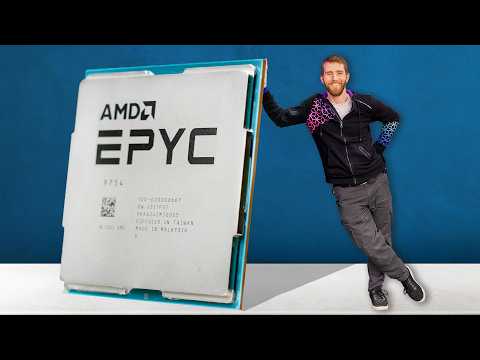

Ladies and gentlemen, AMD has done it again. The EPYC Bergamo 9754 crams 32 more cores into the already well-stuffed SP5 package of EPYC Genoa for a total of a whopping 128 cores in the palm of my hand. Whoa! Or is it? Yes, it is. But I can practically hear you guys furiously typing away, Linus, who's gonna spend $12,000 on a CPU? I'm never gonna use or even touch one of these monsters. But here's the thing.

In the same way that the technologies from multi-million dollar Formula One cars trickle down eventually to our daily drivers, the advances that debut in high-end data center chips almost inevitably make their way into your home PC. And there is a lot of goodness packed in here to be excited about. And besides, who cares if you ever need one? Holy s*** it's a 128 core CPU. Let's take this thing out for a rip. Rip, like this segue to our sponsor. Odoo, a great business needs a great website.

So make yours for free with Odoo's intuitive all-in-one business management software. Click the link below or watch till the end of the video to learn more. To achieve this level of core stuffery, AMD took the same impressive IO die found on their EPYC Genoa CPUs that we met last year, and they reworked the CCDs or core complex dies for higher density. I will not be popping the lid off of this thing to show you guys, but if I did, you would notice a couple of key things.

First is that in spite of the higher core count, there are actually fewer CCDs. That's because instead of up to 12 eight core dies, we're looking at eight 16 core dies. How on earth did they pack them in so tight? By using Zen 4C cores rather than regular Zen 4 cores. Now, Zen 4C is not like an Intel E or efficiency core.

It should have the same performance per clock and the same level of feature support, just packed 1.5 square millimeters tighter each. This means up to 256 cores in a 2P or two processor server, but it does come at a cost. You lose some clock speed and you lose some level three cache which doesn't sound like a very good thing and is probably the second thing you'd notice because in spite of having 33% more cores than Genoa, Bergamo ends up with only 66% as much level three cache.
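If you want to check that core and cache math yourself, here is a quick sketch in Python. The CCD counts come straight from the video; the L3 totals of 384 MB for Genoa and 256 MB for Bergamo are assumed spec-sheet figures that the video does not state, so treat them as placeholders.

```python
# CCD layout as described in the video.
# The l3_mb values are ASSUMED spec-sheet totals, not quoted in the video.
GENOA = {"ccds": 12, "cores_per_ccd": 8, "l3_mb": 384}
BERGAMO = {"ccds": 8, "cores_per_ccd": 16, "l3_mb": 256}


def total_cores(chip):
    """Cores per socket = number of CCDs times cores on each CCD."""
    return chip["ccds"] * chip["cores_per_ccd"]


genoa_cores = total_cores(GENOA)
bergamo_cores = total_cores(BERGAMO)

# Relative change in core count and in total L3 going from Genoa to Bergamo.
core_gain = bergamo_cores / genoa_cores - 1
l3_ratio = BERGAMO["l3_mb"] / GENOA["l3_mb"]

# L3 available per core, which is where the trade-off really shows up.
genoa_l3_per_core = GENOA["l3_mb"] / genoa_cores
bergamo_l3_per_core = BERGAMO["l3_mb"] / bergamo_cores

print(f"Genoa: {genoa_cores} cores, Bergamo: {bergamo_cores} cores")
print(f"Core gain: {core_gain:.0%}, L3 ratio: {l3_ratio:.0%}")
print(f"L3 per core: {genoa_l3_per_core} MB vs {bergamo_l3_per_core} MB")
print(f"Two-socket Bergamo server: {2 * bergamo_cores} cores")
```

Under those assumptions, each Bergamo core gets about half the L3 of a Genoa core, which is why the "less sharing of cached data" argument matters so much.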

Now, AMD's argument for this is that in a cloud native workload, we're not expecting to overcommit cores. So it'll be less impactful than you think, but what even is a cloud native workload? I'm so glad you asked. It's still a little vaguely defined, but examples might be things you use every day like search and social media and also tools you might not realize you're using every day like software, infrastructure, and platform as a service providers.

So in a way that makes sense, since each workload is likely to be contained to the core or maybe a couple of cores that are allocated to it without as much sharing of cached data across the CPU. Enough about that though, let's get this thing built starting with the motherboard, which wow, if you are into motherboards, this is a really sexy one. As I mentioned already, Bergamo uses the same IO die as Genoa, which means there's support for a whopping 12 channels of DDR5 ECC memory and as many as 128 lanes of PCIe Gen 5 with a single processor or up to 160 with a dual processor setup. And you can see evidence of that all over the ASRock Rack GENOAD8UD-2T/X550.

This thing is absolutely bristling with high end features to the point where in spite of it being a wider, deep micro ATX form factor, it doesn't even have room for everything that Bergamo has to offer. I mean, it doesn't help that the SP5 socket plus the accompanying VRM that can do anywhere from 360 to 400 Watts of power delivery takes up about a quarter of the board. Let's go ahead and get the CPU installed. This is my first SP5 install, but on the surface it looks pretty similar to what we've seen with previous AMD Threadripper and EPYC processors.

So you lift up this carrier frame, take out the cover. Man, that is a lot of freaking pins. 6,096. Really, is that right? That can't be right.

Go ahead and count them. We go ahead and slot that guy in a little something like that. Really? That's it? You don't even have to torque down the CPU. Does the pressure come from the cooler? Yeah. Oh, let's talk about that.

The reason these server CPUs have so many more pins than a consumer one is because of all the different end points that they need to be directly connected to. And one of the biggest mechanical challenges with these platforms is making sure that every single one of them is contacting correctly, because with even one missing, you could have a memory stick not show up or a PCIe slot that stops working. It's actually really common on EPYC and Threadripper builds, especially if the installer either doesn't torque the screws down enough or doesn't torque them down in the right order. As for the cooler we're using, this is the XE04-SP5. It was sent over by Silverstone and it looks surprisingly wimpy considering that it's cooling 128 CPU cores. But because the heat sources, the cores, are spread over such a large area under this metal plate, the IHS, we don't have to worry about the same kinds of concentrated hotspots that you might see on something like an Intel 14900K.

That means even the heat pipes that aren't dead center on the cooler can work much more efficiently and you can handle a 400 watt CPU with a little thing like this. The instructions call for anywhere from 10.8 to 13 inch pounds of torque on each screw and for it to be tightened in a star pattern, starting with the sides and then doing the diagonals one after the other. Of course, if you wanna be extra careful, the best thing to do is start all the screws in that order and then do a second pass checking all of the torque values. With that out of the way, let's move on to the really cool stuff, like RAM.

To help us take full advantage of this platform, Micron sent over 12 96 gig sticks of DDR5 5600, which would give us a total of over a terabyte of memory, if we could install all of them. But unfortunately, due to limitations of this form factor, we're only gonna be able to put eight on it. I know, I'm as disappointed as you guys are that we're slumming it with 768 gigs of RAM, but you know what? I think we're gonna be okay.
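Here is a rough sketch of that memory math. The capacity numbers come from the video. The bandwidth line is a theoretical peak that assumes one DIMM per channel, a 64-bit data bus per channel, and that the sticks actually run at their rated 5600 MT/s, which this platform may not do, so read it as an upper bound rather than a measurement.

```python
DIMM_GB = 96         # capacity of each stick, per the video
DIMMS_SENT = 12      # sticks Micron sent over
DIMM_SLOTS = 8       # slots this board actually has

# ASSUMPTIONS for the bandwidth estimate (not stated in the video):
DATA_RATE_MTS = 5600      # rated speed; the platform may run slower
BYTES_PER_TRANSFER = 8    # 64-bit data bus per channel
CHANNELS_POPULATED = DIMM_SLOTS  # one DIMM per channel

capacity_sent_gb = DIMM_GB * DIMMS_SENT
capacity_installed_gb = DIMM_GB * DIMM_SLOTS

# Theoretical peak: transfers per second x bytes per transfer x channels.
peak_bandwidth_gbs = (
    DATA_RATE_MTS * BYTES_PER_TRANSFER * CHANNELS_POPULATED / 1000
)

print(f"If all {DIMMS_SENT} sticks fit: {capacity_sent_gb} GB")
print(f"With {DIMM_SLOTS} slots: {capacity_installed_gb} GB")
print(f"Theoretical peak bandwidth: {peak_bandwidth_gbs:.1f} GB/s")
```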

See, look, it's not so bad, eight channels of memory. I actually think ASRock has done a great job of maximizing the IO, given the limited space they're working with. We have four full fat PCIe Gen 5 by 16 slots down here. So that's 64 of our lanes taken care of. Each of the larger MCIO connectors here is another eight lanes. Two of them also support these eight-port SATA six gig breakout cables.

So that's 96 total now. Each of the M.2 slots, that's another four lanes. And the same goes for the different MCIO. Yeah, this one right here. So what's that? 108, we're still 20 lanes shy. Maybe they did need a bigger motherboard.

AMD has described EPYC as being as much a PCIe switch as it is a CPU, and they're really not kidding. They've crammed as many expansion ports as they possibly could onto this board, regardless of whether you'd actually be able to use all of it. And we've still got 20 more PCIe Gen 5 lanes swinging in the breeze. We haven't even talked about the eight PCIe Gen 3 lanes, four of which are serving our dual 10 gig NICs, and one more going to our AST 2600 remote management system. That leaves three more lanes just hanging out.
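The lane tally above is easy to lose track of, so here it is as a small sketch. The connector counts of four x8 MCIO and two M.2 slots are not stated outright; they are inferred from the running totals of 96 and 108 in the video, so treat them as assumptions.

```python
GEN5_LANES_AVAILABLE = 128  # single-processor figure quoted in the video

# (label, how many connectors, lanes per connector)
# Counts marked "inferred" are ASSUMED from the running totals in the video.
gen5_consumers = [
    ("PCIe Gen 5 x16 slot", 4, 16),  # stated: 64 lanes
    ("MCIO x8 connector", 4, 8),     # inferred: 64 + 32 = 96
    ("M.2 slot", 2, 4),              # inferred: 96 + 8 = 104
    ("MCIO x4 connector", 1, 4),     # stated as "this one right here": 108
]

gen5_used = sum(count * lanes for _, count, lanes in gen5_consumers)
gen5_unused = GEN5_LANES_AVAILABLE - gen5_used

# The extra PCIe Gen 3 lanes: NICs and the remote management controller.
GEN3_LANES_AVAILABLE = 8
gen3_used = 4 + 1  # dual 10 gig NICs + AST 2600
gen3_unused = GEN3_LANES_AVAILABLE - gen3_used

for label, count, lanes in gen5_consumers:
    print(f"{count} x {label}: {count * lanes} lanes")
print(f"Gen 5 used: {gen5_used}, unused: {gen5_unused}")
print(f"Gen 3 used: {gen3_used}, unused: {gen3_unused}")
```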

No one? No one? Down the street? Okay, doesn't matter. ♪ Down the street, down, down, down, down. ♪ Point is, you toss in some minimal USB, a VGA port, and we have a truly EPYC amount of IO. Anyone? I don't, I don't get the thing. ♪ Down the street. ♪

I'm sorry, we'll stop that now. All that's left is to plug in some storage and figure out our power situation. See, I have no doubt that this 1500 watt unit from Corsair can power the machine. I'm just not sure how we're going to connect it. You see, there's no 24 pin power connector on here.

That would have taken up precious PCI Express and fan header space, but that's okay. Slowly but surely, the industry is moving away from the lower voltages that make up, realistically, most of the pins in a 24 pin connector and to 12 volt only designs. So what we've got instead is three eight pin ATX CPU power connectors, and then this tiny little four pin that takes an adapter off of your 24 pin just for the power switch.

That's the green and black one right here. Power okay, and then five volts standby, which is gonna allow you to wake the system with a USB device, for example. Now I can practically hear you thinking, wow, that power supply is being comically underutilized, but don't worry, guys. We're gonna find something power hungry to plug into it.

That's foreshadowing. But first, putting this thing on a test bench feels like a bit of a disservice, doesn't it? I think we need a case, and I think I know just the one. Oh, you're not getting that run, are you? Dang it. Oh, God. What? Oh, God, this is awesome. Am I giving me a hand with this? Oh, boy.

Oh, how much do the wheels cost? Surprisingly, nothing. They were included. What is this? Okay, this, this is a piece of history.

The year was 2003. I had a lot less facial hair and had never experienced gaming on a dedicated GPU. There's no clip for it. YouTube hadn't been invented yet, but AMD existed and was announcing their first foray into the workstation and server market, Opteron. This was also our first look at the 64-bit instruction set extension to x86 that Intel would ultimately license and is still in use to this day. AMD wanted to make a splash for the launch, so they held an event down in Texas and showed off a dozen prototype Opteron systems in everything from single CPU to eight CPU configurations, all in cases just like this one, complete with included wheel lock.

I love this pull-out drawer, too. Remember kitschy 5 1⁄4-inch devices? This one's a speaker. Anyway, after the event, the systems were decommissioned, so a bunch of them ended up being sold in a San Antonio computer store. At least one ended up on eBay, and several more were simply scrapped. This one, though, was bought by fellow YouTuber Wolfman Mods, who put it in storage for several years before it eventually made its way up here.

Now, we've cleaned it up as much as we dare to, but we don't really want to damage something so unique. Now, this case, not exactly designed for ATX motherboards, but it's so big, we can just toss our test bench inside it, I think. Perfection.

Completely tool-less installation. Oh, other than the side panel. But for that, all we need is a quarter turn with our trusty LTT screwdriver.

This is so janky. How did they think this was a good idea? How unexpected. A graphics card. Ha ha. Hey, we're booted! 768 gigs of RAM and enough logical processors that it looks like a quad socket machine from just a couple of years ago. Do you see how long that took to load? Look at this list! What? This is absolutely mind-blowing.

I mean, okay, it's only running at 1.8 gigahertz right now, but 73, 75 watts? That seems pretty reasonable. AMD's Radeon division could take some notes.

They have desktop GPUs that consume that at idle. Here we go. How fast will this go? Remember, guys, this is R23, not the older R20.

This should put the burn on. Are you freaking kidding me? One chip. One chip! It barely even managed to spool up! We touched 235 watts for five seconds. Maybe it was a fluke. Yeah, yeah, yeah.

Maybe it was a fluke. Maybe it was a fluke. We'll try again. We'll try again.

Is it done right? Yeah. I'd say maybe two-thirds of the cores actually got to 100. Holy crap! The rest just sat there at like 70. Wait! We might not actually be using enough cores! Custom number of render threads, 256. Can it go even faster than 86,000 points? It might've been using 256. It might just not have fully spooled up.
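For context on where 256 comes from, here is a minimal sketch. It assumes SMT is enabled with two threads per core, which the video implies but never spells out, and it uses Python's `os.cpu_count()` to show how many logical processors the machine you run it on exposes.

```python
import os

PHYSICAL_CORES = 128   # per the video
THREADS_PER_CORE = 2   # ASSUMPTION: SMT enabled, two threads per core

expected_threads = PHYSICAL_CORES * THREADS_PER_CORE

# os.cpu_count() reports logical processors and can return None.
visible_threads = os.cpu_count()

print(f"Render threads needed to load every logical processor: {expected_threads}")
if visible_threads is None:
    print("Could not determine the logical processor count on this machine.")
elif visible_threads < expected_threads:
    print(f"This machine only exposes {visible_threads} logical processors.")
else:
    print(f"This machine exposes {visible_threads} logical processors.")
```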

Oh yeah. There goes all your cores. Holy crap. I think that was even...

89,000 points. Guys, context here, okay? A Threadripper 2990WX, and that's a 32-core chip, is left completely in the dust. But that's okay, because Maxon has a new generation of Cinebench, R24. Let's see if that can bring this monster to its knees. I haven't actually used this before. It looks like it visually represents what's going on a little bit differently.

And it has all cores working on small chunks at a time, rather than each core working on a tiny, tiny, tiny little chunk. Now let's have a look at clock speeds. Our highest boosted core is just over three gigahertz. Most of them are in the 2.6 to 2.8 range though. Oh wait, no.

I lied. There's a bunch over here that are at 3.1. Oh my goodness. It's kind of all over the place. Now I want to look at clock speed. We've got more cores than I was expecting, boosting all the way to the maximum of 3.1 gigahertz.

But most of them are somewhere in the 2.8 to 2.9, three gigahertz range. That makes sense. Remember how we talked about these Zen 4C cores being more tightly packed than regular Zen 4 cores. That's why our max boost is just 3.1 gigahertz on this chip.

But that reduced clock speed means that this Bergamo part shares the same default TDP of 360 Watts with the Genoa chip before it in spite of the 32 additional cores. Plus, as ServeTheHome found in their testing, Bergamo can comfortably run with all 128 cores boosting for hours on end without neighboring cores interfering with each other. Maybe we need to do a quick pi calculation. Oh, hold on. First Cinebench is done and we are about four times the speed of an Apple M1 Ultra.
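To put the shared 360 watt TDP in perspective, here is a back-of-the-envelope sketch of the power budget per core. It simply divides TDP evenly across cores, which ignores the IO die and everything else on the package, so it is an illustration of the trend and not a real per-core power figure.

```python
TDP_WATTS = 360               # default TDP quoted in the video for both parts
BERGAMO_CORES = 128
GENOA_CORES = BERGAMO_CORES - 32  # "32 additional cores" per the video

# SIMPLIFICATION: an even split that ignores the IO die and uncore power.
genoa_watts_per_core = TDP_WATTS / GENOA_CORES
bergamo_watts_per_core = TDP_WATTS / BERGAMO_CORES

# How much smaller each core's share of the budget is on Bergamo.
budget_reduction = 1 - bergamo_watts_per_core / genoa_watts_per_core

print(f"Genoa: {genoa_watts_per_core:.2f} W per core")
print(f"Bergamo: {bergamo_watts_per_core:.2f} W per core")
print(f"Per-core budget is {budget_reduction:.0%} smaller on Bergamo")
```

A smaller slice of the power budget per core lines up with the lower boost clocks seen in the run above.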

That is, that's a fast chip, guys. It's not like, yeah, but whatever, it's Apple. That's a really fast CPU. Now it's time for more delicious benchmark.

Using y-cruncher, we'll be calculating pi to, oh, I don't know, 250 billion decimal places. How much RAM do we have? Sounds about right. We've got a terabyte. Oh no, we don't have a terabyte. Crap.

We have 768. Oh, sorry guys. Maybe a hundred billion decimal places.

Watch this memory usage go. They are not turboing as high in this workload. That tells us that this is a more demanding workload and we could be somewhat power limited or VRM limited. I don't think it's gonna be power.

We're only a little over 300 Watts. Could even be thermals though. We managed to hit 82 degrees on average. Mind you, that's the higher averaged die reading. The actual CCDs themselves, where the compute is happening, are all in the 55 range.

Kind of incredible when you consider how much heat is blowing off the back of this, but that's what having a more spread out heat load will do for you. Wait, we can check this. We are thermal throttling. Oh, crud.

We're not getting nearly as much performance out of this CPU as we should. Is there anything we can do about that right now? Okay, hear me out. We mist the fins of the heat sink. That's actually a terrible idea. That would probably work. Do we have a- If you put a spray bottle full of alcohol, you could make some alcohol mist.

Interesting, interesting, interesting. Okay, hold on. Hold on, let me just- This is how fire is made, kids. No, no, no, no, no, no, no, no, no. There's not gonna be a fire.

There's not gonna be a fire. Okay, hold on. This is the dumbest thing I've ever done. It's close. Buddy, it's close. I need actual water though.

Can't? Sounds like a challenge to me. I've got a shield here on the backside, so none of the water will end up where it's not supposed to be. Okay, let's see if that'll help it. Hey, hey, look at this. CPU power consumption, 300 watts almost. I don't really think it's working.

Realistically then, we're not gonna get a representative reading given that we don't have an adequate cooler, and this is the only cooler we have for this socket. So why don't we move on to a load that's not going to stress the entire CPU, like a video game. Come on, you guys, you knew we were gonna do it. We had to do it. Oh, where's our FPS? Oh no, 50 frames per second? I mean, oh man, that's why I got shot there.

Are you kidding me? Something I wanna clarify is there's nothing architecturally about this CPU that makes it bad at running games. I've seen it so many times in the comments. Oh, man, it's using a server CPU to run games, especially when we'll do, for example, older generation Xeon builds as kind of cheap and cheerful gaming rigs, but that is not the case at all.

This is the same as any other Zen 4 core, except that it's running at such a low clock speed that it's just not able to keep up with our poor 4090 that's like, wants to go. It wants to go, but it can't go. Oh, crap. Wow, that guy was terrible. No offense, buddy, but wow. We're using 96, this is just not working, guys.

I've also got another game to try out. See if something a little bit more multi-threaded is gonna do better. Now that's more like it.

Over 200 frames per second. Clearly, there is something about CS2 that does not like having a slow CPU. I mean, we know it runs way faster with a faster one, but I was not expecting it to be that bad.

We're getting 180 FPS, I'm only at 1080p, mind you, but if we had a CPU bottleneck, we should be quite CPU bottlenecked, regardless of the resolution we're running at. I mean, this is a 4090, so that's not gonna hold us back. CPU usage is at 0%. Oh, then it goes up to 25, then back to zero. What are you even talking about? You know what, it doesn't matter. The reality is, nothing we run here is a real test of what these chips are capable of or what they're designed for.

And prepackaged benchmarks for cloud workloads are few and far between, with most customers for a product like this either being the kinds of folks who build data center capacity, then lease it to third parties and let the customers figure out the rest, or the kinds that operate at such a large scale that they would expect to receive samples from either AMD or their own budgets that they would use to optimize their workloads and evaluate the suitability based on real-world testing. And honestly, seems like things are going pretty well for AMD on both fronts, with their massive lead in core counts and PCIe I/O. Everyone from Microsoft and Meta to Amazon and Google is building up infrastructure around Bergamo and other EPYC CPUs. And with Intel's Sierra Forest response, using stripped-down efficiency cores and being as much as eight months away, it doesn't look like that's gonna be changing in the near future.

So Opteron might've ultimately fizzled out, but EPYC looks poised to continue making massive gains in the data center. Well, at least until the competition from ARM-based competitors gets even stronger. Strong, like my segue game to our sponsor. Odoo.

Okay, it's 2023 and you're telling me your business doesn't have a website? What are you going from town to town, hawking your wares, maybe with a horse-drawn carriage? If you wanna improve customer engagement and increase conversions, you need a website and Odoo can help you with that. Their open-source platform is easy to use and best of all, any single app and its supporting apps are 100% free. And don't worry if you don't have any coding experience. Odoo keeps it simple with drag and drop elements and an intuitive website configurator, allowing you to quickly build your site and get back to what's important, that business that you're running.

Odoo provides unlimited hosting support and they even pay for your custom domain name for the first year. And if you need more than just a basic website, Odoo is scalable with over 50 apps available, meaning that you only have to install the ones that fit into your business needs and they're all fully integrated. So give your business the companion it needs. Visit the link down below and start creating a website for free.

If you guys enjoyed this video, especially this little piece of history, shout out Wolfman Mods. You can check out his follow-up modding efforts at his channel. We'll have that link down below. And if you're looking for something else to watch, why don't you check out our coverage of EPYC Genoa, the full fat non-compact version of Zen 4 EPYC. It has a lot more in common with the upcoming Threadripper 7000 CPUs. Remember that thing I said before about making its way to the desktop?

It's coming. It's happening.

2023-11-16 18:02