Personal Monitoring KLANG webinars

Hello and good morning from Germany! I hope you are all doing well and enjoying your time learning some new things with all the amazing webinars that are out there right now. We're trying to be a good part of that as well, and to use the time that we're locked down to update a couple of skill sets. Hopefully we can all learn something new.

Today's topic is Personal Monitoring for musicians using the KLANG processors. Over the course of the next hour we'll go through some of the background and some of the strategies we can use to get the most out of our monitoring situation as musicians. In-Ear Monitoring in general is still on the rise. It's coming, it's unstoppable, because it has a lot of advantages over conventional wedge monitoring. One of them, of course, is that we don't have to worry about feedback, unless we crank up our In-Ears really loud, which I've heard has actually happened with a couple of musicians. That should not be happening, so be careful with that. Another factor, of course, is that we have a personal mix.

It's just for us: one mix for each person, so we don't have to fight with our fellow musicians on stage about my wedge being too loud or whatever. We are also more or less independent of the acoustics of the venue, so no matter whether we're playing in a big concert hall, a small jazz club or on an open-air festival stage, the sound of our monitoring should be more or less consistent.

Speaking of consistency: no matter where we move on stage, our sound stays more or less consistent, so we don't have to stay stuck in front of a wedge to hear what we need to hear. We can actually move around all over the stage or even into the audience. And In-Ear Monitoring gives us the potential to protect our hearing, because we can listen at a lower volume. However, that is not a given. We can damage our ears with any kind of monitoring if we do it wrong and send too much level to our ears.

So that is also something we have to be very careful with. We at KLANG think we have come up with a couple of strategies and possibilities that make it a little easier to protect our hearing, because no matter how many limiters we put into our signal path, there are some factors that cannot be prevented unless we take care of some very crucial basics of mixing. We will look at that later. Mixing In-Ear Monitors can be quite hard, even for professional sound engineers, for multiple reasons. First of all: conventional stereo monitoring, or even mono monitoring, which we should never do, is a compromise. We hear three-dimensionally in real life, so confining everything we need to hear on stage between our two ears, inside our head, is a very unnatural way of hearing. That gives us some challenges with transparency, naturalness and connection to the audience, as well as, of course, with protecting our hearing.

In some situations you are playing on stage and you have a monitor engineer who is taking care of you. We have also given monitor engineers some very good possibilities through full integration into top-of-the-line mixing consoles, which makes it easy for them to work with KLANG and give you binaural, immersive sound on your ears. But in other situations, often theaters, houses of worship, TV productions and so on, it has become more or less standard that musicians mix themselves with a personal monitoring mixer. That is also not without challenges, simply because a musician is not necessarily a sound engineer. A musician knows roughly what they need in their mix, but doesn't always know how to get there. So it is very important to make it as easy as possible for musicians to find a good mix, and we will look at KLANG's approach to that in detail.

One of the most obvious parts of the approach we chose is a very clean interface: a very clean view of what we are doing when we mix for ourselves on stage. The levels are clearly color-coded, and there are name tags and little icons, so one glance tells us exactly which fader we want to move. We don't have to peer at some little device and figure out which combination of buttons and rotaries gets us somewhere. It's all there in plain sight. The same goes for positioning. It's very intuitive, with just a representation of a head where we can move the signals wherever we want them. That makes it quite easy.

We can use multiple apps at the same time, so each musician can have their own control device for their own mix. Again, that makes it much easier not to get in each other's way, and it gives us real personal control. The control app runs on a whole range of devices that you or your venue might already have: an iPad, an iPhone, a Mac, a PC or even an Android device. All of them can control the system wirelessly through a Wi-Fi router, so no matter where you are or how much you move around on stage, you can have your control with you. And if you prefer a haptic control, even that is possible by attaching a MIDI controller in the MCU format to your control device.

Let's take a look at KLANG in detail. I don't want to give you a full tour of everything KLANG can do, because as a musician you don't really need all of those features, but we'll look at all the controls that are relevant for you on stage during a show or a rehearsal. So let's switch over to my KLANG.

First of all, we have several app modes, which we reach by pressing and holding the Config button. There are four different modes. Admin Mode gives us access to everything in the device and should be reserved for the sound engineer or the system integrator. Show Mode gives us access to all the mixes but locks us out of things like the system settings, so that view can be used by sound engineers or techs at the side of the stage to help with the mixes, or even by a musical director on stage. We'll get to that in a second. Then there's Musician Mode, which locks us into one mix, so we only see the information relevant to our own mix. Personal Mode is very similar, but it gives us some extra pro options for professional musicians. So let's start with Personal Mode and take a little tour through it.
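To make the four app modes easier to compare, here is a small Python sketch of them as a permission table. The capability names (`system_settings`, `switch_mix`, `pro_options`) and the `allowed` helper are hypothetical and only paraphrase the description above; they are not part of KLANG's software.

```python
from enum import Enum


class AppMode(Enum):
    ADMIN = "admin"
    SHOW = "show"
    MUSICIAN = "musician"
    PERSONAL = "personal"


# Hypothetical capability names, paraphrasing the webinar (not KLANG's API).
CAPABILITIES = {
    AppMode.ADMIN: {"system_settings", "switch_mix", "pro_options"},  # "access to everything"
    # All mixes, no system settings. Whether Show Mode also has the Personal
    # Mode extras is not stated, so they are left out here.
    AppMode.SHOW: {"switch_mix"},
    AppMode.MUSICIAN: set(),             # locked into one mix
    AppMode.PERSONAL: {"pro_options"},   # locked into one mix, plus extra options
}


def allowed(mode: AppMode, capability: str) -> bool:
    """True if the given app mode exposes the capability in this sketch."""
    return capability in CAPABILITIES[mode]


if __name__ == "__main__":
    for mode in AppMode:
        print(mode.name, sorted(CAPABILITIES[mode]))
```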

First we select our mix. I'm selecting this very uncreatively named mix called Aux 5. Let's start with the faders. As I mentioned before, it's very easy to find the right fader thanks to the clear color coding, the name tags and the little icons.

The rest works pretty much like any mixing console you might already know: fader up makes it louder, fader down makes it softer. We have Mute and Solo buttons. If a Mute is active, we can see a little Mute icon here from every screen. So in case we're wondering 'Hey, where did that cowbell suddenly go?', a little Mute icon tells you that you might have just muted that signal. Then there's Solo, which is great for setting up your show and getting started with your mix, if you want to fine-tune a couple of signature signals. During the show, of course, we don't want a channel soloed: in this case we would only hear one kick drum microphone, which is probably not enough to play a show with. Just like the Mute, the Solo is indicated on every screen, with this little red icon here. So if we soloed something and want to get out of that, we can either deactivate the Solo on that channel, or use the Clear Solo button in the top right corner, which clears all the Solos.

On top of that, we have made it quite easy to work with the gain structure. Let's say we are the guitarist. We turned up our guitar a little bit and we feel: 'Okay, I still need a bit more guitar, but I've run out of fader. What do I do now?' I can just do the intuitive thing and press this little plus button on top of the fader that is maxed out. If I press it, you can see that all the other channels go down and the master volume goes up.
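The plus button's effect on the gain structure comes down to simple decibel arithmetic. This minimal Python model assumes that what you hear from a channel is its fader level plus the master level (both in dB) and that the maxed-out fader sits at a hypothetical +10 dB; `press_plus` and the other helpers are illustrative names, not KLANG functions. The same arithmetic shows why an accidental jump from a very low fader setting, discussed next, is so dangerous: every 20 dB is a tenfold change in amplitude.

```python
# Hypothetical model of the "plus" button on a maxed-out fader.
# Assumption: the level you hear from a channel is fader dB + master dB.


def press_plus(faders: dict, master_db: float, channel: str, step_db: float = 1.0):
    """Lower every other fader by step_db and raise the master by step_db.

    The pressed channel's fader stays where it is (it is already at maximum),
    so at your ears that channel ends up step_db louder and the rest is unchanged.
    """
    new_faders = {
        name: (level if name == channel else level - step_db)
        for name, level in faders.items()
    }
    return new_faders, master_db + step_db


def heard_db(fader_db: float, master_db: float) -> float:
    """Level of one channel at the ears in this simplified model."""
    return fader_db + master_db


def amplitude_ratio(delta_db: float) -> float:
    """How many times larger the signal amplitude gets for a jump of delta_db."""
    return 10 ** (delta_db / 20)


def power_ratio(delta_db: float) -> float:
    """How many times more power reaches the ear for a jump of delta_db."""
    return 10 ** (delta_db / 10)


if __name__ == "__main__":
    faders = {"guitar": 10.0, "vocals": 0.0, "kick": -5.0}  # guitar is maxed out
    faders, master = press_plus(faders, master_db=-10.0, channel="guitar", step_db=2.0)
    print(faders, master)  # guitar 10.0, vocals -2.0, kick -7.0, master -8.0
    # Accidental push from -40 dB to 0 dB versus one from -5 dB to 0 dB:
    print(amplitude_ratio(40), power_ratio(40))  # 100.0 10000.0
    print(round(amplitude_ratio(5), 2), round(power_ratio(5), 2))  # 1.78 3.16
```

Because the other faders and the master move by the same amount in opposite directions, everything except the guitar reaches your ears unchanged; only the guitar gets louder.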

So my guitar is actually going to 11 and even further, but i don't have to take care of all the gain structure issues. It's a very intuitive approach Same thing we can use, if we notice, that our faders are all too low and i'm not really using the whole fader range here. I can just go and press the plus button on the master fader and that will just elevatethe whole levels to give me a little bit more sound on our ears. I know that a lot of musicians try to work in a lower fader value here to always have head room to get louder and all that stuff. I would definitely not recommend to do that, because the more you're using the full gain that you have on your faders, the more protected your hearing is against accidental moves. If for example all of my faders would be down at e.g. minus 40 decibels and then accidentally i moved them up here

that would give me a very very loud increase on my ears. While if all of my faders are in this range here and i accidentally moved something up it would just make it a little bit louder, but not an extreme change. And those extreme changes are what are dangerous for the ears. This makes it quite a bit

easier. We also have the Second Function on the bottom right corner. If i press that you can see that the solo and mute buttons change to P and E buttons. P will lead me directly to the

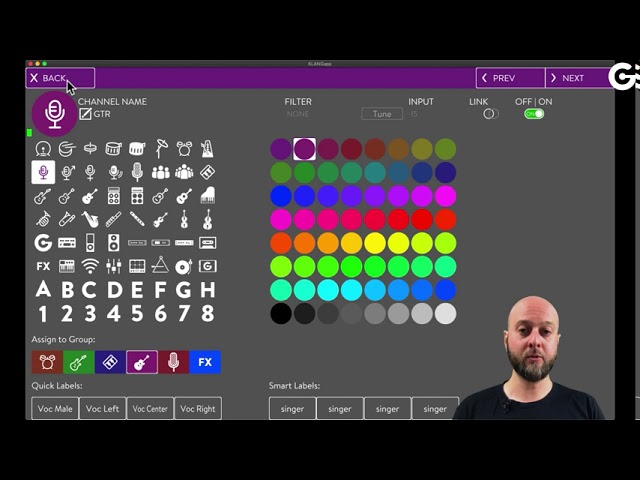

position of that single channel that i'm working on right now for some fine tuning of the positioning. and E will lead me directly to the naming. So if i want to rename, or recolor, or use a different icon for the selected channel, i can easily do that from here. There are even some quick labels which are preset by us for the different icons and smart labels, which remember the four last names that you typed in for each icon. So naming and changing that

is very, very fast for you. By the way: in Personal Mode, if you change a name: that is only changing the name for your view. So for example if you're the guitarist and you have a beef with the bassist you can call him Bassist of hell or Bassist from hell probably yeah whatever...

So now I can get a chuckle out of that whenever I look at it, but the bassist won't actually see it, because it's only for me. That also keeps the peace in the band.

There's a second mixing view that we can use if we press the Fader button again, which takes us to the groups. We can assign our faders to up to 16 so-called DCA groups. A DCA group is basically a remote control for multiple faders, so in this case my drum DCA moves all my drum channels at the same time. If we take a look at the faders, you can see that all my drum channels got two or three decibels louder than before. At the same time, when I'm using one of those groups, we can take advantage of the relative mixing functionality. Relative mixing means that whenever I move one group up, the other groups are turned down a little. You can see that here, and it also works the other way around. That is really helpful, again, for protecting our hearing, because instinctively, when we do a mix for ourselves or request changes from our monitor engineer, we always ask for more. That's a completely natural human thing: I would ask for more kick, more bass, more guitar, more keys and so on. The problem is that my overall volume slowly rises in my ears, and again, that's dangerous, especially if it's a slow change, because our ears get used to the new volume very fast and we don't actually notice how loud we are blasting them. With relative mixing, even if I turn up the guitar quite a bit now, the rest of the signals are turned down, so we're just working with the balance between those groups while our master volume stays more or less the same. That is exactly what we want: we want to change the balance, but not the overall volume.

Let's take a look at positioning. Positioning is very intuitive here. The first view we get is the stage view, or orbit view, which gives us a top-down view of a head. If I take a signal and move it somewhere inside the circle here, it is simply passed through in stereo: no processing, no 3D, no immersive sound for that signal. However, once I move the signal out onto the purple ring around my head, it is placed on a horizontal plane around my head. Very intuitive. You might ask: 'Okay, but what are good positions to work with?' We'll get to that in a little bit. If we press the Stage button again, we get to the elevation view. The elevation view lets us move signals not only around our head, but also above and below it. Just imagine this screen folding around your head in a circle. This point here would be right in front of your nose.

This point would be beside your left ear, this one beside your right ear, and these two points at the edges meet behind my head, so both are the same position: exactly behind us. Everything in this area up here is above our head level, and everything in this area down here is below our head level. We also find the groups here again, but in this case not for mixing, just to clean up the view. As you can see, right now I see all the inputs I'm using. If I focus on the guitars, I can only move the guitars, and all the other signals are locked. That helps us always keep a good overview. Another option, as I showed you before, is to go directly from the faders: press the Second Function key and then P, which takes us straight to the positioning of that individual signal. If I want to see all of them again, I just deactivate the select link with this button. That is as quick and easy as positioning your signals can get.
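The two group features described earlier, DCA groups and relative mixing, can be sketched in a few lines of Python. This is a hypothetical model, not KLANG's implementation: a DCA simply adds its offset to each member fader, and relative mixing is modeled as keeping the summed power of all groups constant, which is one plausible way to "change the balance but not the overall volume". The webinar does not say which law KLANG actually uses.

```python
import math

# Hypothetical sketch: neither law below is taken from KLANG's documentation.


def apply_dca(channel_db: dict, members: dict, dca_db: dict) -> dict:
    """A DCA adds its offset to every member channel, like a remote control for faders."""
    out = dict(channel_db)
    for dca, channels in members.items():
        for ch in channels:
            out[ch] += dca_db.get(dca, 0.0)
    return out


def total_db(levels_db: dict) -> float:
    """Combined level of uncorrelated signals: sum their powers, convert back to dB."""
    return 10 * math.log10(sum(10 ** (db / 10) for db in levels_db.values()))


def relative_move(group_db: dict, group: str, delta_db: float) -> dict:
    """Move one group by delta_db and shift all other groups by one common offset,
    so that the combined power (roughly, the overall volume) stays the same."""
    powers = {g: 10 ** (db / 10) for g, db in group_db.items()}
    total = sum(powers.values())
    others = total - powers[group]
    remaining = total - powers[group] * 10 ** (delta_db / 10)
    if others <= 0 or remaining <= 0:
        raise ValueError("this move cannot be balanced by the other groups")
    offset_db = 10 * math.log10(remaining / others)
    return {g: db + (delta_db if g == group else offset_db) for g, db in group_db.items()}


if __name__ == "__main__":
    groups = {"drums": -10.0, "bass": -10.0, "guitar": -10.0, "vocals": -10.0}
    moved = relative_move(groups, "guitar", 3.0)
    print({g: round(db, 2) for g, db in moved.items()})
    print(round(total_db(groups), 2), round(total_db(moved), 2))  # equal
```

Asking for "more guitar" this way pulls the other groups down by a common amount (about 1.75 dB in the example), so the overall level at your ears does not creep up.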

This next view lets you rename, reorder, recolor and stereo-link your channels. In most cases that will already have been taken care of by your sound engineer or system integrator, but in case it hasn't, this is where you can change it, even individually just for yourself. You can see the groups up here and the faders that are assigned to each group.

Assigning a fader to a different group is as simple as dragging and dropping it. It's a very intuitive workflow, and if I click here I also get to the naming again, so I can change the color, the icon and the name. Then we have local shows. Local shows are snapshots just for my own mix. There's an equivalent with global show files, which change the settings for all the mixes in the whole system, for all the musicians, but in Personal Mode we only take care of our own settings. So if I want three different settings for three different songs, I just take a snapshot, like a picture, which stores the current state of my mix.
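A local show is essentially a stored copy of one mix's settings. The sketch below models that, together with the recall-safe behavior and the limit of 100 local shows explained right after this, as a small Python class. `PersonalMix`, its methods and the flat state keys are hypothetical names chosen for illustration; they do not describe KLANG's file format or API.

```python
import copy

MAX_LOCAL_SHOWS = 100  # the webinar mentions up to 100 local snapshots


class PersonalMix:
    """Hypothetical model of one musician's mix with local snapshots and recall safes.

    State keys such as "master" or "volume/guitar" are illustrative only.
    """

    def __init__(self, state: dict):
        self.state = copy.deepcopy(state)
        self.local_shows = {}
        self.safe_keys = set()        # individually recall-safed parameters
        self.mix_recall_safe = False  # set automatically by recalling a local show

    def store(self, name: str) -> None:
        """Take a snapshot of the current mix under the given name."""
        if name not in self.local_shows and len(self.local_shows) >= MAX_LOCAL_SHOWS:
            raise ValueError("no room for another local show")
        self.local_shows[name] = copy.deepcopy(self.state)

    def recall_local(self, name: str) -> None:
        self._apply(self.local_shows[name])
        self.mix_recall_safe = True  # from now on, global recalls leave this mix alone

    def recall_global(self, snapshot: dict) -> None:
        if not self.mix_recall_safe:
            self._apply(snapshot)

    def _apply(self, snapshot: dict) -> None:
        # Copy every stored value except the ones marked recall-safe.
        for key, value in snapshot.items():
            if key not in self.safe_keys:
                self.state[key] = copy.deepcopy(value)
```

For example, after `mix.store("song 1")`, marking `"master"` as safe and recalling `"song 1"` restores the channel volumes but keeps your current master level, and any later global recall is ignored for this mix.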

I can take up to 100 of those. There are also a couple of options for recall safes. The mix recall safe is set automatically. That means if there's a global snapshot change and you don't like how it's done, and you want to be independent of it, you can just recall your own snapshot, and that will automatically recall-safe your mix. You're then protected from global changes and only make the changes you want to make. You can also recall-safe certain input channels, positions, volumes, the master volume and so on. Those are some of the deeper functions. In many cases you won't even need them, but for more complex shows it can be quite handy to have the option.

There's also a Cue function, which can be very handy for a musical director. Let me show you that with this slide over here. It lets us temporarily copy one mix to another mix. For example, say the drummer of your production is the musical director and knows a bit about mixing, so he can help his fellow musicians. If the keyboardist has a problem with his mix and doesn't know what he actually needs to do to fix it, the drummer can simply tap into the keyboard mix, hear what the keyboardist is hearing, help with some levels and positions, and then go back to his own mix without even leaving his drum stool. That's very handy, especially when there isn't much crew on your production: the keyboardist doesn't have to call the front-of-house engineer, who would have to run onto the stage and plug into some device to help. You can take care of it amongst yourselves on stage.

Then we have an EQ function. For that I need to go to Show Mode and select another device. Let me show you. We have integrated a, let's say, musician-friendly and stage-friendly EQ, because, as I mentioned before, as musicians we're not sound engineers.

So we don't necessarily have the experience, the knowledge or the time to fiddle around with EQs until they sound good. Our approach is therefore a preset-based EQ. I can select the Kick Drum preset and press the Tune button. This gives me a preset EQ curve that will suit most bass drum signals.

And I have just one control, which I can use with my finger on a touchscreen to increase the EQ changes, flatten them out or even invert them. I can also tune it to the instrument that's coming in here, so I just move this around intuitively until it sounds good and leave it there. I don't have to deal with frequency, gain, Q factor and all that stuff, which makes the EQ very intuitive to work with. This EQ is global for the whole unit you're using, so it behaves like the EQ on a mixing console: a kick drum has the same EQ curve for every musician. That means it should be taken care of by one of the musicians, for example the musical director. So this is the quick-and-dirty overview of operating KLANG. In most cases, that is what you need during a show.
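The idea of a one-control, preset-based EQ can be illustrated like this: the preset stores a complete curve, and the single control only scales its gains, so the same gesture increases, flattens or inverts the curve. In this Python sketch the preset values are placeholders, and treating "tuning" as a shift of all band frequencies by a number of octaves is an assumption about how such a control could work, not a description of KLANG's EQ.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Band:
    freq_hz: float
    gain_db: float
    q: float


# Placeholder numbers for a "kick drum" curve; NOT KLANG's actual preset.
KICK_PRESET = [Band(60.0, 4.0, 1.0), Band(350.0, -5.0, 1.4), Band(4000.0, 3.0, 0.8)]


def macro_eq(preset: list, amount: float, tune_octaves: float = 0.0) -> list:
    """Derive a full EQ curve from one preset and two simple values.

    amount scales every band gain: 1 = preset, 0 = flat, -1 = inverted, >1 = stronger.
    tune_octaves shifts all band frequencies together (assumed meaning of "tuning").
    Q values are left untouched, so the musician never has to set them.
    """
    ratio = 2.0 ** tune_octaves
    return [replace(b, freq_hz=b.freq_hz * ratio, gain_db=b.gain_db * amount) for b in preset]


if __name__ == "__main__":
    for band in macro_eq(KICK_PRESET, amount=1.5, tune_octaves=-0.5):
        print(f"{band.freq_hz:8.1f} Hz  {band.gain_db:+5.1f} dB  Q {band.q}")
```

The design choice is that frequency, gain and Q stay inside the preset; the musician only ever touches "how much" and "where", which is what keeps the control usable mid-show.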

There are many more functions, but they aren't always relevant directly on stage, because, again, as musicians we should be concentrating on playing well, not on playing around with technical stuff. I promised that we would look at good starting points, especially for the panning positions around our head and above and below it. For the most part you can use them very intuitively, but there are also a few things worth learning in order to get the most out of them. I will try to keep it as unnerdy and unscientific as possible and go through it very quickly, just to give you a couple of pointers.

What KLANG does is called binaural In-Ear Monitoring. We could also call it immersive In-Ear Monitoring or 3D In-Ear Monitoring. All of those work, but the professional term is binaural In-Ear Monitoring. Binaural hearing is what enables us as human beings to hear three-dimensionally in real life. We only have two ears; we don't have ears all around our head. So our brain has to work with specific cues to figure out where a signal is coming from. This is something we experience on a daily basis, basically since the first day of our lives. One famous effect that is a good way to describe how binaural hearing works is the Cocktail Party effect. I know that right now we sadly can't have cocktail parties, but we used to have them, and I'm very confident that we will be able to have them again very soon.

So let's say you and a friend are having a conversation at a cocktail party. There's a DJ, there's loud music, there are a lot of people. Everyone is chatting, everyone is laughing, so there's a whole lot of noise going on. If we actually took a measurement device and measured the volume, the voices of you and your conversation partner would not necessarily be louder than all the noise around you. But still, as you know, you are able to have a conversation with your friend. How is that possible? Well, your brain can simply focus on the areas around your head that are relevant right now. If your conversation partner is right in front of you, your brain focuses on the sound coming from exactly the direction of your friend's voice, and you perceive his or her voice as louder and clearer than it actually is. All the other noises around us are toned down in our perception, so we hear them less, or sometimes ignore them completely. You probably know that ignoring effect from being in a room with an air conditioner. Usually you don't even notice the A/C, but once somebody points out 'Wow, the A/C is annoying me a little bit...', you suddenly hear it very clearly. That's because your brain makes sure you only consciously perceive the relevant information; everything that isn't relevant, like an A/C, is simply ignored. That's a very handy thing to have, and it would be very, very cool to have it in In-Ear Monitoring. That's the whole idea behind the KLANG processors.

So in case you want to get a little nerdy, let's look at how that actually works. Our brain is constantly analyzing three properties of the audio that reaches our head: level, time and coloration. Let's take a quick look at each of them. First, level: if a signal is coming from over here, I hear it louder on this side than on that side. So far, so obvious and intuitive, right?
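For readers who want numbers, the time cue (the second factor, which comes next) is usually approximated in textbooks with Woodworth's spherical-head formula, ITD ≈ (r / c)(θ + sin θ), where r is the head radius, c the speed of sound and θ the azimuth of the source. The Python sketch below assumes a typical head radius of 8.75 cm and c = 343 m/s, and it ignores the frequency dependence mentioned in the next paragraph, so treat it as a rough estimate rather than a model of your own head.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # air at roughly 20 °C
HEAD_RADIUS_M = 0.0875      # common textbook value; real heads differ


def itd_seconds(azimuth_deg: float, radius_m: float = HEAD_RADIUS_M,
                c: float = SPEED_OF_SOUND_M_S) -> float:
    """Woodworth's spherical-head approximation of the interaural time difference.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    The formula is only meant for 0..90 degrees (one side, frontal half).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("use an azimuth between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (radius_m / c) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(f"{az:2d} deg -> {itd_seconds(az) * 1e6:6.1f} microseconds")
```

With these assumptions, a source directly to one side reaches the near ear roughly 0.66 ms before the far one, a tiny delay that the brain nonetheless uses all the time.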

Our brain uses that all the time. The second factor is time. If a signal is coming from here, I hear it on this side first and on that side afterwards. You probably know about the speed of sound: sound needs some time to travel through air or through other materials, and each material has its own specific speed of sound. So if a signal is coming from over here, it first hits this ear, and only after it has traveled over to the other side does it hit the other ear. Little fun fact: that delay is actually frequency-dependent, so some frequencies of that signal arrive on the other side at different times than others. But you don't have to worry about that; it happens completely automatically, and our brain deals with it all the time. The third factor is the coloration of the sound. The coloration comes from the individual shape of our outer ears, and to a certain extent also from the head and torso, but the outer ears are the most important factor. If a signal is coming from over there, it hits specific points of our outer ear and is reflected from there into our ear canal. Depending on the shape and consistency at that point, every angle from which sound arrives at our head has a very specific sound or coloration, which we call a frequency signature. Our brain knows exactly how something sounds that comes from there, or from there, or from there. The interesting thing is that we don't even notice it in real life.

But I invite you to do a little experiment after this webinar, because it's quite interesting to see how our brain deals with all of this and to feel the mechanics behind it. Take your phone, play some music from Spotify, iTunes or whatever, and put it on a desk beside you. If you turn your head a little, the music sounds pretty much the same. But once you isolate your hearing, so you close your eyes, focus only on the sound and slowly turn your head, you will suddenly notice that it sounds different at different angles relative to your head. Again, our brain is constantly analyzing those three factors and then removing them from our perception, so we perceive sound as linear even though we cannot actually hear it linearly, because our anatomy makes that impossible. Okay, so much for the nerdery. If you have any questions, we'll definitely have time to go into more detail at the end of this webinar, where you can fire away with all your questions.

Another thing that is really interesting for us, especially for finding the right placement for the signals we want on our ears, is psychology. When we were growing up as kids, we heard the voices of our parents, who were taller than us back then, from in front of us and slightly above. So some of the first important cues we perceived as young human beings came from this area over here. Also, on an instinctive level, a big animal attacking you would most likely come from this threatening position here. So our brain is conditioned to always pay attention to what is happening in this area, and that makes it a very interesting place to put signals.

For example, if I'm a singer, I definitely need to hear my own voice very well, and I need a piano or something else for harmonic context so I can hit the right pitch. In a conventional stereo In-Ear mix, I would have to turn those signals up very, very loud, and that would not make my mix sound any more beautiful. It would just be a functional mix. However, if I place those important signals at this point over here in our immersive environment, my brain will automatically focus on whatever is happening there, so we don't need to turn them up that loud and we still have a very clear idea of what is happening there. So that's one of the focus areas. There's a second focus area, which is behind me, slightly out of my field of view and, as you can see here, a little lower. For me, that would be roughly this area here. Our brain perceives signals coming from that area as not important, but also not dangerous, and this is where we can place all the other signals as a good starting point: my drums, my bass, but also distorted guitars, big keyboards, playbacks and whatever else you need or want to hear for a really nice, fat, rich-sounding mix. All those signals in the back can actually be turned up quite loud in your mix.

This gives you a really fat mix, but all that great-sounding stuff back there never gets in the way of my center of attention, my literal center of attention, in the front. That gives us very, very good separation of signals, independent of levels, so it's a very good thing to keep in mind: keep your own signals in the front and slightly elevated, and put all the other stuff in the back. You can also use the focus position for things like a click. If you're a drummer, instead of blasting your ears with an incredibly loud click, you can just embed the click in the mix. The click doesn't have to be louder than all the other signals. It can simply be placed in one of the focus areas, ideally with no other signal in its way, so your brain can always focus on it whenever you need it. Whenever you start drifting a little and need the click, your brain will automatically look for that anchor for your timing. So that's positioning. We will look at it again in detail in a moment with our live setup, and of course we will listen to it.

But before that, I want to talk about finding the right levels. I know a lot of musicians who, whenever they have to mix themselves on a personal monitoring system, basically just fiddle around with the levels: sometimes it works out, sometimes it doesn't. But there are some good strategies for getting a quick starting point. One interesting approach is to move all the faders to roughly the same level, say -20 or -10 dB. I would recommend -10 dB for all the signals. So everything is at the same level now, and we already get some separation from our positioning around the head. Of course, that only works with an immersive In-Ear Monitoring system, not in stereo, let alone mono. I will show you that, and the difference, in a second. Then we raise the level of the crucial signals we need to pay attention to, for example the kick and snare, and maybe the keys for harmonic context, by maybe 3 or 4 dB above the rest, just to give them a bit more precision and attention. Then we take our own signal, which in the example I'm showing here is the lead vocals, and make it louder, maybe 6 or 7 dB more. Like this, we have a very, very good starting point: we can hear everything in the mix, there's a clear boost for the crucial signals we need for context, and a boost for our own signals, so we can control our own voice or our own instrument very easily. In this example I have also muted the ambience mics and the reverb returns for now. That depends a bit on how you prefer to work, but when you're just getting started with your mix, it can make sense to mute those signals completely at first and take care of all the individual signals. Once you have a mix that makes a good starting point, you start bringing in the ambience mics and effect returns.

Why don't we take a look at that and actually listen to how it might sound? For that, I would ask you to wear headphones right now, so if you don't have any, just run! Run, run, run really fast, and then we can listen... I have prepared a starting point here with all the signals at -10 dB. You should be able to hear something already. Now you only hear my voice; now you hear the music.
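The level recipe described above, combined with the placement advice, can be written down as a small Python function that produces a first-pass mix. The role labels and zone descriptions are hypothetical; the boosts use the lower end of the ranges mentioned (3 dB for crucial signals, 6 dB for your own signal, read here as relative to the -10 dB base); and only your own signal goes to the front, following the "keep your own signals in the front" rule. Everything it produces is a starting point to adjust by ear.

```python
from dataclasses import dataclass

BASE_DB = -10.0         # every fader starts here
CRUCIAL_BOOST_DB = 3.0  # "maybe 3 dB, maybe 4 dB" above the rest
OWN_BOOST_DB = 6.0      # "maybe 6 dB or 7 dB" more; read here as relative to BASE_DB
ROLE_BOOST_DB = {"own": OWN_BOOST_DB, "crucial": CRUCIAL_BOOST_DB}


@dataclass
class Signal:
    name: str
    role: str  # hypothetical labels: "own", "crucial", "other" or "ambience"


def starting_point(signals: list) -> dict:
    """Return a first-pass level, mute state and rough zone for each signal."""
    mix = {}
    for s in signals:
        if s.role == "own":
            zone = "front, slightly elevated"
        elif s.role == "ambience":
            zone = None  # placement of ambience mics is not covered here
        else:
            zone = "behind, slightly lower"
        mix[s.name] = {
            "level_db": BASE_DB + ROLE_BOOST_DB.get(s.role, 0.0),
            "muted": s.role == "ambience",  # bring ambience and reverb in later
            "zone": zone,
        }
    return mix


if __name__ == "__main__":
    band = [Signal("lead vox", "own"), Signal("kick", "crucial"),
            Signal("keys", "crucial"), Signal("bass", "other"), Signal("amb L", "ambience")]
    for name, settings in starting_point(band).items():
        print(name, settings)
```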

All our signals are at the same level right now, -10 dB. First, let's compare how that behaves in mono, stereo and 3D. You should already hear a difference. What you probably noticed is that in mono the mix is really, really hard to work with, because there's no clear difference between the signals. It's very hard to hear details, especially things like keyboards; they nearly disappear in the mix. Stereo makes it a little better, because we now have one dimension to work with, but in 3D we can actually hear everything quite clearly already. We're not there yet, but that's a very good starting point, even with all the faders at the same level of -10 dB. Now let's increase the level of some signals that we need to rely on a lot. Right now we're in a vocal mix, so let's do that for the vocals. There's quite a bit of definition in the mix already. For a singer's mix, the lead vocals would probably have to be a bit louder still, so let's take care of that.

Now the mix already has quite a bit of definition, and I'm pretty sure every musician could work with this as a starting point. From there, of course, we can make changes: if a signal is too loud or still too quiet, we adjust it. But our mix already has a lot of definition, so within a minute, or even less, we have a good starting point that gives us everything we need to hear to do a sound check or, for example, to warm up together. I've stored a couple of snapshots with exactly those changes, so we can compare them easily again. Let's try that. Let's do the same thing in mono. And in stereo. Let's also look at this from the perspective of other instruments. For the vocals, I placed the lead vocals in the front at a slightly elevated position, as I showed you before. I placed the keyboards, the guitar and most of the drums and bass behind us, so we already have very good separation for a lot of signals and a natural focus just through the positioning. For the drums, I did it a little differently: the drums are completely in front, as are the guitars, and only the vocals are moved out of the way. Let's listen to the drums. Let me switch that over. Okay, so much for that. Please let me know in the comments if that made sense to you and if you have any questions about it, because we're slowly moving towards the questions part of this webinar. While you are gathering whatever questions you might have, let me talk about two more factors that are important for you as musicians.

There's one big thing that we see on stages all over the world: musicians using just one in-ear and keeping the other one out. You've probably had some discussions with your sound engineers about that already, and about why it's not a good idea, for multiple reasons. The intuitive idea behind taking one ear out is to get some ambience and some localization.

That sounds like a good idea, but it comes with some side effects. First of all, when we're using KLANG, we're in a binaural, immersive In-Ear Monitoring situation, so we don't actually need that: we have the orientation, the spatialization and the separation between the signals, and we can use many more ambience microphone signals in our mixes. So we actually keep a connection to the audience.

So there's not much need to take one ear out. But talking about the side effects, you can do a little experiment yourself. If you have a headphone amp or anything else with a knob to adjust the volume, play some music and put your In-Ears in. Wear both of them, turn the volume all the way down, and then slowly bring it up until you find a comfortable listening level. Make a little mark where the level is. Then turn it all the way down again.

Now take one ear out and, without looking at the knob, turn it up until you reach the same perceived level you had before. When you do that, you will end up at roughly 10 dB more. There's a well-documented effect called binaural summation, which has nothing to do with the binaural processing we're doing; it's just about having two ears. It says that with both ears you need about 10 dB less level than with one ear to get the same perceived loudness. If we think that through, it means that if you take one In-Ear out, you will blast the other side with about 10 dB more level. That is ten times the acoustic power, or roughly three times the sound pressure, on that one ear, so it's really dangerous for your hearing. Be very, very careful with that. I would definitely recommend never doing it. And feel free to do this little experiment yourself. It can actually be quite shocking how much level you blast yourself with, especially if, after turning up the volume with just one ear in, you put the second In-Ear back in: then you will notice how loud it actually is. So be very, very careful with that.

Another factor I want to talk about before we get to the questions is hearing fatigue. You probably know the situation: you did a soundcheck, you either did your own mix or asked the monitor engineer to do one for you, everything was fine and you could start playing. Then, after roughly one and a half to two hours, especially in a longer rehearsal or recording session, you suddenly feel like something in the mix has changed, because you have lost all the precision. Sometimes you ask your sound engineer whether they changed something, and they deny it. The thing is, what you're feeling there is hearing fatigue. Listening to a stereo mix is an immensely demanding process for your brain. It's an active process: your brain needs to actively focus on dissecting the audio and giving you all the information you need to play.

After roughly one and a half to two hours, your brain is too tired to keep going, so it just gives up. It no longer tries to separate those signals, and the result is that you feel you have lost all the precision in your mix. In an acoustic situation without any monitoring, or with immersive monitoring (which is basically very similar to an acoustic situation), it's a passive process for the brain: it dissects the audio in the background and makes sure everything is presented to you on a silver platter. Your brain only needs to focus on whatever is interesting right now, so it doesn't use up extra energy. So immersive In-Ear Monitoring also leaves you quite a bit more energy for your performances, especially for performances longer than one and a half to two hours.

Let's move to the questions! I know I just bombarded you with a lot of information, so let's see if there are any questions, if anything is unclear, or if I should repeat something or go into more detail. If not, you can also send us an email or a message on Facebook later, and we'll be happy to answer all your questions. We will also most likely repeat this webinar, so there will be another chance to ask questions live and listen in. If you are sound engineers, feel free to forward this to your musicians and invite them to join us next time with all their questions, because it also makes your life quite a bit easier if your musicians are more or less independent and get to a very good mix very quickly.

I can see there are no questions at this time, which is great: either you all fell asleep, or I did a more or less good job explaining it. Thank you very much for your attention and your time. Stay safe, stay healthy, and make sure to tune in on Thursday at 5 PM CEST, when we will ask the question 'How do you immerse?' again. This time we have a very special guest: Pascal Schillo, the guitarist of Eskimo Callboy, a band that has been using KLANG as a personal monitoring system at their concerts for, I think, more than five years already. They are quite a big band now and play really big shows, but they're really happy mixing themselves, and they have quite a bit of experience with strategies and approaches for getting a very good monitor mix out of it that they are happy to share with all of you.

So, that's it from my side. I hope to see you again there, and keep an eye on our website for future webinars and topics. If you have any suggestions, let us know, because this webinar today was actually a suggestion we got from several people who watched our webinars, so we definitely react to that. There's actually one question in here from Guillaume: 'Hi, I'd like to know about remote OSC control, another webinar.' Yes, we actually did that webinar, and it is still online. My colleague Markus will post the link in the comments. We will also repeat that one. It's a full webinar with a lot of information on MIDI, MCU and OSC. Tom, hey buddy! 'This should be on YouTube soon, so I can link it to musicians so they can understand what it is.' We are not sure yet when we will put it on YouTube.

It will definitely come to YouTube, but not right away. I think we're going to leave this one online on Facebook, so there should be a permanent link that you can distribute to your musicians afterwards. Let's see if I missed anything. Hey Hugh, thank you very much! Hugh was actually one of the people who gave us the idea for this webinar, so thank you very much, Hugh! That was very helpful, and I'm glad you enjoyed it. That's it from my side now. Have a wonderful day, stay healthy, stay happy, have a good drink, and see you in our next webinar. I'm looking forward to it! Bye!

2021-02-07