Responsible AI: Protecting Privacy and Preserving Confidentiality in ML and Data Analytics

Hi everyone, I'm Sarah Bird from Azure AI, and today I'm going to tell you about some of our responsible AI capabilities in Azure. When we think about developing AI responsibly, there are many different activities we need to consider, and it's important that we have tools and technologies that support us in each of them. In Azure, we're developing a range of tools to support us on this journey. In this session, I'm going to talk about technologies that help protect people, preserve privacy, and enable you to do machine learning confidentially. We also have capabilities for gaining a deeper understanding of your model through techniques like interpretability and fairness. We're adding new capabilities to our platform and recommending practices that give you complete control over your end-to-end machine learning process, so that it is reproducible, repeatable, and auditable, and has the right human oversight built in.

A lot of these responsible AI capabilities are new, and they are actively being developed by the research community and in practice. At the same time, we're already creating AI, and we're already running into many of these challenges in practice as we do. So we felt it was essential to get tools and technologies into the hands of practitioners as soon as possible, even while the state of the art is still evolving. To do this, we have been developing many of our capabilities as open-source libraries. This lets us co-develop directly with the research community, makes it easy for people to extend the capabilities and make them work for their platform, and allows us to iterate more rapidly and more transparently. However, end-to-end machine learning, particularly in production, is often best supported by a platform that helps you track the process and make it reproducible. So, to make these capabilities easy to use in that end-to-end process, we integrate them into Azure Machine Learning, so they can simply be part of your machine learning lifecycle.

Now let's dive into today's topic: how do we really protect people and the data that represents them? One of the big questions we need to think about is how to protect privacy while using data. You might immediately think that you already have great answers to this.

Of course, the simplest thing we can do is make sure we have excellent access control, so that nobody has unnecessary access to the dataset. The next step beyond that is to anonymize values in the data, so that even the people who can look at the dataset still can't see private information about individuals. These are important steps, but it turns out they're not enough. Even when we use data this way, the output of the computation, whether that's the model we've built or the statistics we've calculated, can still end up revealing private information about individuals.

In machine learning, for example, I could be building a model that helps autocomplete sentences in email. In the training data, there could be some very rare cases, such as a sentence that starts "my social security number is". When I type that as a user, the model might match it to the single example, or the small number of examples, in the data and actually autocomplete my sentence with a social security number from the dataset, which would be a significant privacy violation. So there are cases where models can memorize individual data points, and we definitely need to consider that. But even if you think of that as a problem specific to machine learning, there are also challenges when we look at statistics and aggregate information in general.

So let me jump over to a demo to show you what I mean. In this case, I'm demoing in Azure Databricks, but you could use a Jupyter notebook or your Python environment of choice. What I'm going to do here is take a dataset and demonstrate an attack on it.
In this case, my dataset is for a loan scenario: I'm looking at people's income and trying to use it as a feature to decide whether or not to offer them a loan. What I want to demonstrate is that we can reconstruct a lot of the private information in the underlying dataset just from the aggregate information we were using. Here, I'm going to assume that I know the aggregate distribution of incomes, as represented by this chart. What I want to show is that if I combine this information with a little additional information about two individuals, in this case these values here, I can take an off-the-shelf SAT solver (here I'm using Z3, which you can just pip install) and use it to reconstruct a dataset that is consistent with the published information. If I run the SAT solver, we see that we are able to reconstruct a dataset that is consistent with all of the aggregate information, as well as with what we know about the two individuals.
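The demo uses Z3, but the attack does not depend on a particular solver. Below is a minimal sketch, assuming a hypothetical five-person dataset (incomes in $1,000s on a $5k grid), a published income histogram, sum, and median, and side knowledge of two people's incomes. Instead of a SAT solver it brute-forces every candidate dataset with the standard library, which is only feasible because the example is tiny. All names and numbers are illustrative, not the data from the demo.

```python
from itertools import combinations_with_replacement

# Hypothetical toy data (incomes in $1,000s). The attacker never sees this list.
TRUE_INCOMES = [15, 20, 40, 50, 75]

# Aggregate statistics that were "published".
BUCKETS = [(0, 30), (30, 60), (60, 100)]
published_counts = [sum(lo <= v < hi for v in TRUE_INCOMES) for lo, hi in BUCKETS]
published_sum = sum(TRUE_INCOMES)
published_median = sorted(TRUE_INCOMES)[len(TRUE_INCOMES) // 2]

# Side information: the attacker knows two individuals' incomes.
KNOWN = [20, 75]


def consistent(candidate):
    """Return True if a sorted candidate dataset matches every published fact."""
    counts = [sum(lo <= v < hi for v in candidate) for lo, hi in BUCKETS]
    return (
        counts == published_counts
        and sum(candidate) == published_sum
        and candidate[len(candidate) // 2] == published_median
        and all(candidate.count(k) >= KNOWN.count(k) for k in KNOWN)
    )


# Enumerate every multiset of five incomes on a $5k grid below $100k.
# combinations_with_replacement yields sorted tuples, so index 2 is the median.
domain = range(0, 100, 5)
solutions = [
    c for c in combinations_with_replacement(domain, len(TRUE_INCOMES)) if consistent(c)
]

for s in solutions:
    print(s)
```

On this toy data the search finds four datasets consistent with everything published, the true one among them, and every candidate contains a $40k income, so that person's income is recovered exactly even though the attacker cannot tell which candidate is real.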

Since we know the real data, we can compare the reconstruction against it and see how well our attacker is doing. Looking at the chart here, we can see that for almost 10% of people, we were able to reconstruct their income exactly. If we widen the range a little and look, for example, at incomes within $5,000, we get it right for more than 20% of people. Of course, in this case the attacker wouldn't know which 20% it has correct. In reality, though, you could run this attack many times and start looking at the distribution of possible values, so with more computational power it is still possible to extract more information.

So the question is: what exactly do we do about attacks like these? This is where I think differential privacy is a really exciting technology. Differential privacy starts out as a mathematical definition. If it is implemented correctly, it gives you a statistical guarantee that nobody can detect the contribution of any individual row in the dataset from the output of the computation. That lets us rule out this type of reconstruction attack, because we hide the contribution of each individual and get a much stronger privacy guarantee. Since that definition was published, the research community has developed many different algorithms that implement it in different settings, so that you can apply it.
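For reference, the standard form of the definition is ε-differential privacy. A randomized mechanism M satisfies it if, for every pair of datasets D and D' that differ in a single individual's data, and for every set of possible outputs S:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[M(D') \in S]
$$

A smaller ε forces the two output distributions closer together, so the output reveals less about any one row. This ε is the same budget parameter that appears in the demo.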
Works through through, two mechanisms so, I'm, going to want, to do some aggregate, computation. Whether that statistics, or build, a machine learning model and, the. First thing I need to do is, I. Want. To add noise, and. This. Statistical. Noise it hides. The contribution. Of the individual, so you can't easily, detect. It and. The. The. Idea here is that in. Most cases. Particularly. For this aggregate information we should be able to add a, amount, of noise that's significant.

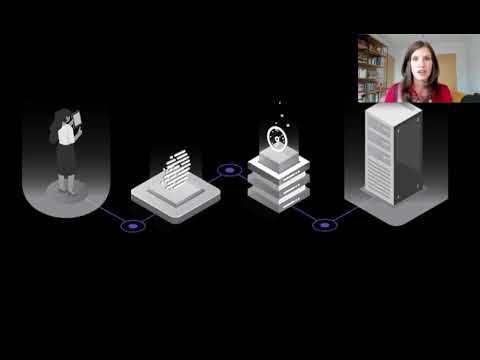

Enough To to get that privacy, guarantee that you can't detect the individual, but, small. Enough that, it's. A small amount of noise on the overall aggregate and so that aggregate, is still useful. For our computation. The. Second piece is that if, you could do many, queries, or the right type of queries, then you, might. Still be able to to. Detect, the, individual. Information and so we, need to calculate a. How much information was revealed in, the computation. And then, subtract. That from an overall privacy. Loss budget, and so. The, combination, of these two. Capabilities. Enables, us to have this much stronger privacy. Guarantee and. So as I mentioned this. Is a you know very active. Area of, research and, there's. Many different algorithms, that have been developed by the research community to. Implement this concept, in, practice. Depending on your. Particular setting, and so we. Wanted, to make. This. Easy. For people to use without it being a. Without. It being you've. Been required, to be an expert, in differential, privacy because I think it is such. A promising, capability. But on, the other hand it, involves, quite. A range. Of algorithms, to implement and so we. Partnered, with researchers. At. Harvard, to develop. An open-source. Platform. That. Enables you to easily. Put. A differential. Privacy in, your machine learning, and data. Analytics. Applications, and so we've. Developed this platform, and, it. Sits between the, user. And the query you want to do and your data set or your data store and so. When, you query. Through. The system we will, add. The the correct amount of noise based on the query and your privacy budget, and then, subtract. The information, from the budget store and allow you to track, the budget and so then we'll, give you back that query. Your. Aggregate results but with, that differentially private noise added so now you have the the privacy guarantee and so you can go. Forward and, use, that so. 
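To make the two mechanisms concrete, here is a minimal sketch in plain Python, assuming the classic Laplace mechanism for counting queries and simple additive (sequential) composition for the budget. The class and function names are hypothetical; this is not the API of the open-source platform described above, which implements more sophisticated algorithms and accounting.

```python
import random


class PrivacyBudget:
    """Tracks total epsilon spent, using basic sequential composition (epsilons add up)."""

    def __init__(self, total_epsilon):
        self.total = total_epsilon
        self.spent = 0.0

    def charge(self, epsilon):
        # Refuse any query that would push cumulative privacy loss past the budget.
        # The tiny tolerance absorbs floating-point rounding in the running sum.
        if self.spent + epsilon > self.total + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with rate 1/scale is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(rows, predicate, epsilon, budget, rng):
    """Counting query answered with the Laplace mechanism.

    Adding or removing one row changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon gives epsilon-differential privacy.
    """
    budget.charge(epsilon)
    true_count = sum(1 for row in rows if predicate(row))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def private_histogram(values, bins, epsilon, budget, rng):
    """Noisy histogram: each row lands in exactly one bin, so one charge covers all bins."""
    budget.charge(epsilon)
    counts = [sum(1 for v in values if lo <= v < hi) for lo, hi in bins]
    return [c + laplace_noise(1.0 / epsilon, rng) for c in counts]


# Usage with hypothetical incomes (in $1,000s) and a total budget of epsilon = 1.0.
rng = random.Random(7)
budget = PrivacyBudget(total_epsilon=1.0)
incomes = [15, 20, 40, 50, 75]
print(private_histogram(incomes, [(0, 30), (30, 60), (60, 100)], 0.5, budget, rng))
print(private_count(incomes, lambda v: v >= 30, 0.5, budget, rng))
# A third query at epsilon = 0.5 would now raise RuntimeError: the budget is spent.
```

Raising epsilon shrinks the noise scale (1/ε) and gives more accurate answers, but it also uses up the budget faster; that is the privacy-accuracy trade-off the demo explores next.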
So let's go back to the demo to see what this looks like in practice. Here, I'm going to use our open-source system to add differentially private noise to that income distribution. You can see the epsilon here, which is the budget I'm giving it, and now we've added noise to our histogram. Let's check the privacy guarantee. I'm going to redo the same SAT solver attack, but this time we see a very different result: unsatisfiable. This is great; it means we have successfully protected privacy, at least against this type of attack. The great thing about differential privacy, though, is that there are mathematical proofs behind it, so we also know that, if it's implemented correctly, we're protecting privacy against a variety of other attacks, not just the specific one I'm demonstrating here.

However, being able to protect privacy is not enough on its own, because we could also do that by simply not using the data, or by not having the data at all. So the second thing we need to investigate is how well we're actually doing in terms of that noise. Let's run this and compare. Here's the comparison of the non-private and private results. Overall, we're doing pretty well; however, this is a small dataset, so there are particular cases where you can clearly see the noise that's been added. That might be fine for my problem if I can tolerate a fair amount of noise. Alternatively, I might want to give the query more budget so that less noise is added, or use a larger dataset or different aggregate functions, so that I sit at a different point on the privacy-accuracy trade-off curve. So there are a lot of options here; there isn't a fixed amount of noise that has to be added.

Next, let's look at one of the ways we can use differential privacy in machine learning. What I'm demonstrating here is that with our open-source system, you can generate synthetic data. In this case, we're using differential privacy (here we're giving it the budget) to create a dataset that lines up with the overall trends and patterns we want to see in the data at the aggregate level, but hides the contribution of each individual, as we discussed. So I can use the system to generate my dataset and then take it and do machine learning. However, this isn't the only option. I could instead use differential privacy directly in the machine learning optimizer.
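The talk doesn't say which algorithm runs inside the optimizer. A widely used choice is DP-SGD, which clips each example's gradient to a fixed norm and adds Gaussian noise to the summed gradient before every update. The sketch below assumes a tiny logistic-regression model trained with full-batch gradient descent in pure Python; the function names, parameters, and toy data are hypothetical, and it omits the privacy accounting (for example, a moments or Rényi DP accountant) that converts the noise multiplier and number of steps into an epsilon.

```python
import math
import random


def dp_gradient_descent(data, epochs, lr, clip_norm, noise_multiplier, seed=0):
    """Logistic regression trained with DP-SGD-style updates (full batch).

    data: list of (features, label) pairs, label in {0, 1}.
    Each step clips every per-example gradient to L2 norm <= clip_norm, sums the
    clipped gradients, adds Gaussian noise with std noise_multiplier * clip_norm,
    and then takes an averaged gradient step.
    """
    rng = random.Random(seed)
    dim = len(data[0][0])
    w = [0.0] * dim
    for _ in range(epochs):
        total = [0.0] * dim
        for x, y in data:
            z = sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            grad = [(p - y) * xi for xi in x]
            norm = math.sqrt(sum(g * g for g in grad))
            # Clipping bounds how much any single example can move the model.
            scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
            total = [t + g * scale for t, g in zip(total, grad)]
        # Noise calibrated to the clipping bound hides any one example's contribution.
        noisy = [t + rng.gauss(0.0, noise_multiplier * clip_norm) for t in total]
        w = [wi - lr * n / len(data) for wi, n in zip(w, noisy)]
    return w


def predict(w, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0


# Hypothetical toy data: [bias, feature], labeled 1 when the feature is positive.
toy = [([1.0, f], 1 if f > 0 else 0) for f in (-2, -1.5, -1, -0.5, 0.5, 1, 1.5, 2)]
weights = dp_gradient_descent(toy, epochs=100, lr=0.5, clip_norm=1.0, noise_multiplier=1.1)
print(weights)
```

Setting `noise_multiplier` to 0 turns this back into ordinary clipped gradient descent, which is a simple way to measure how much accuracy the noise costs, the same private-versus-non-private comparison made in the demo.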
In that case, as I pull in the training data, I'm calculating the budget I'm spending and feeding that into the model. So there are multiple options for how you might want to use differential privacy in machine learning, but the first is a bit more straightforward, because we can just add it on top of the aggregate results.

With that, I do want to mention that this project is part of a larger initiative. This is really just the first system in that initiative, where what we want to do is build the software in open source and use that both to let more people adopt it and to advance the state of the art. The more places where we can collaborate, really try it, figure out where it doesn't work, and then advance the research and iterate, the farther we can take this technology, and open source really enables us to do that. The other thing is that because these are difficult algorithms to implement, it's great to have them in the open: experts can inspect them, we can verify them, and we can develop tools that verify them. So it's a really great place for us to create a community around this technology.

The other thing I want to mention is that when many people see this, they immediately think about the data they already have and ask why they would want to add noise, which seems like a strictly worse experience. You might still want to do it, because privacy is important, and the privacy guarantee may be worth a little bit of noise to you. In other cases, because we're changing the trade-off curve, you can use data that you otherwise might not have been able to use. If the baseline requirement for using the data at all is a privacy guarantee, then more data becomes available that we can now happily use for the good of society and the good of people, without risking privacy. We really believe in this initiative as a way to use more data on the problems that are important to people and society, without having to make a hard trade-off between using the data to solve those problems and protecting people's privacy.

With that, I'm going to switch and talk about another family of technologies that can work in combination with differential privacy, called confidential machine learning. As I mentioned, it's a family of technologies, largely united around the theme of confidentiality: enabling you to do machine learning, or computation in general, in a way that is confidential. However, they have different trade-offs and can be used in different ways.

The first, and the easiest to understand, is technology that keeps data confidential from the data scientists. You can imagine cases where I want to design my model and write its code, but I don't want the data scientists to be able to look directly at the data. I want them to be able to train a model on that data without being able to inspect it directly. That's the simplest type of confidential computing, and we have that capability coming, which will give you the guarantee that the data scientists can't see the data.

If you want to go a step further, you can do confidential computing, or confidential machine learning, using encryption that is powered by hardware. In this case, there's a hardware unit called a trusted execution environment that runs inside the CPU, and the data and computation stay encrypted everywhere outside it. This really completes the encryption lifecycle. Today, data is encrypted at rest and encrypted over the network, but when it gets inside the CPU, you have to decrypt it, so the data is exposed to the operating system and the CPU, and you have to trust them. With this technology, you can instead keep the data and the computation encrypted: only inside the trusted execution environment is the computation decrypted and executed, and the results are re-encrypted before they come out. So you don't have to trust the operating system, and you don't have to trust the cloud. This enables us to build machine learning models on encrypted data and produce an encrypted model. The same applies to inferencing.
I can host a model inside that enclave, send it encrypted inferencing requests, and get the response back, so I have a much smaller trust boundary to think about. The other interesting thing about this technology is that it enables multi-party scenarios: each of us only needs to trust the hardware unit, and we can collaborate on building a machine learning model without exposing our data to each other and without having to trust each other. So there are a lot of interesting things we can do with this hardware-based technology. What's great about it is that, because the computation runs unencrypted inside the enclave, you can run a large amount of computation and many different types of computation, so it works in a lot of cases. However, you do have to trust the hardware unit, and you need special hardware.

In some cases, we want to go a step further. Perhaps you're a steward of someone else's data and have a mandate to minimize the number of times it's decrypted, so you want ways to do the computation while leaving the data completely encrypted. That's where homomorphic encryption comes in. The idea of homomorphic encryption is that the data stays completely encrypted, and we develop math that lets us operate directly on the encrypted values. For certain types of computation, encrypted 1 plus encrypted 2 results in encrypted 3. This lets us perform the computation without decrypting anything, so you don't need to trust the hardware the way you did with the previous technology.
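Microsoft SEAL implements lattice-based schemes such as BFV and CKKS, which are too involved for a few lines of code. To show the "encrypted 1 plus encrypted 2 equals encrypted 3" property, the sketch below swaps in the Paillier cryptosystem, a much simpler scheme that is additively homomorphic. It uses tiny hard-coded primes, so it is completely insecure and purely illustrative; the function names and the scoring example are hypothetical and unrelated to SEAL's API. The same additive property also lets a server compute a linear model's score on encrypted features, which is similar in spirit to encrypted inferencing, although the demo itself uses SEAL.

```python
import math
import random

# Toy Paillier keypair from tiny primes (INSECURE, illustration only).
P, Q = 1009, 1013
N = P * Q                    # public key
N_SQ = N * N
G = N + 1                    # standard simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)   # private: lcm(P-1, Q-1)
MU = pow(LAM, -1, N)         # private; valid because G = N + 1 (Python 3.8+)


def encrypt(m, rng):
    """Encrypt an integer 0 <= m < N using only the public values N and G."""
    while True:
        r = rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c):
    """Decrypt with the private values LAM and MU."""
    u = pow(c, LAM, N_SQ)
    return ((u - 1) // N) * MU % N


def add_encrypted(c1, c2):
    # Multiplying ciphertexts adds the underlying plaintexts.
    return (c1 * c2) % N_SQ


def scale_encrypted(c, k):
    # Raising a ciphertext to a plaintext power k multiplies the plaintext by k.
    return pow(c, k, N_SQ)


rng = random.Random(42)
c3 = add_encrypted(encrypt(1, rng), encrypt(2, rng))
print(decrypt(c3))  # 3

# Encrypted linear scoring: the client encrypts its features; the server holds
# plaintext integer weights and computes the score without seeing the features.
features = [3, 5, 2]   # hypothetical applicant features (non-negative integers)
weights = [4, 1, 7]    # hypothetical non-negative integer model weights
bias = 10
enc_features = [encrypt(x, rng) for x in features]
enc_score = encrypt(bias, rng)
for c, w in zip(enc_features, weights):
    enc_score = add_encrypted(enc_score, scale_encrypted(c, w))
print(decrypt(enc_score))  # 4*3 + 1*5 + 7*2 + 10 = 41
```

Only the client, which holds `LAM` and `MU`, can read the score; the server sees ciphertexts throughout. Paillier supports only additions and plaintext multiplications, which is one reason schemes like those in SEAL, and careful choices of model type, are needed for real encrypted inference.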
We have an open-source library called Microsoft SEAL that contains homomorphic encryption schemes, so it can be used to implement a variety of homomorphic encryption scenarios. Let me jump over and demonstrate how we can use it for machine learning.

First, I'll set this up. I've trained a model, and it's sitting in my model registry in Azure Machine Learning, so I'm going to download my model from the registry. Then I'm going to set it up in the cloud for inferencing, so we can host it in the cloud and send inferencing requests to it, but I want it to do this using homomorphic encryption. The difference is in the scoring file I create to run inference with my model: in this case, I'm going to use SEAL, building my encrypted inferencing server into the scoring file so that I can do my inferencing with homomorphic encryption.

Now I just use Azure Machine Learning to deploy my model to Azure Container Instances, and the model is set up in the cloud, so we can move forward and start calling it. Let's test the service. What I've done here is generate the keys: I have my public and private keys, and I'm going to keep the private key here and set up the public key in the cloud. Now let's send a call to it. These are the features I want to send, because I want to know whether this person would be accepted for a loan; however, I want to send them to the cloud encrypted. So I send them, and this is the encrypted value that goes to the cloud. Now let's look at the response. The response we received is also encrypted, so it's hard for the cloud, an attacker, or anyone else to know what they're seeing. If we decrypt the result, we can see the information: we get the prediction, and in this case this individual would be denied a loan.

This is great. It doesn't work for all model types, but it does let someone use a model without sharing their data with the model or with the platform that's hosting it, so this is really a higher level of confidentiality.

With that, I'm going to wrap up. Responsible AI is a big topic, and we have a lot of great resources, both on what we talked about today, privacy and confidentiality, and on some of the other topics I mentioned briefly. You can go to the Responsible AI Resource Center, where we have a lot of different practices to help your organization get started with responsible AI development, as well as links to all of the tools and capabilities I mentioned. These tools are either built into Azure Machine Learning or work on top of it, so you can go directly to AML and learn more about how to use our responsible AI capabilities there. If you're interested in getting involved in OpenDP or using our open-source differential privacy system, you can check out the OpenDP community or join us on GitHub. We would love to have collaborators and contributions for the system. The same goes for Microsoft SEAL, which is available on GitHub, and we'd love to have collaborators and contributors there as well. I hope this was a great session for you, that you use these tools, and that we see you on GitHub. Thank you.

2020-07-22 13:01