Quantum Noir Session 4: Quantum Technologies

OK, everyone, welcome back for the last talk of the first full day. We have Mikhail Lukin, a professor of physics and co-founder of QuEra Computing. He will tell us about exploring-- oh, tell us about the QuEra device. Well, not quite, but it's OK.

So, yeah. So I would like to start out by thanking Bill and the organizers for putting together this incredible meeting and also for the invitation. So I will be talking about our efforts to actually build large-scale quantum computers.

And the key challenge in doing that, as was realized already some time ago, is that it's actually hard to scale up the systems of qubits and, in particular, perform more and more operations without adding errors. So in other words, right now, there are systems in our lab and also at QuEra which actually now have hundreds and now thousands of physical qubits. And with them, you can make a few thousand operations. But the problem is that as this number of operations grows, eventually what happens is that the errors that you make during these operations basically start to play a dominant role.

And as a result, this basically limits the usefulness of the computation. So this has been recognized long ago, and whereas now state-of-the-art systems can make maybe a couple of hundred or maybe 1,000 steps, most useful algorithms will eventually require many billions of operations. So there is a big gap. And it is fair to say that at the moment it's really not clear if any physical system can ever be created which can make billions of operations without a single error.
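To put rough numbers on this gap, here is a minimal Python sketch. It assumes every operation fails independently with the same probability, which is a simplification, and the error rate of 1e-3 is purely illustrative and not a figure from the talk. The function names are hypothetical.

```python
import math

def success_probability(error_per_op: float, num_ops: int) -> float:
    """Chance that num_ops independent operations all succeed."""
    return (1.0 - error_per_op) ** num_ops

def max_error_for(num_ops: int, target_success: float = 0.5) -> float:
    """Largest per-operation error that still reaches target_success overall."""
    # Solve (1 - p)^N = target for p; expm1 keeps precision for tiny p.
    return -math.expm1(math.log(target_success) / num_ops)

p = 1e-3  # assumed, illustrative per-operation error rate
print(success_probability(p, 1_000))          # about a third of runs survive
print(success_probability(p, 1_000_000_000))  # effectively zero
print(max_error_for(1_000_000_000))           # error rate needed for a billion steps
```

Under these assumptions, a billion-step algorithm would need a per-operation error below roughly one part in a billion, which is why redundancy is needed rather than better physical qubits alone.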

So this is a fundamental issue, and it has been recognized very early on, over 25 years ago, where people immediately started to think is, can we use some kind of redundancy to actually detect and correct these errors? And this redundancy is the way how classical error correcting codes work. So you basically make a copy of one state. If you have just one logical zero you want to encode, you can encode it in three, for example, physical qubits. And then if one of them flips, you could use majority rule to correct it. So I should say at the time, it's actually almost 30 years ago by now, it was really not clear if you can actually ever do, even in principle, something at a quantum level, which would correct the errors.
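The classical three-bit example just described can be sketched in a few lines of Python. This is only the classical repetition code with majority voting, not a quantum code, and the helper names are hypothetical.

```python
def encode(bit: int) -> list[int]:
    """Copy one logical bit into three physical bits."""
    return [bit, bit, bit]

def flip(codeword: list[int], index: int) -> list[int]:
    """Return a copy of the codeword with one physical bit flipped."""
    corrupted = list(codeword)
    corrupted[index] ^= 1
    return corrupted

def decode(codeword: list[int]) -> int:
    """Majority rule: the value held by at least two of the three bits wins."""
    return 1 if sum(codeword) >= 2 else 0

noisy = flip(encode(0), 1)   # a single flip on the middle bit
print(noisy, decode(noisy))  # the logical zero is still recovered
```

A single flip is corrected, but two flips on the same codeword would outvote the original value, so the code only helps when flips are rare.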

And the reasons are that quantum information cannot be copied. Moreover, to detect the error, you have to make a measurement, and normally measurement destroys quantum information. But nevertheless, it turns out that it actually is possible to do quantum error correction.

And the way you do it is you basically use entanglement to delocalize quantum information. So basically, if you want to encode one bit of information, what you do is you spread it around among many physical qubits, and that way you can encode the so-called logical qubit. So I must say, even theoretically, the fact that you can actually do it, even in principle, is kind of amazing. But by now, it's very well established theoretically that it is possible.

Realizing it in practice is, however, actually quite hard. And so basically, at least at the theory level, what you do after spreading this information is you measure certain quantities, which are called stabilizers, which detect if an error occurred or not. And because this quantum information is delocalized, it becomes hard to accidentally manipulate it, but on the other hand, it actually also becomes hard to manipulate it deterministically.

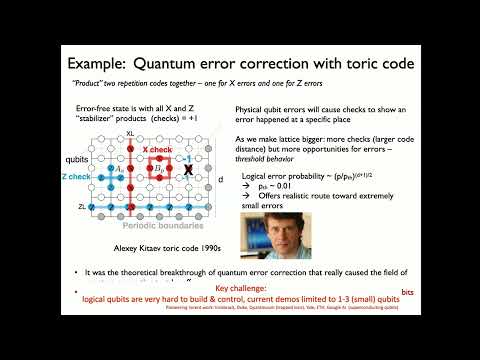

And that's actually a big challenge in the field. To kind of illustrate this, and maybe it's a little bit too technical, this is actually maybe by now the most famous quantum error correcting code, the so-called toric code.

And in this toric code, you basically encode logical qubits in a grid of physical qubits. And then what you do to check if you made an error, you measure the so-called parity check operation. So to begin with, this toric code is actually a product of two classical error correction codes: one detects X errors, another Z errors. But then you use these kinds of checks, and these checks are products of four operators which can detect if there is a phase flip or if there is a bit flip. So, for example, if one of these bits flipped here, then immediately, by measuring the checks, you will see, oh, there are these so-called stabilizers. They should be plus 1, but they are minus 1.

It's obvious that the error occurred here. And then you can just go ahead and correct. Remarkably, you can do it without destroying quantum information without revealing what type of state that you encode.
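The way a single flip shows up in the neighbouring checks can be sketched with purely classical bookkeeping. The layout below is an assumption made for illustration: qubits sit on the edges of an L-by-L grid with periodic boundaries, each check is the parity of the four edges around one square, and only bit flips are tracked. It is not a quantum simulation and the names are hypothetical.

```python
L = 4  # assumed lattice size, for illustration only

def plaquette_edges(r, c, size=L):
    """The four edge qubits around the square at row r, column c (periodic)."""
    return [("h", r, c), ("h", (r + 1) % size, c),
            ("v", r, c), ("v", r, (c + 1) % size)]

def syndrome(flipped, size=L):
    """Map each check to +1 (even number of flipped edges) or -1 (odd)."""
    result = {}
    for r in range(size):
        for c in range(size):
            odd = sum(e in flipped for e in plaquette_edges(r, c, size)) % 2
            result[(r, c)] = -1 if odd else +1
    return result

def violated(flipped, size=L):
    """Checks that read -1 and therefore flag an error nearby."""
    return sorted(p for p, value in syndrome(flipped, size).items() if value == -1)

print(violated(set()))            # no error: every check reads +1
print(violated({("h", 1, 2)}))    # one flip: exactly the two adjacent checks read -1
```

The checks only report parities of flips, so the readout locates the error without saying anything about which logical state was stored.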

And as we make this lattice bigger, you have more checks. So you can say that the code has a larger distance, right? So you basically have more opportunities to detect an error. But of course, there are also more opportunities to create an error. And so, as a result, whether this error correcting code works or not has a kind of threshold behavior. So basically, it turns out that if your individual physical error is smaller than a certain threshold, and this threshold is typically around 1%, then by making this code larger, you can actually really beat the error, and you can make it as small as you like.
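The threshold behavior can be illustrated with a commonly used rule of thumb, in which the logical error falls or rises as a power of the ratio between the physical error and the threshold. This formula is a heuristic brought in here for illustration, not something stated in the talk; the prefactor of 0.1 is an arbitrary assumption, and only the 1% threshold comes from the text.

```python
def logical_error(p: float, distance: int,
                  p_threshold: float = 0.01, prefactor: float = 0.1) -> float:
    """Heuristic scaling: prefactor * (p / p_threshold) ** ((distance + 1) // 2)."""
    return prefactor * (p / p_threshold) ** ((distance + 1) // 2)

for p in (0.001, 0.02):  # one value below and one above the assumed threshold
    errors = [logical_error(p, d) for d in (3, 5, 7)]
    print(p, errors)
```

Below the threshold each increase in distance suppresses the logical error further, while above it a bigger code only makes things worse.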

So this was the toric or surface code invented by Alexey Kitaev. But as you see, actually building even one of these logical qubits is a complex undertaking. And even though we know in the field that we will eventually need to switch from performing algorithms with physical qubits to performing them with logical qubits, up to now, this field is really in its infancy. So basically, because logical qubits are very hard to build and control, the current demonstrations, at least up to, I guess, late last year, were limited to a very small number, one, two or three, of kind of very small qubits. OK.

So in what follows, I will tell you how we are actually addressing this important frontier in the field. And we will be using cold neutral atoms to actually build both physical and logical qubits. So these cold atoms will be isolated and kept in a vacuum. And there are a few key advantages. One of them is that they naturally have very good, I'd say some of the best, coherence properties that you can think of. It's also easy to create large numbers of neutral atoms, and you can also manipulate them with light, which will be very important.

But neutral atoms, at least in the gas phase, also interact weakly. So if you want to do logic, you want to make them switch state dependent on each other, so it's actually not obvious how to do it. And also, neutral atoms are hard to control individually. So for example, in this room, there are a lot of atoms and molecules. So if you could really keep track of each of them, you could build a very big quantum computer, but it's obviously not completely trivial how to do it.

Not completely obvious. So motivated by these considerations, some time ago, almost a decade ago now, we actually started building here kind of a new approach to this scalable quantum systems. And in this approach, what we do is we utilize so-called optical tweezers, so tightly focused beams of light. And we focus the beam so tightly-- I'm sorry each of these beams of light kind of acts as a kind of a-- as a trap.

So it basically attracts the atom close to the point of highest intensity. And we focus these beams so tightly that each of these traps can host at most one atom. So if there are two atoms, they basically don't fit. They collide and at least one of them is ejected. And so in the way we build these systems, we don't start with just one of these traps at a time. We now start with hundreds, actually thousands of traps and focus them tightly.

And then we try to create these arrays of atoms. But what happens is that there is an entropy in the system. So in each given attempt, what will happen is that we will probabilistically load one trap or another, but not all of them simultaneously. And so to get rid of this entropy, what we literally do is we take a picture of these atoms, figure out which traps are full and which are empty, and then basically take the atoms which are in the full traps and rearrange them in any desired configuration we want. And so that way we can actually build arrays now which have more than a thousand atoms. And it's a starting point to really start building quantum computing systems.
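The picture-then-rearrange loop can be sketched for a single row of traps. Everything here is an illustrative assumption: the 50% loading probability, the one-dimensional layout, and the simple rule of sliding the leftmost loaded atoms into the leftmost target sites. It is not the sorting procedure used in the actual experiments, and the function names are hypothetical.

```python
import random

def load_traps(num_traps: int, fill_probability: float = 0.5, seed: int = 0) -> list[bool]:
    """Simulate the 'picture': True where a trap happened to catch an atom."""
    rng = random.Random(seed)
    return [rng.random() < fill_probability for _ in range(num_traps)]

def plan_moves(occupied: list[bool], num_targets: int) -> list[tuple[int, int]]:
    """Pair the leftmost loaded traps with target sites 0..num_targets-1, in order."""
    sources = [i for i, full in enumerate(occupied) if full]
    if len(sources) < num_targets:
        raise ValueError("not enough loaded atoms for the requested array")
    return [(src, tgt) for tgt, src in enumerate(sources[:num_targets]) if src != tgt]

def apply_moves(occupied: list[bool], moves: list[tuple[int, int]]) -> list[bool]:
    """Carry out the planned moves; order-preserving moves never collide."""
    result = list(occupied)
    for src, tgt in moves:
        result[src], result[tgt] = False, True
    return result

picture = [False, True, True, False, True, False]
moves = plan_moves(picture, 3)
print(moves)
print(apply_moves(picture, moves))
```

Because the atoms keep their left-to-right order, no two moves cross, which is one simple way to avoid collisions in a sketch like this.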

One thing which should be mentioned here is that in these traps, the atoms are separated by typically a few micrometers. And this is important because it allows us to, for example, individually address and control these atoms optically. But of course, at these distances, the atoms don't talk to each other. They don't interact at all. So to make them interact what we do, we excite them into the Rydberg states and increase effectively the size of the electron orbit by orders of magnitude. And that way, we enable strong and controlled interaction.

And so this work is actually a collaboration between my group, the group of Markus Greiner here at Harvard and a group of [INAUDIBLE] at MIT. So just a few more words about this Rydberg excitation, because it's a very important property of this approach. So we like Rydberg atoms because they combine two special features. They have relatively long lifetimes, kind of 100 microseconds, and strong interactions. So the atoms in these Rydberg states interact very strongly. For example, the van der Waals interaction is actually 14 orders of magnitude larger for n equals 100 as opposed to n equals 1, compared to the ground state.

And 14 orders of magnitude is a large number, and we can really put it to good use. So in particular, one approach, which we will be using is something which we call the Rydberg blockade. So in this Rydberg blockade, what you do, you basically excite the atoms into the Rydberg states with the finely tuned resonant pulses of light. And so if these atoms are sitting very far away, so then this excitation would just-- will be independent.

The atoms would undergo independent Rabi oscillations. But if these atoms come close together, then eventually this interaction will take over, and what will happen is that if the atoms are closer than a certain distance, you will be able to excite one atom or another, but never both. So that's the essence of this Rydberg blockade. And essentially, what it means is that simultaneous resonant excitation of atoms is blocked at distances smaller than the Rydberg blockade radius.
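The contrast between the two regimes can be written down with textbook two-atom formulas. The sketch assumes units with hbar = 1, a van der Waals interaction C6 / R^6, and the idealized limits of either no interaction or a perfect blockade; the numerical values are arbitrary and the function names are hypothetical.

```python
import math

def blockade_radius(c6: float, rabi_frequency: float) -> float:
    """Distance at which the interaction C6 / R^6 equals the Rabi frequency."""
    return (c6 / rabi_frequency) ** (1.0 / 6.0)

def double_excitation_independent(rabi_frequency: float, t: float) -> float:
    """Far-apart atoms: each one Rabi-flops on its own, so both can be excited."""
    return math.sin(rabi_frequency * t / 2.0) ** 4

def single_excitation_blockaded(rabi_frequency: float, t: float) -> float:
    """Perfect blockade: the pair shares one excitation, oscillating sqrt(2) faster."""
    return math.sin(math.sqrt(2.0) * rabi_frequency * t / 2.0) ** 2

omega = 1.0  # arbitrary units
print(blockade_radius(64.0, omega))
print(double_excitation_independent(omega, math.pi / omega))
print(single_excitation_blockaded(omega, math.pi / (math.sqrt(2.0) * omega)))
```

In the blockaded limit the probability of exciting both atoms is taken to be exactly zero, which is the "digital" on-or-off character of the interaction.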

And it turns out it is actually a very good mechanism to entangle atoms and do quantum logic because it's very robust. It kind of makes the interaction digital. It's either on or off, and if it's on, it's kind of basically almost infinite for-- in a leading order approximation. In particular, this approach is insensitive to things like position and the motion of the atoms.

So combining all of this, what I will be showing you in the following will involve us basically building some kind of quantum registers initially, then subjecting atoms to various types of electromagnetic pulses. Sometimes we will excite atoms in the Rydberg state. Sometimes we will just change the spin state, rotate the spin state.

Then eventually what we'll do, we'll take another picture of an atom but in this case, we'll do it in a state dependent way. So it will be a projective readout. So that's the approach. And so basically by now, this is actually an exploding field. So there are actually a couple of hundred experiments under construction around the world in both academia and industry. There are at least eight companies trying to commercialize this technology.

And just to point out that there is a wide variety of different approaches: some exotic atoms, for example, alkaline-earth atoms, are a very promising approach. People have started using this system in combination with something which is called optical lattices, and molecules are being explored, including here at Harvard. And so these are some examples where some of these atom arrays start looking very fancy. This is an example of a two-species atom array in Hannes Bernien's lab in Chicago. And this actually is a picture of an array containing over 5,000 atoms.

These are cesium atoms in the lab of Manuel Endres at Caltech. So basically, this is a very exciting frontier, and it actually touches on many different directions, from quantum computing, which I will focus my talk on, to quantum many-body dynamics and quantum metrology, and it's complementary to other exciting avenues which are being explored, such as, for example, optical lattices that you might have heard about. So in our lab right now, we are operating already a third generation of our atom array, and it's enabled by this device, which is called a spatial light modulator.

It's actually a very similar device to the one which is used in this projector to project the slides. So it's basically a computer generated hologram where, by just programming it, we can deflect the incoming light by an angle, and we can create some very fancy patterns, for example, a pattern like this, which then, if you project it into this vacuum chamber, results in an array of over 1,000, or it can be many thousands, of traps. So this SLM is an amazing device. It has only one drawback.

It's actually slow. So to dynamically move atoms around, which is actually very important, as you will see in a second, what we do is we have a second channel where we have these two devices, which are called acousto-optic deflectors. So acousto-optic deflector is device where we basically send a sound wave and can deflect the light beam by a certain angle.

So for example, like some of these acousto-optic deflectors are used in supermarket barcode scanners. And we-- actually, we love this device. So in fact, we use them in pairs to move rows and columns of atoms independently. But actually we have now several sets of them, and you'll see they're used kind of for different purposes in our experiments. And so it's all of that is actually computer controlled. And as I already mentioned, we are now kind of operating a third generation in our lab.

So this third generation basically has a larger system. It has higher fidelities and it has much more advanced control techniques, which I will describe in a second. So with that, it has been actually a lot of fun over the last few years. So we have studied a lot of different directions. We did a lot of quantum simulations where we create new states of matter, study their dynamics, explore topological physics. Actually, there are some applications to combinatorial optimization.

So as already mentioned, a system like this has now been available on the cloud for nearly one and a half years from our startup company, QuEra Computing, across the river here. But what I will do today is really focus on this digital processing and on addressing this quantum error correction frontier which I outlined, which is actually essential for scaling up. So one of the inventions, now dating back nearly two years, was something which we now call reconfigurable architecture. So usually if you make a computer chip, what you do is you first design it, figure out which transistors should be connected to each other. And then you go and use optical lithography, techniques not unlike the ones which we use, to create the chip. So in our case, quite remarkably, we can actually have a situation where this connectivity of a chip is kind of like a living organism, where we can change this connectivity on the fly.

And the key idea is that if you store the atom in the right type of state in particular, this kind of spin state, if you store a qubit in this spin state, so this qubit can live for a long time, it can live for seconds. But most importantly, this qubit can be moved around physically by just steering the laser beam, by steering the tweezer, which holds this qubit. So what it means is that we can create a situation where we first started with some configuration, some qubits are talking to maybe their neighbors-- --and then what we do, we actually perform some logic gates.

But then we stop this operation, change the connectivity, and then again perform some logic gate. And this can continue. So basically, this approach offers several unique opportunities. So first off, now by moving qubits around, we clearly can have non-local connectivity. But more importantly, by combining that with holographic optical techniques, we actually can control such a system very efficiently with just very few control knobs. And I will illustrate in a second why that is the case.
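One way to picture the "few control knobs" point is with the crossed deflectors described earlier: a set of row tones and a set of column tones produce a tweezer at every row and column combination, so changing a single tone moves a whole line of atoms at once. The sketch below treats tones directly as positions in arbitrary units, which is a simplifying assumption, and the function names are hypothetical.

```python
def tweezer_positions(row_tones: list[float], column_tones: list[float]) -> list[tuple[float, float]]:
    """Every (column, row) combination of tones yields one tweezer spot."""
    return [(x, y) for y in row_tones for x in column_tones]

def shift_row(row_tones: list[float], row_index: int, delta: float) -> list[float]:
    """Turning one knob: retune a single row tone, moving that whole row."""
    shifted = list(row_tones)
    shifted[row_index] += delta
    return shifted

rows = [0.0, 5.0]
columns = [0.0, 5.0, 10.0]
before = tweezer_positions(rows, columns)
after = tweezer_positions(shift_row(rows, 1, 2.5), columns)
print(before)
print(after)  # the three atoms in the second row moved together
```

Six tweezers are steered here with five numbers, and the saving grows with array size since an R-by-C grid needs only R + C tones.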

So as one example, and it's already also more than two years old, we implemented this toric code, which I described, but we implemented it on a torus. So you say, well, wait a second, you have a two-dimensional system. How can you create this toric code on the torus? So in this toric code, you have these data qubits, which store the information, and in addition, you have these ancillas, which actually measure these checks, measure whether an error has occurred. And this is a movie showing how we can make this toric code on a torus. What you see is that these ancillas are being moved, and then we first do some local checks. And then there is this one long move which basically goes all the way across the system.

So what this long move does is basically complete this torus, right? So basically, these ancillas can really measure things non-locally. The details here don't matter. But, remarkably, this circuit, involving many physical qubits and actually encoding two logical qubits, it turns out (one of them corresponds to basically the product of all individual Paulis going one way, another one corresponds to the product of Paulis going another way), is encoded by just two control knobs.

One of them basically moves these ancilla qubits vertically and another one does horizontal moves. So OK. In the last year, we actually worked quite hard to upgrade the system along several directions. So first off, we improved the error rates. We improved fidelities, in particular of two-qubit operations, which are now on par with leading state-of-the-art fidelities across several platforms. We also learned how to individually address atoms in the array and also do it in parallel.

And the key is, again, using these acousto-optic deflectors to just illuminate a pattern of qubits and rotate them in a certain way. And then also, along with several other labs in the community, we actually learned how to measure qubits in the middle of a computation. It's actually important for quantum error correction to, for example, do syndrome measurements. And what is done here is that basically we have a kind of zoned architecture, which I will explain in detail in a moment. So in one zone we store qubits, and in another we do the entangling gates. And then if we want to measure some qubits, we just take them and move them to a separate zone, called the measurement zone, where we can really image them optically and determine their state.

So all of this together can be put together into something which we think about as a kind of first logical quantum processor. So it has a storage zone, this entangling zone, and the readout zone. So actually, in some way, if you think about it, it really starts looking like a real computer. And in fact, in some way, this approach is reminiscent of something which is called von Neumann, and even something which is called Harvard, classical computer architecture.

So the key idea, and the reason that this approach works, is, as I already mentioned, efficient control and, in particular, efficient control now over logical rather than physical qubits. So to illustrate this, I will consider the simplest possible example, and this is an example of the so-called surface code. So the idea is, it actually doesn't matter exactly which code you use. But remember, in these logical qubits, the information is delocalized over physical qubits. And if you want, for example, to make a logic gate, a two-qubit gate between these delocalized pieces of information, you need to somehow make sure that each qubit on the right side knows what the state of the qubit is on the left side. Somehow they all have to talk together, and usually enabling that is very challenging.

And in particular, if you do it using conventional approaches, what you have to do is basically make many, many rounds of checks of whether an error has occurred in order to do it fault tolerantly, right? In order to really suppress the error. And in particular, the number of rounds scales as what's called the code distance, which is a kind of linear size of this error correcting code. But it turns out that there is another approach, which has been known for many years but was a little bit neglected. And this approach involves so-called transversal operations. So in this approach, what you can do is basically just pick up this qubit and bring it on top of the other one, and then you can just make a parallel set of gates transversally. That's the origin of the word transversal.

Basically, you apply gates on each pair of qubits in the two blocks and then just move them away. And so it actually turns out that this so-called transversal CNOT is inherently fault tolerant, because errors now don't spread. So if there was an error in one block, it can only affect the other block in one specific spot. And of course, by moving qubits around, we can not only make logic gates between proximal qubits, but we can also enable long range interaction. But most importantly, you can now start thinking about each of these patches as a kind of macro atom, an atom which consists of several physical qubits.
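The statement that errors do not spread within a block can be checked with simple bookkeeping of which qubits carry an error. The sketch uses the standard CNOT propagation rules (a bit-flip error on the control is copied to the target, a phase-flip error on the target is copied to the control) applied pairwise between two blocks. Representing errors as sets of qubit indices is an illustrative simplification, and the function name is hypothetical.

```python
def transversal_cnot(control_x: frozenset, control_z: frozenset,
                     target_x: frozenset, target_z: frozenset):
    """Propagate X and Z error locations through qubit-by-qubit CNOTs."""
    # X errors copy control -> target; Z errors copy target -> control.
    # Symmetric difference: two errors of the same type on one qubit cancel.
    new_target_x = target_x ^ control_x
    new_control_z = control_z ^ target_z
    return control_x, new_control_z, new_target_x, target_z

none = frozenset()
cx, cz, tx, tz = transversal_cnot(frozenset({4}), none, none, none)
print(sorted(cx), sorted(tx))  # the flip on qubit 4 appears only on qubit 4 of the other block
```

A single fault therefore leaves at most one faulty qubit per block, which a code of sufficient distance can still handle.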

But you can now start thinking about controlling each of these patches, each of the logical qubits, basically as one entity rather than thinking about each individual physical qubit. So basically, in all of these blocks, all physical qubits receive similar or the same set of instructions and act as a kind of one big atom. OK. I'll give just a couple of examples of how this approach works, and one of them is this logical CNOT, a logical entangling gate with these two surface codes.

So the idea here is that we'll prepare one surface code in the plus state, another one in 0 and then just try to entangle them. And basically, the simplest approach-- so these are two blocks, each has nine physical qubits. So what we'll do is just we will prepare one of them in one state and kind of measure certain types of stabilizer, prepare another, measure another type of stabilizer-- --and then just bring them on top of each other, entangle and then just separate. And the question is, did you-- by doing this encoding, did you improve on your operation? And moreover, does this improvement gets better or worse as you grow the size of this encoding block? So note that the fact that even in principle this entanglement can improve as you grow the size is actually really extremely non-trivial because in fact, what you do, you make like this so-called Schrodinger cat state here.

You make, basically, a system which is bigger and bigger, and you prepare it in this kind of superposition of two states. And so, OK, let's see how it works in reality. So here we are measuring entanglement, so-called entanglement witnesses, for this d equals 7, the larger surface code, which actually indicates that these two are entangled. And moreover, if you now look at the error in this logical entangling operation, the error decreases as the system size grows. And so that's a key landmark feature of the quantum error correcting code.

So basically the bigger you make the system past a certain threshold, the better it becomes. So there are various caveats to this observation. So one of them is that to actually obtain this result, we have to look not just at one of these qubits individually, we have to actually look at them jointly.

We have to decode them jointly. So this is the essence of this so-called correlated decoding, which actually turns out to be a very important feature of this transversal operation, as we are lately learning. One other thing is that here, there is a limitation.

We just did one round of this operation. So eventually what one needs to do is many, many rounds and, of course, then decode, again, the whole circuit together. But this already shows two things. First off, it shows that these error correction principles clearly work, but it also shows that exploring them in the lab is actually an exciting scientific frontier. For example, this idea that correlated decoding will play a role: despite the fact that this surface code has been around for a quarter of a century, there's maybe, like, one or two papers on this.

It actually turns out it's-- correlated decoding is very, very important. So this is kind of another example in which we take a different code. It's called the color code. It's kind of in some way equivalent.

And what we do here is fault-tolerant state preparation. So we basically prepare two of these color codes by just doing some kind of circuit, non-fault-tolerantly, and then we use one of these encoded circuits as a kind of ancilla to check whether the other one was prepared correctly. And so this kind of preparation is kind of like, if you want, a Ramsey experiment with logical qubits. So basically, if you measure that this one is in the state zero, then with high likelihood the other one was in the state zero. And in fact, in this case, we can actually, for the first time, at least in our system, show that this error correction improves the absolute error. So this is the bar plot showing the error which we have at the physical level. If we do the logical preparation non-fault-tolerantly, the error actually gets worse, but if we do this fault-tolerant preparation, the error gets better.

So experiments like this have been done before, in particular, with ion traps. But in our system, we have a much larger system, and we have this parallel control. In fact, this result is an average over four pairs which we do in parallel. And of course, once we have these four logical qubits prepared well, we can actually start making a circuit.

For example, we can prepare a GHZ state. Remember, up to now, only one or two logical qubits have been entangled. And so here is the GHZ state of four logical qubits. And again, one can explore this non-fault-tolerant preparation. One can do fault-tolerant preparation.

One can also utilize various kind of error detection. But also this-- already this kind of baby steps show us these logical qubits are very special. So for example physically in order to measure this entanglement, you typically do a Ramsey experiment, but actually, you vary the angle of rotation. But it's actually not possible because error correcting-- error corrected qubits are basically digital, right? So you basically go into a digital mode.

So in fact, here, we instead did some complex tomographic measurement. So what it shows is that we're really entering this regime of exploring error corrected quantum algorithms with logical qubits. And this actually is quite exciting. And one thing which somehow the community, I would say, largely missed is the idea that to make progress, at least in the near term in this area, you have to really think about multiple components of this approach at once. So I've heard, just before, Bill was chatting with people, showing how Bell Labs was a very special place where people from different disciplines talked to each other all the time.

So this is what's required here. And actually, the fact that Bell Labs is not around resulted in a little bit of a loss of this spirit. So basically, if you really want to do something useful here, you have to think about the goal you want to achieve, some application. You have to think about the algorithm. You have to find the matching quantum error correcting code. You have to find the compiler and decoder, and then somehow co-design it together with the native hardware capabilities.

So one thing which I would like to give as an example of this is the implementation of so-called non-Clifford operations. So non-Clifford operations are basically operations other than these X, Z, Hadamard, 45-degree kind of rotation, and CNOT. So basically, these Clifford operations, it turns out, can be simulated classically.

So if you only had Clifford operations, quantum computers would not have any advantage. So for quantum computers to have an advantage, you need this kind of non-Clifford operation, and they are called quantum magic, very appropriately. And this quantum magic is actually generally very challenging to generate. So some of you who read the papers might have heard that there are these so-called T gate factories and all of these kinds of things. But actually, it turns out that if you try not to be completely general, but instead utilize some native capabilities of your system, you actually can inject relatively easily a lot of quantum magic.

And this actually is possible by using the so-called three-dimensional code. So actually the simplest of them is a code, which is where qubits are placed on a cube like this. And in this code, you can actually, by sort of defining the stabilizers in an appropriate way, you can actually use eight physical qubits to encode three logical qubits.
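The counting of eight physical qubits into three logical qubits can be checked with a short calculation. The stabilizer choice below is an assumption based on the standard textbook construction of a small cube code (one X-type check acting on all eight corners, plus Z-type checks on the faces); the talk does not spell out which stabilizers are used. Names are hypothetical.

```python
from itertools import product

# Eight corners of a cube, one physical qubit per corner.
CORNERS = list(product((0, 1), repeat=3))

def face_mask(axis: int, value: int) -> int:
    """Bitmask of the four corners lying on one face of the cube."""
    return sum(1 << i for i, corner in enumerate(CORNERS) if corner[axis] == value)

def gf2_rank(rows: list[int], num_bits: int = 8) -> int:
    """Rank over GF(2) of bitmask rows, by Gaussian elimination."""
    rows = list(rows)
    rank = 0
    for bit in range(num_bits):
        pivot = next((r for r in rows if (r >> bit) & 1), None)
        if pivot is None:
            continue
        # Clear this bit everywhere; the pivot row itself becomes zero.
        rows = [r ^ pivot if (r >> bit) & 1 else r for r in rows]
        rank += 1
    return rank

z_checks = [face_mask(axis, value) for axis in range(3) for value in (0, 1)]
x_checks = [0b11111111]  # assumed single X-type check on all eight qubits

independent = gf2_rank(z_checks) + gf2_rank(x_checks)
logical_qubits = len(CORNERS) - independent
print(gf2_rank(z_checks), logical_qubits)
```

Only four of the six face checks are independent, so with the single X-type check there are five independent stabilizers, leaving 8 - 5 = 3 logical qubits under this assumed construction.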

And most importantly, on these three logical qubits you can actually operate in a way that produces this non-Clifford operation. You can produce, for example, a so-called CCZ gate, which is similar to a Toffoli gate that some of you might have heard of. And actually, you can do it transversally. So, OK. So we will utilize this idea to actually do a rather complex experiment, to run a complex algorithm, where we will encode logical qubits in the plus state and perform an alternating series of CCZ and CZ gates within a block, then entangle qubits with CNOTs produced with this motion, and then repeat it many, many times. Actually, geometrically, what we will be doing here is the following.

We will start with these cubes, encoding logical qubits, and we will be building hypercubes. So in fact, this is our target logical connectivity, but the physical connectivity for that is actually very complicated. And in fact, that's the kind of connectivity which we will try to realize. So the reason why this is actually interesting and important is that if you run a circuit like this and then make a measurement, it produces samples. And it turns out you can show that, at least asymptotically, these types of circuits are very hard to reproduce classically. It's very hard to produce classical samples which match the quantum samples.

OK. So here, now we are starting to do this kind of sampling task. So it's actually similar to things like the quantum supremacy experiments that you might have heard Google has done, but in a different context.

And so here, what we do now is we start with a simple example of 12 logical qubits, so you can actually still simulate the circuit. So here, the gray curve is the ideal theoretical distribution. So this sampling here is like looking at speckle patterns, basically.

You have some random pattern, which is produced by the circuit. And so here is what an ideal circuit would produce. So when we measure just our [? raw ?] result, it's actually, well, maybe this thing corresponds to this big line, but it's very hard to see if it actually is really, you know, the same or not.

But if you now start adding this error correction, in this case actually error detection, you basically only keep samples provided your stabilizers were in the right state. What you see is that, like, a shadow of this perfect classical distribution appears, and one can quantify it by looking, for example, at something like fidelity. And indeed, what you see is that if you do more and more of this error detection, your fidelity is actually approaching one, which is the perfect value.
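The "only keep samples where the stabilizers were in the right state" step is just a filter on measured bit strings. The following is a minimal sketch, assuming a single cube-code block measured in the Z basis with the six face parities as checks; the sample data and the names `FACES`, `passes_checks` and `postselect` are hypothetical and are not taken from the experiment.

```python
from itertools import product

VERTICES = list(product((0, 1), repeat=3))
# Assumed Z checks: the six faces of the cube, each given as a list of qubit indices.
FACES = [[i for i, v in enumerate(VERTICES) if v[axis] == value]
         for axis in range(3) for value in (0, 1)]

def passes_checks(bits, checks=FACES):
    """True when every check sees an even number of ones (no error detected)."""
    return all(sum(bits[i] for i in check) % 2 == 0 for check in checks)

def postselect(samples, checks=FACES):
    """Error detection: discard every sample that violates at least one check."""
    return [s for s in samples if passes_checks(s, checks)]

# Hypothetical measurement record: three valid bit strings and one with a single flip.
samples = [
    (0, 0, 0, 0, 0, 0, 0, 0),
    (0, 1, 1, 0, 1, 0, 0, 1),
    (1, 0, 0, 0, 0, 0, 0, 0),  # single bit flip, violates three face checks
    (1, 1, 1, 1, 1, 1, 1, 1),
]
kept = postselect(samples)
print(f"kept {len(kept)} of {len(samples)} samples")
```

The trade-off is that stricter filtering throws away more data, so the fidelity of the kept samples goes up while the acceptance fraction goes down.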

So clearly this error detection, error correction technique, this encoding here, really helps. What we can now do is increase the circuit size. And we actually went all the way to 48 qubits. And even in this case, we see that we still have a reasonable logical fidelity. So this is the largest logical circuit we have done.

Remember, up to now, people have done one or two logical qubits. And in fact, clearly, this encoding, logical operation, and error detection clearly help. In fact, our result is between one and two orders of magnitude better than the best possible result we could have ever achieved without [? encoding ?]. And also compared to other implementations, it's also at least an order of magnitude better. So this is a movie which shows this circuit.

And what you see here, this movie has this kind of hierarchical structure. We first entangle locally, then we entangle more and more globally. And then here we kind of run out of space and then bring it and [INAUDIBLE] storage zone. Then bring the other atoms and then entangle them locally to encode, and then entangle them more globally, and then entangle them more globally, and entangle them even more globally. And then at the last stage, we will actually entangle them all together. So I would say by many measures, this is the most complex circuit which has ever been successfully executed on any quantum machine to date.

But it's only a start. So, for example, one could actually explore what one can learn from this kind of circuit. And actually these circuits, it turns out, are scrambling circuits. They explore physics similar to the one which is explored in black holes, in a different way. And to explore it, what we could do is measure the so-called entanglement entropy. And so one way to do it is to make actually two copies of this circuit and then do what's called a Bell measurement, measure them jointly.

And by doing that, you can actually measure this logical entanglement entropy, which, when you do error detection, has this form, which is really very classic. It's called the Page curve. This is actually an effect which happens in a black hole.
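The two-copy trick can be sketched as post-processing on Bell-measurement outcomes. This is a minimal sketch under stated assumptions: it estimates the second-order Renyi entropy (the quantity this kind of two-copy measurement gives access to), and it assumes each Bell measurement is a CNOT from copy 1 to copy 2 followed by a Hadamard on copy 1, so that the outcome pair (1, 1) marks the antisymmetric singlet. The function names and the toy shot records are hypothetical.

```python
import math

def purity_from_bell_outcomes(shots, subsystem):
    """Estimate Tr(rho_A^2) as the average swap eigenvalue on subsystem A.

    Each shot is a list of (a, b) bit pairs, one pair per qubit. The swap
    operator has eigenvalue -1 on the singlet, assumed to be outcome (1, 1),
    and +1 on the three other Bell states.
    """
    total = 0
    for shot in shots:
        singlets = sum(1 for i in subsystem if shot[i] == (1, 1))
        total += (-1) ** singlets
    return total / len(shots)

def renyi2_entropy(purity):
    """Second-order Renyi entropy in bits."""
    return -math.log2(purity)

# Toy data for a single qubit: uniformly random Bell outcomes (maximally mixed state).
mixed_shots = [[(0, 0)], [(0, 1)], [(1, 0)], [(1, 1)]]
# Toy data for a single qubit: never a singlet (pure state).
pure_shots = [[(0, 0)], [(1, 0)]]

print(renyi2_entropy(purity_from_bell_outcomes(mixed_shots, [0])))
print(renyi2_entropy(purity_from_bell_outcomes(pure_shots, [0])))
```

Sweeping `subsystem` from a few qubits up to all of them is how a curve like the one on the slide would be traced out: the entropy rises while the subsystem is small and returns toward zero when the subsystem is the whole, globally pure, state.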

What this measurement really shows here is that this circuit is extremely entangled, but actually globally pure. So the fact that the entropy comes back almost to zero indicates that globally, the state remains quite pure. And so it really shows us already that we can start using this technique for probing the physics of entangled complex systems. So I think-- and I kind of used up my time, but maybe I will give a little bit of an outlook. So we clearly see now that with the techniques which both our group and also the community at large, which actually is a really fun community to work with, are exploring, we see quite a clear path to devices which have maybe 100 logical qubits and could maybe do operations with an error of maybe one part per million.

But there is already some work in progress, thinking about new ways to trap atoms and new, more sophisticated error correcting codes, which actually now starts to show us the way to maybe more than 1,000 logical qubits. So of course, a lot of work and a lot of challenges will need to be addressed. But it indicates already that I think we are at a point of, I would say, a phase transition. So here is a drawing from our lab downstairs in [INAUDIBLE] building. It's a drawing of this GG experiments by students.

And actually now in this drawing, each line corresponds to a logical qubit. So we really made this transition, if you want. And so I would say this medium scale, like 100 of type QC systems would be a new tool for scientific exploration, but it would also accelerate the path to large scale quantum computers. And one hope is that they would actually really start to help us answer one big question in the field, which is what quantum computers can really do that is actually useful.

So this actually is really not clear, I would say at this point. OK. I've used up my time.

So thank you very much for your attention. And I would like to also thank the people whose blood and sweat resulted in this work. Thank you so much. [APPLAUSE] Thank you very much. So first question, that video you're showing-- Yeah.

--the dots you're moving around. Those are-- that's actually an array. It's a single atoms-- That's a single atom. So this is you encoding a logical qubit? Exactly. Just-- Many, many, many logical qubits.

Exactly. OK. 40 up to 48, actually. OK.

So question, I know one issue years ago, and I don't know if it's still an issue is coherence time. And then what you're saying is that if I scale this up, I can significantly increase my coherence time. Is there an upper limit to that or have we solved the coherence time issue? So yeah, that's actually an excellent question.

So the answer to your question depends on the specific platform which you use. So for us, if you just leave qubits idling, if you encode these qubits in, like, the right type of superposition of atomic states, like spin states, coherence times can be very, very long. So in fact, in our experiments right now, it's a few seconds.

But if you work hard, I mean, you can certainly have it minutes or hours. However, this-- and it's great. It's a great starting point, but this is of kind of limited help. The reason is that if you want to do a computation, you cannot just leave qubits idling. You need to make operations between them.

And when you make operations, that's when errors occur. So for us, we don't actually need quantum error correction just to preserve coherence, but we need it to correct errors in operations. And that's where these error correcting techniques are very important.

So if all you want to do is to increase coherence time, you just need to improve the vacuum, right? Which is easy and cheaper than error correction. I'm sorry. Quick follow-up question. Yes. So with that, in typical memory, you've got your random access memory, which is only-- stores it very quickly so that way, you can do operations. And you have something that stores memory for longer, right? So you have different time scales of memory that exist from your registers to your RAM and so on and so forth.

We could. Yes, we could. So in our case, we have actually a long lived memory. At least at the scale, we are operating now essentially for free, right? So that's a difference, for example, from superconducting qubits where you can not leave the qubits idling for a long time. You have to make correction all the time just to correct memory error, right? Here, you don't need to do it. But you still need error correction if you operate on qubits.

That's the key. Hello. Hello. I'm Alejandro [? Puento. ?] A wonderful talk, by the way.

And I'm glad that you included Professor Bernien's work, because I have a question related to that. OK. Absolutely. I'm happy to answer. So his ancilla qubits are used to detect noise with the other qubits, the regular ones that are containing the information. Do you think this could possibly lead us into an adventure where we learn about the noise within atoms, different types of materials, not necessarily the cesium and the rubidium that he uses? But perhaps use it in silicon carbide or different types of vacancies where we do it in cold neutral atoms first.

We learn about the noises that are distinguishable to these types of systems. And then when we go to the superconducting side or perhaps the photonic side, we already know the noise generation that-- where the noise is coming from. Do you think that's a possible leap into the future, or do you think the properties are too strictly for cold neutral atoms? So the answer to your question is both yes and no.

So in each specific system where we start building it, and actually, as you might know, my lab is not only working on neutral atoms but also working on, for example, color centers in diamond. So basically, the first thing you do when you start building the systems is you study what your noise sources are, right? And for example, if you learn that your noise sources are, for example, like random magnetic fields which vary slowly, you often can utilize various techniques, like, for example, dynamical decoupling to mitigate it. So I did not mention it, but during this movie, we apply probably, I don't know, a million pulses to do this dynamical decoupling. So the set of these techniques is somewhat common between these different qubits. There are differences.
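Why decoupling pulses help against slowly varying fields can be seen in a toy model. This is a minimal sketch, not a model of the actual experiment: it assumes each shot sees a random static detuning plus a small linear drift, compares free evolution (Ramsey) with a single echo pulse at the midpoint, and uses made-up noise strengths. The names `coherence` and `simulate` are hypothetical.

```python
import cmath
import random

def coherence(phases):
    """Magnitude of the shot-averaged phase factor: 1 is fully coherent, 0 is dephased."""
    return abs(sum(cmath.exp(1j * p) for p in phases)) / len(phases)

def simulate(total_time=1.0, shots=2000, seed=0):
    """Compare free evolution with a single mid-point echo pulse.

    Assumed noise model (hypothetical numbers): detuning(t) = d0 + r * t, with
    d0 and r drawn fresh for every shot and constant within the shot.
    """
    rng = random.Random(seed)
    ramsey, echo = [], []
    for _ in range(shots):
        d0 = rng.gauss(0.0, 20.0)  # static offset in rad/s
        r = rng.gauss(0.0, 0.5)    # slow drift in rad/s^2
        # Free evolution accumulates the full integral of the detuning.
        ramsey.append(d0 * total_time + 0.5 * r * total_time ** 2)
        # The echo flips the sign of the phase gained in the second half, so the
        # static part cancels exactly and only a drift term of -r*T^2/4 is left.
        echo.append(-0.25 * r * total_time ** 2)
    return coherence(ramsey), coherence(echo)

ramsey_coherence, echo_coherence = simulate()
print(f"Ramsey: {ramsey_coherence:.3f}, echo: {echo_coherence:.3f}")
```

A single echo already removes the static part of the noise. Longer pulse sequences cancel progressively faster variations, which is presumably why so many pulses are applied over the course of a long circuit.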

But, like, while we are learning about strategies in one-- basically, in that sense, advancing one platform really helps us to advance another. But yet there are also differences. For example, one key difference between these isolated atoms and, for example, color centers or superconducting qubits is that these guys here, they are identical.

All of these atoms are the same, so that's why we can send the same instruction with very little calibration. We want a global calibration pulse, which would actually perform high fidelity operations. This is not the case for superconducting qubits, right? This is not the case for color centers. So there are these differences, and these inevitably allow us in one approach to advance things maybe at a faster pace. But absolutely, we have learned a lot already from what has been done, for example, with trapped ions or with superconducting qubits, and that's super helpful, and hopefully the other way around. Thank you.

[INAUDIBLE] So if I could sneak myself in again. Yes. Quick question here on timescales for the operations.

I imagine because things have to move around, that the timescale is much slower than what you'd expect for superconducting qubits. Is there any thought of combining-- Truly-- --or some tricks-- This is truly excellent question. So indeed, if you kind of look at the kind of physical level, the time scales which we have for entangling operations are actually tens of nanoseconds.

They are comparable to superconducting qubits. But the time to move atoms is slower, and reading out the qubits is also slower, since we need to collect photons. So basically, just from this kind of consideration: for superconducting qubits, what's called the clock cycle now is around 1 microsecond. So here, the natural clock cycle, just based on this consideration, is maybe close to 100 microseconds, so it's a factor of 100 slower. However, as I mentioned when I talked about this co-design, you really need to look at the system performance as a whole.

So one of the things which we actually discovered, stimulated by these experiments, is that, for example, if you use something like a surface code, normally, for each operation you need to perform multiple rounds of syndrome extraction, because your syndrome extraction is noisy. So you need to verify. And so typically, for example, you need a factor of at least on the order of D, the code distance.

And that, of course, adds to your clock cycle. So interestingly, if you move these qubits around and if you do transversal gate, we have very strong indication and there will be a paper on [INAUDIBLE]. Don't tweet it. We have very strong indication that basically for universal quantum computation, you can save at least this factor of D. So which makes already as we speak, and there are a lot of improvements which we can make, the clock cycles actually in practice as of 2024 are really quite comparable. So there is no doubt.

The clock cycles will improve on superconducting approach and in our approach. And I think the faster you do it, the better off you are. There is no question. I mean, so long as you do it well.

Doing it well is number one. So that really shows this value of this kind of co-design interdisciplinary approach. You really have to look at the performance of a system as a whole. Thank you.
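The clock-cycle argument above is simple arithmetic. In this minimal sketch the 1 microsecond and 100 microsecond physical cycles are the rough figures quoted in the answer, while the code distance of 25 and the reading of "save a factor of D" as "one syndrome round per transversal gate instead of about D rounds" are assumptions made only for illustration.

```python
def logical_cycle_us(physical_cycle_us, syndrome_rounds):
    """Time per logical operation: one physical cycle per syndrome-extraction round."""
    return physical_cycle_us * syndrome_rounds

CODE_DISTANCE = 25  # hypothetical value, not a number given in the talk

# Conventional surface-code operation: roughly D rounds of syndrome extraction.
superconducting_us = logical_cycle_us(1.0, CODE_DISTANCE)
# Transversal gates with moving atoms: assumed to need about one round per gate.
neutral_atom_us = logical_cycle_us(100.0, 1)

slowdown = neutral_atom_us / superconducting_us
print(f"superconducting: {superconducting_us} us, neutral atoms: {neutral_atom_us} us, "
      f"ratio: {slowdown}")
```

Under these assumptions the factor of 100 at the physical level shrinks to a factor of 100/D at the logical level, which is the sense in which the two clock cycles become comparable.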

Hi, sorry. I arrived on a red eye flight this morning, so the question may not be all that good. Sorry, sorry, what-- OK. OK. OK.

So first, I want to ask, what's the current capabilities of, like, all like of the state of the art, like, quantum-- Computers? Yes. I see. Yeah. Yeah. So to answer this, maybe [INAUDIBLE] I'll try to do-- squeeze in five minutes, which would probably be a couple hour talk. So, yeah.

So basically across-- and first, I mean, there are no bad questions, right? So every question is actually an excellent question. So basically, there are several kinds of systems that researchers consider, like, leading systems now. So early on, one of the first systems where people started really doing meaningful quantum computation experiments was trapped ions. And trapped ions are very similar to what I described, except that the atoms are charged, right? And you can use this charge, for example, to make the ions interact very strongly. And so in many respects, trapped ions are still a very powerful and in some respects even leading platform.

So, for example, if you just isolate two ions and try to entangle them, you can entangle them with an error which is the best by far of any approach. However, it turns out if you start making a system larger, if you keep adding ions, then these errors get worse. And in fact, it's very hard, and no one has actually up to now done experiments with more than maybe 50 ions in a meaningful way. So scaling turns out to be very hard. So superconducting qubits, of course, is a very popular approach. As already discussed, the difference in superconducting qubits is that you basically start with the situation where all qubits are different, so you need to somehow tune them together.

So they are also solid state systems. And maybe, Natalie, I don't know if she talked about sort of ways to try to improve superconducting qubits, kind of there is a lot of work underway. So there are some groups like IBM.

They claim to have created systems with a couple of hundred superconducting qubits. But this-- like at the same time, these big systems also have fidelities and error rates, which-- fidelities which are way too low and error rates are very high. So I would say the leader in this field is Google, who has done a number of fantastic experiments. But these experiments are limited right now to something like 60, 70 qubits.

So there are other approaches which are being considered. So, for example, people talk about photonics. The problem is photons don't interact. And to make the kind of computation between the reliable computation between photons actually turns out to be very challenging.

It is in principle possible by using linear optics and photon detection. But, like, doing kind of photonic experiments at scale, I would say still remains-- like, at large scale like scale of maybe 100 or more is still a little bit science fiction. So-- Oh, and also, can you go back to the part about the non-Clifford algebra? Yes. Yeah. Yes. So is there-- there's no system-- there's no successful implementation of non-Clifford operations yet, right? Well, this is what I've showed.

So this is this scrambling circuit which we implement-- Mm-hmm. --contains basically-- contains dozens of these CCZ gates. So the CCZ gate is non-Clifford. And so these circuits which we have made basically have up to about 100 CCZ gates. So there's a lot of-- and these are non-Clifford operations on logical qubits. OK.

Yeah. I will be very quick, professor. Second but last. OK. Yeah. I'll make it really quick.

Yeah. You had this apparatus where you had the SLM. Yeah. I was trying to understand that topology. The role of SLM is to read the atoms or actually arrange them? That was my-- So-- You had the spatial, like, modulator. Yes, yes, yes.

So basically, there are a few-- Yeah. --things. So the SLM basically-- what SLM does, it creates kind of a backbone array of traps, which are static, which we can kind of fill or empty by moving atoms around, right.

And then we have actually now several of these-- --so this simplified version, but we now have several of these crossed [INAUDIBLE] which are used to move atoms around, but also to address atoms individually, right? And basically what we do is by using this kind of AODs, what we can do, we can just pick the atoms from some subset of tweezers, SLM tweezers and move them to another subset. So that's kind of the approach. I see. And this is a system you designed? Like, these are off the shelf component or actually you build it? So these are actually, amazingly, are off the shelf components. So-- Interesting.

Thank you. And it's amazing. It remains to be a question how far we can push this technology as we scale up.

But at least sort of at the level of-- But SLM actually has a resolution to capture the atom, size of the atom. That's what you're saying? Yes, exactly. That's amazing. They created SLMs now with thousands, maybe over 10,000 traps, extremely homogeneous in any configuration. Yes.

Yeah. They are very powerful. These optical techniques are special. That's one message from my talk. Yeah. Thank you again for the great talk.

I have a very simple and short question. Yeah. When you are talking about the hyperfine qubits with the T2 time of two seconds, right, and you mentioned that you can move them around using lasers. Yeah.

I am assuming that the decoherence time depends-- might depend on the wavelength of the laser. And-- Absolutely. Is it, like, a continuous laser, or is it-- do you [? pulse? ?] So we work with lasers which are CW, which are continuous.

Continuous. But we sometimes-- for example, when we do it-- like, and you mentioned it, but for example, when we excite atoms in the Rydberg state-- Mm-hmm. --we actually turn tweezers off for a very brief time. It apparently does not do anything because atom does not have time to fly away, right? But for example-- but all of this-- and all of these are CW lasers. Of course, at some point, you will-- you need tens of watts of power. Actually, the most powerful laser in our system can produce up to 100 watts.

Wow. It's not actually used for tweezers right now. It's used for this Rydberg-- for a very fast Rydberg excitation.

And you are completely correct. So the degree to which we can preserve coherence depends, actually, on the color on a frequency of the laser, which we use for trapping. And kind of the generic rule is that you need to be as far detuned from the transition from a resonant transition to basically avoid any kind of unwanted scattering. But of course, if you detune very far, your traps become very shallow.
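The compromise between detuning and trap depth follows from two textbook scalings for a far-detuned optical trap: depth goes as intensity over detuning, and photon scattering goes as intensity over detuning squared. This is a minimal sketch in arbitrary units with all prefactors set to one. The function names are hypothetical and no real laser parameters are implied.

```python
def trap_depth(intensity, detuning):
    """Far-detuned dipole trap depth scales as intensity / detuning (arbitrary units)."""
    return intensity / detuning

def scattering_rate(intensity, detuning):
    """Unwanted photon scattering scales as intensity / detuning**2 (arbitrary units)."""
    return intensity / detuning ** 2

def intensity_for_depth(depth, detuning):
    """Laser intensity needed to keep a given trap depth at a given detuning."""
    return depth * detuning

for detuning in (1.0, 10.0, 100.0):
    needed = intensity_for_depth(1.0, detuning)
    print(f"detuning {detuning:6.1f}: "
          f"depth at fixed power {trap_depth(1.0, detuning):.3f}, "
          f"power for fixed depth {needed:6.1f}, "
          f"scattering at fixed depth {scattering_rate(needed, detuning):.3f}")
```

At fixed laser power the trap gets shallower as the detuning grows. At fixed depth the scattering falls as one over the detuning, but the required power grows in proportion, which is where high-power lasers come in.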

So there is a little bit of a compromise. But basically what we do to enable these few second coherence times is we detune lasers very far, but then we also make use of these dynamical decoupling techniques, which I mentioned, to basically carefully echo out any perturbation. So this is actually-- this is a T2. It's not T2 star.

T2 star, I mean, it depends on the conditions, but it's typically subsecond. And as a quick follow-up question, I'm sorry about that. Maybe I missed that. When you say, like, you apply gates, how do you apply gates exactly? Is it the same thing as the superconducting qubits-- No, no, no, no, no, no. It's very different. So what we do is-- well, OK, to apply single qubit gates, we just shine light on atoms in the way to basically change their spin state.

Oh-- And we use typically what's called Raman transitions, which basically rotate this, you know, spin qubits. So at the same time, if we want to do two qubit gates, what we do, we excite atoms in a Rydberg state with lasers, but only very briefly. We excite them and bring them down. And this-- through this blockade, we have almost a mechanism to create a kind of digital conditional phase shift. So this is actually very different from superconducting. Thank you again.

Thank you so much. So let's thank Professor Lukin again. [APPLAUSE] And so I think right now you guys can go put up your posters. I'm not sure if folks are going to-- --outside. Oh, they're going to-- OK. So they're going to meet you outside to show you how to get down to the foyer for CNS where the poster session will be.

2024-08-16 18:03