Modernizing your Data Warehouse with Data Ingestion, Preparation, and Serving using | BRK3224

Welcome, everyone, to day two of Ignite. My name is Matthew Hicks. I'm a program manager on the Azure Data team at Microsoft, and today I'll be talking about Azure Synapse Analytics: specifically, how to modernize your data warehouse using the great benefits being introduced this week as part of Ignite. Raise your hand if you've heard of Azure Synapse Analytics, either through the keynotes or through a previous session today. Awesome. And who attended the session before this one, about AI and ML? Awesome. Okay, cool, thank you so much. We're really excited about this offering. This is a 300-level session focused on the modern data warehouse pattern and how it's being improved with these enhancements to the service. There are many more opportunities to learn, so afterwards I highly encourage everyone to check out our booths in the Hub, as well as the sessions we have throughout the week. I'm happy to answer questions throughout this presentation. If you have solution-specific or scenario-specific questions, we'll have Q&A at the end, and I'll also be available afterwards, but I'll leave opportunities during the presentation for any urgent questions you might have.

As you may have heard in the keynotes and other sessions, Azure Synapse Analytics is Azure SQL Data Warehouse evolved. It's a limitless analytics service with unmatched time to insight. It brings all the benefits of the generally available SQL Data Warehouse service that you know and love today, and it combines big data and data integration into a single experience, with a single pane of glass for authoring, monitoring, and securing your entire solution, from the ingest stage of bringing in your data all the way to visualizing it in Power BI.

Why are we doing this? As we work with customers every single day, we hear one challenge from them again and again.

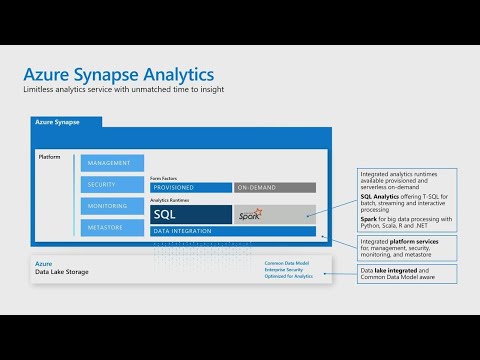

It's a little tough to build the modern data warehouse pattern when you have separate services that you have to stitch together. You end up with two different worlds, big data and relational data, each with its own benefits. With big data, you bring a lot of data into the lake and land it there, and it's really fast to explore and get started on the data lake. Separately, a data warehouse has its own benefits: proven security, really quick response times, and dependable performance. But using these systems together is almost like trying to bring two different worlds together. Before this offering, there wasn't really one service that combined them in a coherent way.

With Azure Synapse Analytics, we're bringing these two worlds together: structured and unstructured data, big data and relational data, all in one experience, so you can operate on the same data with different kinds of workloads, within your comfort zone, meeting you where you are in each of these scenarios.

Let me walk through a quick breakdown of what's in Azure Synapse Analytics. It all relies on having Azure Data Lake Storage as your storage system. You have data that you've brought in as part of your existing modern data warehouse solution, and with Azure Data Lake Storage you get enterprise security out of the box, a storage solution that's optimized for analytics, and integration with the Common Data Model, which continues to evolve across Microsoft.

There are four areas through which this service provides a uniform, single-pane-of-glass experience for managing your entire solution end to end. When you're developing your modern data warehouse pattern, you get a single story for management of all the different components, connections, and other pieces of your solution; security, with one place to secure every aspect of your solution; monitoring, through a single view that lets you go from the top-level solution all the way down to a specific job running as part of a larger pipeline; and a metastore, a common idea of what metadata exists in the system, because you want Spark, SQL, and other engines to know about a common set of tables and metadata.

Data integration is also a major part of Azure Synapse Analytics. Who here has used Data Factory before? That's a lot of people. Okay, so it's a key part of any analytics solution that is heavy on operationalization and data movement, orchestrating activities and data movement within Azure. With more than 90 connectors, it's compatible with many different kinds of solutions inside and outside the cloud, and you get all of those benefits in Azure Synapse Analytics as well: developing, orchestrating, and configuring pipelines, and maintaining connections with things outside your solution.

SQL and Spark are two separate engines that we're bringing into this single Azure Synapse Analytics offering, and they're nicely combined because they understand the same underlying data and you can monitor them together. Provisioned and on-demand compute lets you choose: without spinning up any resources beforehand, you can quickly run a single query against data you've landed in the lake, or you can use provisioned resources that give you dedicated capacity for a system that relies on that capacity being available.

Furthermore, we're meeting developers where they are in terms of the languages they're comfortable with: SQL, Python, and .NET. If you're a .NET person, you can use .NET for Spark, a great new feature coming with Azure Synapse Analytics. And of course there's SQL and Python, if you're used to T-SQL, for example, or to Python in your Spark notebooks.

No offering like this is complete without a really great experience, and so this week we're proud to introduce Azure Synapse Studio. This is the place on the web where you can access your solution from a single pane of glass and create your entire modern data warehouse pattern, from ingestion, to preparation, to analysis, to serving, and even visualizing in Power BI, all without leaving that one studio.

This is a code-free and code-first developer experience. Whether you're just getting started with building modern data warehouse solutions or you're managing a large, complex solution with many pipelines, it's intended to scale, and it lets you build and monitor these solutions within one experience.

What this enables is analytics workloads of many kinds at any scale: AI or ML, as you saw in the previous session, intelligent apps, business intelligence, or IoT. These workloads all work gracefully within the solution, because you have one place to develop and you get the benefits of tried-and-true systems like Data Factory and the other components within Azure Synapse Analytics.

So let's talk about modernizing your data warehouse. When we talk about the modern data warehouse pattern with customers, it's usually in the context of a few reference architectures. The three reference architectures we talk about in Azure are modern data warehousing, advanced analytics, and real-time analytics. Modern data warehousing is the classic pattern: you bring in your data, prepare it so it's in the form you want, analyze it, and serve it in a form that's ready either for visualization or for the next component of your overall solution. Advanced analytics adds to that, using ML and other techniques to take the next step toward deeper insights into your data. Real-time analytics adds a streaming component to the MDW pattern. Today I'll be focusing on the modern data warehousing reference architecture, and of course you can build upon it within this experience as well.

In modern data warehousing, there are three categories of canonical operations. The first is loading and ingesting data: wherever your data is, you want to bring it in and land it in the lake. The next step is to prepare and analyze that data. After you've explored the data to see how you want to modify, transform, or clean it up, you use something like data flow to prepare it, and then you can analyze it using Spark notebooks, Spark jobs, SQL, or many other compute offerings. The final step is to serve and visualize the data. Whether or not visualization is the last component of your MDW pattern, this step is about serving the data, now that it's in the form you want, from a layer that's ready for whatever the next use case is. For example, if you want a dashboard that many people in your company use, you need to serve that prepared, analyzed data from a place that can handle the scale of that next phase of your solution.

Now let's look at how Azure Synapse Analytics relates to those phases. At the top, we see a more detailed breakdown of those steps: ingesting and storing data from multiple sources, preparing data, analyzing the prepared data, serving it, and building Power BI dashboards and reports. In today's demos, I'll be focusing on Azure Synapse Studio, the monitoring component of the platform, SQL and Python, and the provisioned and on-demand form factors for compute. We'll take a look at SQL and Spark and at data integration. As part of this, we'll also show Data Lake Storage Gen2, and lastly, we'll show how to use all of this in Power BI.

Synapse Studio is what we'll see during the demo. It's the central place for all of your modern data warehouse development within Azure Synapse Analytics. It combines ingestion, preparation, analysis, and serving, and it lets you develop notebooks, SQL scripts, pipelines, Power BI reports, and more, all in one place. As I mentioned, it provides a single pane of glass for authoring, monitoring, and securing your solution. It also lets you see visually, as I'll show right off the bat, that Spark and SQL can operate on the same data with no need to duplicate it: you can ingest your data and query it however you like, with Spark, SQL, or many other ways.

So let's dive in, starting with ingesting and storing data from multiple sources. I'll show how you can bring in data from Azure Storage. In this case the files happen to be Parquet files, a columnar file format, and you can explore that data using SQL on-demand to make sure that, once you've ingested it, it's in the form you want.

What I have here is Azure Synapse Studio, with my own workspace for taxi cab insights. Right off the bat, I can start building my solution with something you might be familiar with from Data Factory: the Copy Data wizard. When I want to bring in some data, it lets me specify, step by step and without writing any code, where my data is coming from, how to connect to it, what the data looks like in terms of its schema, how much of the data I want to bring in, and where and in what format I want to land it. I have full control over that, as well as over options such as whether I want to retry. Out of this wizard, I get a copy component in a pipeline that ingests the data into my solution, and I can then orchestrate and automate that as I wish.

To give a quick glimpse of that: I'm choosing my source ADLS account for ingestion. I'll browse to my taxi data, open the taxi cab dataset, and go to the yellow cab dataset, which has information about trips, fares, and tips for years and years of New York taxi cab rides; it's an open public dataset. I'll open one of these files, choose my yellow cab dataset, specify that the file format is Parquet, and continue building this pipeline.
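The wizard's retry option is easiest to picture as a loop. Below is a minimal Python sketch of retry-on-failure semantics, assuming a policy with a retry count and a fixed interval between attempts, similar in spirit to the retry settings on a pipeline activity. The function `run_with_retry` and its parameters are hypothetical illustrations, not the service's implementation.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_retry(
    activity: Callable[[], T],
    retry: int = 3,
    retry_interval_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `activity`, retrying up to `retry` more times if it raises.

    Hypothetical sketch: at most 1 + retry attempts are made, with a fixed
    pause between them; the last error is re-raised if every attempt fails.
    """
    last_error: Optional[Exception] = None
    for attempt in range(retry + 1):
        try:
            return activity()
        except Exception as err:  # a real service would distinguish transient errors
            last_error = err
            if attempt < retry:
                sleep(retry_interval_seconds)
    assert last_error is not None
    raise last_error


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_copy() -> str:
        # Hypothetical copy step that fails twice before succeeding.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("transient source error")
        return "copied"

    print(run_with_retry(flaky_copy, retry=3, retry_interval_seconds=0))  # copied
```

Injecting `sleep` keeps the sketch testable without real waits.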
When it's complete, the wizard produces a copy activity within a pipeline. Once that data has landed, I can explore it. You might have seen this in previous sessions, but I'll show it quickly: the studio makes three main areas really easy. You can explore your data, develop activities such as SQL scripts or notebooks, and orchestrate your overall solution.

Within the Data tab, I'll go to the dataset I've ingested and open one of the Parquet files I brought in. I want to make sure this data is in the form I want, and I might have to do some data cleansing, so let's see how I might do that. I can right-click this file and select New SQL script. This by itself demonstrates how we're bringing together two things that were previously very separate: exploring your data, and immediately performing analytics or data prep operations on it. When I create the new SQL script, you'll see that I'm not connected to a database or a data warehouse; I'm connected to SQL Analytics on-demand. This lets me query this file in my data lake using T-SQL, in an on-demand fashion.
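The query itself is T-SQL running on SQL Analytics on-demand, but the check being made at this step is simple to state in code: count missing values per column, then keep only the columns you need and drop rows missing any of them. Here is a minimal Python sketch over in-memory rows; the column names (`pickup_date`, `fare_amount`, `tip_amount`, `store_and_fwd_flag`) and the sample values are illustrative assumptions, not the actual taxi file.

```python
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def is_missing(value: Any) -> bool:
    """Treat None and blank strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def missing_counts(rows: Iterable[Row]) -> Dict[str, int]:
    """Count missing values per column across all rows."""
    counts: Dict[str, int] = {}
    for row in rows:
        for column, value in row.items():
            counts[column] = counts.get(column, 0) + int(is_missing(value))
    return counts


def clean(rows: Iterable[Row], keep_columns: List[str]) -> List[Row]:
    """Project rows onto `keep_columns`, dropping rows missing any of them."""
    cleaned: List[Row] = []
    for row in rows:
        projected = {column: row.get(column) for column in keep_columns}
        if not any(is_missing(value) for value in projected.values()):
            cleaned.append(projected)
    return cleaned


if __name__ == "__main__":
    # Made-up sample rows for illustration only.
    sample = [
        {"pickup_date": "2019-01-01", "fare_amount": 12.5, "tip_amount": 2.0, "store_and_fwd_flag": "N"},
        {"pickup_date": "2019-01-01", "fare_amount": None, "tip_amount": 1.0, "store_and_fwd_flag": ""},
        {"pickup_date": "2019-01-02", "fare_amount": 8.0, "tip_amount": 0.0, "store_and_fwd_flag": ""},
    ]
    print(missing_counts(sample))
    # {'pickup_date': 0, 'fare_amount': 1, 'tip_amount': 0, 'store_and_fwd_flag': 2}
    print(len(clean(sample, ["pickup_date", "fare_amount", "tip_amount"])))  # 2
```

Note that a tip of `0.0` counts as a real value, not a missing one; only `None` and blank strings are treated as gaps.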

As I run this, it reads the Parquet file I specified, processes the data, and returns some information about it in a structured, tabular way. That lets me take a look at the data right away and see which columns or rows I want to get rid of or adjust. Within the same experience, I can also quickly visualize what that data looks like. I can customize the chart to show the overall fare amount and the tip amount by day, and it looks like on some of these days, tips were quite high.

So the table view lets me ask: are there columns or rows I don't really need? It looks like there are some rows with null or empty data, and some columns I can get rid of, to make sure the dataset I feed into the next phase of my pipeline is in the form I want. The chart lets me quickly spot trends and insights. Getting this kind of benefit used to take a lot of work, and now it's all within one experience.

Next, I want to clean up this data and make sure it's prepared and ready for the next phase of my solution. For that, I'll use data flow, which is a code-free way of preparing data. With data flow, I can build a visual, code-free representation of where my data is coming from and how I want to filter, aggregate, or otherwise modify it. There are actually many ways of transforming the data as I get it ready to feed into the next phase of my pipeline. In this case, I'm using data flow to filter out some columns and some years of data, and to output the result to the yellow cab sink, where it's ready for the next phase of my pipeline.

Let me go back to the slides. What I've done so far is bring in the data and explore it to make sure it's in the form I want. I've also prepared the data, using data flow to analyze the dataset, see what's wrong with it, and clean it up, and as a sink I've landed the result in a Spark database. Besides data flow, there are a few other ways to prepare your data: you can use Spark notebooks or Spark job definitions, or you can use SQL queries. This gives you full flexibility in which technology you use to prepare your data. There are many options besides these three, but these are the ones most commonly requested and used to prepare data.

The mapping data flow is what we saw on the screen during that demo: code-free transformation of data at scale. The wrangling data flow is another option that allows code-free data preparation at scale. We often see these two activities alongside each other in modern data warehouse patterns, and each has its own benefits. You use mapping data flow to transform data: you bring in some data and, before it moves on to the next phase, you join some datasets or perform lightweight aggregations or other operations so that it's ready and optimized for the next phase of your solution.
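Data flows are built visually, without code, but the shape of the flow used in this demo (a source, a filter that drops older years, a select that keeps only the needed columns, and a sink) can be sketched as composable steps in Python. This is only an analogy under assumed column names, not how data flows execute; `select`, `filter_rows`, and `run_flow` are hypothetical helpers.

```python
from datetime import date
from typing import Any, Callable, Dict, Iterable, Iterator, List

Row = Dict[str, Any]
Step = Callable[[Iterable[Row]], Iterator[Row]]


def select(columns: List[str]) -> Step:
    """Select transformation: keep only the listed columns."""
    def step(rows: Iterable[Row]) -> Iterator[Row]:
        for row in rows:
            yield {column: row[column] for column in columns}
    return step


def filter_rows(predicate: Callable[[Row], bool]) -> Step:
    """Filter transformation: pass through only rows matching `predicate`."""
    def step(rows: Iterable[Row]) -> Iterator[Row]:
        return (row for row in rows if predicate(row))
    return step


def run_flow(source: Iterable[Row], steps: List[Step], sink: List[Row]) -> int:
    """Stream source rows through each step in order; append results to sink."""
    stream: Iterable[Row] = source
    for step in steps:
        stream = step(stream)
    before = len(sink)
    sink.extend(stream)
    return len(sink) - before  # number of rows written


if __name__ == "__main__":
    # Made-up rows; `vendor_id` stands in for a column we don't need downstream.
    source = [
        {"pickup_date": date(2008, 5, 1), "fare_amount": 9.0, "tip_amount": 1.0, "vendor_id": 1},
        {"pickup_date": date(2018, 3, 4), "fare_amount": 15.0, "tip_amount": 3.0, "vendor_id": 2},
        {"pickup_date": date(2019, 7, 9), "fare_amount": 7.5, "tip_amount": 0.5, "vendor_id": 1},
    ]
    yellow_cab_sink: List[Row] = []
    written = run_flow(source, [
        filter_rows(lambda r: r["pickup_date"].year >= 2018),  # drop older years
        select(["pickup_date", "fare_amount", "tip_amount"]),  # drop unneeded columns
    ], yellow_cab_sink)
    print(written)  # 2
```

Because each step is a generator, rows stream through the chain one at a time instead of materializing every intermediate result.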
Wrangling data flow, on the other hand, is for when you bring in raw data and you're not really sure what it looks like. You want to correct whatever problems there are with the ingestion or with the source of the data, and make sure you're not bringing in extra data that takes up space you don't need for the next phase of your solution.

So we've seen ingesting, storing, exploring, and preparing the data. The next phase is analyzing the prepared data. Within the studio, I'll show you how to do this with a Spark notebook, but there are many other ways of analyzing that data, using T-SQL or basically any other compute component.

If you're developing a modern data warehouse pattern with Data Factory today, you can continue to use whatever compute you're using within Azure Synapse Analytics. That's one of the main draws of Azure Synapse Analytics: a pipeline you use today in Data Factory can work in Azure Synapse Analytics as well. In this case, I'm analyzing my data in a notebook. I'm bringing in data that's stored in Spark databases, analyzing it in a notebook, and then landing the output in a database for a SQL pool in SQL Analytics within Azure Synapse Analytics. That makes the data ready to be served and ready for use by a Power BI visualization.

Before we dive into the demo: the Power BI integration we have today is not a subset of Power BI functionality or a separate version of Power BI. You continue to use the Power BI you know and love, and the connection between your Power BI workspace and your Azure Synapse Analytics workspace lets you build reports in your existing Power BI workspace, right within the studio UI. The same UI you use to ingest, explore, and analyze your data can also be used to build the reports themselves, so each phase of the modern data warehouse pattern can be done within one experience.

Let's take a look. I'm back in my workspace, and I'll navigate to a notebook. This is a New York taxi cab dataset analysis notebook. It brings in data from my Spark database, the one I landed the data in using the data flow we saw, and analyzes it using PySpark, generating visualizations along the way so I can make sure I'm producing the data I want. It also outputs data into SQL pools, so the served data is ready to be used by Power BI or the next phase of my analytics solution. We see that it's calculating tip amount by passenger count, and it's visualizing some really interesting trends, such as fare amount versus tip amount.

The next step is to see how I can visualize the data that's now landing in the SQL data warehouse. What's cool is that in the Develop section of the studio, I can not only explore and develop all the SQL scripts and notebooks I've used throughout this demo, but also work with Power BI.
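In the notebook itself this aggregation would be PySpark, for example something along the lines of `df.groupBy("passenger_count").avg("tip_amount")`, assuming a DataFrame `df` with those column names. The same group-and-average logic in plain Python looks like this; `average_tip_by_passengers` is a hypothetical helper and the trips are made-up values.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Tuple


def average_tip_by_passengers(trips: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Group (passenger_count, tip_amount) pairs and average the tip per group."""
    totals: DefaultDict[int, float] = defaultdict(float)
    counts: DefaultDict[int, int] = defaultdict(int)
    for passengers, tip in trips:
        totals[passengers] += tip
        counts[passengers] += 1
    # Sort keys so the result reads in passenger-count order.
    return {p: totals[p] / counts[p] for p in sorted(totals)}


if __name__ == "__main__":
    trips = [(1, 2.0), (1, 4.0), (2, 3.0), (3, 0.0)]
    print(average_tip_by_passengers(trips))  # {1: 3.0, 2: 3.0, 3: 0.0}
```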

Within the Power BI section, I can explore existing reports and add to them. Who here has used Power BI? Awesome, so this should look very familiar. Right now I'm loading a Power BI report that shows some existing visualizations, and I can easily add another one: say I want to see the fare amount by pickup date. I can use this embedded Power BI experience to see that visualization, save it, view it, and refresh it. This lets me produce reports right within Power BI, in the context of the overall studio experience.

Let me go back to the presentation. After I've developed all the different components of my overall solution, the next step is to put them into a pipeline that says: I want my ingestion to be followed by data prep, followed by analysis, and finally by landing the data in the place where it can be served, the SQL pool. Once the pipeline is built, I can trigger it based on schedules, events, or tumbling windows.

One thing you'll see at the bottom of the slide is that, just as you're used to with Data Factory, the entire pipeline leverages linked services, which I defined as part of connecting my workspace to things outside it. Just as you connect a data factory to data sources, sinks, and sources of compute, you use linked services within the Azure Synapse Analytics workspace to connect to different sources of data so that the data can be processed.
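The ordering described above (ingest, then prepare, then analyze, then serve, with each step running only after the previous one succeeds) is a small dependency graph. Here is a minimal Python sketch using the standard library's `graphlib` (Python 3.9+). The activity names and the skip-on-upstream-failure rule are illustrative assumptions, loosely mirroring success dependencies between pipeline activities; `run_pipeline` is hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(
    activities: Dict[str, Callable[[], None]],
    depends_on: Dict[str, Set[str]],
) -> Dict[str, str]:
    """Run activities in dependency order and return each one's status.

    Every name referenced in `depends_on` must also appear in `activities`.
    An activity runs only if all upstream activities succeeded; otherwise it
    is marked "Skipped". An activity that raises is marked "Failed".
    """
    graph = {name: depends_on.get(name, set()) for name in activities}
    status: Dict[str, str] = {}
    for name in TopologicalSorter(graph).static_order():
        if any(status[upstream] != "Succeeded" for upstream in graph[name]):
            status[name] = "Skipped"
            continue
        try:
            activities[name]()
            status[name] = "Succeeded"
        except Exception:
            status[name] = "Failed"
    return status


if __name__ == "__main__":
    steps: List[str] = []
    activities = {
        "ingest_copy": lambda: steps.append("ingest"),
        "prepare_dataflow": lambda: steps.append("prepare"),
        "analyze_notebook": lambda: steps.append("analyze"),
        "serve_sql_pool": lambda: steps.append("serve"),
    }
    depends_on = {
        "prepare_dataflow": {"ingest_copy"},
        "analyze_notebook": {"prepare_dataflow"},
        "serve_sql_pool": {"analyze_notebook"},
    }
    print(run_pipeline(activities, depends_on))
    print(steps)  # ['ingest', 'prepare', 'analyze', 'serve']
```

Skipping downstream work after a failure means the serving layer is never refreshed from partially prepared data.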
Once you've built that pipeline, the next step is to parameterize it, so that it operates on the part of the data you want and receives information about the context in which it's running. You can schedule the pipeline to run periodically based on any of the triggers we discussed, and you can monitor the overall solution to see how your pipelines are running.
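One common way to parameterize a pipeline is by time window, which is what a tumbling window trigger does: it slices time into fixed-size, contiguous, non-overlapping intervals and hands each run its own window start and end (in Data Factory these are exposed through expressions such as `@trigger().outputs.windowStartTime`). Here is a minimal Python sketch of the windowing itself; `tumbling_windows` and the parameter names `windowStart` and `windowEnd` are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterator


def tumbling_windows(
    start: datetime, end: datetime, size: timedelta
) -> Iterator[Dict[str, datetime]]:
    """Yield contiguous, non-overlapping [windowStart, windowEnd) intervals.

    Only complete windows that finish at or before `end` are produced, so each
    pipeline run can be parameterized with exactly one slice of the data.
    """
    if size <= timedelta(0):
        raise ValueError("window size must be positive")
    window_start = start
    while window_start + size <= end:
        yield {"windowStart": window_start, "windowEnd": window_start + size}
        window_start += size


if __name__ == "__main__":
    begin = datetime(2019, 11, 1, tzinfo=timezone.utc)
    for params in tumbling_windows(begin, begin + timedelta(days=3), timedelta(days=1)):
        # Each dict would be passed to one (hypothetical) pipeline run as parameters.
        print(params["windowStart"].isoformat(), "->", params["windowEnd"].isoformat())
```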

The benefit of Azure Synapse Analytics when it comes to monitoring is that you can dive in: if a pipeline didn't run well, you can find out what caused the problem. Because I developed the solution within the studio, I can click into specific aspects of it and debug right away.

Let's take a look at operationalizing this pipeline. Oh, and here we see the visualization we just created: there's a lot of data, and quite a trend in fare amount by pickup date over time.

I'll go to my home screen, where the orchestration section lists my pipelines. Let me make this a little bigger so you can see it. In the New York taxi cab pipeline I'm loading here, we see the copy activities I created using the Copy Data wizard and a data flow; I'm also showing a notebook as an alternative way to prepare data. The data flow feeds into Copy Data and Stored Procedure activities that serve the data and make it ready for the next phase, so that whenever this pipeline runs, my Power BI report is up to date with the latest data.

How do I trigger this? I can add a trigger here: I can trigger this pipeline now, or I can create a new trigger that runs, say, every one day at 00:00 UTC. With that, the pipeline automatically runs daily at 00:00 UTC.

Now, when I want to monitor how my pipelines and my solution as a whole are operating, I can go to the Monitor section of the studio UI. What's really great about this is that, just as in ADF, I see a list of pipeline runs that shows how my overall solution is doing.
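A schedule trigger that fires every day at 00:00 UTC only needs to compute the next UTC midnight after the current time. Here is a minimal Python sketch of that calculation; `next_daily_run` is a hypothetical helper, and treating a run time exactly equal to `now` as already past is a design choice of this sketch.

```python
from datetime import datetime, time, timedelta, timezone


def next_daily_run(now: datetime, at: time = time(0, 0)) -> datetime:
    """Return the next time after `now` at which a daily UTC trigger fires.

    `now` must be timezone-aware; it is converted to UTC first.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    now_utc = now.astimezone(timezone.utc)
    candidate = datetime.combine(now_utc.date(), at, tzinfo=timezone.utc)
    if candidate <= now_utc:
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    now = datetime(2019, 11, 5, 15, 30, tzinfo=timezone.utc)
    print(next_daily_run(now).isoformat())  # 2019-11-06T00:00:00+00:00
```

Converting to UTC first matters: 20:00 in a UTC-5 zone is already 01:00 UTC the next day, so the next run is a day later than a naive local-time calculation would suggest.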
For example, if this pipeline ran a few times in the past week, I can see which runs are in progress, which failed, and which succeeded. I can also see other components of my overall solution, in this case Spark applications: in the last 30 days there were some failures, some successes, and some items queued. If I want to investigate what happened, I can open the details of an activity and get more information. These rich insights let me see what's happening and act on it right away. I can also launch the Spark History Server UI, which, if you're familiar with Spark, lets you debug and investigate issues right away.

So, on the whole, we've seen how, through one experience, we can ingest data, prepare it, analyze it, serve it, and visualize it.

If you have any questions, please let me know, but first, let me mention some upcoming sessions. We have two booths in the Hub area, and we highly recommend you come by. We'll be happy to answer any questions you have and talk a little more; we have some running demos there as well, and a lot more information we can share. We also have more sessions. Later today, we have securing your data warehouse with Azure Synapse Analytics, a session on on-demand capabilities that goes deeper into the on-demand functionality I showed, and one on cloud data warehousing. There are a few more on Wednesday, Thursday, and Friday; I highly recommend you all take a look and sign up. One thing I'd really recommend is the lab we have on Thursday afternoon, where you can sit down and try this product right away. There are only a few slots and they're going quickly, so make sure you sign up; it's a chance to try the product out and see how easy it makes your modern data warehouse development. Again, stop by our booth; we have a lot more information, and we'd love to talk with you more.

Let's do some Q&A. Any questions? Yes?

Existing CI/CD? We have more we can share on that if you stop by our booth. We have Git integration capabilities that let you connect your workspace to a Git repository, so you can keep track of who's making changes to your overall production pipeline. That's very important; Data Factory offered it and it was a game changer for their customers, and it's something we're going to integrate deeply as well. CI/CD, in the sense of making sure that changes go through a proper build process and are integrated with your system, is absolutely on the roadmap and something we're focusing on very much.

Yes? I'm sorry?
Always Encrypted, that's a great question. So the question was: is Always Encrypted supported with this solution? With Azure Data Lake Storage Gen2, you're able to configure your storage account to be always encrypted. With this model the data lives outside the workspace. The workspace itself is mainly for your solution development, while your data you can secure separately, and you get all the benefits of the enterprise-grade security that Data Lake Storage Gen2 offers.

Let me follow up with you offline about that; I don't know.

So it's not Power BI Embedded, the product that you're mentioning. If you have Power BI today and you have a Power BI workspace that exists today, then just as you're able to connect your Synapse workspace with data sources and sources of compute, you can connect it to your Power BI workspace. That allows you to edit and build the reports that you have in Power BI online today.

Yes? Sure. So the question was about data governance and a catalog kind of concept. That's something that we're very engaged in and looking into as we develop this further. We don't have any specific information to share, other than that data governance, data lineage, and catalog information are very important to us, and as this product grows and evolves we expect the experience to really grow in that area.

So, other questions? Yes. Yes, so that's absolutely... I'll give a two-part answer. The question was: can the monitoring information that's generated as part of my solution be ingested and processed through other parts of my solution as well? Across Azure, the Azure Monitor story incorporates Log Analytics: landing log information, event data, and alert-triggering data in a central place where you can then process and use it. So the first part of the answer is that, out of the gate, you'll get the same kinds of places to land your monitoring information that you get across Azure today; we'll be fully compatible with Log Analytics and the Azure Monitor story. But we're going even further. One thing you'll notice is that when you use T-SQL to process data, you can use your DMVs and other ways of analyzing what's happening in the system right away, and a lot of people love using that to really understand what's happening in the system and build reports. It's really using the system to analyze itself, and that's something we're really embracing as we explore the monitoring area.

Yes? So, with Databricks and Azure Synapse Analytics, it's not really an either/or. If you're using Databricks today and you love it, and there are some features of it that you absolutely want to stick with, by all means do so. What we're presenting with Azure Synapse Analytics is one experience that brings relational and non-relational data together, but that doesn't mean that if you want to use Spark you have to pick one service over the other. You can connect to 90-plus connectors for data sources and compute to bring each of these kinds of components into your overall solution. So nothing about this dictates which components you can and can't use. We have a tight, efficient integration with Databricks, and we'll continue to evolve that integration story.

Yes? Logic App integration? Let me follow up with you on that; I'll get more information if we chat right afterwards. Thanks.
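The monitoring answer describes using DMVs so the system can analyze itself. As a minimal sketch of that idea, the Python below summarizes request history by status, for example to see how much time cancelled queries consumed. The `RequestRecord` shape and the sample rows are hypothetical; a real request DMV (for dedicated SQL pools, something like `sys.dm_pdw_exec_requests`) would be queried with T-SQL and has its own column names.

```python
"""Sketch: using the system's own request history to analyze itself.

RequestRecord and the sample rows are hypothetical, loosely modeled on the
kind of per-request rows a request DMV returns (a status plus elapsed time).
This is not the real DMV schema; in practice you would query the DMV with
T-SQL and feed the resulting rows into a summary like this one.
"""
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RequestRecord:
    request_id: str
    status: str       # e.g. "Completed", "Failed", "Cancelled"
    elapsed_ms: int   # wall-clock time the request ran, in milliseconds


def summarize_by_status(records):
    """Return {status: (request_count, total_elapsed_ms)} for the records."""
    summary = defaultdict(lambda: [0, 0])
    for r in records:
        summary[r.status][0] += 1
        summary[r.status][1] += r.elapsed_ms
    return {status: (count, total) for status, (count, total) in summary.items()}


if __name__ == "__main__":
    sample = [
        RequestRecord("QID1", "Completed", 1200),
        RequestRecord("QID2", "Cancelled", 45000),
        RequestRecord("QID3", "Completed", 800),
        RequestRecord("QID4", "Failed", 300),
    ]
    for status, (count, total) in sorted(summarize_by_status(sample).items()):
        print(f"{status}: {count} request(s), {total} ms")
```

A large total under "Cancelled" is the kind of signal that would point to wasted processing time.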

Hi. Yes, sir, a question. So first, we officially cannot give a timeline, but you can guess: once we go to preview, the next stage is general availability.

I believe it's powered by Spark, yes. So the wrangling data flow, I believe, is powered by Spark.

Yes? Delta Lake. Delta Lake is supported, yes. Within the notebook authoring experience or the Spark job development experience, you can leverage Delta Lake and get its benefits.

I believe... we'll have to look more into that for you and talk a little bit more afterwards, if that's okay. I'm not too familiar with that area. Yes?

Data Factory, that's a great question. Data Factory is an existing GA service, and it will continue to evolve. If you're on Data Factory today, it will absolutely continue to be 100% supported and will keep evolving and improving; great things are happening with Data Factory now. The benefits that you get with regard to data transformation, data orchestration, and data integration are also present in Azure Synapse Analytics, along with the added benefits of using Spark and SQL and authoring your modern data warehouse pattern within one experience. So this is not replacing or competing with Data Factory. Yes?

That's a great question. We didn't focus much in this presentation on the security side of things, but the goal here is that when you have access to a workspace, there are a lot of things you can have in it, so it's very important to have fine-grained access control. It's not difficult to share, but not everyone has the same permissions on the entire workspace. Let's say everyone with access to the workspace should be able to build a Power BI report, or run a notebook that processes the data you've prepared, but not everyone should have access to mess with the raw data that's being used as part of a production pipeline, or with the actual construction of that production pipeline. So fine-grained access control for the components of your solution (your data, which you can secure using ADLS Gen2, as well as the visualizations and other aspects of your pipeline) is something that we care deeply about. Yeah. Sure, yes?

That's a great question. So, with regard to when you decide to process your data: if you have data coming in, you want to make sure that your pipeline only runs when there's new data to process. What we're talking about there is that you build a pipeline and set up a trigger that's based on certain conditions. What I showed in the demo was a very simple time-based trigger that runs every day at 00:00 UTC, but you can also set up a trigger that's based on an event, like a file being added to a certain folder in your Data Lake Storage Gen2 account. So if another solution that's connected to what you're building is landing data in a certain location, and you want your pipeline to kick off right when there's new data to process, that's absolutely something you can configure as part of the trigger setup.

That's a great question, yes. So, the integration with Azure Active Directory in Azure Synapse Analytics, yes. When I was using Azure Synapse Studio, I was logged in as myself, with my Azure AD identity. What that meant was that when I was opening that Parquet file and analyzing it using T-SQL with SQL on-demand, it was actually using my identity: if I didn't have access to that file, that script wouldn't have run. If I right-clicked, chose to process it in a Spark notebook, and ran that notebook, it would be my identity that's passed through, saying this is Matthew trying to access that file, or not. So it's very deep Azure AD integration throughout that stack, within that experience.
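The trigger answer contrasts a schedule trigger (every day at 00:00 UTC) with an event trigger (a file landing in a folder of a Data Lake Storage Gen2 account). The sketch below models both decisions in plain Python, assuming a run should start at the next midnight UTC or whenever a new file appears under a watched folder. `next_daily_run` and `should_fire_on_blob` are hypothetical helpers, not the Synapse or Data Factory trigger API, and the prefix/suffix filter is an assumption chosen for illustration.

```python
"""Sketch of the two trigger styles described in the Q&A.

Hypothetical helpers, not the Synapse or Data Factory trigger API:
- next_daily_run: when a schedule trigger set for 00:00 UTC would next fire.
- should_fire_on_blob: whether an event trigger watching a folder should fire
  for a newly created file path (prefix/suffix filtering is an assumption).
"""
from datetime import datetime, time, timedelta, timezone


def next_daily_run(now: datetime, at: time = time(0, 0)) -> datetime:
    """Next occurrence of `at` (UTC) strictly after `now`; `now` must be tz-aware."""
    now_utc = now.astimezone(timezone.utc)
    candidate = datetime.combine(now_utc.date(), at, tzinfo=timezone.utc)
    if candidate <= now_utc:
        # Today's slot has already passed (or is right now), so use tomorrow's.
        candidate += timedelta(days=1)
    return candidate


def should_fire_on_blob(blob_path: str, folder: str, suffix: str = "") -> bool:
    """Fire only for files created under `folder`, optionally filtered by suffix."""
    prefix = folder.rstrip("/") + "/"
    return blob_path.startswith(prefix) and blob_path.endswith(suffix)


if __name__ == "__main__":
    now = datetime(2019, 11, 5, 14, 30, tzinfo=timezone.utc)
    print(next_daily_run(now))  # 2019-11-06 00:00:00+00:00
    print(should_fire_on_blob("raw/sales/2019-11-05.parquet", "raw/sales", ".parquet"))  # True
```

Normalizing the folder to end with `/` keeps a sibling folder such as `raw/salesarchive/` from matching a watch on `raw/sales`.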

So, the question is: do I have to use what was shown in terms of visualizing the data, or land the data in a certain place, in order to get the benefits of all this analysis and of the modern data warehouse pattern? No, any of those components can be built independently. For example, you can have your notebook process data and land it wherever you'd like, and then use a copy activity right after that to take the data your notebook created and land it wherever you'd like for further processing. It doesn't always have to end in a Power BI visualization; that's what we chose to do in this demo, but you can also have it land wherever it's needed for the next phase of your solution. Yes?

CDM integration, Common Data Model integration. So, we have more information we can share with you afterwards. (Inaudible follow-up.) Let me follow up with you offline on that one, and we'll have more information when we chat. Thank you.

Yes? So the question was: once I create a Power BI dataset, can I use it in other Power BI workspaces as well? Yes, absolutely. We're not doing anything special with Power BI; once you output to a Power BI dataset, you're able to leverage it later on. Yes? Any other questions?

So, we know that the datasets you create require data from multiple different data sources, which could be in multiple different workspaces, and vice versa, so it will involve multiple workspaces. Where you saw the linked services, which Matthew showed, that is where I can go and keep adding...

Let me talk with you afterwards; this has to do with our catalog story and our plan for that area, so let's [inaudible]. Yes? Yes, so you can leverage Delta Lake capabilities; we can chat more afterwards if you'd like to learn a little more about that. Yes.

Yes, and actually that's what I used. When I was analyzing that Parquet file, I right-clicked on it and, as I said, analyzed it with SQL on-demand, and I was able to analyze that Parquet file right off the bat. Yeah.

Yes. So that's a great question, and it's reassuring, because that's absolutely something we're looking at right now as we build out our monitoring solution: being able to say, at a solution level, not only what's happening in the system (what failures are happening, or how I can improve things), but also what is consuming the most, from a usage point of view and from an efficiency point of view. Have I provisioned a lot of capacity that's not being used? Are folks submitting queries and then cancelling them, wasting processing time that was already used? That's absolutely something we're looking into, and we'll have more information to share on that later.

Yes. So, the cluster sizes: we're talking about the Spark pools that you're able to create to power your notebooks, your Spark jobs, or other Spark activities. You're able to say: here's my minimum size, here's my maximum size, and here's the node size that my particular workload needs, for example if it's memory intensive. It's really easy to configure using our user interface, but of course we also offer APIs and SDKs to make this programmatically accessible as well. So you have the flexibility to either use a nice interactive experience or, if you want more robust control over when your ranges change, leverage those controls programmatically. Yes. Yes, absolutely, yes.

Well, security: we'll get back to him on that; that was a good question. Really, these are all really good questions. Yeah.

So, are there any other questions? I'll go ahead and step down from the stage now and take more questions. Feel free to also stop by our booth; we have many more sessions throughout the week. Thank you all so much for coming, and let us know if you have any questions.
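The Spark pool answer describes configuring a minimum size, a maximum size, and a node size. As a rough sketch of what those bounds mean, assuming an autoscaler that sizes the pool to pending work and clamps the result to the configured range, the Python below validates the bounds and computes a target node count. `SparkPoolSpec`, `target_node_count`, and the tasks-per-node rule are hypothetical and do not reflect the actual Synapse API, SDK, or autoscale algorithm.

```python
"""Sketch: what a Spark pool's min/max node bounds constrain.

SparkPoolSpec and target_node_count are hypothetical illustrations, not the
Synapse API or SDK. The scaling rule (one node per `tasks_per_node` pending
tasks, clamped to [min_nodes, max_nodes]) is a simplifying assumption.
"""
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SparkPoolSpec:
    name: str
    node_size: str   # free-form label, e.g. a larger size for memory-intensive work
    min_nodes: int
    max_nodes: int

    def __post_init__(self):
        # Reject bounds that no autoscaler could satisfy.
        if not 1 <= self.min_nodes <= self.max_nodes:
            raise ValueError("require 1 <= min_nodes <= max_nodes")


def target_node_count(spec: SparkPoolSpec, pending_tasks: int, tasks_per_node: int) -> int:
    """Nodes needed for the pending work, clamped to the pool's configured range."""
    if tasks_per_node <= 0:
        raise ValueError("tasks_per_node must be positive")
    wanted = math.ceil(pending_tasks / tasks_per_node)
    return max(spec.min_nodes, min(spec.max_nodes, wanted))


if __name__ == "__main__":
    pool = SparkPoolSpec("etlpool", node_size="Medium", min_nodes=3, max_nodes=10)
    for pending in (0, 20, 500):
        print(pending, "->", target_node_count(pool, pending, tasks_per_node=8))
```

Running it prints `0 -> 3`, `20 -> 3`, and `500 -> 10`: the minimum keeps a warm floor of nodes, and the maximum caps cost no matter how much work is queued.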

2020-01-20 01:03