Microservice Architecture and Serverless Technologies Implementation in Health Tech

Hello everyone, I'm Milos Kowatschki. I'm an Engagement Manager at Vicert. I was previously a Software Engineer and now I'm managing customer relations and making sure that projects are delivered successfully. With me, I have Kresimir, who is our lead DevOps Engineer. Could you tell us something about yourself? Sure, my name is Kresimir Majic, I've been in IT for about 10 years. Started out as a Linux Engineer, then graduated to a SysAdmin, and I transitioned later on to a DevOps Engineer, which I instantly fell in love with because I like automating stuff and putting myself out of work. Great to hear that.

Speaking regarding serveless technologies we are living currently in an era where more than 83 of enterprise workloads currently work in the cloud environment and more than 30 percent of the budget for each IT company goes to the cloud environment. Now, in recent years there is actually a trend to refactor the infrastructure to work on microservices architecture in parallel to monolithic architecture that was more or less most common in recent years. So what are the challenges to actually do the refactoring from the monolithic architecture to the microservice architecture and what are the greatest challenges to tackle in that aspect? In my opinion, the biggest problem is trying to determine how complex you should go. If you look at for example DevOps job postings uh they're basically filled with Kubernetes requirements and i think Kubernetes is both overly complex, and secondary a relic from the past because in the past you had this need where you had to orchestrate containers but all you had was bare-bones servers so you made this cluster that was Kubernetes and it basically shifted the workload around those servers and then they somehow just pushed that up to the Cloud. So now you had cloud servers but you still had Kubernetes that split the workload over to them. Since we now have the option of creating serverless containers and simplifying that a lot I simply don't see the need for most of the current setups that employ Kubernetes.

I think it's overly complex, I think it should get abandoned and the problem is that people usually don't want to tackle Kubernetes I mean people outside of the DevOps world. It's difficult to get developers involved in how your pipeline works how infrastructure is tied together when they have to untangle a huge complex mess that Kubernetes can become very easily. So basically I avoid it whenever I can and how complex is actually tackling the network infrastructure in that aspect as well because we know that communication between internal services could get to really complex points. So how do you transfer all that to the Cloud environment

and how do you ensure that the networking is working properly and that you have the bandwidth and latency that software requires? Well the way AWS is structured - they have these huge, well they're not even really data centers, when talking about data centers you imagine rows of servers and the way current AWS data centers look like they're like a container. How do I put this - Have you ever seen those shipping containers? Yeah. That's basically it. They just have you know the power input and the container is filled with CPUs and memory and storage and whatnot, so you can't really walk among that you know, racks of servers, it's no longer like that. So since all of it is so packed tightly together, the bandwidth and everything, it's not as costly. The latency is not as big of an issue as it was before when

you actually had to go from one server to another. It's also tightly packed now that you really don't have the impression that you're going to a different server, that you're abandoning your own server and going to the other one that hosts another instance. AWS does charge bandwidth and in a different way, there are ways around it. But sorry what was the question again? How do you integrate networking with services? How complex is that? I'd say that you have to think about the infrastructure from the start and not try to cram whatever you came up with afterward. So this is basically

cloud-native thinking, a cloud-native approach, so if you know what the requirements are and how you're going to pull them off then it's not a problem. The problem arises when you don't know how to do something and then you try to hack it and in the end, it becomes messy. Yeah, that makes sense. Concerning the databases - what I read is that for example Aurora Serverless,

it's for variable workloads mostly, so if you have let's say a stable number of queries going to the database - is serverless something to consider or you would rather go with RDS? I would definitely try to go as serverless as possible that's basically my motto so whenever I can abandon an old way of thinking I try to do that. But that mostly depends on what our requirements are. If we have a service that needs access to the database throughout the day then we wouldn't really be able to use Aurora. However, if you have a service

that basically uh does a dump of data maybe at midnight or, I don't know, once per day basically, then it's no problem integrating it and spinning up the Aurora DB to serve that need and then maybe you can spin it up for the several hours, for example when the doctors are checking exams or something like that you would spin it up daily when the data is being dumped, and then maybe for two or three or six hours when some work is actually being done, but that means that you can keep it down for, I don't know, 18 hours a day and all of the weekends and everything, besides you can even build in a switch that like we're using with containers now if somebody does a commit it spins up the instance and keeps it up for maybe two hours or something. I presume that in the case of patient portals for example that is quite common in healthcare something like Aurora Serverless would be compatible because you don't have a stable number of requests from patients, it's a very variable kind of workload, so that should be complementary to that aspect right? Yeah I guess it's difficult to estimate how the workload is going to look like ahead of time. Now if you can do that's great but in our situation some of our developers are in a different time zone so we have both U.S and Europe-based developers. So that fact alone contributes to the amount of uptime that's required for the project to work properly and it kind of goes against the logic of being fully cloud-native and using serverless as much as possible because if we need the database up so that our developers can work on it and then the US-based developers can work on it as well that's basically two shifts out of 24 hours per day. However, that requirement is only there for the dev environment so we can turn off the database in staging, in production, in testing or whatnot in order to set well. Obviously not production, but you get what I'm saying. Yeah

now many of our clients are concerned whether they could achieve the compliance in aspect to HIPAA compliance as well as part 11 CFR concerning the data at rest and the data in transit as well duties serverless technologies. Are they capable of achieving compliance up to the standards? Basically, the legal system requires absolutely I believe there's also a step above healthcare requirements which is the government's secret requirements, that's not a technical term, I mean just that AWS when they were developing their services they were not thinking that they need to be capable of providing compliance for just the health care industry but one step above that, which is the military and the government, of course they do have separate data centers for that type of requests to serve those customers, but the point is that they absolutely can do that and they need to be able to do that in order to provide service properly. So it's just a matter of are you going to implement it. In a proper way can you actually deliver because they are certainly able to provide everything you need to to make it happen to be compliant so what you're saying practically is that instead of building that security on your own you can just use the security that was built up in the AWS from the ground up and you will not have to have that kind of concern at all and the more you leverage your workloads on AWS the more security concerns you are basically passing to the cloud environment because I know for example when you run an EC2 instance um you have to take care of the security and instance-level. Yeah so the security goes, the responsibility for security goes two ways. AWS is covering their part making sure that nobody would access the physical premises where their hardware is located but there's also your responsibility not meaning you exactly, but any DevOps guy or even a developer who develops software that's going to be stored on that hardware. So they secure their part but you also need to secure your part um imagine if you had a car and you park it in a garage and the owner of the garage guarantees that nothing is going to happen to your car while it's in that garage. However, when you take it out you're on

your own basically there are no guarantees at that point. So even though they allow you to let's say create the most secure thing possible, you can still make it publicly accessible without a password and there's no real way of stopping that, that's up to you completely. So you need to know what you're doing but the point is AWS will support you pretty much in every way possible. I mean they will allow you to be as secure as needed so there will be no constraints from their end.

Okay that's that sounds reasonable and concerning the costs because most of our clients are speaking about it and they would like to know how cost effective going serverless is and whether that will significantly reduce their costs that are currently being spent on the infrastructure and also on maintenance um there are significant uh significant how do i put this you can definitely save a lot of money by implementing serverless properly and the key word here is properly so if you keep serverless infrastructure up 24 7 then you're going to be losing money even that is not a deterrent in some cases because the amount of simply simplification that it allows is worth it because you no longer need to spin up entire servers you no longer need to maintain them you can just keep one container up uh throw out anything you don't need and it's much easier to deploy. However in order to make effective uh price reductions you would need to think about how to turn stuff off. Basically that's the biggest advantage of serverless because it can spin up pro spin up quickly and take itself down when needed so you can basically schedule your infrastructure I don't know if you have a consistent office hours you can schedule it to start five minutes before you go into the office if you really want to be showing off you can maybe time it so that when you pull your key card through the entry in the building. You can make that a trigger to start up your infrastructure, even to make you coffee or whatever, if your coffee maker is plugged into the network, I mean the possibilities are endless but you get what i'm saying. Yeah the key point of serverless is to shut it down. That's how you make savings. I know the developers are sometimes concerned regarding the cold start, could you elaborate on that aspect definitely um currently our pipeline is pretty much being told to retry every 30 seconds just to make sure if the deployment if the new deployment is ready. So for example um let's say we deployed a new container then hold the API the back end and we need to migrate the DB. What we do is we place a timestamp as a response in that container

and then the pipeline knows which timestamp it gave to the container and when it gets it back as a response that means that the container is up and it can now do the work that it's supposed to um I believe it takes like two on average about two minutes for it to get deployed and for the networking to propagate to set everything up and that's basically how long we need to wait um I am not sure about Aurora, but I think it's somewhere along those lines it's all under five minutes, the point is when it's automated you don't really care how long it takes you just need to time it properly now that's all assuming that the workloads are evenly split or predictable okay, and when you're building this how would you, I would say prioritize things because I know that many companies are currently transforming their workloads to be cloud compatible and they often say where to start and from where to start exactly in the infrastructure aspect? What is the easiest way to start that and how would you prioritize what goes first and what goes next? Whenever I'm building something I try to make it as simple as possible with the least possible amount of moving parts so that it doesn't scare away people who try to get involved. People always say that DevOps is not a position it's a mentality. I don't really agree with that, but I get what they're saying. It's not easy if you let's say have a full stack developer or at least somebody who knows both front end and the backend, it's very difficult for them to grasp all the concepts of building infrastructure networking and everything security as well, and then to build infrastructure and pipelines and automate all that. It's just too much work for one person. So somebody can maybe scratch the surface on all of those areas, in all of those areas but not really be excelling at all of them.

When I build stuff I try to make it with the least possible amount of outside dependencies basically anything that would scare people away, and I try to, I don't know, for example, hold all the variables in one place and not making people go through five or six different UIs, where one would show you, I don't know, monitoring, the other will show you logs, the third one would be the API interface that you know swagger or whatnot. I try to simplify it as much as possible uh i prefer mono repos where everything is in just one repository and there are no outside dependencies so whenever I can, I offer that, even when the project is done i see i try to review it and see if there's anything we can throw out um does this variable consist of some other variables so that maybe we can compile it on our own if it for example has the AWS account ID and the region well I already have both of those variables I don't need this one as well so I can throw it out and just use the two previous variables to compile the third one basically stuff like that so just minimize everything and as Elon Musk would say - "The project is not done when there's nothing to add but when there's nothing to throw out", and concerning development many developers say that it's hard to debug the code in work. When you're working with a serverless environment, how difficult is that really and are there any best practices to have easier debugging and easier development time? That's a great question. What we've settled on is basically abandoning local development stages so we do not rely on our developers' computers to run anything from the start we opt for a development stage that's entirely in the cloud and we even try to mimic production as much as possible so that there's no split. Let's say when

okay we're done with the development phase we're going to push it to testing or QA and then when we do that or when we go from testing to staging that there's a huge shift and now all of a sudden we put on some securities and restrictions and whatnot we try to avoid that we basically try to build it production-ready from the development stage so that everybody knows what to expect later on so we encrypt the buckets the databases everything we try to hold the logs for a bit longer, I don't know, making backups as rapid as the production would have them, and I don't know, basically mimic production in every way while still trying to keep costs low. That way our developers are allowed to use whatever they're comfortable with uh they can be on Windows or Mac or any variant of Linux they prefer. They can use any tools they want they can basically do whatever they want because their development stage is in the Cloud and it also allows day one pushes to production because you just need to clone the repo push something there and we usually do the pull requests between branches to promote stuff from one stage to another so in theory if you hire a guy day one they could maybe add a comment in code and commit that and then just do the pull request through to testing staging and production and their comment would be visible you know in production and when it comes to QA is it easier or uh it's more difficult to QA on the serverless environment that I guess, that depends. Working from home kinda introduced another barrier let's put it that way. I mean I definitely love working from home but usually, when you're in the office you have the single IP as the source and then you could restrict your infrastructure to just that ip, and then you're pretty safe because you know that only people from the office are allowed to access it. That's that. Now you either need VPNs or you need some other securities to limit who can access your stuff and how so you can use http headers you can use VPNs but it's not as simple as it was before. When you know what the office ip is static it's always the

same, you just limit that ip limit access to that IP, and you're good. So I guess in that regard it is a bit more difficult but it's definitely not an issue it's a minor thing really Okay. When it comes to optimizing the microservice architecture um what are the best practices that we have encountered so far in optimizing? Well usually in my personal experience it was related to how the processes work. So I try to log everything for example in a container startup usually i use Nginx as front, and everything goes through it, and if i use for example OpenSsh to allow the Ssh access to that container, we're talking Fargate here, um will be the only other process that handles a port on that container. However everything else

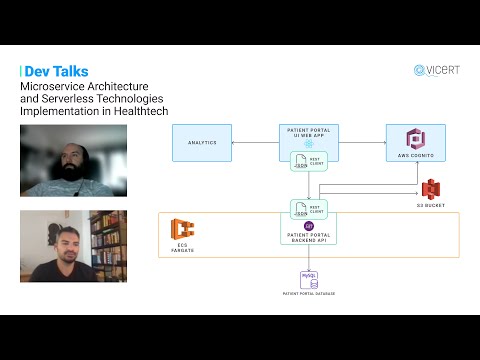

is sorted uh through Nginx ,and I remember this example, it took like four minutes for my container to spin up and I couldn't figure out why. So i try to log everything that happens in it and the docker file will basically um spin up my OpenSsh it would spin up the back end service it would, I don't know set up the OpenSsh service create the configuration everything and in the end it would start Nginx and then Nginx would be the initial process and all of that happened in two seconds maybe. However it still took like four minutes for my container to be visible and I just couldn't understand it, so i started logging the AWS Health checks that arrive from the load balancer to see what went on why is it taking so long and it turns out that obviously there's amount of health checks that need to pass that go from AWS to our container just to make sure that it's responsive and it's ready to receive traffic so first I minimized all that but even with having just two positive consecutive health check passes that are I believe five or ten seconds apart it still took like three minutes and when i realized that this has nothing to do with me I finally contacted AWS to ask them what's the deal with the container uptime, why does it take so long to spin it up, and they basically confirmed that it takes their infrastructure about two minutes, two to three minutes to tie everything together and allow access to the container. Basically, those health checks would only start after about two minutes or so and that's why it took that amount of time to spin it up so there was nothing I could do anymore to to to enhance that that was as fast as it could be and, yeah, so I guess you would need to know a lot about how stuff works in order to eliminate everything you can so I wouldn't really say that I have a process it's just observing how stuff works and seeing if there's anything that could be eliminated okay thanks we are witnesses in healthcare that many applications are actually used to process images and I would like to see how we utilize serverless architecture to complete that goal? Okay so what we have here is a diagram of how our infrastructure looks for one of the projects that we worked on um what this is about is having images that need to be reviewed so our AI is going over images it tries to diagnose them and whatever the outcome is we also want to review that later on so we need to have doctors going over it to see if they agree with the assessment. We need to have technicians go over them to see maybe it was blurry, maybe the camera didn't work right, whatever the issue may be. All of it needs to be reviewed. So the works the workflow itself is file-based so this allows us to

have modular projects that go across teams so the endpoints are basically just S3 buckets, they fill up a certain bucket with their images they just dump them or the camera dumps them, it doesn't really matter, the point is the event of a file being created in a bucket is a trigger. So once a file arrives let's say we have a prefix and a suffix, that we will filter it from, so let's say a jpeg image comes in and it goes to a folder that belongs to one client one hospital or a clinic or what not, that would trigger a certain event that would go either to SNS or SQS directly, basically it would create a message that would trigger a lambda and that lambda would pick up those images or a single image, store them in a bucket for safekeeping, and it would also call the API to put that in the database to make the database aware of the images that are now in place. Also, the API would then allow the front-end application that's stored in CloudFront to have access to that, to that image set, and then we would also generate an email that will contain the link to that exact location where those images can be seen so once everything is tied together an email would go out and notify whoever needs to be notified with the exact deep link so that you can just click it and visit the site that has the images ready. We also tie the Cognito into the whole thing as well so that you need to be logged into our Azure DevOps project or basically just Azure um and if you have sufficient permissions to view this it would allow you through so you have a lambda function that is being triggered once the file is added to the S3 bucket um and then that file is processed uh right um yeah and and and then we trigger another lambda function that again does some um processing from the SQS. The main logic is that when images arrive a lambda is trigger that does its magic pulls the images makes a copy stores them in DB and whatnot and there's another lambda that is pretty much just generating the email links you don't want to cram too much stuff in your lambda you certainly can do that by having multiple different triggers for the same lambda but you don't really want to complicate lamps too much and and have them determine whether I'm called from this path or that path and then i differentiate how I'm going to behave it's certainly possible but I like to keep things simple, so in my view it's better to have separate lambdas where one lambda does one thing, and you know, depending on how many things you need done you have that many lambdas, but the point is not try to over complicate assemble a single lambda. The idea is to

keep minimal functionality along with the function in order to easily maintain that and also have separation concerns in global exactly. Okay, thanks.

2022-06-06 05:23