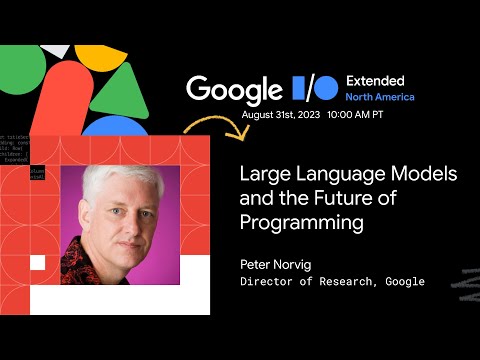

Large Language Models and the Future of Programming by Peter Norvig

Thank you, great to be here. I'm live from Mountain View, Google headquarters. You heard a little bit from Paige about where we are today with code in the PaLM 2 model, and I want to step away from that a little bit and imagine where we might be in the future.

To do that, let's start with where we are today. With traditional programming, you instruct a computer through a programming language. Programming has evolved from where it was a few decades ago. It used to be primarily a mathematical science, and now so much of programming is more of an empirical, almost a natural science. It's probabilistic: it deals with uncertainties in the world. It's observational: we train the program by showing it examples of the kinds of things it should do, rather than saying step by step how it should do them. That's a change in how we do things, and we need tools to keep up with it.

I want to show you the type of progress we've made. You probably recognize this xkcd cartoon. It's from 2014, and it emphasizes the lack of communication that sometimes happens between a programmer and a manager. Here the manager says, "We want an app where you take a photo and check whether you're in a national park," and the programmer says, "Sure, that's simple, I can do that in a few hours." Then the manager says, "And check whether the photo is of a bird," and in 2014 the programmer says, "Oh, well, that's a research team; it'll take me five years." But in 2021 this guy says, "I can do it in 60 seconds by calling out to these generative AI models." He may have exaggerated a little bit: it looks like the video is actually a minute and eight seconds, so close to a minute but maybe a little bit more. So we've come a long way. There are so many things we can do now, but we need a way to call all these pieces and put them together.

So I think in the future we're going to think of programming more as a collaboration
rather than an instruction. It's not the programmer telling the computer what to do; it's the two of them working together to explore the space of the problem. We want the computer to automate more of the task, but we don't want to give up control. In fact, we want the human to have more control over the task, so the computer is doing more and the human is doing more. We want the communication to be more natural. Yes, some of it may be in a programming language or other formal language, but some of it may be in natural English, some of it in diagrams, and so on, and we want that to flow back and forth. We want there to be more feedback, both from the program on what's happening and from the users. We want to be able to explore choices faster and respond to change. And we want more evidence of correctness: right now we have test suites and so on, and we want to do a better job of that to eliminate bugs.

A lot of the emphasis so far has been on code: how do we write new code with these large language models? But as developers you know that's a small part of the task. In this breakdown, about a third was writing code, and we don't have as much support for the rest, so we need to concentrate on that as well. Just writing code is not enough.

The types of problems we're dealing with are different, too. It used to be that the typical problem was writing software for a bank. There are a thousand different regulations and you've got to get them all right, but in the end there is an exact answer, down to the penny: did I balance everything properly? Now we're often dealing with what are called wicked problems, where there is no clear problem definition and no clear solution, and there are many factors and multiple stakeholders that come into play, and you have to please all of them. So just defining the problem can be hard, let alone solving it, and that's the kind of thing we want to provide support for.

Now, over the
last year several people, Andrej Karpathy was one of them, have been saying that the hot new programming language is English. There's definitely something to that, but English by itself is not enough. Let me give you an example.

Several years ago I got an alert on my phone that said, "Depart now for: return rental car to Logan Airport." I thought, this is great, it's a very nice integration. This is not AI; this was before AI, so it was just traditional programming. But somebody had to parse my email from the rental car company and coordinate that with the calendar, the maps, and the alerts on my phone, and that was all done just right. But then they had to spoil it all by saying, "Time of travel: 23 minutes by bicycle." In some sense that was a good suggestion, because I commute to work by bicycle most days and it's about 23 minutes, so that makes sense. But the system didn't really understand what it means to return a rental car.

So whose fault is that? If I look at how the program was specified, nobody's job was to parse and understand what the event was. The job was just to put the right events and the right maps up at the right time. So everybody did their job right, but it still failed. That's because the world is open. We thought this was about calendars and maps and events, but it's really about anything that could happen anywhere in the world. No traditional programming approach could handle all those possibilities, but maybe an AI-based approach can, and that's how the world has changed.

I started asking these large language models whether they could do this. A couple of months ago all the models failed. I'd say, "I have to return a rental car to the airport. Should I go by bicycle?" and they all gave hedged answers: "Well, it depends on many factors, the distance, the weather," and so on, because they're trained not to give bad advice. But this last month I finally got Bard to say, "No, you should not go by bicycle." So these
models are continuously getting better. So how can we combine the best of these large language models, which can handle open-world wicked problems, with traditional programming languages, where we have some idea of correctness?

The eminent computer scientist Edsger Dijkstra said, "In the discrete world of computing, there is no meaningful metric in which small changes and small effects go hand in hand, and there never will be." If he's right, then gradient descent, which we use to train these models, is doomed. How do we make a large language model better? We notice an error, we make a small tweak to one of the parameters, and hopefully that decreases the total error, and we keep on making those small changes. But Dijkstra is saying that won't happen, and he's got a point: take a program, change one bit, and it could do something completely different. So maybe that means a neural net will never write code.

On the other hand, Arthur C. Clarke said that when a distinguished but elderly scientist states that something is possible, he is almost certainly right, but when he states that something is impossible, he is very probably wrong. So maybe Dijkstra wasn't quite right. And Ken Thompson, one of the developers of Unix, said, "When in doubt, use brute force," and our TPUs say, "Yeah, I got this." So even though it is really difficult to explore the space of programs, and one bit can make a big difference, it's still possible, and it still works most of the time.

Chris Piech teaches the introductory computer science class at Stanford, and he says large language models solve 100% of the exercises. We've seen multiple types of approaches to this. I want to look in depth at DeepMind's AlphaCode, and here's a sample problem that it solved. The problem is described just in informal English, with a couple of examples of input and output, and now you have to write a program to do that. I won't make you read all this, but I'll say very quickly how this
problem works. You're given two strings, a source and a target, and you're asked whether you can produce the target string by typing the source, except that for some of the characters you can press backspace instead. A backspace means that you don't see that character, and you don't see the previous character either, if there is one. So if you've got "ababa" as the input, you could type three backspaces followed by the "b" and the "a", and that would give you the target, so you should say yes. There are other possible places to put the backspaces that would give the same result, and the answer should be yes regardless.

Here's the program that AlphaCode comes up with, and it gets the right answer. That's pretty impressive, and it's not that long a program and not too hard to follow. But I decided to do a code review. If a novice programmer had written this, the first comment would be: you have to document this, so put in docstrings and comments for your functions. Maybe it's better to make this a function rather than straight-line code, because functions are easier to test. There are little things: the spacing goes against the PEP 8 Python standard, the variable t is used for two different things, which is not great, the one-letter variable names should maybe be more descriptive, and these 10 lines are a little verbose.

And here's a strange thing. The program sets up this variable c, which looks like it's going to be used as a stack: as you pop things off of b, you store them on stack c. But then stack c is never used anywhere else in the program. I think what's happening here is that the model was trained on past programs and identified that this problem has something to do with stacks, which was great. Then it says, "Often when I'm popping things off one stack I save them someplace else, so let's do that just in case," and it never went back and recognized that it didn't need
that stack c after all. There's also an inefficiency here in doing the popping, and a little bit of verbosity. When I look at this, I see a Lake Wobegon effect: half the programmers are below average, so half the output code is below average. There have been some attempts to train these models only on higher-quality code, but it looks like we're right in the middle, and we want to do better than that. The question is how we can get there.

So here's an imaginary collaboration with some future automated programming tool, some add-on to PaLM 2 or AlphaCode that makes it better. Say it produced that first result. I want to be able to say to it, "I know this problem came from a programming competition, and people in programming competitions often go as fast as they possibly can and don't bother encapsulating the code as a program, but I want you to do that, and I want you to put in the docstring." And it can do that for you.

Then I can say, "Your code is a little confusing, and it's not obvious that it's correct. Can you show me another version that is obviously correct, even if it's inefficient?" Here's one. It says, "I can generate all possible outputs that could result from either typing a backspace or not for every character, and then just see whether the target is in that set of possible outputs." That's more obviously correct, but inefficient in that it looks at all possibilities.

Then I can say, "Let's have some tests." These are the four, or rather three, tests that were given in the example from the problem. I can ask the system to generate some more tests, and maybe it can be clever in the way it comes up with good tests for this particular problem. Then I can say, "Now try these two approaches," and yes, the obviously correct one takes 10 times longer, but they both pass all the tests. That gives me some
confidence that the efficient one implements the same function as the more obviously correct one.

Then I can go through and ask, "Is it better to scan right to left or left to right?" Part of the key to understanding how this works is that if you scan left to right, you never know whether to output a character or backspace over it. But if you scan right to left, it's always the case that you can't output a character if it's not the one that's expected, because there's no further possibility to backspace over it. You want the system to recognize that and write a function based on it. We'll skip over the details. I wanted to show the system giving a very long and detailed explanation, a sort of proof of why it's correct, but the details aren't that important. What is important is that you can have a conversation with it.

Here it says you can have a recursive version that tries all possibilities, or you can show that a greedy approach will always work. Then you say, "If the greedy approach always works, then it doesn't have to be recursive, and I won't have to worry about overflowing the stack," so I can write this non-recursive version. Then I can go back and ask, "That first version used lists rather than strings. Was that a good idea?" The answer is yes, because Python strings are immutable and lists can be popped. And it can finally come up with this version, which is about a third the size of the original and is clearer and more precise. We hope we could arrive at something like that.

So that's the imagined conversation, and the question is: can we get there? Can we get it to be right by training it better, and what processes would lead it to be able to have that kind of conversation? I'm encouraged. Here's a collaboration that was posted by Daniel Tait. He said, "I asked this system to create a game for me, and it was able to do it." So he
said, "Can you invent a logic puzzle similar to Sudoku that doesn't currently exist?" It came up with an example. He didn't like that, so he said, "Invent another one," and it came up with this one. Then he said, "That seems like a fun game. Can you make a playable version using HTML and JavaScript?" and it could do that. Then he said, "Can you make it a little prettier with some CSS?" and it did that.

Here's the game. There's an array of numbers, and on the rows and columns there are totals that you're trying to hit. The idea is to delete some of the numbers so that the remaining numbers in each row and each column add up to its total. That was what the program produced. Then he went back and forth a little to make it prettier. He didn't think this was beautiful enough, and the idea of circling the numbers you're going to keep and X-ing out the ones you want to get rid of seemed like a nicer approach. In the end he got a program. He could have written it himself, and he could have come up with the idea himself, but the system did maybe half the work and he did half the work, and that was a great collaboration.

So how can we make this type of collaboration better? Remembering that writing code is not enough, we want to look at the whole software life cycle and ask whether we can speed up that whole cycle. You start with a strategy and a design. Mostly what we've been looking at so far has focused tightly on the development part, writing the code, but that's just one part. You have to go on to testing, deployment, and maintenance. All these parts are important, and development is maybe a third, maybe less.

Here's a picture of that life cycle. Notice that at every step you've got teams of people involved and documentation that's produced, and there's only one step where you're producing code, or maybe a neural net. The cool thing about the
neural net is that you can backpropagate: if there's an error, you can go back through the parameters and adjust them to eliminate it. But you can only do that through the neural net; you can't do it everywhere else. I'd like to see a system where you can backpropagate through everything. Say the designers came up with a strategy for the user interface, and then the world changes somehow. Suppose people are using devices with different screen sizes, so the assumptions in that original document are off. I want the system to be able to notice that error and backpropagate through everything. I don't want these design documents to be dead, stored on a shelf somewhere. I want them to be live, to be interrogated by the system, and to be updated when necessary. I think if we have that, everything will be a lot more flexible and more responsive to the user.

One great example of this approach is by Danny Tarlow and his team at Google, a system called DIDACT. The key here is that it was trained on the process of software development rather than just on the final code that solves the problem. If you look at the process, there's a developer who writes some code, runs some tests, and submits it for review; comments come back from the reviewer, and the developer makes changes. So the DIDACT system is trained, in a sort of reinforcement learning manner, on this whole workflow: not just the final code, but where the code started and what comments led to it being changed along the way. That allows it to fit better into this type of workflow and to avoid some of the mistakes that were made along the way.

Another approach I think is really important is probabilistic programming, although it has never quite taken off. The idea behind probabilistic programming is that you describe a model of what might happen. It's probabilistic because there is uncertainty in the world, and it's relational: rather than just saying here's how to translate
inputs to outputs, it says here are the measurements I have and here's what I know about the relationships between things. Maybe I only know the output and I don't know the input, but I should still be able to describe that, and then have the system use Monte Carlo approaches to write code that can optimize based on this probabilistic model. I think that will be important for a lot of these wicked problems that don't have a deterministic specification.

The idea of hierarchical decomposition is also very important. We've seen that as a problem gets larger, as there are more tokens, large language models tend to break down. The traditional approach to that is hierarchical decomposition: decompose the problem into subpieces, solve those pieces individually, and then solve the problem of combining them. This work, Parsel, done largely at Stanford, takes that approach, and I think that's going to be crucial to success and to scaling up where we can go. Here are some examples of how that system works. It can take multiple different languages: a robotic planning language, a theorem-proving language, and it's fluent in Python, and so on. It's able to take each one of those, translate between English and the formal language, go back and forth, and then combine them together.

I think code is a very powerful language. English is an amazing language, and all the other natural languages are amazing, and large language models learn so much just from our history of what we've written down. But code also has a special role, and we've seen models like PaLM 2 take advantage of that. There's been a consistent problem that large language models just aren't very good at arithmetic. They can do one- and two-digit numbers, but when you get into three, four, or five digits, they
tend to make mistakes. Recognizing that, Bard, when given a problem, will write a piece of code to solve it. Here, in one sense, it's done that exactly right. It took this problem: "If I sell 378 apples for 39 cents each and 224 oranges for 43 cents each, how much do I make?" It wrote a program, "calculate earnings," that does the computation exactly right. Unfortunately it didn't quite get the connection between the code it wrote and the way it describes the result, and it ends up saying you'll make 243.74 cents when it should have said 243.74 dollars. It messed up that conversion between cents and dollars in the process of going into and out of the code. So we need to do a little better on using the code as a check, while making sure we understand how that transition goes from the general model to the specific code model. I think we're almost there.

As a last point, I want to say that compression encourages generalization. What do I mean by that? There's a lot of stuff happening out in the world, and most of what gets into a large language model comes from what is written down in books, on the web, and so on. Somebody decided this was important enough to write about, and that's what gets put into the model. That's one stage of compression: whether it's written language or photos or video, somebody had to decide this is worth putting into the model.

Then we have a further point of compression, where we say our models are going to have a certain number of parameters, whether that's millions or billions. If there were too many parameters, the model would just memorize the input exactly and would not be able to generalize. If there are too few parameters, it can't hold all the information, and useful information starts to drop out. So you've got to get that just right to get the right level of generalization. And then
there's this further step of going from a question to an answer through an intermediate representation that is code or some other formal language. I think that's a really useful step, and we've seen some examples of it. It's useful because, yes, the intermediate language could just be English or some other natural language, but then we have no way of verifying that it's right. You take a paragraph of English and you can kind of ask, "Does that make sense?" but you can't run it and get an output, and you can't check it for syntax errors or runtime errors. With a piece of code you can. So forcing your answers to go through code gives you this extra level of testability and reliability, and I think we'll see more and more of that. Here I show Python and NumPy as the intermediate language, but it doesn't have to be that. We can develop other formal languages that have the property that they're checkable and executable, and have our large language models move through them. I think figuring out what those languages are is a great area of research.

So why don't I stop there and open it up for discussion.

"Is there a good way to use prompts for LLMs to better deal with different program library and API versions?" That's a great question, and I think that's an issue right now. Training these models is so complex that it isn't always done on a regular basis, so often you end up with a model that has been trained on older versions of libraries and is not up to date. I guess the best thing you can do is to specify version numbers in your description, say "I want such-and-such an API, version 3.4," and pay attention to the version number. Bringing that to the model's attention is a good way to do it. But the models aren't built to consistently worry about that in the way that, say, a Kubernetes container is, so I don't have a great
answer right now.

"Got an answer from Bard for Hugging Face, but Bard just gave me some code for an old Hugging Face version." Right, so that's going to be a problem until the models are updated to the latest versions. We've seen that some of the systems are now trying to do that, putting in fine-tuning to keep things more up to date, so hopefully in the near future that will work out better.

"I want an LLM that specifically knows about recent Flutter, not old Flutter. How hard is it to get it to prioritize the modern state of the world?" I think that's the same question.

"Can the relative age of training data inform the LLM for bias?" That's really important, and it has always been true. I've been involved with Google Search for two decades now, and we've always had this trade-off of how much to trust old versus new. You have more data on the old, more signals on whether people trust it or not. And then there's the new: maybe the new is better and up to date, but maybe it just comes from spammers, and we should stick with the old and reliable. That's been a problem for search optimization, and it continues to be a problem for these large language models. So it wouldn't be enough just to attach a date to everything and trust the more recent stuff more. You want to look at the more recent material, but you also want to worry about whether it's spam, and you don't want to lose the high-quality stuff just because it's old.

"Will you do a new course like your Udacity Design of Computer Programs, CS212, but showing how you would use LLMs or Copilot?" That's really interesting. I had a lot of fun doing CS212, the Udacity class, and I'd like to do something like that again. I think you're exactly right. I used bare-bones Python in that class
because I didn't want it to focus on specific technologies; I wanted it to get at the core of what programming is. But as I'm saying now, maybe what programming is has really changed, and maybe there should be a class like that. I've got a few notebooks up on my pytudes site on GitHub, and a couple of them start getting into large language models.

I was playing with one over the weekend, a little game that Serge Belongie, a professor, came up with. The game is: think of two words where you get one word by dropping a single letter from the other, then write a crossword puzzle clue for the pair and see if people can guess the two words. You can easily find which pairs of words in the dictionary differ by one dropped letter, but then you get things like "cats" and "cat," where "cat" drops the "s" from "cats," and that's not interesting because the words are too closely related. So I said I want interesting pairs that are not closely related. What's the easiest way to do that? I'll just download some word embeddings, create all the pairs, and sort by the largest embedding distance. Then you get two words that are one letter apart but very different from each other, and that seemed to work. That was just a super simple example.

So you make a great suggestion. I should take some of the examples from CS212, or take some new examples, and ask how you would address them today, which is quite different from how they were addressed a decade ago. Thanks for that suggestion.

"Please expand on the Monte Carlo methods for programming." OK, so here's the idea. You observe something in the world. When we write a regular program, we say we're going to give you the input and the
program's going to compute the output. But sometimes the input is hidden and we don't know what it is. Say the input is the state of a patient's health, and the output is their blood pressure and their results on medical tests, and you want to ask, "What's the input, given the output?" That's not like regular programming, where you go in the forward direction; here you're making inferences in the backward direction.

One way to do that is the Monte Carlo approach. It's named after the casino in Monte Carlo, where you have gambling games in which you roll the dice or spin the roulette wheel. You take a random possible start state, run it according to the rules of the game, and ask, "Does that produce the output that I observed?" If so, that's a possible start state. You do this thousands or millions of times, and you get a probability distribution over the likely starting states that could end up in the observed output state. So it's rolling the dice because you don't know where you are, and then computing where you likely arrived based on that.

"Is reinforcement learning training taking over from supervised learning approaches for training LLMs?" That's really interesting. I think the big breakthrough for LLMs is that we were able to train them mostly with self-supervised learning. What does that mean? It means we gave them a bunch of text. Normally in supervised learning you have a person come through and say, "Here's a problem and here's the answer." It's too hard and expensive to hire people to do all of that, so instead we said we're going to self-supervise. We make up problems by taking a sentence, blanking out one word, and asking the system to guess what that word is. It's supervised in the sense that there's an input, a sentence with a blank in it, and an
output, the correct word to fill in the blank, and the system has to guess that correct output. But we didn't have to do any work, because you can automatically blank out any word you want, so it's really easy to come up with lots of these supervised training examples. That works pretty well. By just filling in the blanks, the system learns what the words mean, more or less; it learns the syntax of English; and it learns some degree of argumentation, as Paige was describing in the video earlier.

But sometimes that's not quite enough, because sometimes there are lots of words that could fill in the blank, or you want to evaluate not just one word but a whole paragraph, and filling in the blanks doesn't tell you the right answer. So we do reinforcement learning with human feedback. After we've trained the system on filling in the blanks one word at a time, we ask it a question and have it come up with two answers. Then we show those to a human judge and ask which of the two answers is better, and we adjust the system so that it produces more of the better one. Having that human in the loop is important because we don't have a supervised way of saying which of the two is better, so the human has to be there.

Supervised learning looks at only one step at a time; reinforcement learning looks at multiple steps. You generated a whole sentence or a whole paragraph, and all we're saying is that this one is better than that one. Reinforcement learning has to work out, given that feedback, which steps along the way were the crucial ones that led there. That's how we improve these answers on paragraph-level
questions, and that's how programs that play Go or chess learn to make better moves: they are only told whether they won or lost the game in the end, and they can figure out which step along the way was the most important one.

Hey Peter, it is great to have you here. Thank you so much. I've got a question for you.

Great.

As good engineers, we know that our job isn't just to look at the rosy side of everything, but also to look at the risks, to look at the pitfalls, and to figure out how we mitigate them. I guess my question is: what are those risks when we're talking about using machine learning systems or LLMs or whatever as we bring them into this coding process, and what do we do about it?

Right, so I think that's right. I think there are some risks specifically from the technology, but I think more of the risks really come from the problems rather than from the technologies or the solutions, and I think there's a lot of confusion about that. People are saying, oh, I'm worried about these AI programs because they can make mistakes. But it wasn't the AI program's fault; it was that you're asking it to solve problems that are much harder, where it's easier to make mistakes. And so any result could have a problem. There was a lot of discussion over these AI systems that help judges make parole decisions. They predict whether this defendant is going to recommit a crime; if the prediction is that they are, then the judge will be less likely to let them have parole. A couple of things happen. One is you ask how well these systems perform, and the answer is, well, pretty well, and in fact these kind of fancy AI systems do better than judges. And even a simple system, one that could fit in a 280-character tweet, also does better than a human judge. The simple system could be: if the defendant has
two prior convictions, or their age is such and such and they have something else, then yes, else no. That performs better than a human judge. So you blame the AI system when it's not perfect, but it is doing better than the alternatives. Now, the other thing you want to worry about is not just the overall performance of the system, which gets to a pretty good level, but also the fairness of the system, and there it starts to get controversial, because you start asking, well, what do I mean by fair? One thing you could mean by fair is calibration: if I say you're 60% likely to recommit a crime, then that should be equally true whether you're black or white, or male or female. Northpointe, the company that made this system, says, well look, we did this test, we're well calibrated, it's almost the same regardless of what group you're in, therefore we're fair, stop bothering us. ProPublica, the journalists who criticized them, said, yeah, you're right, you're well calibrated, but I don't think that's the most important point for fairness. I think the most important point for fairness is equal harm: when you do make a mistake, either a false negative or a false positive, who does it hurt? And then they showed that it hurt black defendants twice as much as white defendants, and so they said, that's why we think you're not fair. Now, as an engineer you might say, okay, there are two metrics, let's just optimize both of them. Unfortunately, the way the math works out, that's just not possible: the two metrics are always traded off one against the other. And so now you're left at this point where the answer for what's right is not really a programming question or a math question, it's a societal question of what we as a society think is fair. If you could tell me that, then I could go implement it, but that's what we're arguing about: we don't know what's fair. So I think in cases like this what's really
important is bringing all the stakeholders into play very early on. Don't just say, oh well, I've got an algorithm that's really fast, it's order n, so I'm going to implement that; say, what is it that we really want, and who's being hurt, and who are the stakeholders? Typically you look at this at three levels. First, I want to build a system for the user. In a case like this, who's the user? Well, it's a judge. So you say, I want the judge to have a nice display with pretty charts and graphs and so on, the font should be really pretty, and the output should be clear. Yeah, great, but don't end there. Then say, who else is involved? The other stakeholders are the defendants and their families, and, if someone recommits a crime, the victims and their families. So take their viewpoints into account. And then look at society as a whole: what is the effect of mass incarceration or discrimination at a societal level? So as an engineer, you thought my job was just to make a pretty screen for the judge, but really you want to say my job is much larger than that; I have to take all these things into account. Similarly, if I'm building a self-driving car, yeah, I want the person in the car to have a great experience, but I'm also responsible for the other drivers and the pedestrians. And at a societal level: am I causing more traffic? Am I causing people to live farther out in the suburbs and disrupting urbanization? Are people losing their jobs? Those are all parts of the problem too. So expanding your viewpoint on what's important is, I think, one of the key lessons.

Great, thank you very much. Will integrating LLM outputs into a knowledge graph improve the performance of LLMs for coding?

Yeah, so that's a great question. I've been doing AI since before it was cool, and in the 80s we were essentially building knowledge
graphs, and back then there was no Wikipedia to download and so on, so we had to build everything by hand, and we were limited in how far we could go. We felt we hit this asymptote: you get to the point where, if you add one more fact, you're as likely to break things as you are to fix them. So there are huge advantages to just using text, which is freely available in mass quantities, but there are also advantages in having something that's better curated and which you have more trust in, and a knowledge graph is one way of doing that. I think it's not the only way. I think another approach is to integrate third-party data sources or APIs. It makes sense that you should be able to ask a large language model, what's the capital of France, and it should know Paris. But I don't think it makes sense to expect a large language model to know the capital of every county in every country in the world. I think it makes more sense for the large language model to answer that by saying, well, I don't know, but I know a reliable atlas where I can look that up. I would like to see more of that type of integration. Some of it might be a knowledge graph that we've built and put together, but more of it, I think, might be just these third-party APIs, to say, for this question, here's where I want to go to try to get the answer. Let's see, do we have more questions?

Hi, I've been a huge fan of your work. I studied a lot of the courses that you taught on Udacity and I gained a lot of knowledge. I'm really an advocate of democratizing AI, and I would like to know your advice on how to make it more accessible for other people. Thank you.

Yeah, that's great. I think we've definitely gone a long way towards doing that, in that it used to be you had to be an expert programmer and you had to go through grad school to be able to even understand how
any of the tools work. Now we've got these interfaces where you can ask these large language models anything and you're interacting with them, and we also have some tools that make it easier for people to understand what's going on. To me, education is really the key to democratization: to show people this is how the technology works, this is how it affects your life, and you can be a part of it. It's not something that these experts way out there do; this is something that you can do. So there are things like the PAIR group's Explorables, where you can play around and build a little system to do classification. You build a system that says, well, this is one signal and this is another signal, and I'm going to build a visual system that recognizes that. In five minutes you can do that, and you get the idea that, one, it is possible for me to do that, and two, how do the systems break? Maybe I train it and it starts picking up the background rather than the foreground, so now you know that that's a possible failure mode for these types of systems. So I think it's about making systems that are easy to get started with, that are accessible and interesting to people, and just pushing that and showing that someone like you can do this. That's something I've tried to do throughout my career, to welcome more people in, and I'm glad that you were able to connect with that, and other students have too. I think we need more of these types of introductory materials to get people started, because once they get started and they get excited, then they're on the right path and they can get there. But it's this introductory part, to tell people this is something that could fit for you, it's not just for these other people. I think that first step is the most important one.

What are the near-future trends regarding LLMs, in your opinion?

One of the things I'm really excited about
is this multimodality, and Paige mentioned that. I said that we have this kind of compression, that we write down what we think is important about the world and then we train on that. So far most of our systems are built either on text or on images, but I think when we get another order of magnitude increase in processing power, then we'll be able to do videos as well. I think that's interesting because video is a less biased view of the world. When I write something down, it's what I think is important that I wrote down. When I take a photo, I point the camera in the direction that I think is important and click the shutter. But with video, sometimes it's just a stream that's coming in, and so it's more "here's what happened" rather than "here's one moment that someone wanted to capture." So I think we'll learn a lot more about the world when we have access to video.

Hello, this is Noble. Thanks so much for such an insightful talk. This is a general machine learning question, but going off of that theme of fairness and bias that was asked about earlier: in enterprise contexts, or real-world contexts, there's a tension between accuracy and fairness or bias when practitioners try to apply these concepts. For example, when you constrain optimization for fairness or bias, you sacrifice performance or accuracy. What's your thinking behind these trade-offs, and what's your customer guidance or counsel there?

Yeah, I don't think that's necessarily a trade-off. I mentioned there are cases where, yes, there are trade-offs: if you increase one thing you decrease another. For a lot of these fairness issues, I think of the trade-off more in development time rather than in accuracy. There's no reason you can't improve everywhere, but you've got to make these choices of who
counts: who am I going to spend the engineering effort on to try to improve the experience for? It's definitely true in machine learning, but I think it's always been true. Anytime we make product decisions, before there was machine learning, before there were computers, we're always including somebody and excluding somebody. Say I sell a product, a toaster, and it comes with an instruction manual. If the instruction manual is only in English, then I've left some people out. Now I get feedback from my customers and I say, okay, I'm also going to make the instructions in Spanish. Now I've included one more group, but there are still other groups that are left out every time I make that decision. So it's not really a trade-off of accuracy for fairness; it's more, is it worth the time and effort to go and get that Spanish translation, and is it worth it to then do the third and fourth and fifth language? I think that's what enterprises have to focus on, and I think they'll see a reward from treating all their customers fairly: they'll gain new customers, and they'll gain respect from the existing customers, who say, yeah, I like that you're sticking up for fairness and that you're supporting everybody.

Thank you, Peter, for that fascinating talk, a great opening session for us, and I love that Lake Wobegon slide there. And thank you for all the great questions coming in from the audience and from the other speakers.
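
A minimal sketch of the calibration versus equal-harm disagreement from the parole example above, worked as plain arithmetic in Python. Everything here is an assumption made for illustration: the counts are invented for two hypothetical groups, A and B, and are not the Northpointe or ProPublica figures, and the names `Cell`, `calibration`, `false_positive_rate`, and `false_negative_rate` are made up for this sketch. It shows one way a "high risk" label can mean the same thing in both groups while its mistakes land unevenly, which can happen when the groups have different underlying reoffense rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """One cell of a per-group confusion table (hypothetical data)."""
    group: str
    flagged_high_risk: bool
    reoffended: bool
    count: int


# Invented counts, 100 people per group. NOT real parole data.
CELLS = [
    Cell("A", True, True, 30), Cell("A", True, False, 20),
    Cell("A", False, True, 10), Cell("A", False, False, 40),
    Cell("B", True, True, 12), Cell("B", True, False, 8),
    Cell("B", False, True, 16), Cell("B", False, False, 64),
]


def total(group, flagged=None, reoffended=None):
    """Sum counts for a group, optionally filtered by label and outcome."""
    return sum(
        c.count
        for c in CELLS
        if c.group == group
        and (flagged is None or c.flagged_high_risk == flagged)
        and (reoffended is None or c.reoffended == reoffended)
    )


def calibration(group, flagged):
    """Of the people given this label, the fraction who reoffended."""
    return total(group, flagged, True) / total(group, flagged)


def false_positive_rate(group):
    """Of the people who did not reoffend, the fraction flagged high risk."""
    return total(group, True, False) / total(group, reoffended=False)


def false_negative_rate(group):
    """Of the people who did reoffend, the fraction flagged low risk."""
    return total(group, False, True) / total(group, reoffended=True)


if __name__ == "__main__":
    for g in ("A", "B"):
        print(
            f"group {g}: "
            f"P(reoffend | high)={calibration(g, True):.2f}, "
            f"P(reoffend | low)={calibration(g, False):.2f}, "
            f"FPR={false_positive_rate(g):.2f}, "
            f"FNR={false_negative_rate(g):.2f}"
        )
```

With these invented counts, a "high risk" label corresponds to a 60% reoffense rate in both groups, so the labels are equally calibrated, yet a third of group A's non-reoffenders are flagged compared with a ninth of group B's. Which of the two properties should count as "fair" is the societal question the talk describes, not something the arithmetic settles.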

2023-09-09 07:24