CMS 2021 Pre-Rulemaking Season Kick-off Webinar

All right. Good afternoon, everyone. We’re at two after the hour, so I think we’re going to go ahead and get started and get some introductions and housekeeping things out of the way. And then we’ll go ahead and start our presentation. So good afternoon. My name is Kimberly Rawlings, and I’m the co-lead for pre-rulemaking, along with Michael Brea.

And I am also the lead for the Measures Management System (MMS), which supports this task with the help of Battelle. So I want to thank you all for taking the time out of your busy schedules to join us for today’s presentation. Next slide. Just a few quick housekeeping items: all audio lines are going to be muted during the presentation. Please feel free to put any questions into the Q&A function within WebEx. We are going to have plenty of time at the end of the presentation to answer some of those questions.

Also, this meeting is being recorded, and we’re going to upload the recording to YouTube and post it on our CMS website. So if you have any colleagues who missed it, or if you want to revisit anything, you can view it on our website shortly, and we will make sure to send out the link to the recording as well. Also, not quite housekeeping, but we get this question an awful lot: the slides for this presentation are already online. The easiest way to get them is probably to just Google "CMS pre-rulemaking" and go to the website. I believe the slides are near the bottom of the page, already published for your review and reference.

Next slide. So we have a lot to cover today in the next 90 minutes. First off, we’re going to kick off with a short presentation on the CMS Quality Measurement Action Plan.

This is really just some background for you as you’re thinking through the measures that you want to submit for consideration for the measures under consideration (MUC) List. It will give you an idea of where CMS’ priorities are. Then we’re going to follow that up with a presentation that gives some background and an overall view of the pre-rulemaking process, including a reference to the CMS needs and priorities document. Then we’re going to have a couple of very short presentations, the first on the MIPS peer-reviewed journal article requirement.

Every year we get a lot of questions around this, so we find it helpful to address it at the beginning and answer some questions. The same is true of eCQM readiness. We, of course, welcome eCQMs, as you’ll hear in my presentation on the Action Plan. Digital measures, including eCQMs, are a major focus and priority for CMS, so I’m definitely looking forward to receiving some for consideration. So we’re going to have a presentation on eCQM readiness and the documentation needed there.

Hopefully, you’ve heard by now that we have a brand new submission tool. We were using JIRA, and then we had an awkward interim phase with a spreadsheet. So we’re happy to have a brand new IT system, CMS MERIT, that was created specifically for the pre-rulemaking process, and you’ll see a very short demo of it. We’ll cap all of the presentations off with a short presentation on the Measure Applications Partnership (MAP). And then, as promised, we will follow up with questions and answers (Q&A).

So with that, we can go to the next slide. I think we’re going to go ahead and get started. Oh, so previous slide, please. Thanks. All right. So to kick us off, I really just want to provide you with an overview of the CMS Quality Measurement Action Plan.

It’s an ongoing multiyear strategy that aims to advance the CMS vision for the future of quality. It’s important to note that this is CMS-wide. Some of these actions might, of course, look different for Medicaid or CMMI models than for the Medicare quality programs that are covered under pre-rulemaking, but this is our unified plan for the future across all of CMS. This might seem like it popped out of nowhere, especially if you weren’t able to attend this year’s virtual 2021 quality conference, but as you’ll hopefully see in the coming slides, these are really a lot of the same goals that we’ve been talking about for the last year or two, especially as we’ve talked more and more about Meaningful Measures 2.0. The vision hasn’t changed.

We’ve just evolved a bit in how we’re thinking about our quality goals and how they relate to Meaningful Measures. And so, next slide. At a high level, our goal is to use impactful quality measures to improve health outcomes and to deliver value.

That value comes from empowering patients to make informed care decisions, from reducing burden on clinicians and providers, and from focusing on outcomes of the highest quality at the lowest cost. So that’s really our vision. Next slide. So we have a vision, and we need to back it up with some goals, and so we have five major overarching goals for the CMS Quality Measurement Action Plan. We’re calling ours an Action Plan, as opposed to the HHS Quality Roadmap.

If you remember, the Roadmap was published in late spring to early summer of 2020. The two share many features, but we’ve been working on this for over two years. It’s really an action plan because there are steps that we are trying to operationalize, through both our programs and our measures, to move forward in what we’re calling the quality measurement enterprise. My hope here today is honestly not to say anything new or to surprise you at all, but instead for you to hear, in one concise place, all of the different things that CMS has been saying over the last couple of years. The first goal is to use the Meaningful Measures Framework to streamline quality measurement.

That means making it more efficient, and I think we’re all very familiar with this: making sure there are high-value measures with the least amount of burden. The second is to leverage our measures within our quality-based programs to drive both value and improved outcomes. Year after year we make iterative changes to both the value-based programs and the measures that are used in them, and we’ll definitely continue doing that. The third is to transform quality measures to make them more efficient and move toward the future, which, as CMS has been saying, and as I think you saw with the 2020 MUC List, is really about digital measurement, as well as the use of advanced analytics to propel digital measures, analyze them, and maybe even change what measures look like based on advanced analytics such as neural networks and machine learning.

The fourth overarching goal is to use these measures to empower patients to make the best healthcare choices they can, through patient-centered measures, shared decision-making, transparency, and so on. Last year when I presented this, there were only four goals. We kept hearing the question, “So where is equity in this?” We certainly recognize that equity is a fundamentally important goal, and I think you’ll see equity woven through all of the first four goals. But ultimately, it is critical that we also call equity out on its own, especially in light of COVID. I don’t think anyone will debate the importance of equity, and so we added this fifth goal to help ensure that we leverage measures to promote equity and close gaps in care, with the ultimate goal of closing gaps in health outcomes.

Next slide. So I’m going to move through these two next slides pretty quickly, because I’m sure that you guys are all very familiar with Meaningful Measures 1.0, but I just wanted to show this graphic so you could kind of have it in your brain as you see Meaningful Measures 2.0 in just a second, but as you’ll see, it’s kind of a complicated diagram.

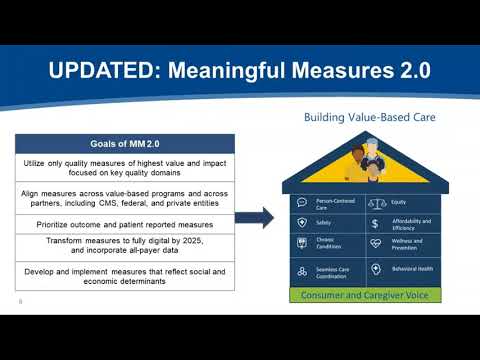

There are six domains and 19 Meaningful Measure Areas (MMAs). We’ve heard that it’s complex, but even with that complexity, it went a really long way in setting CMS’ priorities and moving measures forward. Next slide. Since launching Meaningful Measures in 2017, we feel like we’ve really come a long way in reducing the number of measures, increasing the number of outcome measures, decreasing the number of process measures, and so on. Next slide. And so this is Meaningful Measures 2.0.

It has changed a little over the past couple of months, again with the addition of equity, but our real goal here was to simplify. There are now eight key domains that we think are important: person-centered care, equity, safety, affordability and efficiency, chronic conditions, wellness and prevention, seamless care coordination, and behavioral health. All of this has a true north, which is the consumers of healthcare, their families and caregivers, and all of those who support them. But they’re also at the bottom of this house structure, because while they are our true north, they’re also very much our foundation. And so the goals are to use only impactful measures that focus on these key quality domains, and to align measures across all of our value-based programs, across our federal partners, and, as much as possible, across private entities as well.

This means really prioritizing outcomes and patient-reported outcome measures (PROMs), and we’ll talk about the... ...to digital by 2025 as well. And so now, next slide. I just want to briefly touch on each of these five goals. And like I said, the slides are online for you to reference.

I’m not going to touch on every point today, but please feel free to, you know, go there and look at the slides more closely. There is also some information on these on our Meaningful Measures website as well. So first is using Meaningful Measures to streamline quality measurement, really to simplify it with the objective of aligning measures.

First, we’re starting with alignment within CMS, which I spoke about a little already. We’re already working across programs and have multiple workgroups focused on alignment so that we can align as much as possible across CMS programs, while still recognizing that some populations and some programs really do have specific needs. We’re also looking to align across federal programs, and in particular we’re working with the VA and the DoD on this.

And finally, we’re thinking about alignment even more broadly than the federal government, and looking at the great work happening in the Core Quality Measures Collaborative (CQMC), a collaborative between CMS, AHIP, and NQF. Many people across what we might call the quality measurement ecosystem participate in the CQMC, which is trying to identify measure sets for specialty areas that align across all payers. I believe at this point approximately ten of those measure sets have been finalized, and CMS, as a partner in this, is very committed to finalizing those measures in our programs as well. And, as we talked about before, one of the objectives of doing this is reducing the number of measures.

So we’re leveraging the Meaningful Measures Framework and the principles behind these goals to reduce burden, promote alignment, and make sure that we have the highest-value measures possible. Next slide. So we’ve talked about what the right measures are, how CMS is going to determine them, and what framework is used. Our second goal is to leverage these high-value measures, aligned across our programs, to accelerate the ongoing efforts to streamline and modernize our programs, while continuing to reduce burden and promote strategically important focus areas. Take the MIPS program as an example: we’re promoting, and continuing to get feedback on, the MIPS Value Pathways (MVPs), which are meant to be cohesive sets of measures and activities around a specific target area. Right now in MIPS you have literally hundreds of choices and thousands of permutations of how you can combine quality measures, improvement activities, cost, and promoting interoperability.

MVPs will help narrow these choices, so more providers will report on the same measures, tied together by the same themes across quality, improvement activities, cost, and promoting interoperability. We’re looking at all of our programs across CMS in a similar fashion and trying to identify ways to continue to modernize and streamline them, to make them as relevant as possible not only to providers but also to patients and all of our stakeholders. And sometimes that means not changing the program, but changing what we do with the data in the program. For example, we’re working to provide additional confidential feedback reports within our programs, specifically around disparities.

That means providing confidential feedback reports on how organizations are doing on disparities so they can start closing those gaps and then incorporating robust measurement into their value-based care structures. Next slide. Our third goal is probably the one that captures people’s attention the most, because it kicks off with the boldest action item: transforming to all digital quality measures by 2025.

CMS has been doing a tremendous amount of work promoting the concept of digital measures. We’re also looking at advanced data analytics for quality measures, because we think they may be able to fundamentally shift the conversation around what measurement is. The objective here is to use data and information as essential aspects of a healthy and robust healthcare system, creating learning networks and learning health organizations so that we can provide more rapid-cycle feedback than the traditional one, two, or even three years it takes to get quality measure reports back. By doing this, we can make both receiving and sending quality measures easier. We can also use digital capacity to make sure that we are including all payers. As stated, our goal is to transform to all digital quality measures by 2025.

We recognize this is a very lofty and aggressive goal. And quite frankly, I don’t know that we will get all the way there, but I think it’s important to set aggressive goals sometimes so that we can make real progress. It’s also important to note that digital quality measures embrace more than just eCQMs.

eCQMs are just one subset. We have been accelerating the development and testing of eCQMs using FHIR-based technology, and we think that, at least for now, that is the direction CMS will be moving in. Digital quality measures, or dQMs, use data from one or more electronic sources to improve quality of care and the patient experience, improve the health of populations, and reduce cost. They are self-contained measures: software packages deployed as components of a service-oriented architecture that use structured and standardized data.

They query the data needed, for example from the FHIR APIs I just mentioned, calculate the measure score, and generate the required measure reports. Data sources for dQMs can be a whole host of things, including administrative systems, care management systems, EHRs, instruments such as medical devices and wearable devices, patient portals, Health Information Exchange organizations, registries, and others. I wanted to spend some time on this slide and explain exactly what dQMs are because, like I said, everything I’m going over in this section is a good overview of where CMS is headed, and it’s also what we’re really looking for as we review the measures that you’re going to be submitting to us by May 27th.
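To make the query, calculate, and report steps concrete, here is a minimal Python sketch of a digital measure calculation. It is not a CMS specification or a real FHIR client: the measure (patients with a type 2 diabetes diagnosis who have a hemoglobin A1c result), the function names, and the in-memory list of plain dictionaries shaped like FHIR Condition and Observation resources are all hypothetical stand-ins for data a real dQM would query from a FHIR API. The codes are illustrative.

```python
from typing import Any

# Illustrative codes for a hypothetical measure (not a CMS specification)
DIABETES_CODE = "44054006"  # SNOMED CT: diabetes mellitus type 2
HBA1C_CODE = "4548-4"       # LOINC: hemoglobin A1c


def has_code(resource: dict[str, Any], code: str) -> bool:
    """True if any coding in the resource's `code` element matches `code`."""
    return any(c.get("code") == code for c in resource.get("code", {}).get("coding", []))


def subject_id(resource: dict[str, Any]) -> str:
    """Extract the patient id from a subject reference such as 'Patient/123'."""
    return resource["subject"]["reference"].split("/", 1)[1]


def calculate_measure(resources: list[dict[str, Any]]) -> dict[str, Any]:
    """Compute a simple proportion score and return a small report dict."""
    # Denominator: patients with the qualifying diagnosis
    denominator = {subject_id(r) for r in resources
                   if r["resourceType"] == "Condition" and has_code(r, DIABETES_CODE)}
    # Numerator: denominator patients who also have the qualifying observation
    tested = {subject_id(r) for r in resources
              if r["resourceType"] == "Observation" and has_code(r, HBA1C_CODE)}
    numerator = tested & denominator
    score = len(numerator) / len(denominator) if denominator else None
    return {"denominator": len(denominator), "numerator": len(numerator), "score": score}


# Example: resources for three patients, as a FHIR query might return them
resources = [
    {"resourceType": "Condition", "subject": {"reference": "Patient/p1"},
     "code": {"coding": [{"system": "http://snomed.info/sct", "code": DIABETES_CODE}]}},
    {"resourceType": "Observation", "subject": {"reference": "Patient/p1"},
     "code": {"coding": [{"system": "http://loinc.org", "code": HBA1C_CODE}]}},
    {"resourceType": "Condition", "subject": {"reference": "Patient/p2"},
     "code": {"coding": [{"system": "http://snomed.info/sct", "code": DIABETES_CODE}]}},
    {"resourceType": "Observation", "subject": {"reference": "Patient/p3"},
     "code": {"coding": [{"system": "http://loinc.org", "code": HBA1C_CODE}]}},
]
print(calculate_measure(resources))  # {'denominator': 2, 'numerator': 1, 'score': 0.5}
```

Patient p3 has an A1c result but no diagnosis, so it is excluded from the numerator because it is not in the denominator. A production dQM would express this logic in a standard such as CQL rather than ad hoc Python.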

And if you’re at all familiar with the 2020 MUC List, the vast majority of the measures on it, with the exception of some of the COVID vaccine measures, were digital quality measures (dQMs). Next slide. This fourth goal really speaks for itself. As we talked about with the Meaningful Measures Framework, the consumers of healthcare are at the center of everything we do.

They are the driving force guiding our efforts as well as our foundation, so it’s critical that we ensure their voice is heard and represented in our measures and our programs, and that we support all of their choices by increasing the transparency of data in public reporting so they can make the most informed decisions about their healthcare. We’re working to develop more PROMs, including those prioritized by the CQMC. And to support increased transparency of data in public reporting, in the last year we sunset our legacy Compare sites and modernized the way we provide and display data for patients and caregivers, as well as for providers and researchers. We continue to work on this, but we also believe we have come a really long way in just the last year. Next slide.

And last, but certainly not least, we have our fifth goal of leveraging measures to promote equity. Again, I think we can come back to our confidential feedback reports stratified by dual eligibility, and we’re working on expanding the number of those feedback reports. We also realize that this isn’t something that can be solved in a year, with a single initiative, or through a single program.

So we’re developing a multiyear plan for how we can promote equity through quality measures, and we’re doing that in partnership with various offices and centers across CMS, including our Office of Minority Health and its Health Equity Summary Score (HESS), which focuses on equity. I think this is an emerging and incredibly important topic, and you will see a lot more information and a lot more action items on it over the course of the next year or so. And so with that, I would like to turn it over to Meridith, who’s going to kick off our presentation on pre-rulemaking.

Great. Thanks so much, Kim, and thank you, everyone, for joining this afternoon. I’m Meridith Eastman, and I am the pre-rulemaking task lead at Battelle, the Measures Management System (MMS) contractor supporting CMS. I’m going to provide a brief overview and some background on the pre-rulemaking process, and I’ll also show you some important pre-rulemaking resources. Section 3014 of the 2010 Affordable Care Act (ACA) created a new section of the Social Security Act that requires the U.S. Department of Health and Human Services (HHS) to establish a federal pre-rulemaking process for the selection of quality and efficiency measures for use by HHS.

The process we’re discussing today is the process by which quality and efficiency measures are accepted for use in certain CMS programs. Broadly speaking, the steps of the pre-rulemaking process are on this slide. CMS publishes the measures under consideration (MUC) List annually by December 1st. Then the consensus-based entity, currently the National Quality Forum (NQF), convenes multi-stakeholder groups under the Measure Applications Partnership, or MAP, a process that we’ll discuss later in this presentation. The MAP provides recommendations and feedback on MUC List measures to the Secretary annually by February 1st.

This input is considered in selecting measures, and some measures may be put forth in a notice of proposed rulemaking in the Federal Register for public comment and further consideration before a final rule is issued. Not all measures need to go on the MUC List. For example, if a measure is currently in use in a CMS program, it does not need to go on the MUC List again. There are a couple of exceptions to this: if a measure is being expanded into other CMS programs, it must go on the MUC List, and if a measure is undergoing substantial changes, it must also go on the MUC List.
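The rules just described for when a measure must go on the MUC List can be summarized as a small decision function. This is an illustrative Python sketch, assuming the target is one of the programs covered by pre-rulemaking; the class and field names are hypothetical, and the function is not an official CMS eligibility check.

```python
from dataclasses import dataclass


@dataclass
class CandidateMeasure:
    # Hypothetical flags summarizing the situations described in the webinar
    in_use_in_program: bool         # already in use in a CMS program
    expanding_to_new_program: bool  # being expanded into another CMS program
    substantially_changed: bool     # undergoing substantial changes


def needs_muc_list(m: CandidateMeasure) -> bool:
    """Return True if the measure must go on the MUC List under the stated rules."""
    if not m.in_use_in_program:
        # Measures not yet in use go through the pre-rulemaking process
        return True
    # Measures already in use return to the list only in the two exception cases
    return m.expanding_to_new_program or m.substantially_changed


print(needs_muc_list(CandidateMeasure(True, False, False)))  # False
print(needs_muc_list(CandidateMeasure(True, True, False)))   # True
```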

You may resubmit measures that were submitted on a previous MUC List, but that were not accepted for use. It’s important to note here that measure specifications may change over time. If your measure has significantly changed, you may submit it again for consideration.

Measures are submitted to the MUC List using a web-based data capture tool that Kim mentioned called CMS MERIT, which launched this year and which we’ll discuss a little bit later. So the pre-rulemaking process we’re discussing today applies to these 19 programs, which are the Medicare quality programs covered by the aforementioned statute. Measures in these programs must go through the pre-rulemaking process we’re describing today. Other CMS programs have their own processes for selecting measures.

So how does CMS decide which measures to select for these 19 programs? The measure selection considerations listed on this slide include: Does the submission align with the quality priorities, for example, those in the Quality Measurement Action Plan we just reviewed? Is the submission a digital measure, or is it an outcome measure? Does the candidate measure fill a Meaningful Measure domain gap for this program? Does the measure improve upon or enhance any existing measures in the public or private sector, and if so, could the original measure be removed? Is the measure evidence-based? Is it fully developed and fully tested, and would the measure be burdensome to operationalize? And lastly, is the measure endorsed by a consensus-based entity? Kim started this presentation by reviewing the agencywide vision and goals for quality measurement. In addition, each year each CMS program that undergoes the pre-rulemaking process identifies its own program-specific quality measure needs and priorities. The 2021 summary of program-specific needs and priorities is now posted to the CMS pre-rulemaking website.

I will take you there in a few minutes, but as an overview, for each program the needs and priorities document summarizes the program’s history and structure, the number and type of measures currently in use, high priorities for future measure consideration, and any program-specific measurement requirements. This slide is a little detailed, but it’s meant to give a general idea of the overlapping and cyclical nature of measure implementation, which starts once a measure is completely developed and tested and submitted to the MUC List through CMS MERIT, the data capture tool for the MUC List. Again, the MUC List is published annually by December 1st, and the MAP makes recommendations by February 1st.

Those recommendations are taken into consideration and some measures are put into the proposed rule for programs. A final rule is issued, and then measures are adopted in the field. On this slide we actually are showing two overlapping MUC cycles to indicate that we always have a hand in at least two MUC cycles at any given time of the year.

For example, MAP recommendations are made annually by February 1st, and by that time we will have opened CMS MERIT for measure submissions for the next cycle, so it’s always an area of activity. This slide illustrates a decreasing number of measures on the MUC List over time. There are a couple of reasons for this trend. First, we’ve honed our messaging to clearly communicate needs and priorities, and second, there’s been an increased focus on outcome measures and on measures that are patient-centered and patient-reported.

If you’re interested in seeing MUC Lists and MAP reports over time, they’re all available on the CMS pre-rulemaking website for your reference. The lessons learned from the 2020 pre-rulemaking cycle that we wanted to share today reflect the trend depicted on the previous slide: we saw fewer candidate measures being accepted for the MUC List, with an emphasis on testing results and eCQM readiness, the latter of which we’ll talk about a little more this afternoon. As previously noted, gaps in Meaningful Measure domains have driven measure selection, as have agencywide and program-specific priorities for outcome measures, patient-reported outcomes (PROs), and digital measures. So before concluding this overview of the pre-rulemaking process and considerations, I want to make sure you know where to get more information about pre-rulemaking.

The CMS pre-rulemaking website has a number of resources that you may find helpful. I’m actually going to pause here and share my screen to give a brief tour of the website. Just give me one moment as I share my screen. Okay, here we are at the pre-rulemaking website. We have a table of contents up here, which can help you navigate to the section you're most interested in.

We have an overview, some guidance for this year, information about measure priorities, a high-level events calendar, instructions for submitting candidate measures, and information about the MAP. And then down here at the bottom, I’ll pause for a moment: we have links to previous MAP reports and links to previous MUC Lists.

And lastly, and perhaps most importantly, we have some additional resources that may be helpful if you’re interested in learning more about pre-rulemaking. This is where the webinar slides for today are posted. We also have recordings of past CMS MERIT webinars here. There is also a pre-rulemaking FAQ, which is really helpful, and I encourage you to take a look at it. And lastly, right at the bottom here, we have the 2021 MUC List program-specific needs and priorities document, which shows the needs and priorities for all 19 programs that are part of the pre-rulemaking process.

So I will stop sharing my screen. And with that—oh, one more thing. You can always reach out to the Battelle pre-rulemaking team at the email address on the screen here. It’s MMSsupport@battelle.org for any questions regarding pre-rulemaking. Now, I’m going to turn it over to Julie Johnson who is going to discuss a specific MUC List requirement for the Merit-based Incentive Payment System, or the MIPS program. So Julie, over to you.

Thank you. Next slide, please. CMS is required to submit proposed MIPS quality measures, and the method for developing and selecting these measures, for publication in an applicable, specialty-appropriate peer-reviewed journal before including these measures in MIPS.

This requirement is in Section 1848 of the Social Security Act, as amended by Section 101(c)(1) of the Medicare Access and CHIP Reauthorization Act of 2015, otherwise known as MACRA. Measure owners submitting measures into CMS MERIT have to complete the required information by the Call for Measures deadline of May 27th. Next slide, please.

The intended benefit of this requirement is to provide information on clinical quality measures (CQMs) to clinicians, including specialists, who would not otherwise have access to or involvement with the MUC or MAP processes we’re going over today. Eligible professionals (EPs) will be more aware of the types of quality measures that can be reported to the CMS quality programs. The peer-reviewed journal article also lists the steps that proposed measures go through before they are actually included in MIPS. Next slide, please. The MIPS Peer Reviewed Template must be completed and uploaded as an attachment to your measure submission in CMS MERIT. This is the standardized process for collecting the required information, and the template is subject to change each year.

Some of the information requested in the template may also be listed in specific fields in the MERIT tool. To ensure that CMS has all the necessary information from the submitting measure owner or steward, and to avoid delays in the evaluation of proposed MIPS measures, please fully complete the form as an attached Word document. The information in CMS MERIT must be consistent with the information in the completed template.

You can also access the template and examples of completed templates on the CMS pre-rulemaking website that Meridith just walked you through. They’re included in the section where you can download a number of materials. You can also get the template and these examples from the Call for Measures materials, which are included in the QPP Resource Library on qpp.cms.gov; the URLs are provided on this slide. So this completes my portion, and I will hand it over to Joel Andress for eCQM readiness. Thank you, Julie.

Good afternoon, everyone. My name is Joel Andress. Electronic clinical quality measures (eCQMs) submitted to the Call for Measures have additional considerations and requested information so that CMS can ensure that the submitted measures are ready for implementation in MIPS. We refer to this as our eCQM readiness check.

We divided the work into two steps, and we’re providing resources that contain all the information I will present; this deck lists those resources after we cover the steps. Okay, next slide, please. Thank you. Before a measure can be implemented in MIPS, CMS has three key questions about the characteristics of the measure that need to be answered. That’s step one. For more details than are provided here, please see the Blueprint, which we provide a link to below.

First, is the measure feasible to report? Are the data elements that populate the measure available in structured fields in the EHR? You can use an NQF-developed feasibility scorecard across at least two different EHR vendors to establish the feasibility of reporting. Second, does the measure produce valid results? There are two ways to establish this, and you should consider using at least one. The first is data element validity: kappa agreement between data manually abstracted from the full record and electronic extracts from structured EHR fields, for a set of patients, establishes the validity of the data elements you’ve identified for your measure. The second is to correlate provider scores on the new measure with an existing gold-standard measure, such as a previously developed and established quality measure.
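The two validity approaches can be illustrated with a short Python sketch. It uses Cohen's kappa, one common form of kappa agreement, for data element validity, and a Pearson correlation for score-level validity; the function names and example values are hypothetical, and CMS or NQF testing guidance may call for other statistics (for example, a different kappa variant or a rank correlation).

```python
from collections import Counter
from math import sqrt


def cohens_kappa(abstracted: list, extracted: list) -> float:
    """Cohen's kappa: agreement between abstracted and EHR-extracted values."""
    if len(abstracted) != len(extracted) or not abstracted:
        raise ValueError("need two non-empty lists of equal length")
    n = len(abstracted)
    # Observed agreement: share of patients where both sources match
    observed = sum(a == e for a, e in zip(abstracted, extracted)) / n
    # Chance agreement: product of each category's marginal frequencies
    ca, ce = Counter(abstracted), Counter(extracted)
    expected = sum(ca[k] * ce[k] for k in ca.keys() | ce.keys()) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def pearson_r(new_scores: list[float], gold_scores: list[float]) -> float:
    """Pearson correlation between provider scores on two measures."""
    n = len(new_scores)
    mx, my = sum(new_scores) / n, sum(gold_scores) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(new_scores, gold_scores))
    sx = sqrt(sum((a - mx) ** 2 for a in new_scores))
    sy = sqrt(sum((b - my) ** 2 for b in gold_scores))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sx * sy)


# Data element validity: one binary data element for ten patients (1 = present)
abstracted = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
extracted = [1, 1, 0, 1, 0, 1, 1, 1, 0, 0]
print(round(cohens_kappa(abstracted, extracted), 3))  # 0.583

# Score-level validity: four providers' rates on the new and gold-standard measures
print(pearson_r([0.62, 0.71, 0.55, 0.80], [0.60, 0.75, 0.50, 0.82]) > 0.9)  # True
```

Here the two sources agree on 8 of 10 patients, but kappa (about 0.58) is lower than the raw 80% agreement because it discounts the agreement expected by chance.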

Correlating with a gold-standard measure allows you to establish score-level validity. It’s important to keep in mind that these are two different levels of validity that establish different aspects of the measure. Other considerations include performance. How does the measure perform? Is it topped out? Is there variation in performance across providers? Is there room for improvement, an indication that incorporating the measure in the program could result in better care for patients? Next, we consider risk adjustment.

Is risk adjustment necessary for the measure? How does the risk adjustment model you’ve incorporated perform, and does it include all of the elements we believe are potential confounders for assessing quality? And finally, what exclusion criteria should be incorporated within the measure, and what is their impact on the measure scores as implemented? The third question is whether clinician-level quality measure scores are reliable. Put another way, how precise is a provider’s quality measure score? We use signal-to-noise analysis to estimate reliability at the individual clinician measure score level. Next slide, please. Thank you. As part of step two of the readiness check, we look at whether the eCQM specification is ready for implementation in a national program. This includes three key requirements.
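Returning to step one’s third question: signal-to-noise reliability compares true variation between clinicians (signal) with the sampling error in each clinician’s own score (noise), as reliability = between-clinician variance / (between-clinician variance + within-clinician variance). The Python sketch below applies this to a proportion measure under simplifying assumptions: binomial within-clinician variance and a crude method-of-moments estimate of between-clinician variance. Formal analyses commonly fit a beta-binomial or hierarchical model instead; the function name and counts are hypothetical.

```python
from statistics import mean, pvariance


def snr_reliability(numerators, denominators):
    """Per-clinician signal-to-noise reliability for a proportion (rate) measure."""
    rates = [k / n for k, n in zip(numerators, denominators)]
    # Noise: binomial sampling variance of each clinician's observed rate
    within = [p * (1 - p) / n for p, n in zip(rates, denominators)]
    # Signal: spread of observed rates across clinicians minus the average noise,
    # floored at zero (simple method-of-moments estimate)
    between = max(pvariance(rates) - mean(within), 0.0)
    reliabilities = []
    for w in within:
        total = between + w
        # No signal and no noise: report 0 rather than divide by zero
        reliabilities.append(between / total if total > 0 else 0.0)
    return reliabilities


# Hypothetical numerator/denominator counts for four clinicians
numerators = [12, 45, 80, 30]
denominators = [20, 100, 100, 40]
for n, r in zip(denominators, snr_reliability(numerators, denominators)):
    print(f"denominator={n:3d}  reliability={r:.2f}")
```

Clinicians with small denominators tend to get lower reliability because their own sampling noise is larger relative to the signal. The sketch makes no claim about which reliability threshold CMS applies.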

First, the eCQMs must be specified using the Measure Authoring Tool (MAT). This ensures that each eCQM uses the latest eCQM standard, and the Call for Measures requests the MAT number and the MAT package for all submitted eCQMs. The eCQI Resource Center has information about current standards and should be considered the source of truth.

Second, the value sets for eCQMs must be created in the National Library of Medicine (NLM) Value Set Authority Center (VSAC). Third, the eCQM logic must be tested using the Bonnie tool, which uses test cases to verify that the measure’s logic is working as intended. For inclusion in MIPS, an eCQM must have test cases that cover all branches of the logic, with a 100% pass rate in Bonnie.
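The Bonnie requirement amounts to two checks over the test-case results: every test case passes, and together the cases exercise every branch of the measure logic. The Python sketch below illustrates that check under stated assumptions: the `TestCase` record, its fields, and the `readiness` function are hypothetical stand-ins, not Bonnie’s export format or API, and using population names as “branches” is a simplification of how Bonnie actually measures logic coverage.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    """Hypothetical summary of one patient test case (not Bonnie's format)."""
    name: str
    expected: dict  # population -> expected result, e.g. {"NUMER": 1}
    actual: dict    # population -> result the measure logic produced
    branches_hit: set = field(default_factory=set)  # logic branches exercised


def readiness(cases, all_branches):
    """Return (pass_rate, coverage, uncovered_branches, ready) for the test cases."""
    all_branches = set(all_branches)
    passed = sum(case.expected == case.actual for case in cases)
    pass_rate = passed / len(cases) if cases else 0.0
    covered = set().union(*(case.branches_hit for case in cases)) & all_branches
    coverage = len(covered) / len(all_branches) if all_branches else 0.0
    uncovered = sorted(all_branches - covered)
    # Ready only with a 100% pass rate and 100% logic coverage
    return pass_rate, coverage, uncovered, pass_rate == 1.0 and coverage == 1.0


branches = ["IPP", "DENOM", "DENEX", "NUMER"]
cases = [
    TestCase("numerator met", {"IPP": 1, "DENOM": 1, "NUMER": 1},
             {"IPP": 1, "DENOM": 1, "NUMER": 1}, {"IPP", "DENOM", "NUMER"}),
    TestCase("numerator not met", {"IPP": 1, "DENOM": 1, "NUMER": 0},
             {"IPP": 1, "DENOM": 1, "NUMER": 0}, {"IPP", "DENOM"}),
]
print(readiness(cases, branches))  # (1.0, 0.75, ['DENEX'], False)
```

Here every case passes, but no case reaches the denominator exclusion branch, so the measure would not yet meet the 100% coverage requirement; adding a passing exclusion test case would make it ready.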

The Call for Measures requests Bonnie test cases for the measure showing 100% logic coverage, and those Bonnie test cases should be included with the submission. Next slide, please. Here you can see a set of links to the resources referenced in the two readiness check steps above.

These include the VSAC, Bonnie, the Blueprint, and the eCQM testing requirements related to feasibility. Thank you, and now I’ll turn it back over to Meridith. Great. Thank you, Joel, and thank you, Julie.

Next, I’m going to spend just a couple of minutes introducing you to CMS MERIT, the data capture tool for measures submitted to the MUC List. As I mentioned, CMS MERIT is the tool measure developers use to submit their clinical quality measures (CQMs) for consideration by CMS. CMS MERIT is also used to search measures from current and previous years, and it structures the workflow for CMS review of measures submitted to the MUC List. We launched MERIT on January 29, 2021, and it is open to accept submissions through May 27th at 8:00 pm ET.

CMS MERIT offers several features that will improve the MUC List entry and review process. It conducts automatic completeness checks for required measure information, and users can save partial submissions and return later to complete the measure information.

Submitters and reviewers can easily track progress throughout the review process, and CMS MERIT does all this in an easy-to-navigate interface that incorporates principles of human-centered design (HCD). So I’m going to pause here and take you on a very brief tour of MERIT. I’m going to share my screen. Okay, so this is the login page for CMS MERIT.

Before I log into the tool, I’m going to pause here and show you a few helpful resources that are posted on this page, available without needing to log in. First is a link to the pre-rulemaking website that we looked at a few moments ago. Next, we have a blank MUC List template, as a Word document, that contains all of the measure fields you need to submit; you’re welcome to review it before submitting your measure into MERIT. We also have a downloadable QuickStart Guide that walks you through the submission process. If you need a MERIT account and don’t have one yet, you can request one by clicking this link right here.

So I’m just going to log in. MERIT uses two-factor authentication (2FA), so I just need to get to my Google Authenticator. And here we are, in MERIT.

So this is what you see when you first log in. The prominent buttons here help you submit a measure, so that’s the button you would press to get started. You also have the option of downloading that Word document to guide you through the process if you’d like. As I mentioned, MERIT doesn’t only help with submitting measures; it also holds the list of all submitted measures.

You can see this year’s submissions in CMS MERIT, as well as past candidate measures. MERIT is also going to help structure the review of measures submitted for the MUC List. So that’s what MERIT looks like. I will stop there and head back to the slide deck. As you log into MERIT and start entering your measure information, you may notice that required fields are denoted with a red asterisk. If you submitted measures in previous years, you will see that there are more required fields this year than before.

These additional required fields were added to support CMS in addressing the U.S. Government Accountability Office (U.S. GAO) recommendation on systematically assessing measures’ alignment with CMS quality objectives. They’ve been added to standardize, streamline, and align the required information from stakeholders and developers, to help CMS prioritize measures for development, implementation, and continued development, and to enhance the existing endorsement and measure selection processes. If you are submitting a measure to the MUC List this year, or would like more information on CMS MERIT beyond the very brief tour you just received, I want to share a few resources.

First, as I pointed out on that login page, you can download a QuickStart Guide as well as a Word template of measure information fields and guidance. We have a recording of the CMS MERIT demonstration for submitters that was conducted back in February, and that’s available on the CMS pre-rulemaking website in that “additional resources” section. And we also have a CMS MERIT Tips & Tricks Session scheduled for April 8th and that is targeted to measure submitters.

And lastly, of course, you can always reach out to the Battelle pre-rulemaking team at MMSsupport@battelle.org with any questions or difficulties with CMS MERIT. So with that, I am going to turn it back to Kim Rawlings, who will talk in greater detail about the Measure Applications Partnership (MAP). So Kim, over to you. Thank you, Meridith. After measure developers submit their measures through MERIT, the submissions go through a review process by CMS program leads, CMS leadership, HHS, and OMB.

I know it’s a bit of a black box, but that’s essentially what happens from May 28th, the day after you submit your measures, until the MUC List is published no later than December 1st. Once the MUC List is published, we are required by statute to have it reviewed by a multi-stakeholder group, so CMS has contracted with the National Quality Forum (NQF) to accomplish this review. That group is called the Measure Applications Partnership, or MAP, which I’ll quickly review today. Next slide. The main role of the MAP is to provide the HHS Secretary with recommendations on each and every measure that CMS is considering on the MUC List, with the overarching goal of promoting healthcare improvement priorities.

So the MAP helps inform the selection of measures, provides input to HHS, and helps identify gaps in measure development, testing, and endorsement, as well as gaps in individual programs. It also encourages measure alignment across public and private programs, settings, levels of analysis, and populations, with the idea of promoting coordination, which, as we discussed, is very important to Meaningful Measures and the CMS Quality Measurement Action Plan, as well as reducing the burden of data collection and reporting. Next slide.

We really believe that this input is invaluable. The structure and process used by the MAP facilitate conversations between stakeholders and HHS representatives, while still working to build consensus among a very diverse group of stakeholders outside the government who make those recommendations. As a result, we hope that the measures in our proposed rules are closer to the mark, because they’ve already been reviewed and discussed by stakeholders. It also reduces the effort required by stakeholders to submit comments on proposed rules.

I think it’s really important to note that while the MAP has this structure, with over a hundred stakeholders involved across all of the various committees and workgroups, the process still includes two written public comment periods, and the calls themselves are all open to the public, so stakeholders can make verbal comments as well. That adds an extra layer of public comment and transparency for the entire public, while still reaching consensus within this group and helping our proposed rules get closer to hitting the mark. Next slide. On this slide you can see the structure of the MAP itself. Starting at the bottom, there are three setting-specific workgroups that make recommendations on each measure on the MUC List, with input from the Rural Health Workgroup, which reviews all of the measures on the MUC List to identify how rural patients and providers may be positively or negatively affected by the possible implementation of these measures.

The measures and the recommendations from the setting-specific workgroups are then reviewed by the Coordinating Committee, which adjudicates the recommendations and helps strategically guide the overall MAP process, for example by reviewing and continuing to update the selection and evaluation criteria, among many other things. Next slide. The MAP must reach consensus.

Over the past several years, I think we have learned that this is a bit more complex than just “recommend” or “not recommend.” Sometimes the MAP loves a measure but has a specific concern that it would like mitigated before the measure goes into a proposed rule. So we have four decision categories, which are standard across all of the workgroups and the committee.

Each decision must also be accompanied by a rationale. Before I get into the decision categories, it’s important to note that the Rural Health Workgroup does not make recommendations itself. Rather, it provides food for thought: information about what it would look like if a measure were implemented in a rural setting. That information then feeds into the setting-specific workgroups.

The four decision categories, which I think have been stable for the last couple of years, are “support for rulemaking,” “conditional support for rulemaking,” “do not support for rulemaking with potential for mitigation,” and “do not support for rulemaking.” As I stated, each decision category must be accompanied by a rationale. For example, if the decision category for a particular measure is “conditional support for rulemaking,” it needs to be accompanied by an overall statement of rationale as well as one or more specific conditions that would lead to full support. Oftentimes we’ll see “conditional support for rulemaking pending endorsement,” or something along those lines. Next slide.

To kick off the process and help the MAP workgroup and committee members in their review, NQF staff conduct a preliminary analysis of each measure on the measures under consideration (MUC) List. They use a set algorithm that asks a series of questions about each measure; the algorithm is based on the MAP measure selection criteria. As I stated, the MAP Coordinating Committee takes a strategic look over the whole process, so the algorithm was approved by the committee as well, and it is what is used to evaluate each measure. The intention is to give MAP members a high-level snapshot of each measure and a starting point for MAP discussions and voting.

Next slide. Here you can see an overall timeline of the process. I won’t go through it in great detail. As those of you who followed the last cycle know, we did not exactly follow this timeline, but we’re hoping to be back on track with it in 2021. Essentially, the list of measures is released and published by CMS on or before December 1st.

There is an initial comment period in November if the list is published earlier, or otherwise in very early December. Then, usually in the second week of December, the workgroups hold their in-person meetings to make recommendations on the MUC List. We then have another written public comment period on the workgroups’ recommendations.

Then, in January, the MAP Coordinating Committee finalizes the MAP input, and the MAP’s final recommendations go to the HHS Secretary no later than February 1. Later, the MAP publishes a longer narrative report to support the discussions and the recommendations. Next slide.

So I don’t think this year’s nominations process has started quite yet, but I think it will in the next couple of weeks, and I’m sure you will all be hearing about it. Approximately one-third of MAP seats are eligible for reappointment every year, to keep ideas fresh and make sure that various stakeholders have an opportunity to join, have their voices heard, and have a seat at the table. Every year in the April to May timeframe there is a formal Call for Nominations; you can find more information about that process on the NQF committee nominations webpage.

Like I said, there are usually several listserv announcements sent out, so hopefully we’ll hear about it soon. Nominations are sought from both organizations and individual subject matter experts (SMEs). So with that, I’m going to turn it back to Meridith. Thank you, Kim. I think that concludes the presentation portion of this webinar, and we are now opening the floor to questions.

I know that some of you have been putting questions in the Q&A feature of WebEx. You can also raise your hand to ask a question, and I’ll toggle through the instructions for that as we move forward. Kate Buchanan of Battelle is going to help us moderate, so Kate, have we gotten any questions? Yes, we have received one question in the chat function.

The question was asked during Kim’s presentation: “How do you see the balance/tension of potential integration/phasing of ML/NLP processes in actual eCQM specifications, given the traditional standards approach, which of course is codified, discrete data fields within EHRs?” Thanks for this question. I’m going to start off with Joel. I think you’re also still on the line, and you are the EHR and eCQM expert, so please feel free to chime in here. Okay, thank you. I apologize, but I think I missed the first part of the question.

Can you repeat it, please? Absolutely. It was, “How do you see the balance/tension of potential integration/phasing of ML/NLP processes in actual eCQM specifications?” And the rest of the question is: “Given the traditional standards approach, which of course is codified, discrete data fields within EHRs?” Hmm, well, they don’t go small with the first question, do they? That is a really great question. I don’t think I have a good answer for you on that.

Here is what I would ask. Is this from Mike Sacca? Yes. Can you send that to us by email? Do we have an email address for them to send questions to? Let me try to get back to you with a good answer, as opposed to one I come up with off the cuff. That’s a good question.

I just feel like I’d be pulling something together on the fly if I tried to answer it here. Meridith, should we recommend that questions be sent to MMSsupport@battelle.org so we can forward them to the appropriate people? Absolutely, that’s perfect. Yeah, I’m sorry, this is Kim. We will, of course, have a copy of all of the Q&As and who sent them, so for any we aren’t able to answer on the call, we can proactively send you a private email to answer some of the more specific ones.

But by all means, if you think of additional questions after the webinar, feel free to send them to MMS support. And if you’ve already put a question in the chat, we will try to get back to you via email if we’re unable to answer it during the call. Mike, I think you’re on a bit of a roll here. I see your question about PROMs as well.

I think we’re going to want to get back to you on that one as well, after we give it a little more thought. I am checking the chat right now. I do not see any hands raised, and I’m not seeing anything. Oh, let’s see. Oh, yes, here we have another question. “For digital quality measures (dQMs), could you say more about the centralized data analytics tool you mentioned on Slide 11? Would CMS tools be used more broadly than MIPS, e.g., for CMMI initiatives, Medicaid, or other programs beyond Medicare?”

So I’m scrolling back to Slide 11. One of our action items there is to leverage centralized data analytic tools to examine programs and measures. For us, this is an effort to continually improve our evaluation not only of our programs, in the sense of modernizing them, but also of the individual measures themselves, using the various tools, data, and information available to us.

I think the transition to digital measures puts all of that data more readily at our fingertips. Right now it sometimes takes even us at CMS several years to get that data ourselves. By moving toward digital measures, we’ll be able to use that information more readily, with more centralized data analytic tools, in a more real-time fashion to examine programs and measures.

I’m not sure if anyone else on the CMS end would like to add to that? Kim, I’ll add that the original questioner followed up with, “I should have said that I’m specifically interested in using the centralized data analytic tools for measure calculation after the data elements are gathered from various sources.” Yeah, thanks. I don’t think I have anything else to add, but thank you; that’s also a great question. Our next question is: “What is the timeline for implementation in the CMS MIPS program, assuming the measure has been recommended and endorsed during that meeting? That is, for measures submitted in May 2021, when would they go live in MIPS programs?”

So this is Maria. If I’m understanding the question correctly, if a measure is on the MUC List this year, it would be proposed in rulemaking the next year. When data collection would begin depends, I would assume, on the rule and what it designates, but it’s not going to be in the same year. That’s for sure. Did I answer your question? Maria, I’ll follow up to see if the original questioner has any additional information.

And before we get into additional questions, I just want to remind people that they can also raise their hand, and I can open their lines so they can ask a question. And then we had one question: “Does the publication requirement apply to MIPS QCDR measures, or only to new measures?” No, it doesn’t apply to measures being submitted by QCDRs or qualified registries.

And I am currently looking through the chat to see if we have additional questions. I am not seeing anything at the moment. Oh, and Joel, I’ll turn it over to you to respond to the FHIR specification questions.

Sure. So there’s a question from Mike asking if I can talk diplomatically about how, or if, FHIR-based eCQM specifications can be used in lieu of QDM/CQL-based specifications, given that certain developers are trying to be forward-thinking while understanding there’s uncertainty about exactly when there will be a firm cutover to FHIR specs. It’s just hard to hear.

So right now we’re beginning to convert our own eCQMs for testing purposes, taking advantage of tools that are currently under development, such as the MAT and Bonnie on FHIR. Those are still in development, and I can’t give you a specific timeline right now for when they will be completed and ready for full transition. Our expectation is that you need to continue using QDM-based specifications, with the understanding that, as I’m sure you’re aware, we will be converting over to FHIR in the future. There will need to be some transition period in which you can use the QDM specs and crosswalk them over to FHIR specifications.

Right now we’re not in a position to accept FHIR specifications, because we don’t currently have a receiving system in place for them. Any shift in the specification requirements will be reflected not only in the annual update process but also communicated through other channels, so there’s going to be a lead-up time in which we make the public aware that we are ready to receive FHIR specifications and use them in the programs. So while it’s certainly worthwhile to think about how QDM-based specifications can be ported over to FHIR, we’re not in a position right now to use FHIR specifications within the programs.

I hope that was more helpful than my previous answer, which essentially asked for a chance to get back to you. Thank you, Joel. I want to do a last call for any pre-rulemaking questions. As a reminder, you can either raise your hand, and we can unmute your line so you can ask a question, or you can type in the chat function. If you type a question into the chat and we need to follow up with additional information, we can do that; we have all of the questions and who asked them in our Q&A.

And Meridith, I’m not seeing any new hands, and I’m not seeing any new questions in the chat. Great. Thank you all for those good questions, and thank you, Kate, for helping moderate that session. This last slide is just the contact information for the pre-rulemaking points of contact.

In addition to the email address we’ve mentioned a couple of times, MMSsupport@battelle.org, you can also reach out to the folks listed here, and we thank you again for your time. Everyone have a great afternoon.

2021-04-27 17:19