Real-time streaming in the cloud: How Azure can help your business move faster - BRK3356

Thank you for being here, and thank you for sticking around for Friday. It's been a long week, I'm sure, for all of us. My name is Dan Rosanova. I'm the lead program manager for Azure messaging services, and I'm here today to talk about one of my favorite services, Event Hubs, and how it fits into a streaming architecture in any application you're building in Azure. A lot of this talk is going to be a recap of things we've delivered over the last year, and then kind of a different way of telling the story of what the service is, how it works, and how you should think about it. We'll have plenty of time for Q&A, I believe, and then we'll talk about a few new things towards the end. Is anyone in here using Event Hubs today, or any of the streaming services in Azure? Cool. A couple of people. Good.

I've been thinking about how I want to talk about streaming and how I want to talk about these services, and I've changed my mind about it a few times over my history with it. So the first thing I thought I'd throw up is a simple definition, I think from Wikipedia, of a stream. Obviously, the first one's not really the thing we're talking about. The second one's close. The third definition is pretty spot-on, and the fourth one has nothing to do with this. So if you're here for any of the other three, I'm sorry.

When we think about a stream in computer science, and this is the Wikipedia definition, it's a sequence of data elements made available over time. This is interesting because it's not like normal data, in the sense that it is unlimited. Usually, when you look at how you process data, a function, for instance, takes a parameter, and that's some set of data. Some programming environments do give you the concept of a stream. Streams are really powerful; the UNIX shell is all built on pipes and filters, which is a similar concept.
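To make the batch-versus-stream distinction concrete, here is a minimal Python sketch. It is not from the talk: `sensor_readings` is a hypothetical, made-up source, and the point is only that a batch function needs the whole data set up front while a stream function produces an updated answer as each element arrives.

```python
import itertools
from typing import Iterable, Iterator


def sensor_readings() -> Iterator[int]:
    """Hypothetical unbounded source: yields one reading after another, forever."""
    for n in itertools.count():
        yield 20 + (n % 5)  # made-up temperature values


def batch_average(values: list) -> float:
    """Batch style: needs the whole, finite data set before it can answer."""
    return sum(values) / len(values)


def running_average(stream: Iterable[int]) -> Iterator[float]:
    """Stream style: emits an updated average as each element arrives."""
    total = 0
    for count, value in enumerate(stream, start=1):
        total += value
        yield total / count


def first_averages(n: int) -> list:
    """Take only the first n results; the underlying stream never ends."""
    return list(itertools.islice(running_average(sensor_readings()), n))
```

Calling `batch_average` on the unbounded source would never return, which is the practical meaning of "unlimited" here.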
So a big distinction here is that streams aren't just batches of data. They're not just chunks of data; they are potentially unlimited sequences of data, and that's what we're going to talk about today. To put it in a more immediate context, streaming is all around us, right? Who's familiar with this kind of stream? This was one of my hotels sometime this week, I think. I took this picture just because I thought it was a good analogy here. Stream processing, or streaming analytics, is around us too. When you get in the shower and you're turning on hot and cold water to figure out how warm you want it, you are effectively a stream processor. You are feeling the water and deciding whether you want it hotter or colder, so you're gauging the temperature and then you're actually making changes. This is actually a pretty good analogy for what streaming is, how it works in this context, and what we're going to be talking about today. It might seem a little far-fetched, but I'm going to carry this analogy for quite a while, actually.

To map this to something you might be more familiar with, think about having an application with users. They're interacting with your application; it could be clickstreams, they could be doing a lot of different things. They're creating activities that you can track, and you can see how your users are interacting with your application in real time. This is the exact same thing: here you would be taking the temperature of your users. Are they happy? Are they upset? Is it time to do a customer intervention before things go bad for them? Anyone in here interested in knowing how their customers are doing in real time? It's something we're very interested in at Azure. We don't always do it perfectly, I suppose. No one's interested in how their customers are doing? That's awesome. I know it's Friday, but... are you hiring?

Another good part of this analogy: has anyone ever been in a shower anywhere when it gets suddenly hot or cold because someone turns on an appliance, or flushes the toilet, or starts the laundry? Yeah. There's a reason for that: it's a change in pressure. You're changing the pressure in that water system, and the side effect is you're screaming in the shower, either from hot or cold. I decided not to put a photo up for this one. That's better for all of us. But we actually have solutions for this built into our streaming platform, into Event Hubs, which is our streaming platform in Azure. Again, the water analogy is the right way to think about this. We'll talk a little bit later about analytics and processing; that's attached to this, but it's not the piece I'm here mostly to talk about.

The first way we do this is with our protocol layer. We support two different protocols: AMQP, and we actually support the Kafka 1.0 protocol now. AMQP is our native protocol, and it's got a sophisticated credit system.
What this means is that when a client and a server connect, so you open a connection to a server, you are exchanging some credit information, some number, with the server, and the server is exchanging a number with you. What that gives you is the ability to say, "Okay, here is the amount you can send me before I don't want any more," and that way you won't get flooded. Or the server can say, "Here's the amount that you're allowed to send the server without flooding it." It might sound a little strange at first, but how the credit system works, basically, is that you get a number in the beginning, usually about a thousand for us, and as you're sending and the server is acknowledging, which is all happening asynchronously (you never see any of this stuff), your credits are being refilled. This way you don't get into a place where you sent a million things, none of them worked, and you don't know where you are. You can control your limit on how many things need to be retried or how many might fail. If you respect this credit system, which our clients do in all the languages we have them for now (Java, .NET Core and Standard, Python, Node, and a few others), then you will be in a steady state where you can keep producing and not have any sort of flow control problems where your producer starts backing up.

After this, we have another kind of flow control mechanism, which is called throttling. We throttle based on the capacity you've purchased from our service. That's a way that we can tell you, "Hey, your credits are fine, but you're at the limit of what you're allowed to send, what you've purchased," and then we'll start backing you off from that. First we'll starve your credits; we just won't give you credits fast enough. But if you wrote your own AMQP stuff, or made a lot of clients, you could actually step outside of the credit system and cause problems, so then we will just start throwing you exceptions. We will just deny your traffic after a while. This is running in a window. It's a pretty short window and it refreshes very quickly, so it won't be hurt too badly by spikes.
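The credit idea can be sketched in a few lines of Python. This is a simplified toy model, not the AMQP wire protocol and not the Event Hubs client: the class name `CreditedSender`, its methods, and the one-credit-per-acknowledgement refill rule are all assumptions made for illustration. The starting credit of 1000 follows the "about a thousand" figure mentioned in the talk.

```python
from collections import deque


class CreditedSender:
    """Toy model of credit-based flow control (hypothetical, for illustration)."""

    def __init__(self, initial_credit: int = 1000):
        self.credit = initial_credit
        self.in_flight = deque()  # sent, not yet acknowledged
        self.pending = deque()    # held locally until credit is available

    def send(self, message) -> bool:
        """Send now if credit allows; otherwise hold the message locally."""
        if self.credit > 0:
            self.credit -= 1
            self.in_flight.append(message)
            return True
        self.pending.append(message)
        return False

    def on_ack(self, count: int = 1) -> None:
        """The receiver acknowledged `count` messages, so credit is refilled."""
        for _ in range(min(count, len(self.in_flight))):
            self.in_flight.popleft()
            self.credit += 1
        # Use the refilled credit to drain anything that was being held back.
        while self.credit > 0 and self.pending:
            self.credit -= 1
            self.in_flight.append(self.pending.popleft())
```

Because the number of unacknowledged messages can never exceed the initial credit, a failure leaves at most that many messages in doubt, which is the "you know where you are" property described above.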
It will, however, be hurt by growing traffic over time. Then finally, as a last resort, we do have hard limits on the back ends of our servers. These hard limits we don't really advertise; we don't really tell you about them. If you get a dedicated cluster, we will tell you more about them and you can set them. But this is a way that, even if you ignore all of this, even if you do bad things, we won't actually hurt our servers, so we can have survivability of our service even in the face of something like a DDoS. So these are three ways that we regulate flow control and pressure in our system.

But that's only a piece of it. That's us. You need to think, when you're building any sort of streaming pipeline... I mean, if you're really here to talk about messaging and the actual pipes themselves, I think that's great, but it's probably not reality. You probably want to do something. You probably want to get that data from someplace you're collecting it to some dashboard or some sort of analytics, or you're making decisions. So you need to think in a full-system sense, and rather than just the sink, you need to think about the plumbing in your house. Has anyone ever seen this happen in a sink or a bathtub or a shower, water backing up in it? Yeah, that's a common problem. I bought a house recently; now I have to deal with all this stuff myself. This is back pressure. This is the exact same thing that happens in streaming systems: you get back pressure, and back pressure is a result of higher pressure in the system than in the supply for that system.

For instance, in that picture I turned on the sink and just left it on for a long time, 30 seconds or something, and it started to fill up. There's too much water coming in and not enough water draining out, so it's an imbalance. You could think of that sink as your topic, or your event hub; your stream is getting back pressure.

Back pressure in data pipelines tends to show itself first in latency, and latency will pop up in a lot of places. It might be latency in sending: you can't send as fast as you want to for some reason, and you might not be able to figure out why. It might be latency in reading: you can't read fast enough. Or it might be a discrepancy between the two: you're sending a lot of data, but what you're reading is old, and you can't really figure out why. All of these things are symptoms of back pressure somewhere in your pipeline, so they're things you need to be aware of. The danger is that any stream can only hold so much. You could think about something like an event hub, or even a queue or Kafka or something else, as a bucket. You're putting water into this bucket, and sooner or later that bucket's going to fill and the water is going to overflow, and what that will show up as is data loss. You will not be able to read the data fast enough before it expires. If you have a one-day retention of data, it's a one-day buffer, and if you're writing too much and can't read it fast enough, you will eventually lose data. In a lot of streaming systems, the best part is you won't see that you're losing it. You won't know. So that's one thing to be aware of for streaming systems of all sorts.

Thinking back to one of those flow control mechanisms, we use throughput units. This is the thing you actually purchase from us most of the time.
A throughput unit is an allowance to send one megabyte per second or a thousand messages, and to read two megabytes per second or four thousand messages, just so we can support small-message workloads. It includes 84 gigs of data per day. So this is the thing you purchase. You purchase it for three cents an hour, and you can purchase a lot of them.

We actually have a feature to alleviate back pressure, and this feature is called Auto-inflate. It's been out for a while, but we haven't turned it on as the default; I think next week or the week after it will be the default. If you look at this portal picture, you can see it's got the throughput units. It's a slider; you pick how many you want. The first problem people usually run into with our streaming platform is that everything's going fine for months and then they hit that limit of 1,000 messages a second. Three or four months later they start getting errors and they don't know why. What Auto-inflate is going to do is let you set a policy to grow your stream one megabyte at a time, so one pay increment at a time, up until some limit that you set. If you say, "I only want to pay 30 cents an hour," you can set it to 10 and you're done at that point.
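The throughput-unit arithmetic can be sketched as follows. This is a rough illustration only: the per-unit ingress figures (1 MB/s or 1,000 messages/s) come from the talk, while the function names and the rule that the policy only grows and never shrinks are assumptions of this sketch, not a description of the service's implementation.

```python
import math

INGRESS_MB_PER_UNIT = 1         # from the talk: 1 MB/s per throughput unit
INGRESS_EVENTS_PER_UNIT = 1000  # from the talk: 1,000 messages/s per unit


def throughput_units_needed(mb_per_sec: float, events_per_sec: float) -> int:
    """Units needed for a send workload: whichever limit is hit first wins."""
    by_bytes = math.ceil(mb_per_sec / INGRESS_MB_PER_UNIT)
    by_events = math.ceil(events_per_sec / INGRESS_EVENTS_PER_UNIT)
    return max(1, by_bytes, by_events)


def auto_inflate(current_units: int, mb_per_sec: float,
                 events_per_sec: float, max_units: int) -> int:
    """Hypothetical policy: grow toward what the load needs, capped at max_units.

    Assumption: the unit count only grows here; it is never reduced.
    """
    needed = throughput_units_needed(mb_per_sec, events_per_sec)
    return min(max_units, max(current_units, needed))
```

Note how a small-message workload (say 0.2 MB/s but 1,500 messages/s) is limited by the message count rather than by bytes, which is the situation described above where a stream runs fine for months and then starts returning errors.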

So this is kind of a way we're alleviating back pressure within your stream. We're making the sink empty quickly here, but you have to remember it's emptying into something else, and we'll talk about that a little bit.

Real quick, I want to talk a little bit about the difference between queues and streams. Has anyone worked with any messaging queues in their history? Yeah. Anyone ever use email? Yeah, that's a messaging queue of some sort. I'm not going to talk about queues today, but the very basics are that you have something that's sending messages to a queue, and the queue is acknowledging them and saying, "Hey, I have your message." The recipient comes up and reads the message from the queue, takes a lease or a lock on it, and then decides that they're done and sends the acknowledgement back, which completes the message, and now the message is out of the queue. This is pretty similar to the computer science queue you would think about. It's subtly different, but you push things onto it and you pull things off of it and they're gone. That's how a queue works.

Logs are not that way. Streams are not that way. When you look at how streams work for data processing, when you look at any of the big streaming platforms today (Event Hubs, Kafka, even AWS Kinesis), it's really a log. So what is a log? It's an ordered sequence of records, it's append-only, and it's stable, meaning it's immutable. You can't change the records that are on there; they just keep being written to it. This actually gives us some advantages over a queue in certain scenarios, in streaming scenarios in particular. For one, it's a client-side cursor. Instead of just asking, "Give me the next message," you connect and you say, "I want message four and forward." So you start at a point and then you move the cursor forward. The other is that they're non-destructive reads.
Non-destructive reads can be really powerful in data pipelines, because you don't want to keep losing that data, and you don't necessarily want to keep replicating it; the cost of doing that is going to be pretty high. The other thing to know, since these are immutable and stable, is that you're getting order within this log. Item number six will always be item number six and will always be followed by item number seven, no matter how many times you reread it. So you do get order. This is a very simple idea of a log. All of us, if we are in this industry, have seen logs of some sort or another.

What is popular now, and what Event Hubs does, is move this to a partitioned log. We have multiple partitions. They're all independent, so they're still independent logs, and they have all the characteristics I just talked about. There's ordering within those partitions, not between them, though. This actually solves a few other problems. When you have only one partition, only one queue, that becomes a resource that you're fighting over, kind of like a lock on a database table. Eventually a relational database is going to hit a scale limit where you're fighting more for the resource that is the lock than you are actually doing work. This is the way around that: it's the partitioned consumer. This is how we solve the competing-consumer problem, where everyone's fighting for the resource. This way you would connect to and read all of the partitions.
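The log properties described here (append-only, ordered, non-destructive reads, client-side cursor) can be illustrated with a small in-memory Python sketch. `Log` and `Reader` are hypothetical names invented for this example; they are not Event Hubs or Kafka APIs.

```python
class Log:
    """Toy append-only log: records are never changed or removed by reads."""

    def __init__(self):
        self._records = []

    def append(self, record) -> int:
        """Add a record at the end and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def read_from(self, offset: int, max_count: int = 10) -> list:
        """Non-destructive read: returns (offset, record) pairs, removes nothing."""
        end = min(len(self._records), offset + max_count)
        return [(i, self._records[i]) for i in range(offset, end)]


class Reader:
    """Each reader owns its cursor; the log keeps no per-reader state."""

    def __init__(self, log: Log, start: int = 0):
        self.log = log
        self.cursor = start

    def poll(self, max_count: int = 10) -> list:
        """Read from the cursor onward and move the cursor forward."""
        batch = self.log.read_from(self.cursor, max_count)
        self.cursor += len(batch)
        return [record for _, record in batch]
```

Two readers starting at different offsets see the same records in the same order, and a brand-new reader can replay everything from offset 0, which a destructive queue cannot offer.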

Reading in parallel, what this really lets you control is your degree of parallelism: how much parallel reading do you want to do, how much parallel processing do you want to do? People mistakenly think that partitions are about throughput. In a way they are, but not in the stream; the throughput limit is always going to be your processing. One cool feature you get from this is data locality: you can actually pin data to a specific partition with a partition key. How this works is that you send your data and we just hash the key. If you tag something with A, it goes to partition 0; if you tag something with C, it goes to partition 3. This is a stable hash, so it'll always be the same on these partitions, and that's kind of how this works.

So why would you do this? Why do you want data locality? The first reason is because everyone likes to be with their friends, right? That's always a good reason, I guess. But a little bit more than that: when you're doing computation on that data, you're thinking about proximity. If you have multiple servers reading from these multiple partitions, and that's the whole idea of a partitioned consumer, you can scale out with multiple readers and have more compute working. Now, if you need to join across these, the painful part is that your compute units need to talk to each other. They need to be able to share data between each other, or everyone needs to read from every partition, which puts you back in the place of having just one stream, one partition, in the first place. You've not solved any problems there. So even if you're using a partitioned log or partitioned stream like Event Hubs or Kafka, if you're not careful with this, you're going to just push the problem further downstream.
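A stable key-to-partition mapping can be sketched like this in Python. The hash used here (SHA-256 modulo the partition count) is an assumption chosen because it is deterministic across runs; it is not the hash the service uses, so the partition numbers it produces will not match the A-to-0 and C-to-3 example from the slide.

```python
import hashlib


def partition_for(key: str, partition_count: int) -> int:
    """Map a partition key to a partition with a stable (deterministic) hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def route(events, partition_count: int) -> dict:
    """Append each (key, value) event to the partition its key hashes to."""
    partitions = {p: [] for p in range(partition_count)}
    for key, value in events:
        partitions[partition_for(key, partition_count)].append((key, value))
    return partitions
```

Because the same key always lands in the same partition, all events for that key stay in one ordered log, so a single reader can process them without talking to the readers of other partitions.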
The drain will still clog; it will just be something even further down, which is probably even messier to deal with. So data proximity is important. The way to think about that, really, is this: if you need to read across multiple partitions, most processing frameworks will let you do it, and some of them do it invisibly, or kind of invisibly, like Spark or Stream Analytics. But you are paying a cost for that.

Keep in mind that partitioning is really about downstream throughput. Like I said, it's not so much "How many megabytes per second do I want to send through my event hub?" It's "How many megabytes per second can I read per compute unit that's reading from this stream?" If you're sending to a SQL database further down, you have to acknowledge how much you can send into that SQL database. So that's the thought of the day right now. Just think about that.

The next thing I want to talk about, while I'm on this subject of partitioning, real quick (we'll get back out of this in a second; I'm sorry, this one's a little bit funny): is anyone familiar with the CAP theorem? Yeah. The CAP theorem was actually taught after I was in university, so a few of us in this room are probably in that boat. Or was defined, I guess. The CAP theorem is a theorem by a computer scientist who stated that it's impossible for any distributed system to simultaneously guarantee consistency, availability, and partition tolerance. When he did this work he was talking about network partitioning, but any distributed system is always subject to network partitions. So this gives you a choice that you have to make when you're thinking about whether you want that data proximity or not. You have to think about what's more important to you. You have to choose between consistency and availability, and only one of these things is possible. This is the choice you're making, so be aware of it. Sometimes you do need to make that choice; other times you don't. This is not unique to Event Hubs; the CAP theorem is a computer science concept. So be aware of it, be mindful of it, and make correct choices.
If you're less of a computer science person and more of a literature and English person, I guess the question is "to key or not to key," right? Think about that and be aware of it. Just know what decisions you're making; don't accidentally fall into them. Our default for Event Hubs, as well as the default for Kafka, is to just send across all partitions. If you do that, you'll get a good distribution of data and you won't get any hot partitions. You won't get order, but at least you'll get good availability. On our service, if a single partition is down and you write to us, we will write to the next partition. We don't even try for one that's maybe doing load balancing or something like that.

So now, back to some reality from this. You have this stream. It's great; it solves a bunch of problems for you. How do you want to process the data that's in this stream? There are really three ways to think about how to do this, and it comes down to what tools you want to use. The first is: do you want to be code-free and serverless? Do you want something that, just like Event Hubs, is really just a service that's going to run without you doing much with it? Do you want to use frameworks you're already familiar with, things like Spark? Or do you want to write custom code? Those are the choices you have to make. They're all valid choices under different circumstances, but I show them this way because I think the vast majority of cases can be solved through a service-based approach, which I'll talk about next. After that you get to the cases that filter you down to more and more work you're going to have to do, but more and more control you're going to get as you get there. So, Stream Analytics: is anyone using Azure Stream Analytics today?

If you're using Event Hubs, you should at least look at it. It's a very good service. It gives you a declarative SQL language. We'll actually look at Stream Analytics a little bit later, just a little bit. There was a good session about it on Wednesday that I think was recorded. The idea here is that it's out of the box and quick to go. It's directly integrated with Event Hubs. You can get started with this in about two minutes if you're doing something simple. It's SQL-based, so something like SELECT *, with a declarative language. One cool feature is that it's available on the edge: there is an edge runtime for it, so if you're doing IoT stuff, it works on our IoT Edge. Just like Event Hubs, it's a pay-as-you-go service, it's PaaS, and you can scale it in place. It does have an SLA, just like most Azure PaaS services.

After that, you would think about processing frameworks. If you want to use existing frameworks, there are a lot of them out there, and a lot of cool ones in the open source space. Apache Spark is a good one, and you have a choice to either run that yourself or use Azure Databricks for it. Databricks is a cool service for Spark in Azure. It's very slick, and it's integrated directly into all of our toys. You could also use other things like Storm or Flink that you can run in HDInsight or on your own compute. You can either use our services for these, or you can just run them yourself in generic IaaS, or VMSS, or Kubernetes clusters, which is what I see a lot of now for people doing stream processing. That's actually a talk I'm going to give next week, but if you're interested in that, we can chat about it at the end.

The last option is writing your own code. This is actually where we started four years ago with this service: telling people to write their own code.
The code's not that complex. This is real code for sending to an event hub; I have this running on my computer right now. This code is trivial. It's not really doing much, it's just sending pretty static data, but what you can see is that all you're doing is creating a client from a connection string and creating a message. That message is some JSON in this case. I'm adding some properties just to show that you can do it, and then you call send. Whenever you're dealing with our services, you should always use our async operations. In our clients, even if you call something synchronous, it's async behind the scenes, so the only one blocking is you. Every major language has some async support right now. For .NET it's pretty easy: you can just use tasks and await. For Java it's similar: you just use CompletableFutures, which I'll show in a second too. If you really want to get the most performance when you're sending async, you want to be in a loop where you say, "I'm going to send up to this many messages," and then await all of them. A dangerous thing to do is just call SendAsync and not wait, not wait ever, because if you sent a million things, they're just queuing up locally. It gets to that local back pressure I talked about, and then when things start throwing, you'll have a difficult time telling where you were when things failed.

Receiving is actually pretty easy too. This is real code for receiving; this is also running on my computer. The way this works is we have an agent. We have it in all the major languages we support, and it looks the same in all of them. It's called Event Processor Host. You create it somewhere in your application, host it wherever you want, and you give it your event hub name and its SAS connection string.
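The sending advice in this part of the talk (send up to a fixed number of messages asynchronously, then await all of them before sending more) can be sketched with Python's `asyncio`. The code shown on the slide is not in this transcript, so this is not a reconstruction of it: `fake_send` is a hypothetical stand-in for a real client's async send call, and the window size is an arbitrary assumption.

```python
import asyncio


async def fake_send(message: str, fail_on=frozenset()) -> str:
    """Hypothetical stand-in for an SDK's async send; fails for chosen messages."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    if message in fail_on:
        raise RuntimeError(f"send failed: {message}")
    return message


async def send_in_windows(messages, window: int = 100, fail_on=frozenset()):
    """Send at most `window` messages concurrently, then await them all."""
    sent, failed = [], []
    for start in range(0, len(messages), window):
        chunk = messages[start:start + window]
        # gather() waits for the whole window; exceptions are returned, not raised.
        results = await asyncio.gather(
            *(fake_send(m, fail_on) for m in chunk), return_exceptions=True
        )
        for message, result in zip(chunk, results):
            (failed if isinstance(result, Exception) else sent).append(message)
    return sent, failed
```

Since every window is awaited before the next one starts, a failure can be traced to specific messages, instead of a million sends queuing up locally with no record of where things stood.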

Along with the connection string to your event hub, you give it a storage account; we do use a storage account, which I'll talk about in a second. Then you implement this interface, IEventProcessor. I've implemented it here. It has four methods. I'm hoping everyone can guess what those methods do, so I won't really explain them, but you can see the important one here, which is ProcessEventsAsync. It gives you a partition context, so you know which partition these events came from, and then it gives you an enumerable of messages, so you're getting a collection or a stream of messages. You can control things like the maximum size you want that to be through options on the processor when you register it.

How this looks at runtime is pretty slick. Four years ago, four and a half years ago, when this was new, I was super impressed by it. I still am, to a large extent. The reason it was very impressive is that orchestrators have come a long way in that time. Four years ago there weren't a lot of quality orchestrators for distributed computing. They were all kind of peer-to-peer based, and some, like ZooKeeper, were heavier weight than others or harder to run. When you run an Event Processor Host, this is exactly how it works. Say you have a virtual machine and you're running it. It's going to look at your event hub, see how many partitions you have in it, and then create an instance of your processor class for each partition. If you're the only one running, it will get all of them. It's going to use the blob storage account as a lease mechanism, so it creates blobs for each of the partitions.
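The runtime picture described here, one processor instance per owned partition with ownership spread across whichever hosts are running, can be sketched as a toy Python model. This is a deliberate simplification: the real library balances ownership through blob leases over time, whereas `balance_partitions` below simply deals partitions out round-robin. All names are hypothetical.

```python
class PartitionProcessor:
    """Hypothetical stand-in for your processor class: one instance per partition."""

    def __init__(self, partition_id: str):
        self.partition_id = partition_id
        self.handled = []

    def process_events(self, events) -> None:
        """Handle a batch of events that all came from this one partition."""
        self.handled.extend(events)


def balance_partitions(partition_ids, hosts) -> dict:
    """Spread partition ownership as evenly as possible across hosts."""
    owners = {host: [] for host in hosts}
    for index, partition in enumerate(partition_ids):
        owners[hosts[index % len(hosts)]].append(partition)
    return owners


def start_processors(owned_partitions) -> dict:
    """What a single host does: create one processor per partition it owns."""
    return {p: PartitionProcessor(p) for p in owned_partitions}
```

With one host, that host owns every partition. With more hosts than partitions, the extra hosts own nothing, so adding them does not add any parallelism.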
It uses blob storage leasing to make sure you have a lock on that, so no one else is reading it, and it also writes your checkpoints. You might have seen in that previous code that I actually wrote a checkpoint at the end. This last piece, writing that checkpoint, is the client-side way of saying, "I know where I am now. I don't need to go back to the beginning of the stream. I'm acknowledging that I'm at position X." So checkpointing is your responsibility in a client-side cursor world. We make this fairly easy.

The other thing we make easy with this library is that if you add more nodes, say you have two VMs, then we'll actually auto-scale between them. The whole leasing idea is that every 30 seconds there's a lease check going on against that blob, and if you get more and more VMs standing up, they will start to fight for those leases and you'll eventually get a pretty even distribution. The important thing to remember here is that there's no point in having more VMs than partitions. That doesn't make sense. It's okay to have fewer; it's not really okay to have more. So that's your degree of parallelism: if I have four partitions, I can have up to four VMs. I can make them very big VMs, but having eight VMs is not going to help.

No matter what you choose, you have to keep back pressure in mind. Back pressure is going to build somewhere in your system. Sooner or later, at scale, something is going to break, and you just have to be aware of where it's going to break first. Think about trying to load something like a data warehouse. Data warehouses don't really like being told to load a hundred thousand things a second. They're very happy being told to load millions of things in a batch, like a bulk load operation, and that's the way to attack that problem.

So, are there many electrical engineers in the room? Oh, this joke's going to fall flat. Never mind.
Just think about resistance.

The other thing I want to talk about while I'm here is partitions: choosing how many partitions you really need. It's very tempting to say more is always better. That's not always the case; sometimes it is, sometimes it's not. Think about cases where more might not be better: more cholesterol, or calories, or more taxes, I don't know. They might be okay, they might not. More is not always better, because of that whole idea that you have to read from all those partitions. If I have 30 and you're never going to have more than four machines, that's not really going to make a lot of sense.

Real quick, I want to talk briefly about what people actually do with streams.

This is a cool case I'll just touch on. They've published a lot of cool stuff themselves already. There is a company called Mojio. They do connected-car and location-based services. This is stuff where you can set boundaries on where your kids can take the car, or the times they can use it, and things like that, and they'll give you real-time alerts when those get violated, or real-time tracking. The challenge here is that they need to match a lot of different streams of data in real time. It's not really useful to know that your son or daughter went somewhere they weren't supposed to yesterday. I mean, I guess it is, but there's no proactive action at that point. So what they do is they have a rich API and application front end running on NGINX. They're using Event Hubs as the real-time stream, they're doing some processing behind it in Service Fabric, and then throwing a lot of this into Hadoop and doing further pattern processing on it after the real-time processing. So this is a really cool use case of someone actually using this. They do this at tremendous scale.

We should be fairly familiar with streaming right now. Everyone comfortable with the streaming analogy, with the water analogy? Everyone like that? Okay. Well, how about when you don't want to take a shower? How about when you want to take a bath? You could, I guess, try to take a bath one cup at a time. That probably wouldn't be very useful. This is a nice place in Kyoto that my wife and I went to a few years ago. The Japanese have a great culture of outdoor bathing. If you want to take a bath, that's a different approach to processing the stream, right? You don't necessarily want all the individual pieces in it; you want them collectively, together. This is another thing we're giving you an answer for in Event Hubs: Capture.
Capture is a feature on Event Hubs where you can have a batch on top of your stream. Some frameworks, like Spark, which I mentioned, will do this for you by reading into a buffer, holding that buffer in memory, and then doing some processing with it; maybe you only want data every 10 minutes. We actually built this into the service. It's policy-based and you use your own storage: we push to your own storage. We use the Avro format. We chose that because most of the big data tools already speak Avro, already understand Avro, and it's safe for us to carry whatever type of payload is in the message, whether it's JSON or CSV or anything else.

The really cool part is that we actually raise Event Grid events off this. Anyone familiar with Event Grid, or heard of it? It's another service in the messaging portfolio, and this is a good example of it. It's a service designed to give you notifications when other things happen in Azure, so you can take reactive measures. In this case, when a capture event occurs, meaning some file was created with your stream data in it for the time window you specified, you're then able to use other Azure resources, like Functions or ACI or something else, to go and process that batch. Because processing a batch is not the same thing as processing a stream, and I was very happy that the Wikipedia article even said that.

There are some other cool aspects to this. This does not impact the throughput of your stream, so it's not going to slow your stream down to use it. This happens on the back side, so there's no impact on your stream at all. And you can offload any heavy batch processing you have from your real-time stream, so that your real-time stream stays fast.

Fast is almost always expensive. I've yet to see a place in the world where fast is cheaper than not fast. I think that's true. I was thinking maybe cruises or something, I don't know, maybe planes are cheaper. But usually fast is more expensive, and so saying "I'll do everything fast" is kind of a naive way to approach data streaming or data processing. I pushed that for years; actually, there's someone in the audience here who I used to push real-time stuff with all the time. I've learned since then that there is still batch, there is still slow. I've acknowledged that my last paycheck will probably be cut in a big batch, and my death certificate will probably be processed in a batch as well, probably on an AS/400. And that's just life.

Setting up Capture is super easy. We actually might have some time, so maybe later I'll walk through the portal and show you this stuff. This is our portal for Event Hubs. I've gone into a namespace here and clicked into a specific topic, and there's a little icon under Features for Capture. You turn it on, and you can choose your time and size window: how many minutes or how many megabytes you want before the capture feature kicks in. Then you pick a storage provider (let's go back to that); it can be Azure Storage or it can be Data Lake. You pick the container in that storage provider that you want us to write the files to. And then, finally, you pick a format for how you want the files named.
The things in the curly braces are macros. We do want all of those, but you're free to put them in any order you want. This drop-down has a bunch of different examples, and you're also free to put whatever text around them you want, so you can come up with your own naming strategy for how you want these files written.

How Capture works is this. You have your event hub (we kind of saw this earlier) and it's got the data in it. You've given us a storage account, and you've set up a time and size window. The first trigger that happens, the first thing across the finish line, whether it's time or size, is going to trigger files being written on a per-partition basis. We'll keep writing those, and now they're in your storage. You can keep them forever if you want, like a golden copy. You can do things in Azure Storage like set a policy so they'll age off on whatever schedule you want. You can use a cool storage account if you want them to be cheaper to store for a long period of time. So there are a lot of cool features integrated into storage here that make this a good story.
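The two Capture rules described here (every macro must appear in the file name format, in any order, and a file is written when either the time window or the size window is reached first) can be sketched in a few lines of Python. This is an illustration only, not the service's implementation: the macro names, the zero-padded date parts, and the function names `missing_macros`, `expand_name_format`, and `should_write_file` are assumptions made for the example.

```python
import re
from datetime import datetime, timezone

# Macro names assumed for illustration ("the things in the curly braces").
REQUIRED_MACROS = ("Namespace", "EventHub", "PartitionId",
                   "Year", "Month", "Day", "Hour", "Minute", "Second")


def missing_macros(name_format):
    """Return the required macros that do not appear in name_format."""
    present = set(re.findall(r"\{(\w+)\}", name_format))
    return [m for m in REQUIRED_MACROS if m not in present]


def expand_name_format(name_format, namespace, event_hub, partition_id, when):
    """Expand each {Macro}; macro order and surrounding text are up to the caller."""
    missing = missing_macros(name_format)
    if missing:
        raise ValueError("name format is missing macros: " + ", ".join(missing))
    values = {
        "Namespace": namespace,
        "EventHub": event_hub,
        "PartitionId": str(partition_id),
        "Year": f"{when.year:04d}",
        "Month": f"{when.month:02d}",   # zero padding is an assumption
        "Day": f"{when.day:02d}",
        "Hour": f"{when.hour:02d}",
        "Minute": f"{when.minute:02d}",
        "Second": f"{when.second:02d}",
    }
    # Unknown {tokens} are left untouched rather than raising.
    return re.sub(r"\{(\w+)\}",
                  lambda m: values.get(m.group(1), m.group(0)),
                  name_format)


def should_write_file(elapsed_seconds, buffered_bytes, window_seconds, window_bytes):
    """First trigger across the finish line wins: the time window or the size window."""
    return elapsed_seconds >= window_seconds or buffered_bytes >= window_bytes
```

Because the check runs per partition, a quiet partition would typically be flushed by the time window while a busy one is flushed by the size window.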

All of that keeps your stream from being so ephemeral and going away so quickly. Is anyone using Capture? No? It's worth checking out. It's a cool feature.

Next I want to talk a little bit about securing your stream. Who in here cares about security? Yes, okay, a few hands.

A few things I wanted to throw out. First, everything in all of our messaging services, but Event Hubs in particular, is always encrypted, all the time. All of our messaging services require TLS or SSL, one or the other. We do not let you do plaintext communication with our services, so you are always encrypted in transit. And we use server-side encryption in our storage, so it is always encrypted at rest; there's no data exfiltration or leak from disks or anything like that. So you're covered there. If you're very interested in bring-your-own-key and things like that, those are features we'll bring to our dedicated tier, which I'll talk about in a little bit.

There's also managed service identity. Is anyone familiar with managed service identity yet? This one's been sneaky. A couple of people. This is actually probably my favorite feature in Azure, all up, that's been happening over the last year. You saw earlier in my code example that I had a connection string. If I had scrolled over enough, you'd have seen a key in that connection string, and that's a secret; it's a way to access that resource. That's not necessarily good, because you have to be careful where you store secrets. Managed service identity is a way to end that. It gives you AAD identities, so you can have your compute, your resources, run under an identity in your Azure Active Directory, and then you can grant that compute access to different resources in Azure.
When you do that with something like App Service or VM scale sets, both of which support this, the connection string you give is just the DNS name. You don't have to put the key in there, because our client will see that and know to use this identity. This is in preview now and is going to be GA soon. We have samples for it up on our docs site, which will be linked at the end of this. It's an awesome feature for really getting good security. The best part is that this is doing key rotation, all the security stuff, behind the scenes between AAD and the service that has the identity; you don't have to do any of it yourself, and you can still cut off access and things like that.

When you set this up, you literally just go into our portal, right where I was (I'm not going to go there now). You go to Access control and hit the plus, and it brings up which role you want to assign. These are the RBAC roles, the same roles you assign for users in the Azure portal, and now you can assign them to service principals.

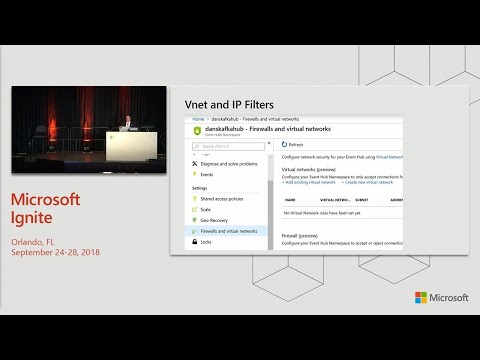

This way you can assign exactly which service principal you want to have what access. You get the same rights that we have already, which are send, listen (I guess we call it), and manage. Those are the operations you get to grant on a per-identity basis, and it works with groups as well.

Next for securing, I want to talk a little bit about VNets and IP filters. Anyone using VNets in Azure at all? I would expect all hands, actually, so I'm surprised. Maybe you're just tired. One thing we did recently, over the summer actually, is we added VNet service endpoints. Now you can make your event hub available only to resources within a VNet. When you turn this on, you're actually turning off public internet access to that endpoint, to that event hub, and now you can only access it through your own VNet. You can have multiple VNets, and you can set the subnets for them. So this is a very cool way to have control if you have existing infrastructure.

Beyond that we also have IP filtering. IP filtering is for situations where you aren't necessarily using VNets but you want to be able to filter public internet traffic. This is a policy-based setup as well; we like policy stuff. You set a filter name and an IP range (it's a mask, so you could do something like 1.2.3.4.5.6; that's not valid, but whatever), and then you decide whether that rule is going to accept or reject. You can have ten rules, and the way it works is that the first rule that matches fires the decision. So if you put a reject on 0.0.0.0, nothing will get in. Don't put that one first if you plan to use this.

The next thing I want to talk a little bit about is monitoring: monitoring your stream. There are a few ways to monitor in here. We have pretty cool portal-based metrics, which I'll probably show in just a second. I have a picture of them, but I'll probably show them live as well.
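To make the first-match behavior of the IP filter rules concrete, here is a minimal Python sketch. It is not the service's code: the rule tuple layout, the `evaluate` function, the CIDR notation for the mask, and the default of accepting traffic when no rule matches are all assumptions for illustration.

```python
import ipaddress


def evaluate(rules, source_ip, default="accept"):
    """Return (action, rule_name) for the first rule whose range contains source_ip.

    rules is an ordered list of (name, cidr_range, action) tuples.
    The fallback when nothing matches is an assumption, not documented behavior.
    """
    address = ipaddress.ip_address(source_ip)
    for name, cidr_range, action in rules:
        if address in ipaddress.ip_network(cidr_range):
            return action, name  # first match decides; later rules are never consulted
    return default, None


# A reject-everything rule placed first shadows every rule after it.
bad_order = [
    ("block-all", "0.0.0.0/0", "reject"),
    ("office", "203.0.113.0/24", "accept"),
]

# The same two rules, with the specific accept ahead of the catch-all reject.
good_order = [
    ("office", "203.0.113.0/24", "accept"),
    ("block-all", "0.0.0.0/0", "reject"),
]
```

With `bad_order`, a caller at 203.0.113.7 is rejected by `block-all` before the `office` rule is ever reached, which is the ordering mistake the talk warns about; with `good_order`, the same caller is accepted.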
The portal-based metrics are right there when you go to our overview page. This view is namespace-wide traffic. If you scroll down, you'll see your individual event hubs (in Kafka you would call them topics), and you can click into them. You get to pick what time range you want to see and what sort of metrics you actually want to see.

If you want to go deeper and be more integrated with a wider monitoring platform, Azure Monitor supports Event Hubs, and Event Hubs supports Azure Monitor. Literally, actually, because in the background all of our telemetry is going through Event Hubs anyway. Your event hubs publish metrics that go through Azure Monitor's pipeline, and then you're able to get those metrics. There are a lot of metrics in here; I'm showing just incoming bytes. You can set alerts on these metrics, and the cool thing with Azure Monitor is that you can use the same kind of alerts for any service: CPU utilization, memory pressure, things like that, and here you can alert on megabytes. So you can check, for example, whether no one is reading your stream.

There are outgoing bytes as well, so a really good alert is: hey, if my outgoing bytes are zero, something's really wrong, there's a problem. That's a really good alert to have.

Last but not least, we do have code-based metrics. When I say that, I mean, there's an API for all those other metrics if you want, but these are inside-out monitoring: metrics that are available within our clients. In this case I'm showing some for the lowest-level reader we have. I didn't show it today, but it is there: the partition receiver, which is the thing that actually connects to the partition and does the reading. It has runtime info metrics on it, so you can see things like the last enqueued offset, that is, the last offset that was written into this partition, what time it was written at, and what its sequence number was. The offset is literally just a storage location, so it's just going to grow. The sequence number is a monotonically increasing number, so it gives you a way to see how many messages are in the backlog, not just how big it is. You can also see the time at which this was called, so you can tell what the drift is. When you get a message, you have the current offset and sequence number in it, and you can use this to see how far behind you are right now, from within the reader's process. So you can do both outside-in monitoring, like Azure Monitor, and inside-out monitoring, like this type of code-based monitoring.

Next I want to talk a little bit about planning for disasters and being prepared for them. First and foremost, Event Hubs is already HA. People ask about high availability: Event Hubs is HA. It runs on Service Fabric clusters, and this gives us a lot of availability for the platform.
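The inside-out lag calculation described above comes down to two subtractions. The Python sketch below shows the idea under stated assumptions: `RuntimeInfo` and `backlog` are hypothetical names that only mirror the fields mentioned in the talk (last enqueued sequence number, offset, and time, plus the time the info was retrieved), not the actual client library types.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class RuntimeInfo:
    """Hypothetical mirror of the partition receiver's runtime info."""
    last_enqueued_sequence_number: int
    last_enqueued_offset: int
    last_enqueued_time: datetime
    retrieval_time: datetime


def backlog(info, current_sequence_number, current_enqueued_time):
    """Return (messages_behind, time_behind) for the message just read.

    Sequence numbers increase by one per message, so their difference counts
    messages; the enqueue-time difference shows how stale the reader is.
    """
    messages_behind = info.last_enqueued_sequence_number - current_sequence_number
    time_behind = info.last_enqueued_time - current_enqueued_time
    return messages_behind, time_behind


now = datetime(2018, 9, 28, 12, 0, 0, tzinfo=timezone.utc)
info = RuntimeInfo(
    last_enqueued_sequence_number=1_000_500,
    last_enqueued_offset=734_003_200,
    last_enqueued_time=now,
    retrieval_time=now,
)
behind = backlog(info, 1_000_000, now - timedelta(seconds=42))
```

A reader could log `behind` periodically or compare it to a threshold; the offset is left out of the calculation because it is a storage position rather than a message count.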
Any one server going down is not going to hurt us. A number of servers going down is not going to hurt us. For standard and basic, you're in a very large cluster with a lot of machines, and there's a lot of durability in there.

We do actually have availability zones available now, in the three regions (it's Central, France, and somewhere else) that have AZs fully available to the public. When you're creating your event hub, there's an option to decide whether you want to deploy it into an AZ. Anyone familiar with AZs, availability zones? Yeah, there's probably a cool talk this week about them. They're cool; it's a great feature. I put the fire icon on here because it's really meant to solve things like fire or electrical or network problems, because we have redundancy for all of those between the zones.

And then, last but not least, we actually do have a geo-DR feature already within our service. That is a feature you can use for a bigger disaster. When I say a bigger disaster, what do I mean? I really mean something like this. Or maybe, more precisely, an earthquake; I guess Godzilla might only attack one data center, not three. But an earthquake would certainly cause a regional outage, or potentially a hurricane or something like that. So these are really big disasters.

When you're doing disaster recovery, it's not so much about the same aspects as HA. Everyone familiar with the difference? Actually, I like this conference because people here, as opposed to Build, tend to know more about HA and DR, and more about RTO and RPO. A lot of developers don't seem to understand those terms. A lot of people who actually run services, a lot of infrastructure people, live and breathe that stuff.

For us, this is a feature you can set up in our portal as well. There's a cute little icon for geo-recovery. When you don't have it set up, you'll see this piece on the left with a button that says Initiate pairing. What you do there is pick a region, whatever region in the world you want, and pick a name for the namespace that's going to be your secondary namespace. Then you pick an alias. The idea behind an alias is that it's a region-less DNS name, and from that point forward you should use that DNS name to communicate with your event hub. We do have a feature in our CLI and PowerShell for you to upgrade your existing namespace name into the alias, so that you can set up a pairing without having to change anything, and it'll just work. There will be a few minutes of downtime while we flush the DNS and redirect everything, but that way you can do an in-place change.

Like I said, you can set this up between any two regions you pick; it's up to you. And, importantly, you control the failover. You can come into the portal and kick off a failover, or you can do it through CLI or PowerShell. It's really just an ARM command, so anything that speaks ARM, which is most of the APIs and most of the SDKs, can trigger this. We don't do it automatically, because DR is not a trivial thing.
When you're doing a DR, you're probably having to coordinate a lot of things to move. If a data center, if a whole region of Azure, is really down, you probably have to move your compute and move other stuff, and this way we're letting you coordinate that together. That's why it's a good idea to use CLI or PowerShell or ARM templates for this sort of thing: you can spin up your DR all at once. Anyone using geo-DR? Anyone? That's my favorite feature. Actually, this next one might be my favorite feature. Sorry, geo-DR.

Anyone familiar with Apache Kafka? Anyone heard of it? Yeah. Apache Kafka is gaining a lot of popularity. It is an open-source project from the Apache Software Foundation, and it's very similar to all the things I described. It is a distributed log, it works the same way, and it's partition-based. At its core, what it works on is a concept just like Event Hubs, so it looks exactly the same. It has grown over time.

Kafka has added more stuff to it over time, and we've actually come to embrace it a lot. What we did in May was add the Apache Kafka 1.0 protocol to Azure Event Hubs. So now, instead of speaking to us through AMQP, which is an OASIS and ISO/IEC standard, you can use Apache Kafka clients to speak with us. This is pretty powerful, too, because while the core of Kafka is really a stream system just like this, the real power of Kafka is the ecosystem around it. As Kafka has grown, they've added new tools to it: tools like MirrorMaker, and tools like Kafka Connect, which lets you connect a source and a sink. Say your source is a MySQL database and you want your sink to be a file somewhere, maybe blob storage or something else. You can set this up in Connect without writing any code; it's something you just configure and start. You can write code to make it do exactly the type of mapping you want, but it's very easy to get started with almost nothing, and it's pretty lightweight. It uses Kafka topics, which are like event hubs, behind the scenes as the transport mechanism, so you don't really see them, but they're there. What we're giving people with this is the ability to use things like MirrorMaker and Connect and all these other Kafka features without having to run a Kafka cluster themselves. You're using compute of your own, but you're getting the stream from us.

We are going to GA this next month. We are going to offer this for no additional cost, and it will work with all the Kafka defaults, so one-megabyte messages; even in standard we're going to turn that on. We are going to roll this out globally over the rest of the year. We were thinking about just turning it all on now, but we run about 2.2 or 2.3 trillion requests a day, and we generally run at five to six nines of success rate.

So we got a little bit nervous: should we turn on a whole bunch of new stuff all at once, everywhere in the world, or should we just kind of trickle it out? So the regions that have Kafka-enabled Event Hubs today will be GA in October, and then we'll add other regions over time. We're just going to turn it on and make sure there are no problems.

One question would be why to use this. Why use our Event Hubs with Kafka? There are a lot of challenges with Kafka. The first is that it's software. I can say, as someone who is part of a team running messaging software, that running messaging software is hard. There's not a lot of glory in it. Anyone ever run an email server, ever been responsible for an email server? Did anyone ever thank you for running the email server? No, they don't. But the moment it stops working, do they find you. There's not a lot of thanks going on there.

All those same aspects of running a messaging broker are something we're going to solve for you. There's no hardware or VM procurement, no network configuration, no installation or managing of OSes or security or load balancing. We do all of that stuff; it's really a PaaS experience. It's all the Event Hubs stuff I mentioned, so all the features, all the capabilities, are already there, and it just works. It's a pretty easy way to get started.

You can do cool things like use Capture with Kafka now, which is pretty powerful. You get auto-inflate. A lot of things that use Kafka, like Log4j or log4net, have Kafka appenders, so a lot of the software you'll start to use will be able to write to Kafka. But since you're not writing, that software is writing, you might not be aware of what type of traffic volume it's going to produce. Something like auto-inflate is really going to help you solve the same problems we talked about: it helps you protect against back pressure and defend against that sort of stuff.
When we look at the world of messaging, of streaming, right now: for a long time people have looked at Microsoft and said, do I use Event Hubs or do I use Kafka? The way we look at it is that you can use both. We actually want this to be open. We want you to be able to move between vendors. We think this will encourage us to run the best platform, which I'm confident we already do, but to continue running it. The ability for you to come to our platform with your Kafka workloads is powerful for you, and the ability for you to leave our platform is powerful for us; it's motivation for us. So that's actually good.

Real quick, I don't know if I necessarily have time for this, but I do want to show a little bit of it. Let's see if this lets me do this. Right. Yeah, cool.

So this is a Kafka producer. I actually wrote this code in Eclipse, but I didn't want to load up Eclipse right now. You can see that there are no Microsoft packages in this; the imports are just Kafka and Java imports. The package I'm declaring is just the sample itself, and this is a Maven application. I've got a producer, and when I'm creating it you can see what it looks like. I have this start-sending call, and you can see that, other than the setup pieces, which are these properties, sending looks a lot like it did in Event Hubs. It's producer.send, which is an async call, and I'm awaiting these tasks while they happen.
The cool thing here is that this is Java code that's already written for talking to Kafka. The things I had to add to make it work were these. One, I had to set the bootstrap server. That's a Kafka thing you always have to do: you tell it where your Kafka brokers are. For us it's one IP or DNS name all the time, because we have a virtual IP that all of our machines live behind. So it's not a list of IPs, which is what you would usually do in Kafka, an IP for each broker. Then you do have to authenticate with us, so you have to set your security transport to SSL (we require that), and you have to set a SASL mechanism.

The mechanism is SASL PLAIN; that's what we do. Then you set up a JAAS configuration, and this just tells us a username and password, basically. The password is your connection string to your event hub. You get it out of the portal and you put it in here, and it looks just like it would somewhere else: your exact connection string. From there you still get to do all of the other things, like retries, batch size, all the normal Kafka stuff, and it just works.

For Kafka consumers, they actually changed this a couple of years ago to make a high-level consumer API that looks a lot like ours as well. You'll see when I start this up: you set some properties again, these same ones. I've got a few properties and some comments I probably should have taken out. Then all I'm doing in here is subscribing to a topic, which is the Kafka name for an event hub. I'm just giving a topic name, and now I'm calling receive on it. This is in an infinite loop, so we're just going to keep receiving. This counts up by the millions as it runs.

It's counting by the millions because I am running this right now on this VM that I'm connected to. If I run ps on this, I can see that these processes, which are my sender and my receiver, have been running for 8 days, 21 hours, 57 minutes, and a few seconds. I can go look in htop and see what the actual usage looks like. It's at the bottom; it doesn't use a whole lot of resources per se. If I come down here I'll see them, and I can see my sender here. I've got my sender jar, and I can see how many resources it's using. This is normal Unix stuff for htop: I can see my resource utilization on send and receive. A cool thing with this is that I can then go and look in the portal that I was going to show you.
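The handful of client settings described for the Kafka producer and consumer (one bootstrap server, SSL, SASL PLAIN, and a JAAS entry whose password is the connection string) can be written down as a small Python helper that builds the Java client's property names. This is a sketch rather than the code shown on screen: the function names are hypothetical, and port 9093, the `SASL_SSL` protocol value, and the literal `$ConnectionString` username are taken from the public Event Hubs for Kafka documentation as I understand it, not from the talk, so check them against the current docs.

```python
def event_hubs_kafka_properties(namespace_fqdn, connection_string):
    """Build Kafka client properties for a Kafka-enabled Event Hubs namespace.

    namespace_fqdn: e.g. "mynamespace.servicebus.windows.net" (placeholder).
    connection_string: the namespace connection string copied from the portal.
    """
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        'username="$ConnectionString" '
        f'password="{connection_string}";'
    )
    return {
        # A single DNS name instead of a list of brokers.
        "bootstrap.servers": f"{namespace_fqdn}:9093",
        # TLS is required; SASL carries the credentials.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }


def to_properties_text(properties):
    """Render the settings as lines for a Java .properties file."""
    return "\n".join(f"{key}={value}" for key, value in properties.items())


# Placeholder values only; a real key must never be committed to source control.
example = event_hubs_kafka_properties(
    "mynamespace.servicebus.windows.net",
    "Endpoint=sb://mynamespace.servicebus.windows.net/;"
    "SharedAccessKeyName=demo;SharedAccessKey=PLACEHOLDER",
)
```

Everything else, such as retries and batch size, stays ordinary Kafka configuration and can be added to the same dictionary.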
In the portal I can see what my usage is like. Here is a smaller view of our portal, now that I've zoomed out, unfortunately. If I look at the last day (and this has been running for about eight days), I can see that my incoming and outgoing messages match almost exactly. This is actually two lines; I don't know if you can see the light blue behind it, but it's there. So that means I'm getting a very balanced throughput from this. I can also see whether I'm doing any capture bytes. I'm not; I'm not using Capture on this, because I'm writing about half a terabyte a day into this right now. And that is all running from this one VM, which is kind of cool, because it's just a Linux VM and I can see my resource utilization in it.

This is a little trivial as a workload: it's doing send and receive, but it's not really doing anything with the messages. So I would expect very good flow control with this, very good, stable back pressure, and you even see stable usage and stable resource utilization. So this is a pretty cool sample of this running. It's not even going to be useful to run this command, but I could actually do tail receive.out and see how many messages it's read. And that's how many millions of messages this thing has read.

In those eight days: 52,000 million, so I guess that's 52 billion. So yeah, this thing just runs. It's pretty solid, and this is with the Kafka APIs. I could have written this with our .NET Core library or our Java library, but I just used the Java API, the Kafka APIs.

So that is that piece. Now let's come back to going big with streaming. This is a fun one to talk about. How do we sell Event Hubs? This is kind of the funny part. That half a terabyte a day I'm writing costs me like $20, $25. It's about 100 milliseconds of latency on average. I haven't gotten a single error in those 52 billion, or it would have been written out to that log, and I'm counting them as well. So it's pretty solid. I'm using the standard tier for that. I've got auto-inflate on, so it just grew to what it needs, which is right now about 8 megabytes per second. That doesn't really sound like a lot at first, 8 to 10 megabytes, until you realize it's really writing half a terabyte a day. So that's pretty solid.

You can see the way we charge for this is pay-per-use. You are buying a throughput unit, but you're also paying for ingress events, and if you fill that pipe all the way up, sending that thousand messages per second, the ingress events will actually cost you more. It sounds cheap at 2.8 cents per million, but at a thousand a second you're going through 3.6 million an hour, so it can add up.

When you want really big workloads, the thing we sell is dedicated, and this is a pay-per-resource model. This is something I'm pretty big about pushing in Azure (some services do it, but not all): I don't believe there's a one-size-fits-all pricing model. I talked to some of you about this the other day, actually. It's really good to start with pay-as-you-go, or pay-per-use, but at really high scale you're going to get to a place where pay-per-use can get pretty expensive.
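The ingress arithmetic quoted in the talk (2.8 cents per million events, a thousand events per second, 3.6 million events an hour) is easy to reproduce. The Python sketch below uses only those figures; the function names are made up for the example, the price is a 2018 number from the talk rather than a current one, and the separate hourly charge for the throughput unit itself is not modeled because the talk does not state it.

```python
# Price per million ingress events in dollars, as quoted in the talk.
INGRESS_PRICE_PER_MILLION = 0.028


def ingress_events(events_per_second, hours):
    """Number of ingress events produced at a steady rate over some hours."""
    return events_per_second * 3600 * hours


def ingress_cost(events_per_second, hours, price_per_million=INGRESS_PRICE_PER_MILLION):
    """Dollar cost of the ingress events only (throughput unit charge excluded)."""
    return ingress_events(events_per_second, hours) / 1_000_000 * price_per_million


events_per_hour = ingress_events(1000, 1)   # 3.6 million, matching the talk
cost_per_hour = ingress_cost(1000, 1)       # roughly ten cents
cost_per_30_days = ingress_cost(1000, 24 * 30)
```

At a sustained thousand events per second per throughput unit the event charge works out to roughly 10 cents an hour, or on the order of 70 dollars over 30 days, which illustrates how pay-per-use adds up once the pipe is kept full.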

Generally that's at really high scale, and a lot of people will never get there. The place where we think that starts is at about 40, 50 throughput units, so 40 to 50 megabytes per second is where you're going to hit that point, depending on which features you're using. The model we have for that is dedicated Event Hubs. These are available in any region you want. Right now you have to call us or email us to set it up; we are working on making that available through the portal soon, very soon. It's still the same service, so it is Event Hubs. It's just that, unlike standard and basic, where it's multi-tenant and there are a lot of people on that cluster, you're the only tenant on that cluster. It's a cluster just for you. You still pay by the hour; it's something like six dollars an hour for the capacity unit, which is the thing we sell. It's a set of servers, and you can stack capacity units together to make a bigger cluster. You don't have to have any downtime or anything to do that.

Here's a table that shows some examples of what we did recently with one of these clusters that had four capacity units, which, yes, is a pretty big cluster, but you can see the kind of workloads it did. If you're sending one-kilobyte messages and batching them 100 at a time, we were able to sustain, for multiple days, 400 megabytes per second of ingress. And we're actually reading this twice, because what we've seen is that a lot of people read their stream twice. If you use Capture you might be able to get away with less, but usually you're going to have a reader on that stream for each type of processing you're doing, unless you make your readers really complex, which is kind of a no-no in a lot of situations.

Then you can see that if you change that workload to 10-kilobyte messages, with the same total size of what you're sending on the wire, just bigger messages this time, you actually get higher throughput. You're getting about 160 TUs out of each of these four capacity units; in the previous case you were getting a hundred. And if you're sending larger messages still, like 32 kilobytes, this is where we get into a real sweet spot, where we're able to sustain a gigabyte per second on something that's costing you, I mean, like 24 bucks an hour.
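The dedicated-tier numbers above can be cross-checked with simple arithmetic. The Python sketch below uses only figures quoted in the talk (about six dollars per capacity unit per hour, four capacity units, 400 megabytes per second and one gigabyte per second of sustained ingress); the function names are hypothetical, and 1 GB is treated as 1000 MB for simplicity.

```python
# Dollars per capacity unit per hour ("like six dollars an hour" in the talk).
CAPACITY_UNIT_PRICE_PER_HOUR = 6.0


def cluster_cost_per_hour(capacity_units, unit_price=CAPACITY_UNIT_PRICE_PER_HOUR):
    """Hourly cost of a dedicated cluster made of stacked capacity units."""
    return capacity_units * unit_price


def ingress_per_capacity_unit(total_mb_per_second, capacity_units):
    """Sustained ingress each capacity unit carries, in MB/s."""
    return total_mb_per_second / capacity_units


def cost_per_terabyte(total_mb_per_second, capacity_units):
    """Dollars per terabyte ingested at a sustained rate (decimal units)."""
    terabytes_per_hour = total_mb_per_second * 3600 / 1_000_000
    return cluster_cost_per_hour(capacity_units) / terabytes_per_hour


four_cu_cost = cluster_cost_per_hour(4)                # the "24 bucks an hour" figure
small_messages = ingress_per_capacity_unit(400, 4)     # 1 KB messages: 100 MB/s per unit
large_message_cost = cost_per_terabyte(1000, 4)        # 32 KB messages at 1 GB/s
```

At one gigabyte per second the four-unit cluster ingests 3.6 TB an hour, so the flat hourly price comes to under seven dollars per terabyte, which is the sense in which pay-per-resource wins at very high scale.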

But it's a gigabyte per second. That's a lot of data. I can probably count on my two hands the number of customers we have that need that. I mean, I don't think Facebook or Google or Amazon are going to use this service, so I guess I don't need to count them. But there are not a lot of people that need more than a gigabyte, though we can do it if you need. We cou

2018-10-05 23:09